Today at Spark + AI Summit, we announced Koalas, a new open source project that augments PySpark’s DataFrame API to make it compatible with pandas.

Python data science has exploded over the past few years and pandas has emerged as the lynchpin of the ecosystem. When data scientists get their hands on a data set, they use pandas to explore. It is the ultimate tool for data wrangling and analysis. In fact, pandas’ read_csv is often the very first command students run in their data science journey.

The problem? pandas does not scale well to big data. It was designed for small data sets that a single machine could handle. On the other hand, Apache Spark has emerged as the de facto standard for big data workloads. Today many data scientists use pandas for coursework, pet projects, and small data tasks, but when they work with very large data sets, they either have to migrate to PySpark to leverage Spark or downsample their data so that they can use pandas.

Now with Koalas, data scientists can make the transition from a single machine to a distributed environment without needing to learn a new framework. As you can see below, you can scale your pandas code on Spark with Koalas just by replacing one package with the other.

pandas:

import pandas as pd

df = pd.DataFrame({'x': [1, 2], 'y': [3, 4], 'z': [5, 6]})

# Rename columns

df.columns = ['x', 'y', 'z1']

# Do some operations in place

df['x2'] = df.x * df.x

Koalas:

import databricks.koalas as ks

df = ks.DataFrame({'x': [1, 2], 'y': [3, 4], 'z': [5, 6]})

# Rename columns

df.columns = ['x', 'y', 'z1']

# Do some operations in place

df['x2'] = df.x * df.x

pandas as the standard vocabulary for Python data science

As Python has emerged as the primary language for data science, the community has developed a vocabulary based on the most important libraries, including pandas, matplotlib and numpy. When data scientists are able to use these libraries, they can fully express their thoughts and follow an idea to its conclusion. They can conceptualize something and execute it instantly.

But when they have to work with libraries outside of their vocabulary, they stumble, they check Stack Overflow every few minutes, and they have to interrupt their workflow just to get their code to work. Even though PySpark is simple to use and similar in many ways to pandas, it is still a different vocabulary they have to learn.

At Databricks, we believe that enabling pandas on Spark will significantly increase productivity for data scientists and data-driven organizations for several reasons:

- Koalas removes the need to decide whether to use pandas or PySpark for a given data set

- For work that was initially written in pandas for a single machine, Koalas allows data scientists to scale up their code on Spark by simply switching out pandas for Koalas

- Koalas unlocks big data for more data scientists in an organization since they no longer need to learn PySpark to leverage Spark
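One way to picture the "switching out pandas for Koalas" point is to write the DataFrame logic once and pass in whichever library should run it. The sketch below is only an illustration: the helper name `square_x` is hypothetical, and only the pandas path is actually executed here. The Koalas call is shown as a comment and assumes Koalas and Spark are installed.

```python
import pandas as pd


def square_x(lib):
    """Build the small example frame with whichever DataFrame library is passed in."""
    df = lib.DataFrame({'x': [1, 2], 'y': [3, 4], 'z': [5, 6]})
    # Rename columns
    df.columns = ['x', 'y', 'z1']
    # Add a derived column in place
    df['x2'] = df.x * df.x
    return df


# Run with pandas on a single machine
result = square_x(pd)
print(result)

# Assumption: with Koalas installed, the same function would be called as
#   import databricks.koalas as ks
#   square_x(ks)
```

The function body never names pandas directly, which is the property that lets the library be swapped without touching the logic.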

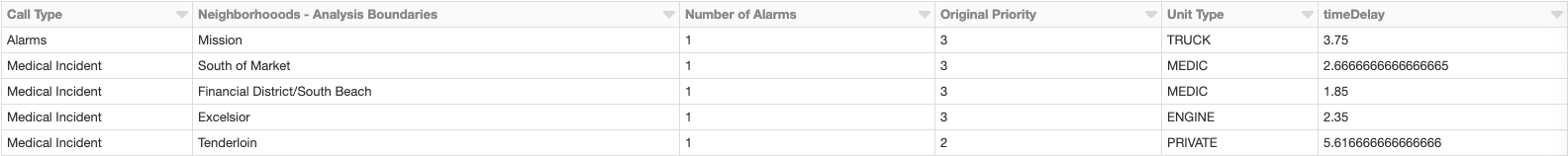

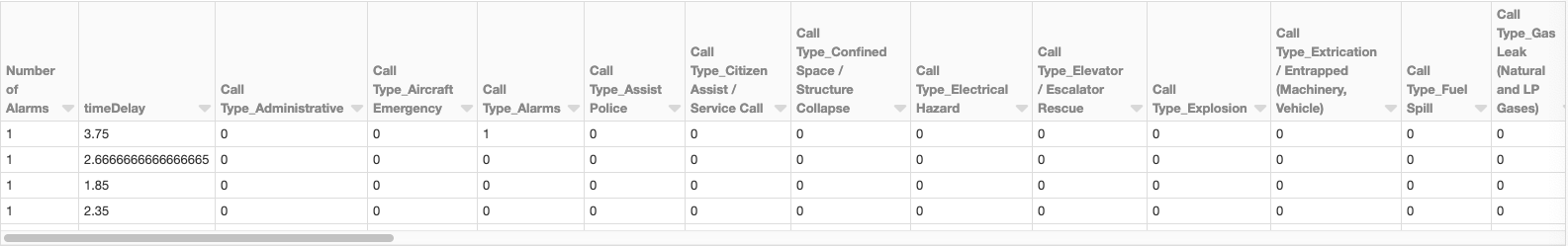

Below, we show two examples of simple and powerful pandas methods that are straightforward to run on Spark with Koalas.

Feature engineering with categorical variables

Data scientists often encounter categorical variables when they build ML models. A popular technique is to encode categorical variables as dummy variables. In the example below, there are several categorical variables including call type, neighborhood and unit type. pandas’ get_dummies function does exactly this. Below we show how to do this with pandas:

import pandas as pd

data = pd.read_csv("fire_department_calls_sf_clean.csv", header=0)

display(pd.get_dummies(data))

Now thanks to Koalas, we can do this on Spark with just a few tweaks:

import databricks.koalas as ks

data = ks.read_csv("fire_department_calls_sf_clean.csv", header=0)

display(ks.get_dummies(data))

And that’s it!
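To make the dummy-variable encoding concrete without the CSV file, here is a small pandas-only sketch. The toy DataFrame, its column names, and its values are made up for illustration and are not taken from the fire department data set. The cast to int is only there so the output looks the same across pandas versions.

```python
import pandas as pd

# Hypothetical toy data; column names and values are illustrative only
calls = pd.DataFrame({
    "call_type": ["Medical", "Fire", "Medical"],
    "unit_type": ["ENGINE", "TRUCK", "ENGINE"],
    "num_alarms": [1, 2, 1],
})

# Each categorical column becomes one indicator column per distinct value;
# the numeric column is passed through unchanged
dummies = pd.get_dummies(calls)

# Cast to int so the indicators print as 0/1 regardless of pandas version
encoded = dummies.astype(int)
print(encoded)
```

Each row ends up with exactly one 1 among the call_type columns and one among the unit_type columns, which is the form most ML libraries expect for categorical inputs.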

Arithmetic with timestamps

Data scientists work with timestamps all the time, but handling them correctly can get really messy. pandas offers an elegant solution. Let’s say you have a DataFrame of dates:

import pandas as pd

import numpy as np

date1 = pd.Series(pd.date_range('2012-1-1 12:00:00', periods=7, freq='M'))

date2 = pd.Series(pd.date_range('2013-3-11 21:45:00', periods=7, freq='W'))

df = pd.DataFrame(dict(Start_date = date1, End_date = date2))

print(df)

End_date Start_date

0 2013-03-17 21:45:00 2012-01-31 12:00:00

1 2013-03-24 21:45:00 2012-02-29 12:00:00

2 2013-03-31 21:45:00 2012-03-31 12:00:00

3 2013-04-07 21:45:00 2012-04-30 12:00:00

4 2013-04-14 21:45:00 2012-05-31 12:00:00

5 2013-04-21 21:45:00 2012-06-30 12:00:00

6 2013-04-28 21:45:00 2012-07-31 12:00:00

To subtract the start dates from the end dates with pandas, you just run:

df['diff_seconds'] = df['End_date'] - df['Start_date']

df['diff_seconds'] = df['diff_seconds']/np.timedelta64(1,'s')

print(df)

End_date Start_date diff_seconds

0 2013-03-17 21:45:00 2012-01-31 12:00:00 35545500.0

1 2013-03-24 21:45:00 2012-02-29 12:00:00 33644700.0

2 2013-03-31 21:45:00 2012-03-31 12:00:00 31571100.0

3 2013-04-07 21:45:00 2012-04-30 12:00:00 29583900.0

4 2013-04-14 21:45:00 2012-05-31 12:00:00 27510300.0

5 2013-04-21 21:45:00 2012-06-30 12:00:00 25523100.0

6 2013-04-28 21:45:00 2012-07-31 12:00:00 23449500.0

Now to do the same thing on Spark, all you need to do is replace pandas with Koalas:

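import databricks.koalas as ks

import numpy as np

# pandas_df is the pandas DataFrame of start and end dates built above (named df there)

df = ks.from_pandas(pandas_df)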

df['diff_seconds'] = df['End_date'] - df['Start_date']

df['diff_seconds'] = df['diff_seconds'] / np.timedelta64(1,'s')

print(df)

Once again, it’s that simple.
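The arithmetic in the pandas example can be cross-checked with a short pandas-only sketch. It rebuilds just the first two rows of the table by hand and uses `Series.dt.total_seconds()` as an alternative to dividing by `np.timedelta64(1, 's')`. This is a different spelling of the same calculation, not the method used above, and we have not checked here whether Koalas supports it.

```python
import pandas as pd

# First two rows of the example table, entered by hand
df = pd.DataFrame({
    "Start_date": pd.to_datetime(["2012-01-31 12:00:00", "2012-02-29 12:00:00"]),
    "End_date": pd.to_datetime(["2013-03-17 21:45:00", "2013-03-24 21:45:00"]),
})

# Subtracting two datetime columns yields a timedelta column
delta = df["End_date"] - df["Start_date"]

# Convert each timedelta to a float number of seconds
df["diff_seconds"] = delta.dt.total_seconds()
print(df)
```

For the first row, the gap is 411 days plus 9 hours 45 minutes, which is 411 * 86400 + 35100 = 35545500 seconds, matching the value in the printed table.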

Next steps and getting started with Koalas

You can watch the official announcement of Koalas by Reynold Xin at Spark + AI Summit.

We created Koalas because we meet a lot of data scientists who are reluctant to work with large data. We believe that Koalas will empower them by making it really easy to scale their work on Spark.

So far, we have implemented common DataFrame manipulation methods, as well as powerful indexing techniques in pandas. Here are some upcoming items in our roadmap, mostly focusing on improving coverage:

- String manipulation for working with text data

- Date/time manipulation for time series data

This initiative is in its early stages but is quickly evolving. If you are interested in learning more about Koalas or getting started, check out the project’s GitHub repo. We welcome your feedback and contributions!