Today we are excited to introduce Databricks Workflows, the fully managed orchestration service that is deeply integrated with the Databricks Lakehouse Platform. Workflows enables data engineers, data scientists, and analysts to build reliable data, analytics, and ML workflows on any cloud without needing to manage complex infrastructure. Finally, every user is empowered to deliver timely, accurate, and actionable insights for their business initiatives.

The lakehouse makes it much easier for businesses to undertake ambitious data and ML initiatives. However, orchestrating and managing production workflows is a bottleneck for many organizations, requiring complex external tools (e.g. Apache Airflow) or cloud-specific solutions (e.g. Azure Data Factory, AWS Step Functions, GCP Workflows). These tools separate task orchestration from the underlying data processing platform, which limits observability and increases overall complexity for end users.

Databricks Workflows is the fully managed orchestration service for all your data, analytics, and AI needs. Tight integration with the underlying lakehouse platform lets you create and run reliable production workloads on any cloud, while providing deep, centralized monitoring that stays simple for end users.

Orchestrate anything anywhere

Workflows allows users to build ETL pipelines that are automatically managed, including ingestion and lineage, using Delta Live Tables. You can also orchestrate any combination of notebooks, SQL, Spark, ML models, and dbt as a Jobs workflow, including calls to other systems. Workflows is available across GCP, AWS, and Azure, giving you full flexibility and cloud independence.

Reliable and fully managed

Workflows is built from the ground up to be highly reliable. Every workflow, and every task within a workflow, is isolated, so different teams can collaborate without worrying about affecting each other's work. As a cloud-native orchestrator, Workflows manages your resources so you don't have to. You can rely on Workflows to power your data at any scale, joining the thousands of customers who already launch millions of machines with Workflows every day across multiple clouds.

Simple workflow authoring for every user

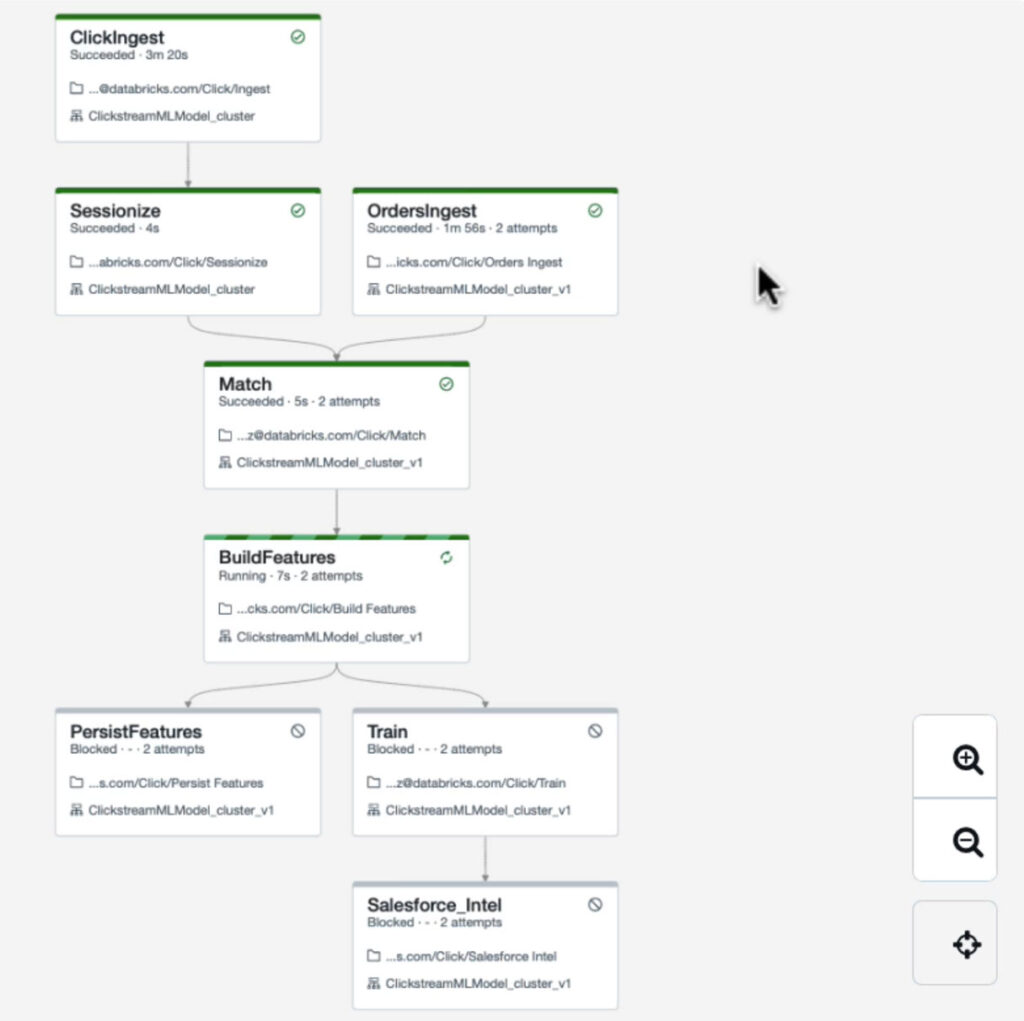

When we built Databricks Workflows, we wanted to make it simple for any user, from data engineers to analysts, to orchestrate production data workflows without needing to learn complex tools or rely on an IT team. Consider the following example, which trains a recommender ML model. Here, Workflows orchestrates and runs seven separate tasks that ingest order data with Auto Loader, filter the data with standard Python code, and use notebooks with MLflow to manage model training and versioning. All of this can be built, managed, and monitored by data teams using the Workflows UI. Advanced users can build workflows using an expressive API that includes support for CI/CD.
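To make the idea of a multi-task workflow concrete, here is a minimal sketch of how a seven-task pipeline like the one above could be described as data. The post does not list the actual tasks, so every task key and notebook path below is hypothetical. The sketch assumes the multi-task job shape used by the Databricks Jobs API 2.1 (`tasks`, `task_key`, `depends_on`, `notebook_task.notebook_path`) and omits compute settings, which a real job definition would also need. The `execution_order` helper checks that the dependencies form a DAG and returns one valid run order using Kahn's algorithm; it illustrates the dependency model, not how Workflows schedules tasks internally.

```python
import json
from collections import deque

# Hypothetical seven-task recommender pipeline: (task_key, upstream task_keys, notebook path).
# Names and paths are illustrative only; compute settings are deliberately omitted.
RECOMMENDER_TASKS = [
    ("ingest_orders", [], "/Workflows/recommender/ingest_orders"),
    ("filter_orders", ["ingest_orders"], "/Workflows/recommender/filter_orders"),
    ("build_features", ["filter_orders"], "/Workflows/recommender/build_features"),
    ("train_recommender", ["build_features"], "/Workflows/recommender/train_recommender"),
    ("train_baseline", ["build_features"], "/Workflows/recommender/train_baseline"),
    ("evaluate_models", ["train_recommender", "train_baseline"], "/Workflows/recommender/evaluate_models"),
    ("register_model", ["evaluate_models"], "/Workflows/recommender/register_model"),
]


def build_job_spec(name, tasks):
    """Return a Jobs API 2.1-style job definition with one notebook task per entry."""
    return {
        "name": name,
        "tasks": [
            {
                "task_key": key,
                "depends_on": [{"task_key": upstream} for upstream in deps],
                "notebook_task": {"notebook_path": path},
            }
            for key, deps, path in tasks
        ],
    }


def execution_order(spec):
    """Topologically sort the tasks (Kahn's algorithm); raise if the graph is invalid."""
    upstream = {
        t["task_key"]: [d["task_key"] for d in t.get("depends_on", [])]
        for t in spec["tasks"]
    }
    # Map each task to the tasks that depend on it, rejecting unknown dependencies.
    children = {key: [] for key in upstream}
    for key, deps in upstream.items():
        for dep in deps:
            if dep not in upstream:
                raise ValueError(f"{key} depends on unknown task {dep}")
            children[dep].append(key)

    # Start with tasks that have no upstream dependencies, then release children
    # once all of their upstream tasks have been placed in the order.
    remaining = {key: len(deps) for key, deps in upstream.items()}
    ready = deque(key for key, count in remaining.items() if count == 0)
    order = []
    while ready:
        key = ready.popleft()
        order.append(key)
        for child in children[key]:
            remaining[child] -= 1
            if remaining[child] == 0:
                ready.append(child)

    # Any task never released is part of a cycle.
    if len(order) != len(upstream):
        raise ValueError("task dependencies contain a cycle")
    return order


if __name__ == "__main__":
    spec = build_job_spec("recommender-training", RECOMMENDER_TASKS)
    print(json.dumps(spec, indent=2))
    print(execution_order(spec))
```

Keeping the definition as plain data is what makes the CI/CD story practical: the same structure can be reviewed, diffed, and validated in a pipeline before it is submitted.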

"Databricks Workflows allows our analysts to easily create, run, monitor, and repair data pipelines without managing any infrastructure. This enables them to have full autonomy in designing and improving ETL processes that produce must-have insights for our clients. We are excited to move our Airflow pipelines over to Databricks Workflows." Anup Segu, Senior Software Engineer, YipitData

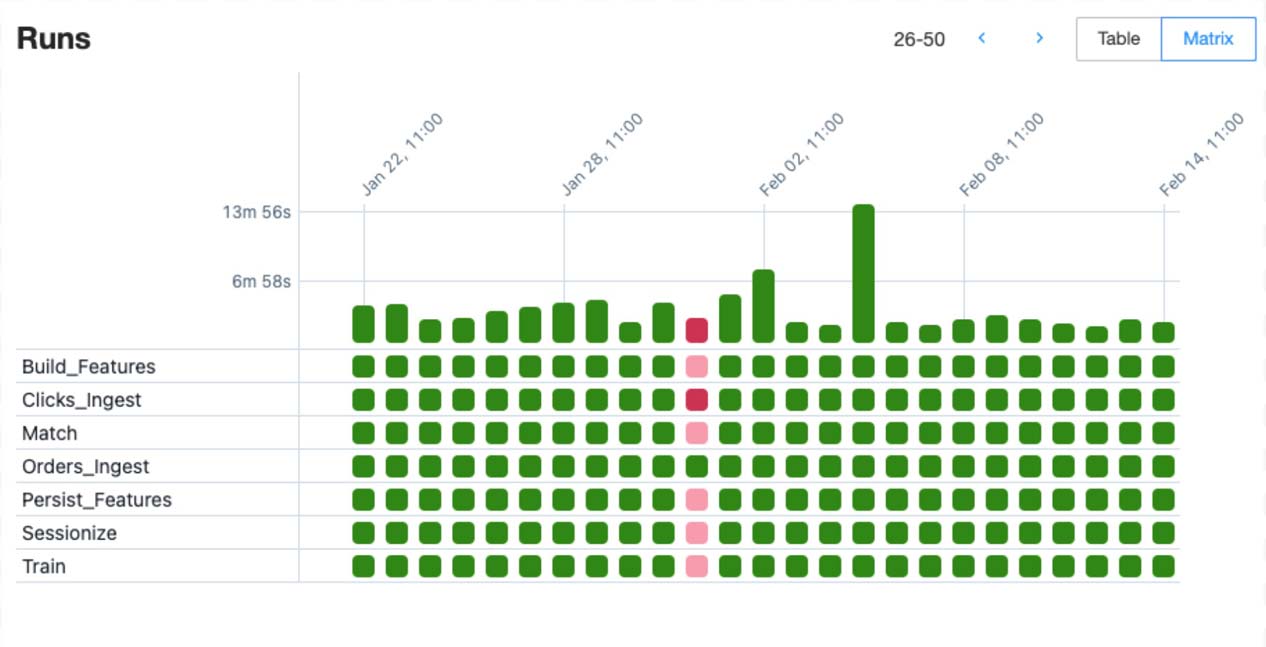

Workflow monitoring integrated within the Lakehouse

As your organization creates data and ML workflows, it becomes imperative to manage and monitor them without needing to deploy additional infrastructure. Workflows integrates with existing resource access controls in Databricks, enabling you to easily manage access across departments and teams. Additionally, Databricks Workflows includes native monitoring capabilities so that owners and managers can quickly identify and diagnose problems. For example, the newly-launched matrix view lets users triage unhealthy workflow runs at a glance:

Beyond this built-in monitoring of individual workflows, workflow metrics can also be integrated with existing monitoring solutions such as Azure Monitor, AWS CloudWatch, and Datadog (currently in preview).

"Databricks Workflows freed up our time on dealing with the logistics of running routine workflows. With newly implemented repair/rerun capabilities, it helped to cut down our workflow cycle time by continuing the job runs after code fixes without having to rerun the other completed steps before the fix. Combined with ML models, data store and SQL analytics dashboard etc, it provided us with a complete suite of tools for us to manage our big data pipeline." Yanyan Wu, VP, Head of Unconventionals Data, Wood Mackenzie – A Verisk Business
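Repair and rerun is what lets a fix resume a run instead of restarting it. The sketch below illustrates the task-selection idea under a simple assumption: after a code fix, a repair reruns every failed task plus everything downstream of it, while tasks that already succeeded and do not depend on a failure keep their results. The task graph and the outcome strings (`"SUCCESS"`, `"FAILED"`, `"SKIPPED"`) are hypothetical, and this is not Databricks' implementation; the Jobs API reference documents the actual repair request.

```python
from collections import deque

# Hypothetical task graph: task_key -> upstream task_keys (same shape as a multi-task job).
DEPENDS_ON = {
    "ingest_orders": [],
    "filter_orders": ["ingest_orders"],
    "build_features": ["filter_orders"],
    "train_recommender": ["build_features"],
    "train_baseline": ["build_features"],
    "evaluate_models": ["train_recommender", "train_baseline"],
    "register_model": ["evaluate_models"],
}


def tasks_to_rerun(depends_on, results):
    """Return the failed tasks plus every task downstream of them, in declared order.

    `results` maps task_key -> outcome; the strings "SUCCESS", "FAILED", and
    "SKIPPED" are illustrative, not the exact values reported by Databricks.
    """
    # Invert the graph so we can walk from a failed task to its dependents.
    children = {key: [] for key in depends_on}
    for key, upstream in depends_on.items():
        for dep in upstream:
            children[dep].append(key)

    # Breadth-first walk from every failed task through all downstream tasks.
    to_rerun = set()
    frontier = deque(key for key, outcome in results.items() if outcome == "FAILED")
    while frontier:
        key = frontier.popleft()
        if key in to_rerun:
            continue
        to_rerun.add(key)
        frontier.extend(children[key])  # dependents relied on the failed task's output

    return [key for key in depends_on if key in to_rerun]


if __name__ == "__main__":
    results = {
        "ingest_orders": "SUCCESS",
        "filter_orders": "SUCCESS",
        "build_features": "SUCCESS",
        "train_recommender": "FAILED",
        "train_baseline": "SUCCESS",
        "evaluate_models": "SKIPPED",
        "register_model": "SKIPPED",
    }
    print(tasks_to_rerun(DEPENDS_ON, results))
    # ['train_recommender', 'evaluate_models', 'register_model']
```

In this example `train_baseline` is not rerun even though it shares an upstream task with the failed step, because nothing it depends on failed; the three ingestion and feature steps are likewise reused as-is.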

Get started with Databricks Workflows

To experience the productivity boost that a fully managed, integrated lakehouse orchestrator offers, we invite you to create your first Databricks Workflow today.

In the Databricks workspace, select Workflows, click Create, and follow the prompts in the UI to add your first task, then any subsequent tasks and their dependencies. To learn more about Databricks Workflows, visit our web page and read the documentation.
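Advanced users can create the same kind of workflow from code. The following is a minimal sketch, assuming the Jobs API 2.1 `POST /api/2.1/jobs/create` endpoint, a personal access token, and the `DATABRICKS_HOST` and `DATABRICKS_TOKEN` environment variable names that the Databricks CLI also reads. The job name, task key, and notebook path are hypothetical, and compute settings are omitted. Building the request is kept separate from sending it, so the payload can be inspected or checked in CI before anything reaches the workspace.

```python
import json
import os
import urllib.request


def build_create_job_request(host, token, spec):
    """Build (without sending) a POST request to the Jobs API create endpoint."""
    return urllib.request.Request(
        url=host.rstrip("/") + "/api/2.1/jobs/create",
        data=json.dumps(spec).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def create_job(spec):
    """Send the request using credentials from the environment; return the new job_id."""
    request = build_create_job_request(
        os.environ["DATABRICKS_HOST"], os.environ["DATABRICKS_TOKEN"], spec
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["job_id"]


# Hypothetical single-task job; compute settings omitted for brevity.
EXAMPLE_SPEC = {
    "name": "my-first-workflow",
    "tasks": [
        {
            "task_key": "ingest",
            "notebook_task": {"notebook_path": "/Workflows/demo/ingest"},
        }
    ],
}

if __name__ == "__main__":
    # Placeholder workspace URL and token: this only builds and prints the request.
    req = build_create_job_request(
        "https://example.cloud.databricks.com", "<token>", EXAMPLE_SPEC
    )
    print(req.get_method(), req.full_url)
```

Calling `create_job(EXAMPLE_SPEC)` with real credentials in the environment would submit the job; subsequent tasks are added by extending `tasks`, with `depends_on` entries expressing the dependencies you would otherwise draw in the UI.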

Watch the demo below to see how easy Databricks Workflows is to use:

In the coming months, you can look forward to features that make it easier to author and monitor workflows and much more. In the meantime, we would love to hear from you about your experience and other features you would like to see.