Genome Sequencing in a Nutshell

This is a guest post by Deborah Siegel of the Northwest Genome Center and the University of Washington, with Denny Lee of Databricks, on their collaboration on genome variant analysis with ADAM and Spark.

This is part 1 of the three-part series Genome Variant Analysis using K-Means, ADAM, and Apache Spark:

- Genome Sequencing in a Nutshell

- Parallelizing Genome Variant Analysis

- Predicting Geographic Population using Genome Variants and K-Means

Introduction

Over the last few years, we have seen a rapid reduction in the cost and time of genome sequencing. The potential benefits of understanding the variations in genome sequences range from identifying people who are predisposed to common diseases, to solving rare diseases, to enabling clinicians to personalize prescriptions and dosage for the individual.

In this three-part blog, we will provide a primer on genome sequencing and its potential. We will focus on genome variant analysis, that is, the study of differences between genome sequences, and how it can be accelerated with Apache Spark and ADAM (a scalable API and CLI for genome processing) on Databricks Community Edition. Finally, we will run a k-means clustering algorithm on genomic variant data and build a model that predicts an individual’s geographic population of origin based on those variants.

This first post will provide a primer on genome sequencing. You can also skip ahead to the second post Parallelizing Genome Variant Analysis focusing on parallel bioinformatic analysis or the third post on Predicting Geographic Population using Genome Variants and K-Means.

Genome Sequencing

A very simple language analogy

Imagine one long string composed of 3 billion characters and containing roughly 25,000 words interspersed with other characters. Some of the words even make sentences. Changing, adding, or deleting characters or groups of characters could change the structure or meaning of the words and sentences.

Each long string has very roughly 10-30 million places where such differences may occur. And this makes things interesting. Of course, everything is more complicated. But this has shown itself to be a useful abstraction of genome data.

In the genome, we have been building knowledge about where the words (genes) are located in the string of characters (bases), and we have been discovering the places where they differ (the variants). But we don’t know everything. We are still learning about what the effects of the variants are, how the genes are related to each other, and how they may be expressed in different forms and in different quantities under certain circumstances.
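To make the analogy concrete, here is a minimal sketch of the three kinds of change described above (changing, adding, or deleting characters) applied to a short string. The reference string and the helper names `substitute`, `insert`, and `delete` are made up for illustration; real genomes and real variant representations are far richer than this.

```python
# Toy illustration of the string analogy. The "reference" below is an
# invented 10-character string, not real genome data.
reference = "ACGTACGTAC"


def substitute(seq, pos, base):
    """Replace the single character at 0-based position pos with base."""
    return seq[:pos] + base + seq[pos + 1:]


def insert(seq, pos, bases):
    """Insert the string bases immediately before 0-based position pos."""
    return seq[:pos] + bases + seq[pos:]


def delete(seq, pos, length):
    """Remove length characters starting at 0-based position pos."""
    return seq[:pos] + seq[pos + length:]


snv = substitute(reference, 3, "A")  # one character changed
ins = insert(reference, 5, "GG")     # two characters added
dele = delete(reference, 2, 3)       # three characters removed

print(reference)  # ACGTACGTAC
print(snv)        # ACGAACGTAC
print(ins)        # ACGTAGGCGTAC
print(dele)       # ACCGTAC
```

Even in this toy form, insertions and deletions shift the positions of everything after them, which is one reason aligning sequences to a common reference matters in the steps that follow.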

Genome Sequencing in a Nutshell

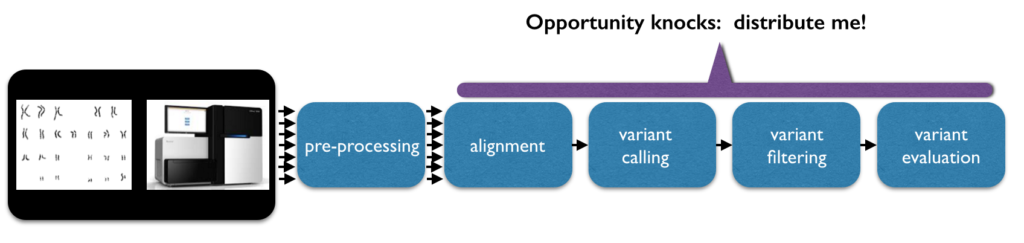

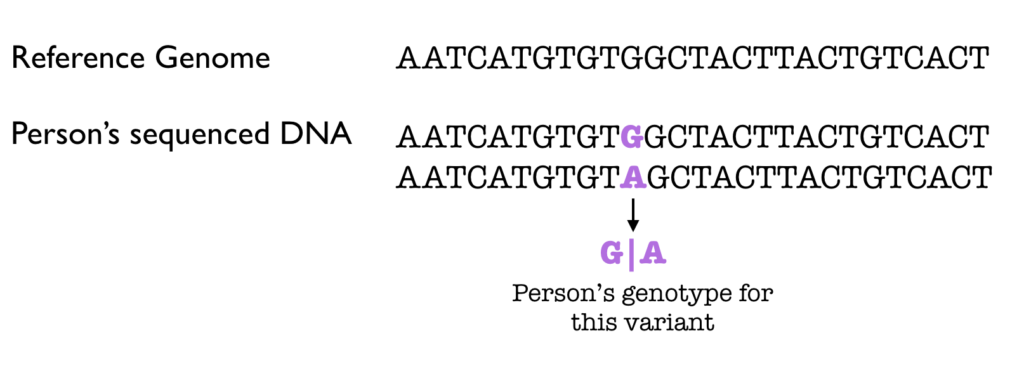

Genome sequencing involves using chemistry and a recording technique to read the characters which code the genome (A, G, C, T) in order (in sequence).

The data is initially read in the form of short strings. For a 30x coverage of a person’s genome (30x is a common goal), there may be approximately 600 million short strings of 150 characters each. During data preprocessing, the strings will be mapped/aligned, typically to a reference sequence. There are many different approaches to alignment. Ultimately, this gives every base a defined position. Variant analysis of aligned sequence data finds code differences by comparing the sequence to the reference or to other aligned sequences and assigns genotypes to a person’s variants.
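The numbers above can be checked with a little arithmetic, and the idea of comparing an aligned read to the reference can be sketched in a few lines. This is only an illustration: the 3 billion base genome length comes from the analogy earlier in this post, the function names `mean_coverage` and `mismatches` are hypothetical, and the toy reference and read are invented. Real aligners and variant callers handle gaps, base qualities, and many overlapping reads.

```python
GENOME_LENGTH = 3_000_000_000  # roughly 3 billion bases
READ_LENGTH = 150              # characters per short string (read)
NUM_READS = 600_000_000        # approximate number of reads


def mean_coverage(num_reads, read_length, genome_length):
    """Average number of reads covering each base of the genome."""
    return num_reads * read_length / genome_length


coverage = mean_coverage(NUM_READS, READ_LENGTH, GENOME_LENGTH)
print(coverage)  # 30.0


def mismatches(reference, read, start):
    """List (position, reference_base, read_base) where an aligned read
    differs from the reference. Assumes a gap-free alignment starting at
    0-based position start."""
    return [
        (start + i, reference[start + i], base)
        for i, base in enumerate(read)
        if reference[start + i] != base
    ]


toy_reference = "ACGTACGTAC"
toy_read = "TACCT"  # assumed to have been aligned at position 3
print(mismatches(toy_reference, toy_read, 3))  # [(6, 'G', 'C')]
```

With 30 reads covering a typical position, a difference seen in most of those reads is much more convincing than one seen in a single read, which is why coverage is a common quality target.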

Some of the detected variants will be based on noise, and can be filtered with rigid thresholds on parameters such as coverage, quality, and domain-specific biases. Rather than hard filtering, some analysts threshold the variants by fitting a Gaussian mixture model. Even further downstream, analysts quantify and explore the data, try to identify highly significant variants (a small number given the input size), and try to predict what their functional effect might be.
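As a rough sketch of what hard filtering looks like, the snippet below keeps only variant calls that clear fixed thresholds on coverage (depth) and quality. The `VariantCall` record, its field names, the example calls, and the threshold values are all assumptions chosen for illustration; they are not taken from any particular variant caller or recommended practice. The Gaussian mixture model alternative is not shown here.

```python
from dataclasses import dataclass


@dataclass
class VariantCall:
    """Hypothetical, simplified record for one detected variant."""
    chrom: str
    pos: int
    depth: int      # number of reads covering the site
    quality: float  # caller-reported quality score


def passes_hard_filter(call, min_depth=10, min_quality=30.0):
    """Keep a call only if it meets both fixed thresholds.
    The default thresholds are illustrative, not recommendations."""
    return call.depth >= min_depth and call.quality >= min_quality


# Invented example calls.
calls = [
    VariantCall("chr1", 10_177, depth=32, quality=58.0),  # passes both
    VariantCall("chr1", 10_352, depth=4, quality=45.0),   # too little coverage
    VariantCall("chr2", 20_101, depth=28, quality=12.5),  # quality too low
]

kept = [call for call in calls if passes_hard_filter(call)]
print([(call.chrom, call.pos) for call in kept])  # [('chr1', 10177)]
```

The rigidity is visible here: a call with depth 9 is dropped no matter how good its other properties are, which is part of the motivation for model-based approaches.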

Why sequence?

Genome sequence (and exome sequence, which is a subset) is interesting data from a data science perspective. We can use our knowledge of sequences to gain hints at how and why the code has evolved over long periods of time. Knowledge from genome sequencing studies is becoming more integrated into medicine. Genome sequencing is now used for non-invasive prenatal diagnostics. Genome sequencing will soon be used in clinical screening and diagnostic tests, with much ongoing work to expand genomic medicine.

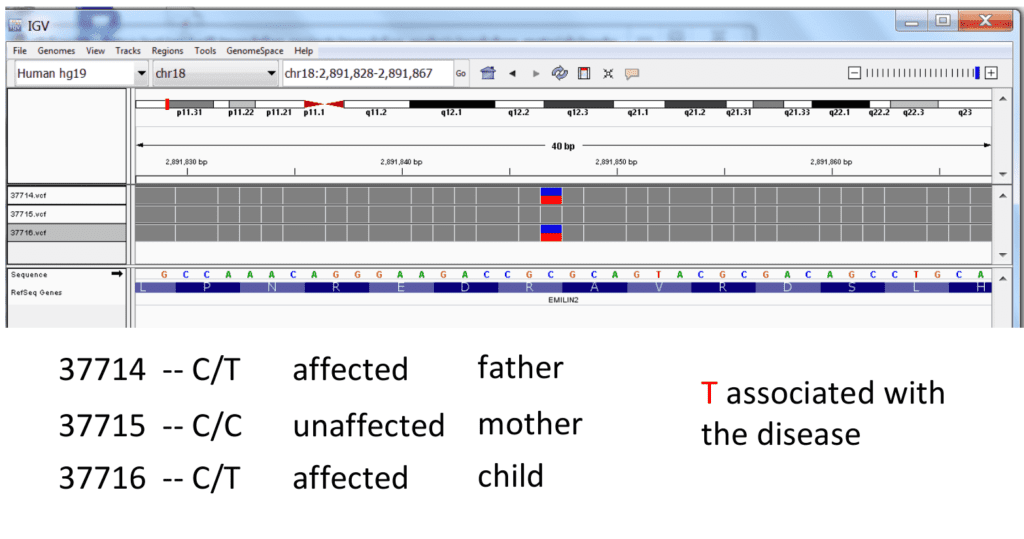

On the research and discovery side, large cohort and population-scale genome sequencing studies find variants or patterns of variance which may predispose people to common diseases such as autism, heart disease, and specific cancers. Sequencing studies also indicate variants influencing drug metabolism, enabling clinicians to personalize prescriptions and dosage to each individual. In the case of rare heritable diseases, sequencing certain members of a family often leads to finding the causal variants.

(image credit: Frederic Reinier, used with permission)

In the past five years, sequencing experiments have linked genomic variants to hundreds of rare diseases:

“Individually, a rare disease may affect only a handful of families. Collectively, rare diseases impact 20 to 30 million people in the U.S. alone.”

For these reasons, significant resources are going toward the reading and analysis of sequences. The National Health Service of the UK has a project to sequence 100,000 genomes of families with members who have rare diseases or cancer by 2017. In the US, the National Human Genome Research Institute (NHGRI) plans to fund common disease research for $240 million and rare disease research for $40 million over the next 4 years. Other kinds of sequencing, such as RNA-seq, microbiome sequencing, and immune system and cancer profile sequencing, will also benefit from efforts to scale bioinformatics and lower the barrier to applying data science to large amounts of sequence data.

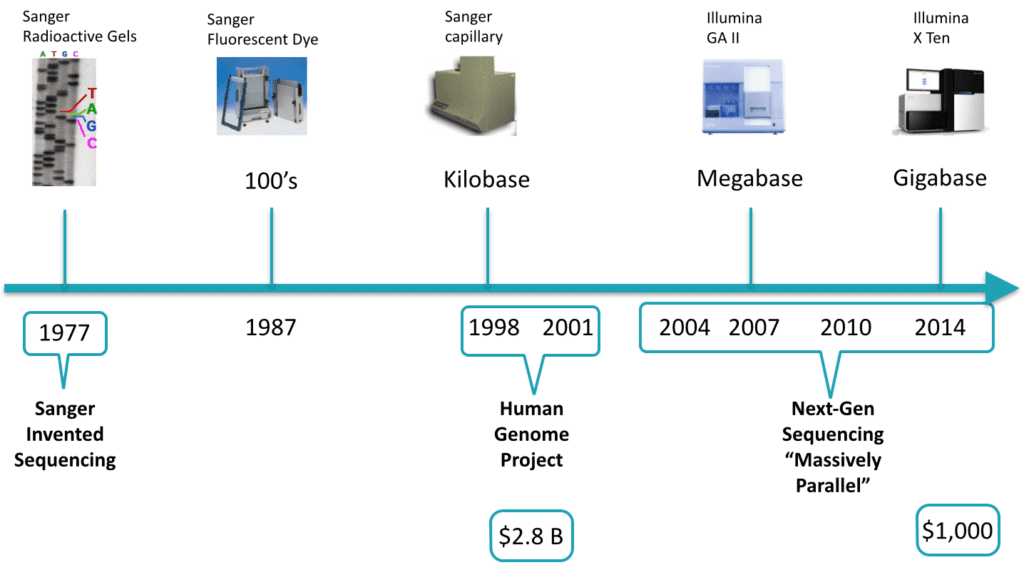

Sequencing technology has seen accelerated growth. Between 1998 and 2001, the first human genome was sequenced, at a cost of $2.8 billion in 2009 dollars. Today, a genome can be sequenced in 3 days for around $1,000 (for more information, see National Institutes of Health: National Human Genome Research Institute > DNA Sequencing Costs). During roughly the first 25 years of sequencing experiments, the chemistry allowed only one stretch of DNA to be sequenced at a time, making it laborious, slow, and expensive. Next-generation sequencing is massively parallel, enabling many stretches of DNA to be sequenced within the same experiment. Also, with molecular indexing, multiple individuals’ DNA can be sequenced together and the data separated out during analysis. It is not implausible to speculate that most people on the planet who opt in will have their genomes sequenced in the not-so-distant future. For more detail about next-generation sequencing, see Coming of age: ten years of next-generation sequencing technologies.

Depending on the application and settings, current sequencing instruments can read ~600 gigabases per day. A medium to large sequencing center has several such instruments running concurrently. As we will see in detail later, one of the challenges facing bioinformatics is that downstream software for analyzing variants has been optimized for specific, non-extensible file formats rather than for the data models themselves. The result is pipeline fragility and obstacles to scalability. Now that sequencing is massively parallel, many are looking toward parallel bioinformatic analysis.
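To get a feel for that throughput, here is a back-of-the-envelope calculation of how many 30x human genomes one instrument could produce per day. It reuses the approximate figures from this post (3 billion bases per genome, 30x coverage, ~600 gigabases per day); the count of 5 instruments is purely an assumed example of "several", and real throughput depends on the application and settings.

```python
GENOME_LENGTH = 3_000_000_000              # roughly 3 billion bases
TARGET_COVERAGE = 30                       # 30x coverage per genome
BASES_PER_DAY = 600_000_000_000            # ~600 gigabases per instrument per day
INSTRUMENTS = 5                            # assumed value for "several" instruments

# Bases that must be read to cover one genome 30 times over.
bases_per_genome = GENOME_LENGTH * TARGET_COVERAGE  # 90 gigabases

genomes_per_instrument_per_day = BASES_PER_DAY / bases_per_genome
genomes_per_center_per_day = genomes_per_instrument_per_day * INSTRUMENTS

print(bases_per_genome)
print(round(genomes_per_instrument_per_day, 2))
print(round(genomes_per_center_per_day, 2))
```

Under these assumptions, a single center produces raw data for dozens of genomes every day, which gives a sense of why downstream analysis needs to scale as well.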

Public Data

Genome sequence data is generally private. The 1000 Genomes Project, which ran between 2007 and 2013, was an initial effort at public “population level sequencing”. By its final phase, it provided some sequencing coverage data for 2,504 individuals from 26 populations. We used the easily accessible data from this project as a resource to build a notebook in Databricks Community Edition.

Next Steps

In the next blog, Parallelizing Genome Variant Analysis, we will look into parallel bioinformatic analysis. You can also skip ahead to Predicting Geographic Population using Genome Variants and K-Means.

Attribution

We want to give a particular callout to the following resources that helped us create the notebook:

- ADAM: Genomics Formats and Processing Patterns for Cloud Scale Computing (Berkeley AMPLab)

- Andy Petrella’s Lightning Fast Genomics with Spark and ADAM and associated GitHub repo.

- Neil Ferguson’s Population Stratification Analysis on Genomics Data Using Deep Learning.

- Matthew Conlen’s Lightning-Viz project.

- Timothy Danford’s SlideShare presentations (on Genomics with Spark)

- Centers for Mendelian Genomics uncovering the genomic basis of hundreds of rare conditions

- NIH genome sequencing program targets the genomic bases of common, rare disease

- The 1000 Genomes Project

We would also like to thank Anthony Joseph, Xiangrui Meng, Hossein Falaki, and Tim Hunter for their additional contributions and reviews.