Generative AI Architecture Patterns

Build production-quality generative AI for any architectural pattern


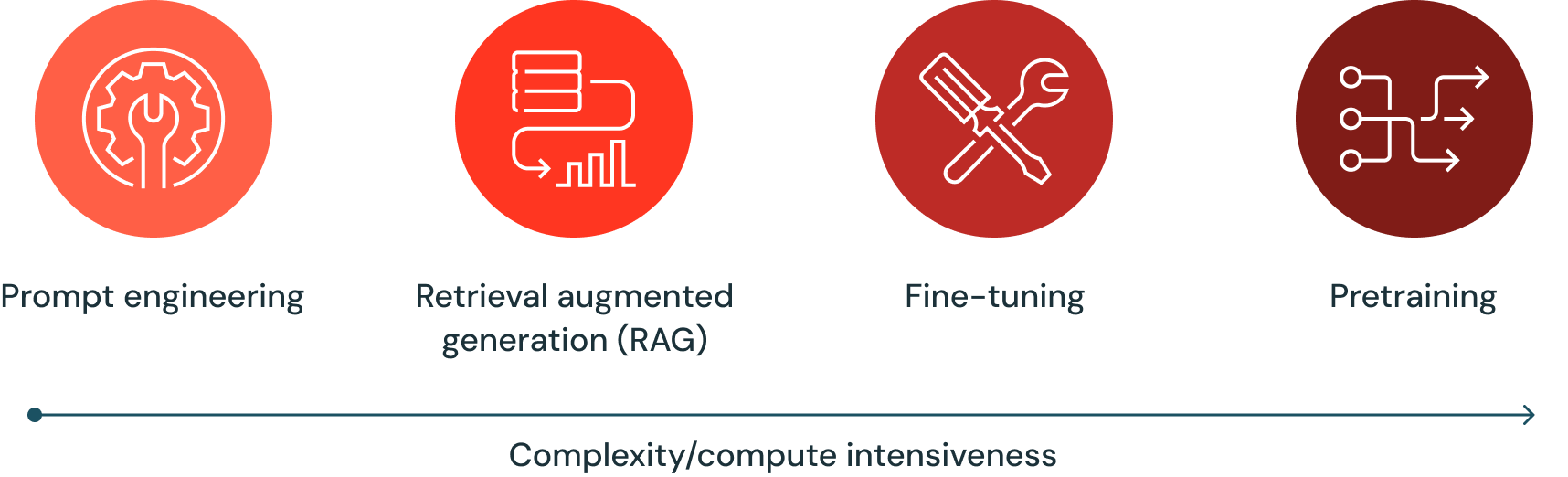

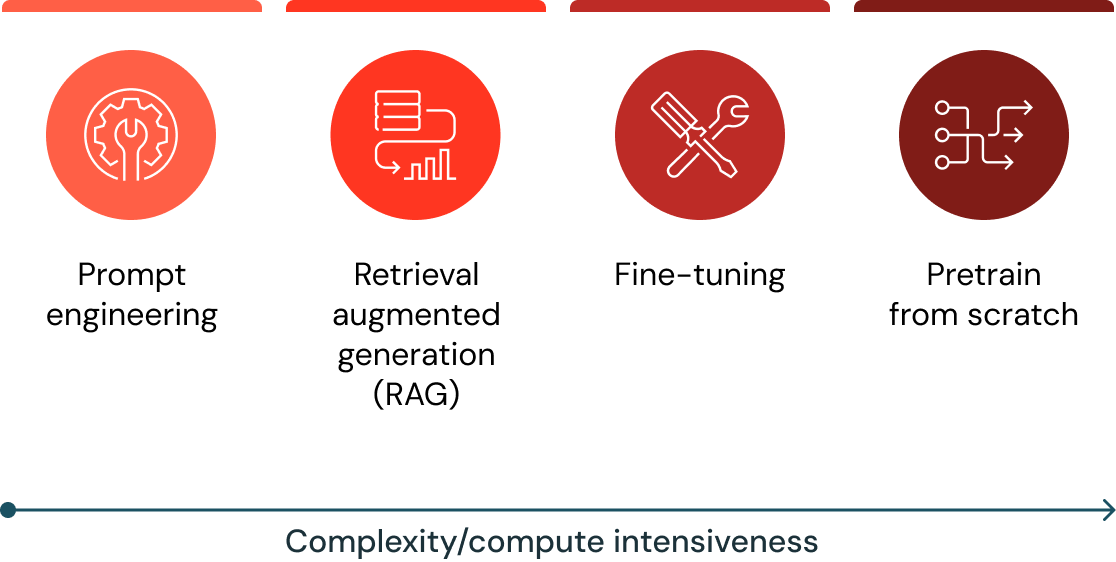

There are four architectural patterns to consider when building a large language model (LLM) solution: prompt engineering, retrieval augmented generation (RAG), fine-tuning and pretraining.

Databricks is the only provider that lets you build high-quality solutions at low cost across all four generative AI architectural patterns:

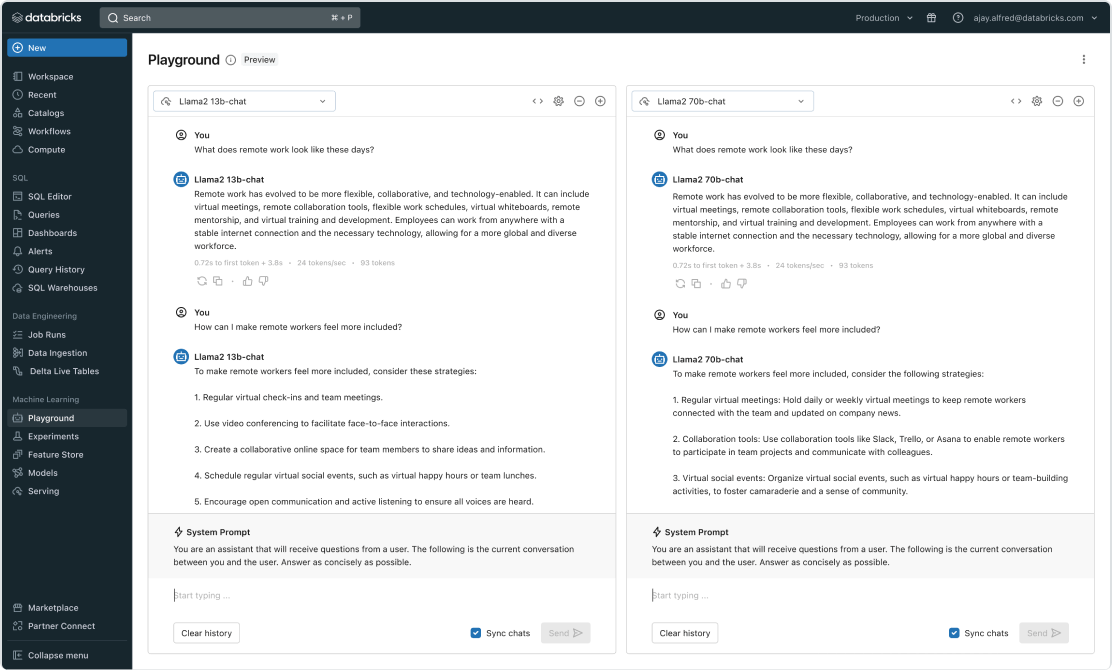

Prompt engineering

Prompt engineering is the practice of customizing prompts to elicit better responses without changing the underlying model. Databricks makes prompt engineering easy: you can find available models in the Marketplace (including popular open source models such as Llama 2 and MPT), serve them behind an endpoint with Model Serving, and evaluate prompts in an easy-to-use UI with Playground or MLflow.
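As a minimal sketch of the idea, assuming no particular model or Databricks API, the Python below builds two prompts for the same question: a bare one and an engineered one with instructions and a worked (few-shot) example. The `Example` class, the `build_prompt` function and the sample text are hypothetical illustrations. Only the text sent to the model changes; the model itself is untouched. Sending the prompt to a served endpoint is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    """One worked question/answer pair shown to the model (few-shot prompting)."""
    question: str
    answer: str


def build_prompt(question: str, examples: list[Example], instructions: str) -> str:
    """Assemble a prompt: instructions, then worked examples, then the new question.

    Only the input text changes between variants; the model weights are not modified.
    """
    parts = [instructions.strip(), ""]
    for ex in examples:
        parts.append(f"Q: {ex.question}")
        parts.append(f"A: {ex.answer}")
        parts.append("")
    parts.append(f"Q: {question}")
    parts.append("A:")
    return "\n".join(parts)


# Hypothetical question, instructions and example, for illustration only.
baseline = build_prompt("What is our refund window?", [], "Answer the question.")
engineered = build_prompt(
    "What is our refund window?",
    [Example("Do you ship overseas?", "Yes. See the shipping policy for details.")],
    "You are a support assistant. Answer in one sentence and cite the relevant policy.",
)
print(engineered)
```

Sending `baseline` and `engineered` to the same model and comparing the responses side by side is the basic loop of prompt engineering.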

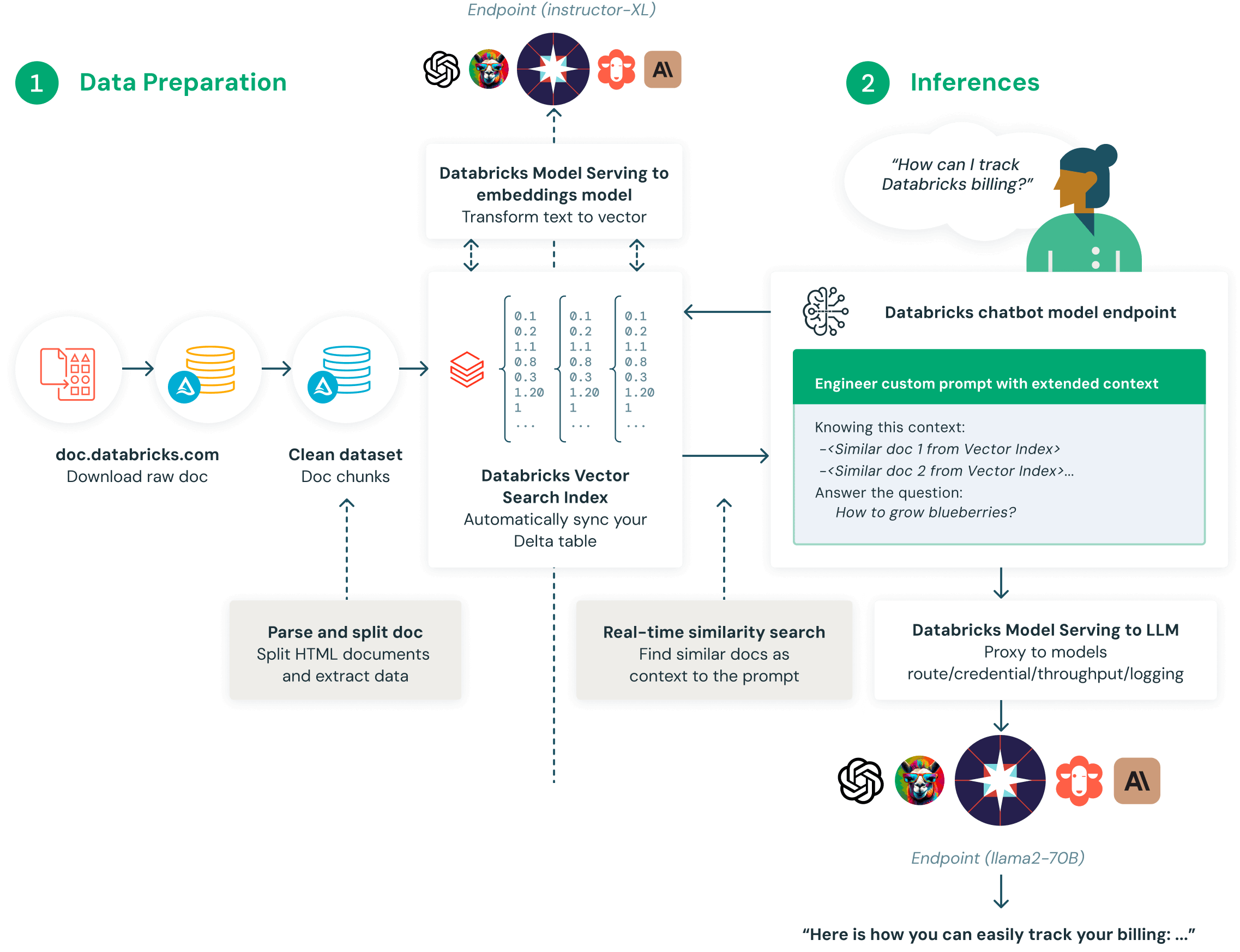

Retrieval augmented generation (RAG)

RAG finds data or documents related to a question or task and passes them to the LLM as context, so the model can give more relevant responses.

Databricks has a suite of RAG tools that helps you combine and optimize all aspects of the RAG process, such as data preparation, retrieval models, language models (either SaaS or open source), ranking and post-processing pipelines, prompt engineering, and training models on custom enterprise data.
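The retrieve-then-generate flow of RAG can be sketched in a few lines of Python. This is a toy, not a Databricks API: it assumes a simple word-count cosine similarity in place of a real embedding model and vector index, and a hypothetical three-document store. The helper names (`tokenize`, `cosine`, `retrieve`, `build_rag_prompt`) are illustrative. The sketch ranks the documents against the question, keeps the top `k`, and places them in the prompt as context; the final call to an LLM is omitted.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a stand-in for a real embedding model."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, docs: list[str], k: int = 2) -> list[str]:
    """Rank documents by similarity to the question and keep the top k."""
    q = Counter(tokenize(question))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_rag_prompt(question: str, docs: list[str], k: int = 2) -> str:
    """Place the retrieved documents in the prompt as context for the LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical document store, for illustration only.
docs = [
    "The refund policy allows returns within 30 days.",
    "The office is closed on public holidays.",
    "Shipping to Europe takes 5 to 7 business days.",
]
prompt = build_rag_prompt("What is the refund policy?", docs, k=1)
print(prompt)
```

In a production pipeline the word-count scoring would typically be replaced by embeddings and a vector search index, and a ranking or post-processing step could reorder or filter the retrieved passages before they reach the prompt.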

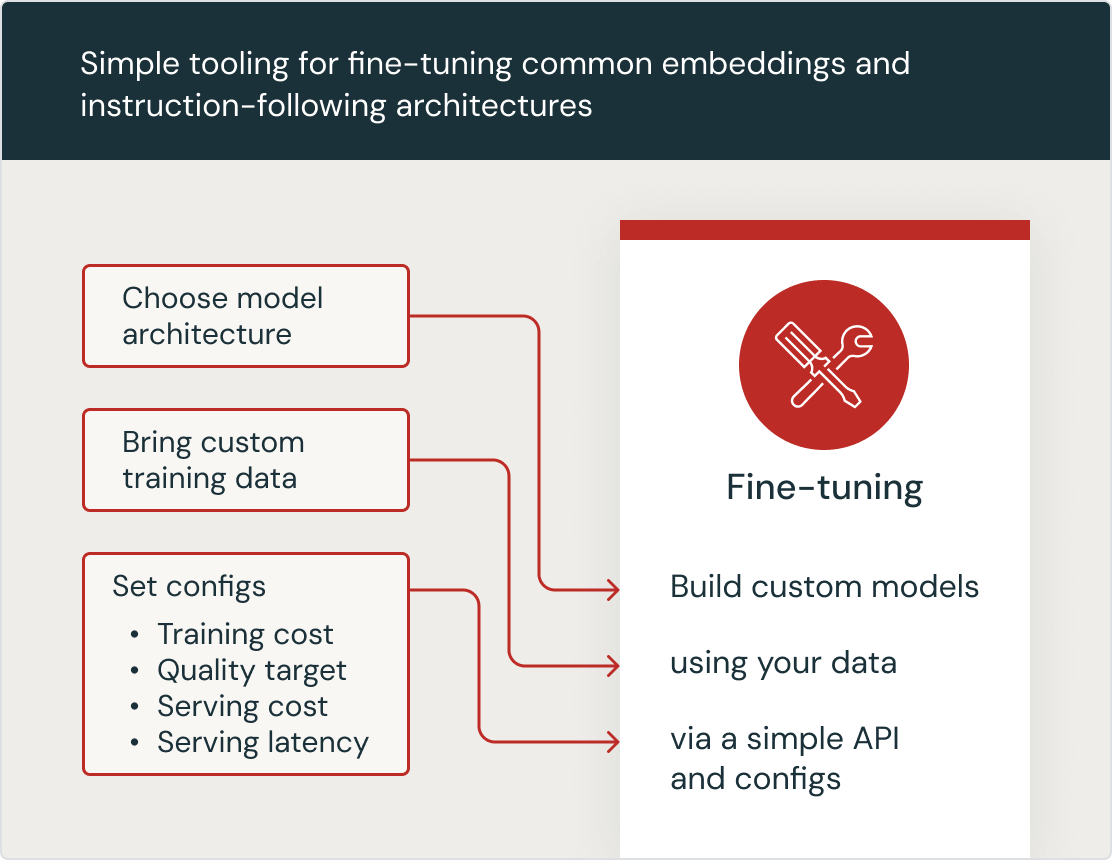

Fine-tuning

Fine-tuning adapts an existing general-purpose LLM through additional training on your organization's data and IP. Databricks fine-tuning makes this easy: you start with your preferred model, including models curated by Databricks such as MPT-30B, Llama 2 and BGE, and then train it further on new datasets.

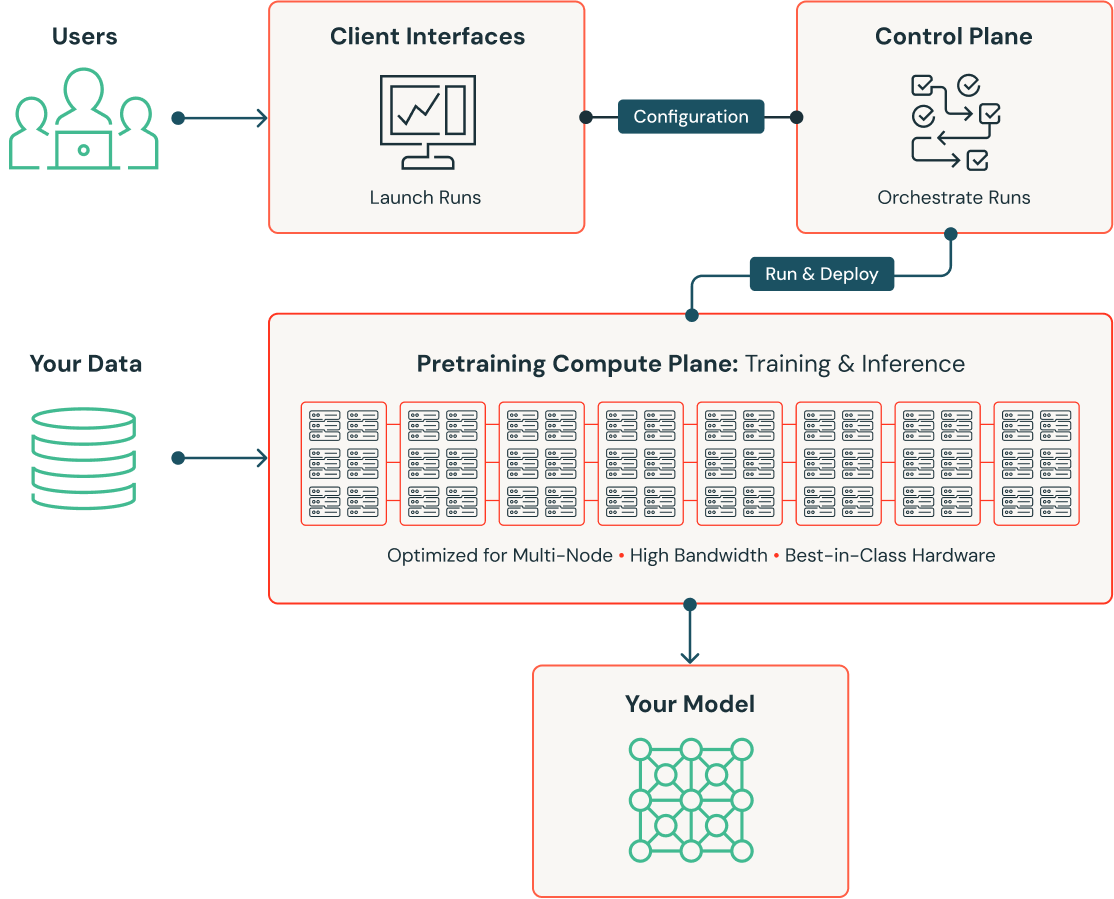

Pretraining

Pretraining is the practice of building a new LLM from scratch so that the model's foundational knowledge is tailored to your specific domain. Because the model is trained on your organization's data and IP, the result is a customized, uniquely differentiated model. Databricks Mosaic AI Pretraining is an optimized training solution that can build new multibillion-parameter LLMs in days with up to 10x lower training costs.

Choosing the best pattern

These architectural patterns are not mutually exclusive. Rather, they can (and should) be combined to take advantage of the strengths of each in different generative AI deployments. Databricks is the only provider that enables all four generative AI architectural patterns, ensuring you have the most options and can evolve as your business requirements demand.