Lakeflow

Ingest, transform, and orchestrate data with a unified data engineering solution

TOP COMPANIES USING LAKEFLOW

The end-to-end solution for delivering high-quality data.

Tools that make it easier to build reliable data pipelines for analytics and AI.

85% faster development

50% cost reduction

99% reduction in pipeline latency

2,500 job runs per day

Corning uses Lakeflow to simplify data orchestration, creating an automated, repeatable process for multiple teams across the organization. These automated workflows move massive amounts of data through a medallion architecture, from Bronze tables to Gold tables.

Unified tools for data engineers

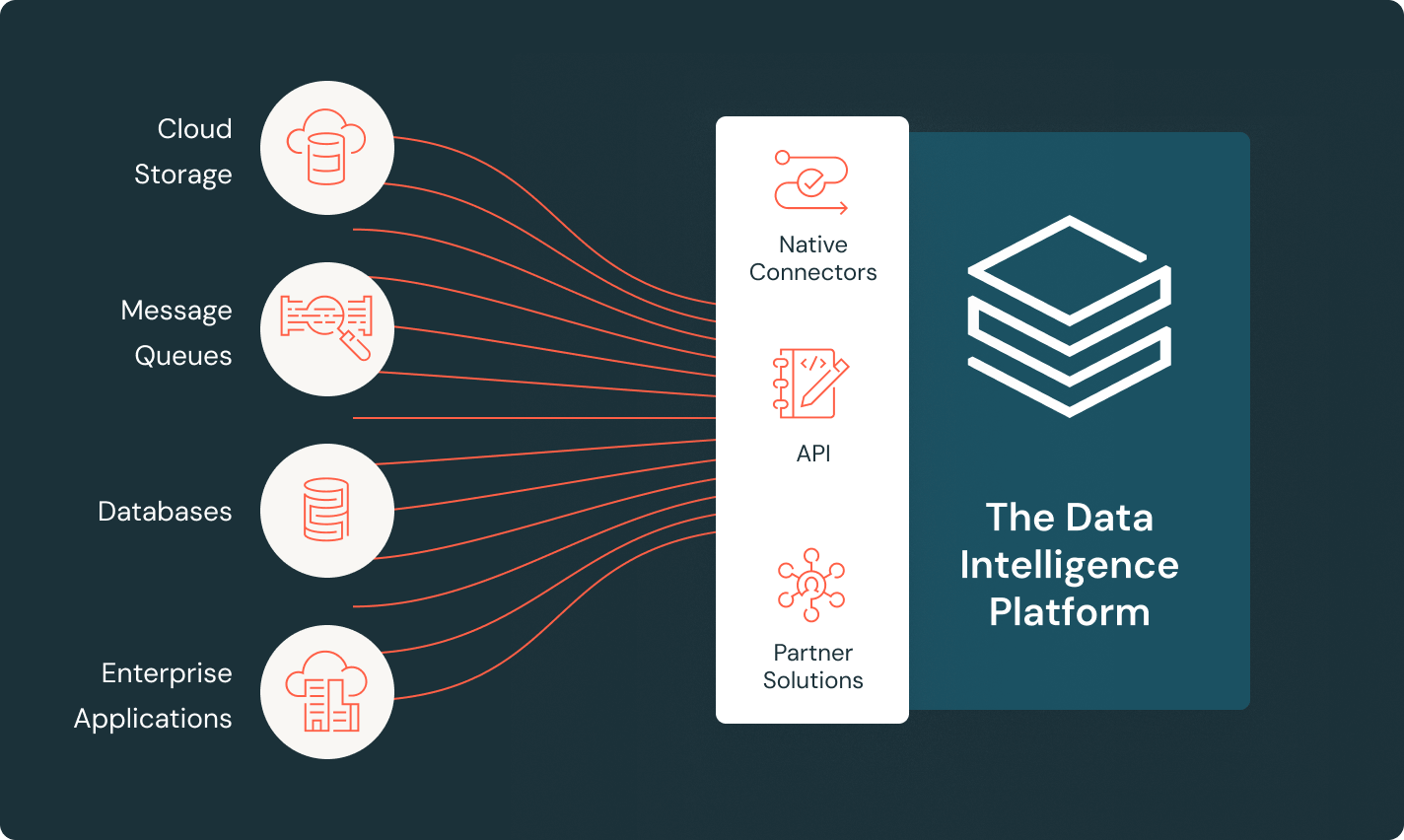

Lakeflow Connect

Efficient ingestion connectors and native integration with the Data Intelligence Platform make data readily available for analytics and AI, under unified governance.

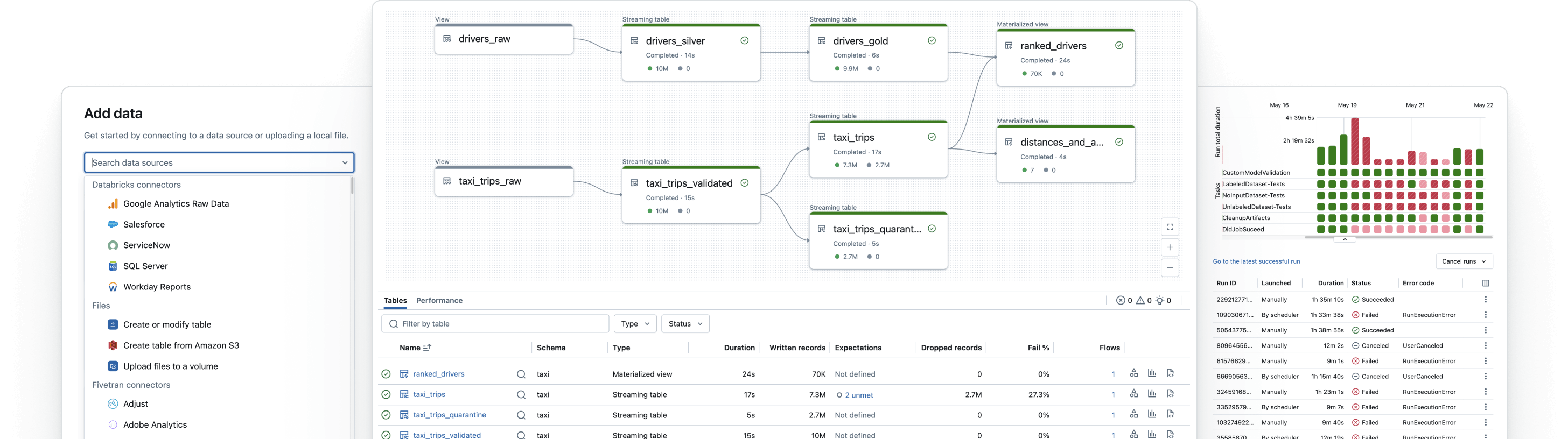

Spark Declarative Pipelines

Simplify batch and streaming ETL with automated data quality, change data capture (CDC), data ingestion, transformation, and centralized governance.

Lakeflow Jobs

Equip teams to better automate and orchestrate any ETL, analytics, or AI workflow, with deep observability, high reliability, and support for a broad range of task types.

Unity Catalog

Govern all your data assets with the industry's only unified and open governance solution for data and AI, built into the Databricks Data Intelligence Platform.

Lakehouse storage

Unify the data in your lakehouse, across all formats and types, for all your analytics and AI workloads.

Data Intelligence Platform

Explore the full range of tools available on the Databricks Data Intelligence Platform to seamlessly integrate data and AI across your organization.

Build reliable data pipelines

Unlock the value of your data, wherever it lives

Bring all your data into the Data Intelligence Platform with Lakeflow connectors for file sources, enterprise applications, and databases. Fully managed connectors built on incremental data processing keep ingestion efficient, while built-in governance keeps you in control of your data across the entire pipeline.

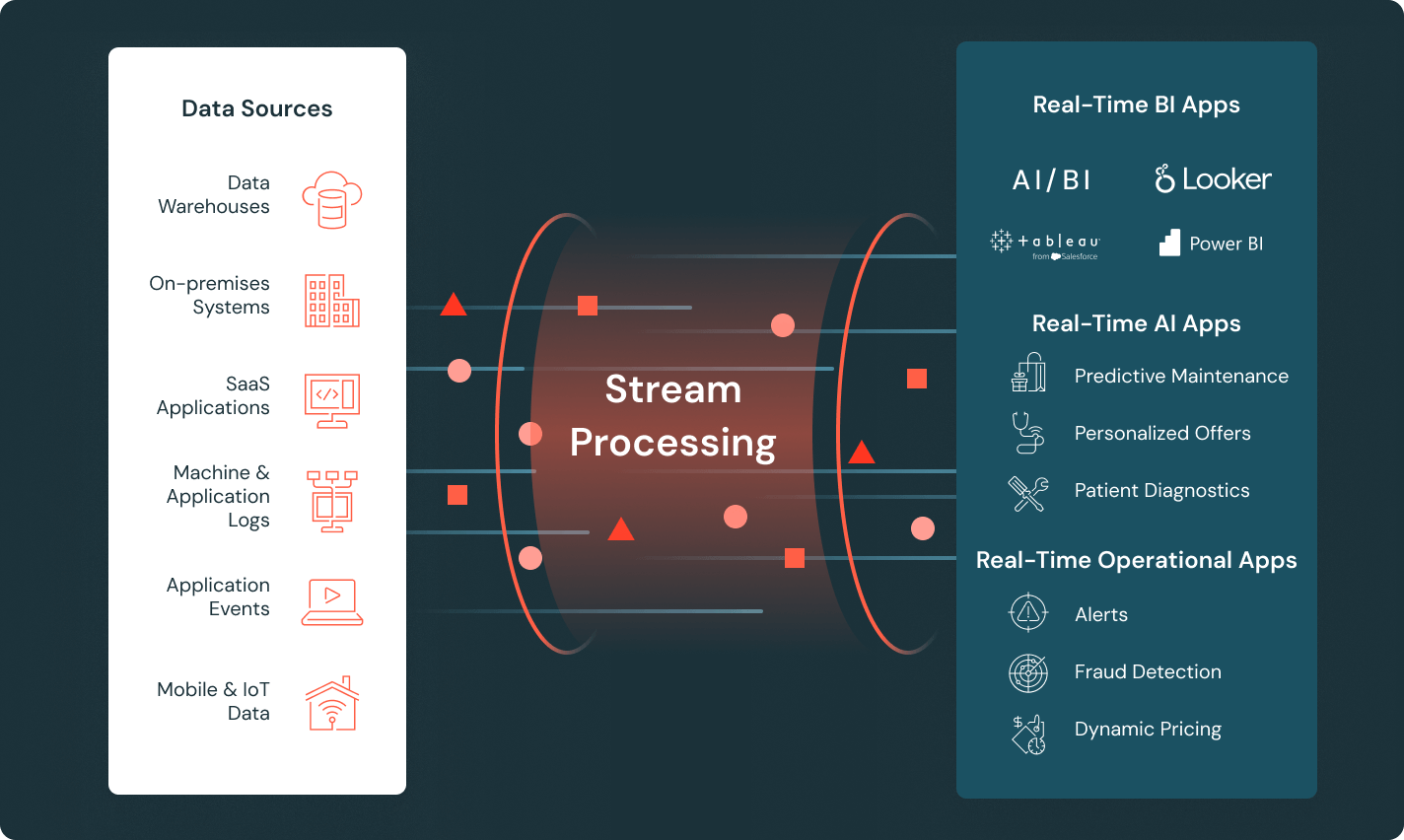
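To make "incremental data processing" concrete, here is a minimal sketch in plain Python. It is not the Lakeflow connector API. It assumes a hypothetical source whose rows carry an increasing `updated_at` value, and a hypothetical `ingest_incremental` helper that keeps a high-water mark so each run reads only rows newer than the last one it saw.

```python
from dataclasses import dataclass, field


@dataclass
class IngestState:
    """Checkpoint carried between runs (hypothetical; held in memory here)."""
    high_water_mark: int = 0
    target: list = field(default_factory=list)


def ingest_incremental(source_rows, state):
    """Append only rows newer than the stored high-water mark.

    Returns the number of rows ingested in this run.
    """
    new_rows = [r for r in source_rows if r["updated_at"] > state.high_water_mark]
    if new_rows:
        state.target.extend(new_rows)
        state.high_water_mark = max(r["updated_at"] for r in new_rows)
    return len(new_rows)


# Illustrative data, not taken from any real system.
source = [
    {"id": 1, "updated_at": 10},
    {"id": 2, "updated_at": 20},
]
state = IngestState()
first_run = ingest_incremental(source, state)   # reads both rows

source.append({"id": 3, "updated_at": 30})
second_run = ingest_incremental(source, state)  # reads only the new row
```

The second run touches one row instead of three, which is the efficiency gain incremental ingestion is after. A managed connector would also have to handle updates, deletes, and schema changes, which this sketch leaves out.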

Deliver fresh data for real-time insights

Build pipelines that process real-time data from sensors, clickstreams, and IoT devices to feed real-time applications. Reduce operational complexity with Spark Declarative Pipelines, and simplify pipeline development with streaming tables.

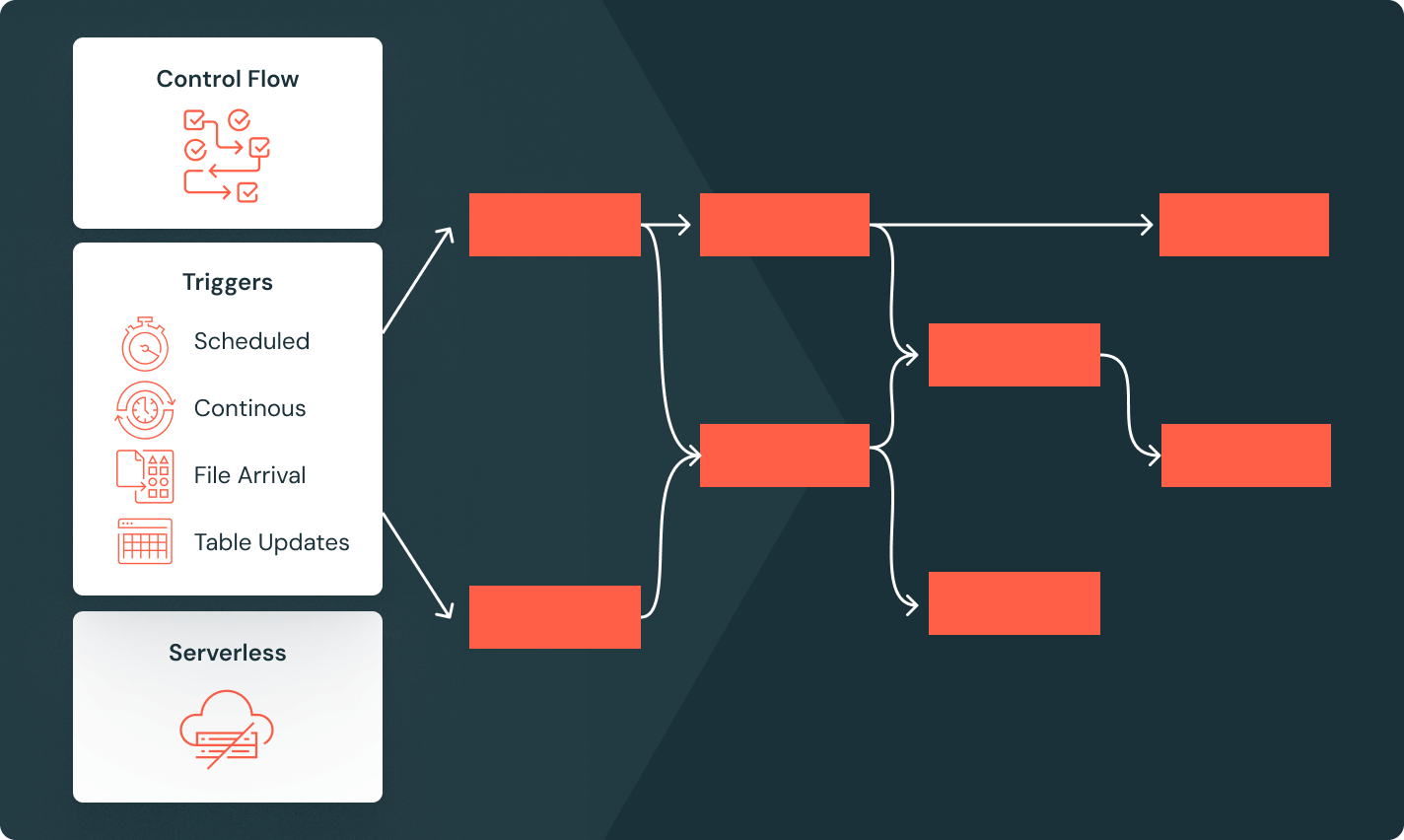
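The general idea behind streaming processing is to handle each new record as it arrives instead of recomputing over the full dataset. The sketch below shows that idea with a plain Python generator. It does not use the Spark Declarative Pipelines API, and the `running_clicks_per_page` function and the sample clickstream are hypothetical.

```python
from collections import defaultdict


def running_clicks_per_page(events):
    """Consume events one at a time and yield the updated count for each page."""
    counts = defaultdict(int)
    for event in events:
        counts[event["page"]] += 1
        yield event["page"], counts[event["page"]]


# Illustrative clickstream; in practice this would be an unbounded source.
clickstream = iter([
    {"page": "/home"},
    {"page": "/pricing"},
    {"page": "/home"},
])
updates = list(running_clicks_per_page(clickstream))
```

Each event produces one update and is read exactly once, so the cost of a new event does not depend on how much history has already been processed.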

Orchestrate complex workflows with ease

Define reliable analytics and AI workflows with a managed orchestrator built into your data platform. Implement complex DAGs easily using advanced control-flow features such as conditional execution, loops, and a variety of job triggers.

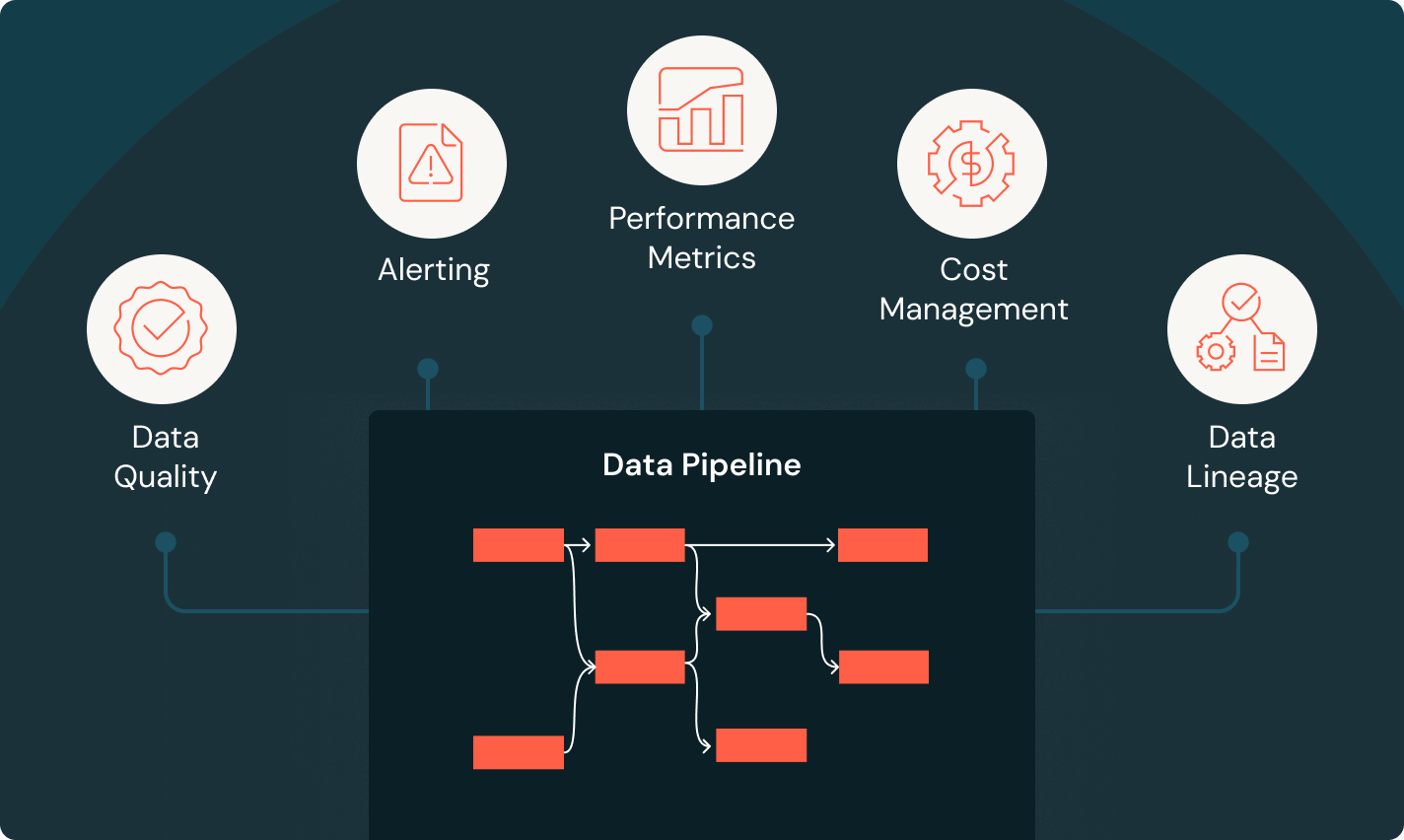
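As a rough illustration of dependency ordering and conditional execution in a DAG, here is a small sketch built on Python's standard `graphlib` module. It is not the Lakeflow Jobs API: the `run_workflow` helper, the task names, and the sample values are all hypothetical, and loops and triggers are not covered.

```python
from graphlib import TopologicalSorter


def run_workflow(dag, tasks, conditions=None):
    """Run tasks in dependency order, skipping any task whose condition fails.

    A task is also skipped when one of its upstream tasks was skipped.
    Returns (results, skipped).
    """
    conditions = conditions or {}
    results, skipped = {}, set()
    for name in TopologicalSorter(dag).static_order():
        condition = conditions.get(name, lambda _results: True)
        if dag[name] & skipped or not condition(results):
            skipped.add(name)
            continue
        results[name] = tasks[name](results)
    return results, skipped


# Hypothetical task graph: each task maps to the set of tasks it depends on.
dag = {
    "ingest": set(),
    "validate": {"ingest"},
    "transform": {"validate"},
    "publish": {"transform"},
}
tasks = {
    "ingest": lambda r: [3, -1, 7],
    "validate": lambda r: all(v >= 0 for v in r["ingest"]),
    "transform": lambda r: [v * 2 for v in r["ingest"]],
    "publish": lambda r: len(r["transform"]),
}
# Conditional execution: transform only when validation passed.
conditions = {"transform": lambda r: r["validate"]}

results, skipped = run_workflow(dag, tasks, conditions)
```

Because the sample input contains a negative value, validation fails, `transform` is skipped by its condition, and `publish` is skipped because its upstream task never ran.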

Monitor every step of every pipeline

Get full visibility into pipeline health with detailed real-time metrics. Custom alerts tell you exactly when a problem occurs, and detailed visualizations help you pinpoint the root cause and resolve it faster. End-to-end monitoring keeps you in full control of your data and pipelines.
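A custom alert is, at its simplest, a threshold rule evaluated against the metrics of a run. The sketch below shows that pattern in plain Python. The `AlertRule` class, the `evaluate_alerts` function, and the metric names are hypothetical and do not reflect how Lakeflow defines or delivers alerts.

```python
from dataclasses import dataclass


@dataclass
class AlertRule:
    metric: str       # name of the metric to check (hypothetical names below)
    threshold: float  # alert fires when the metric is strictly above this
    message: str


def evaluate_alerts(metrics, rules):
    """Return the message of every rule whose metric exceeds its threshold."""
    return [
        rule.message
        for rule in rules
        if metrics.get(rule.metric, 0) > rule.threshold
    ]


rules = [
    AlertRule("run_duration_seconds", 600, "Pipeline run exceeded 10 minutes"),
    AlertRule("failed_records", 0, "Pipeline run had failed records"),
]
# Illustrative metrics for a single run.
latest_run = {"run_duration_seconds": 742, "failed_records": 0}
alerts = evaluate_alerts(latest_run, rules)
```

Here only the duration rule fires, since the run took 742 seconds and no records failed.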

Take the next step

Related content

Data engineering FAQ

Ready to put data and AI at the core of your business?

Start your data transformation journey