Leading companies using Lakeflow

An end-to-end solution for delivering high-quality data.

Tooling that makes it easy for every team to build reliable data pipelines for analytics and AI.

85% faster development

99% reduction in pipeline latency

Unified tooling for data engineers

Lakeflow Connect

Efficient data ingestion connectors and native integration with the Data Intelligence Platform give you easy access to analytics and AI, with unified governance.

Spark Declarative Pipelines

Simplify batch and streaming ETL with automated data quality, change data capture (CDC), data ingestion, transformation, and unified governance.

Lakeflow Jobs

Equip teams to better automate and orchestrate any ETL, analytics, and AI workflow with deep observability, high reliability, and broad task type support.

Unity Catalog

Seamlessly govern all your data assets with the industry's only unified and open governance solution, built into the Databricks Data Intelligence Platform.

Lakehouse Storage

Unify data of every format and type in your lakehouse for all your analytics and AI workloads.

Data Intelligence Platform

Use the range of tools in the Databricks Data Intelligence Platform to seamlessly integrate data and AI across your organization.

Build reliable data pipelines

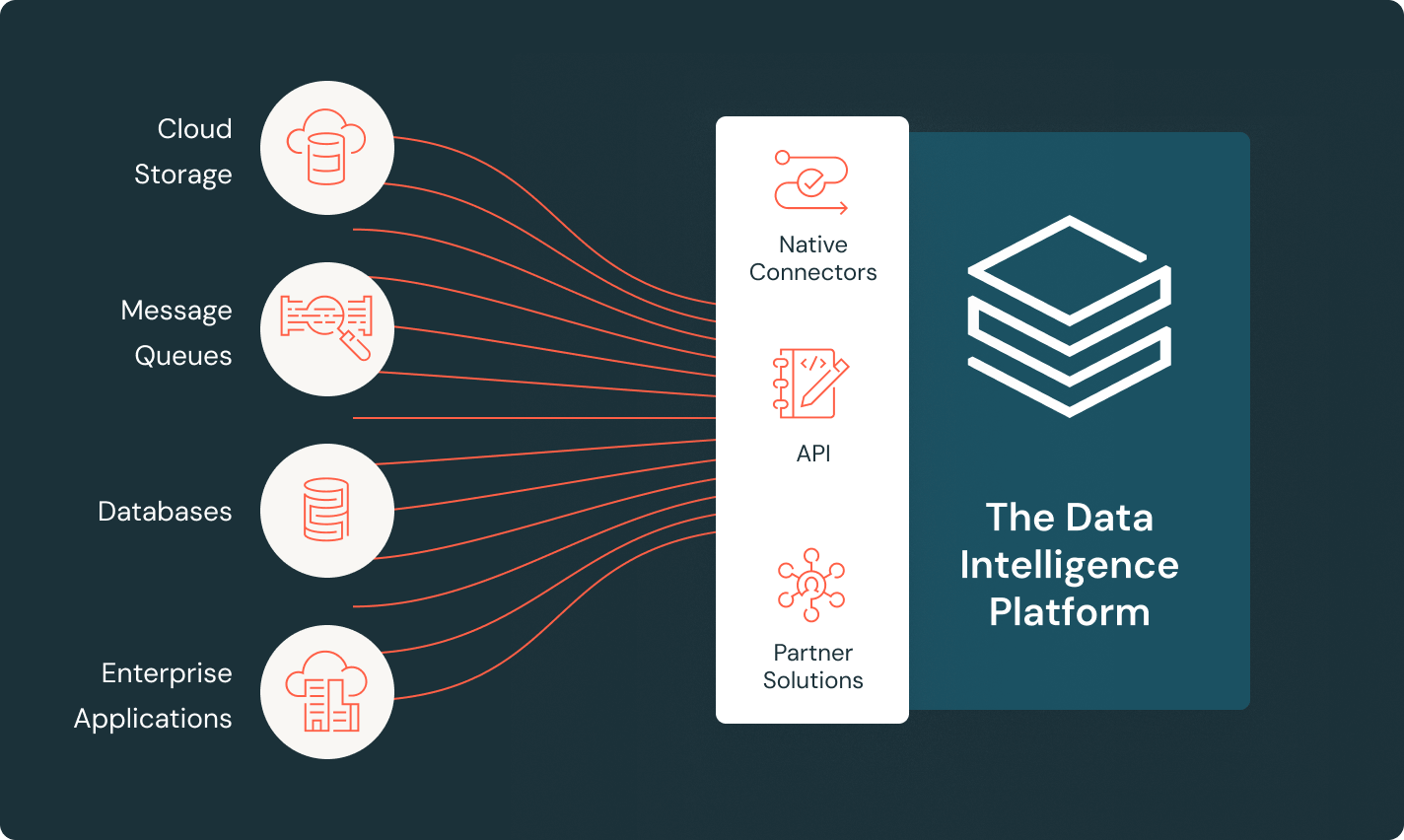

Unlock value from your data, wherever it lives

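Use Lakeflow connectors to bring all your data from file sources, enterprise applications, and databases into the Data Intelligence Platform. Fully managed connectors that use incremental data processing provide efficient ingestion, and built-in governance helps you keep control of your data throughout the pipeline.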
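To make the idea of incremental data processing concrete, here is a minimal sketch in plain Python. It is not Lakeflow's connector API: the `IncrementalLoader` class, the `version` column, and the in-memory `target` dictionary are all hypothetical stand-ins chosen for illustration. The point it shows is that each run reads only rows changed since the last saved checkpoint instead of reloading the whole source.

```python
from dataclasses import dataclass, field


@dataclass
class IncrementalLoader:
    """Hypothetical loader that remembers the highest change version it has ingested."""

    last_version: int = 0                        # checkpoint kept between runs
    target: dict = field(default_factory=dict)   # stands in for the destination table

    def ingest(self, source_rows):
        # Select only rows that changed since the previous run.
        new_rows = [r for r in source_rows if r["version"] > self.last_version]
        # Apply changes in version order so the latest value wins.
        for row in sorted(new_rows, key=lambda r: r["version"]):
            self.target[row["id"]] = row["value"]   # upsert by primary key
        if new_rows:
            self.last_version = max(r["version"] for r in new_rows)
        return len(new_rows)


source = [
    {"id": 1, "value": "a", "version": 1},
    {"id": 2, "value": "b", "version": 2},
]
loader = IncrementalLoader()
first_run = loader.ingest(source)     # both rows are new

source.append({"id": 1, "value": "a2", "version": 3})
second_run = loader.ingest(source)    # only the version-3 change is processed
```

A managed connector would persist the checkpoint durably and handle deletes and schema changes, which this sketch leaves out.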

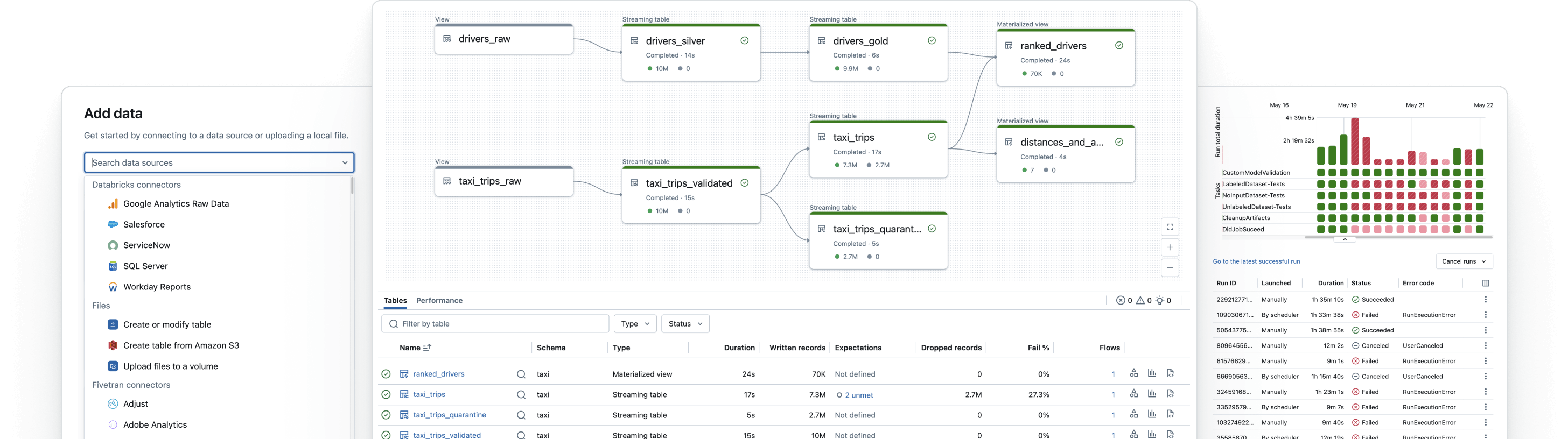

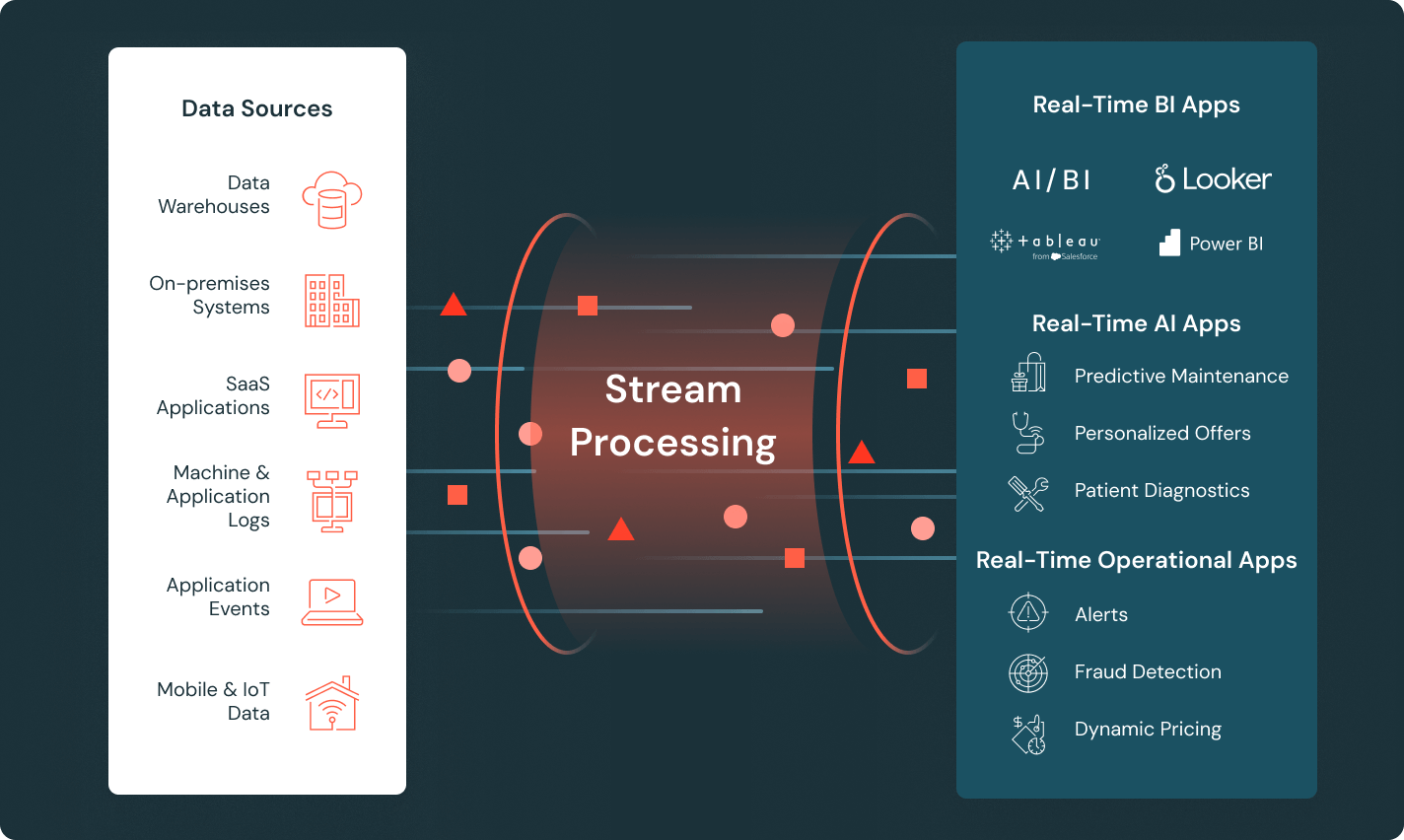

Deliver fresh data for real-time insights

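Build pipelines that process data arriving in real time from sensors, clickstreams, and IoT devices, and feed real-time applications. Use Spark Declarative Pipelines to reduce operational complexity, and use streaming tables to simplify pipeline development.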

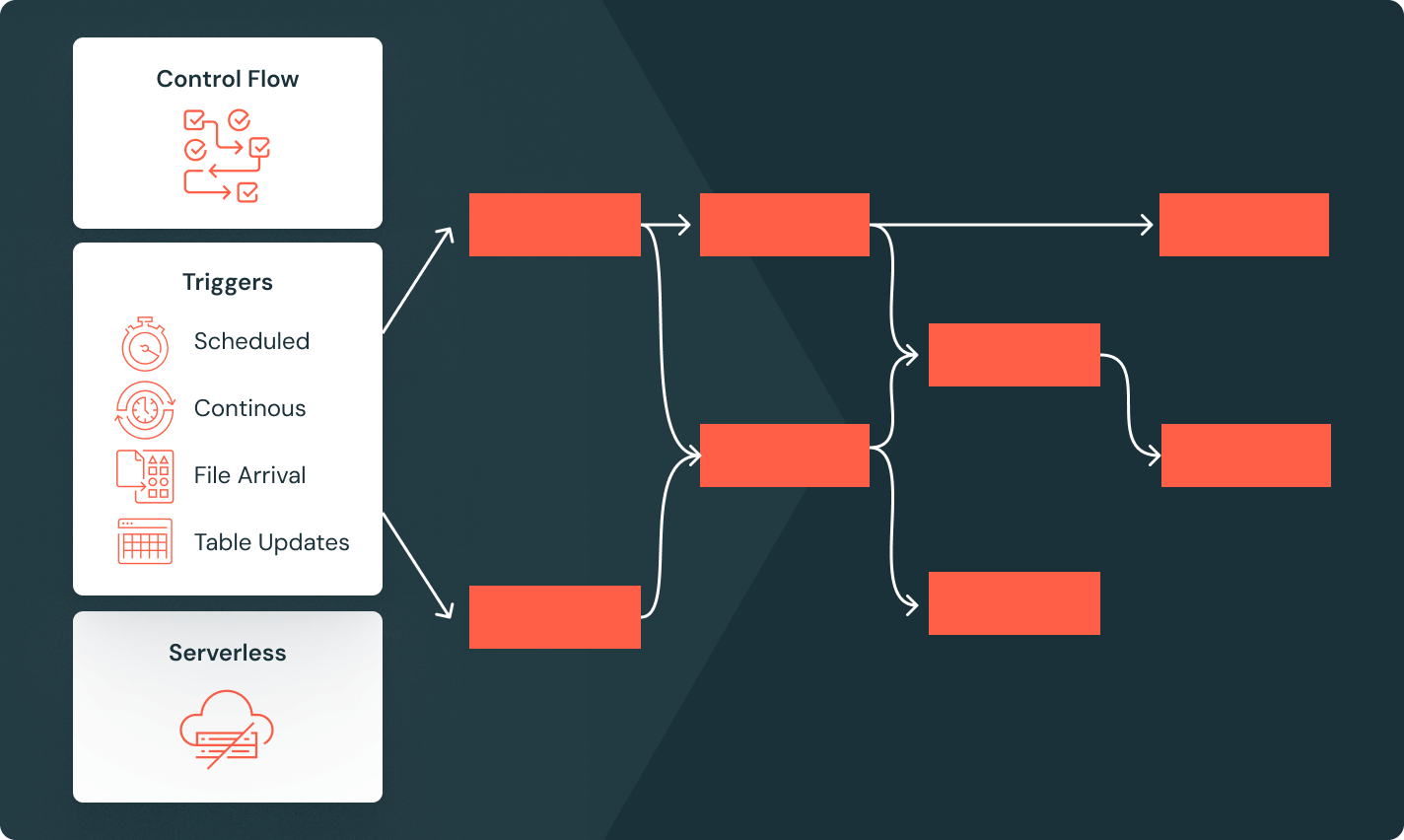

Orchestrate complex workflows with ease

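Define reliable analytics and AI workflows with a managed orchestrator integrated with your data platform. Implement complex DAGs easily with enhanced control flow capabilities such as conditional execution, looping, and a variety of job triggers.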
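As a rough illustration of conditional execution and looping in a DAG, the following sketch uses only the Python standard library. It does not use the Lakeflow Jobs API: `run_workflow`, `for_each`, and the task names are hypothetical, and a real orchestrator would add retries, triggers, and parallelism.

```python
from graphlib import TopologicalSorter


def for_each(input_task, fn):
    """Build a task that applies fn to every item produced by input_task (a simple loop)."""
    return lambda results: [fn(item) for item in results[input_task]]


def run_workflow(tasks, deps, conditions=None):
    """Run tasks in dependency order, skipping a task when its condition is
    false or when any of its upstream tasks was skipped."""
    conditions = conditions or {}
    results, skipped = {}, set()
    for name in TopologicalSorter(deps).static_order():
        if any(upstream in skipped for upstream in deps.get(name, ())):
            skipped.add(name)
            continue
        condition = conditions.get(name)
        if condition is not None and not condition(results):
            skipped.add(name)
            continue
        results[name] = tasks[name](results)
    return results, skipped


tasks = {
    "extract": lambda results: [3, 0, 5],
    "validate": lambda results: all(x >= 0 for x in results["extract"]),
    "transform": for_each("extract", lambda x: x * 2),
    "alert": lambda results: "bad data",
}
deps = {
    "extract": set(),
    "validate": {"extract"},
    "transform": {"validate"},
    "alert": {"validate"},
}
conditions = {
    "transform": lambda results: results["validate"],    # run only if validation passed
    "alert": lambda results: not results["validate"],    # run only if validation failed
}
results, skipped = run_workflow(tasks, deps, conditions)
```

Here validation passes, so `transform` runs over each extracted item and `alert` is skipped.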

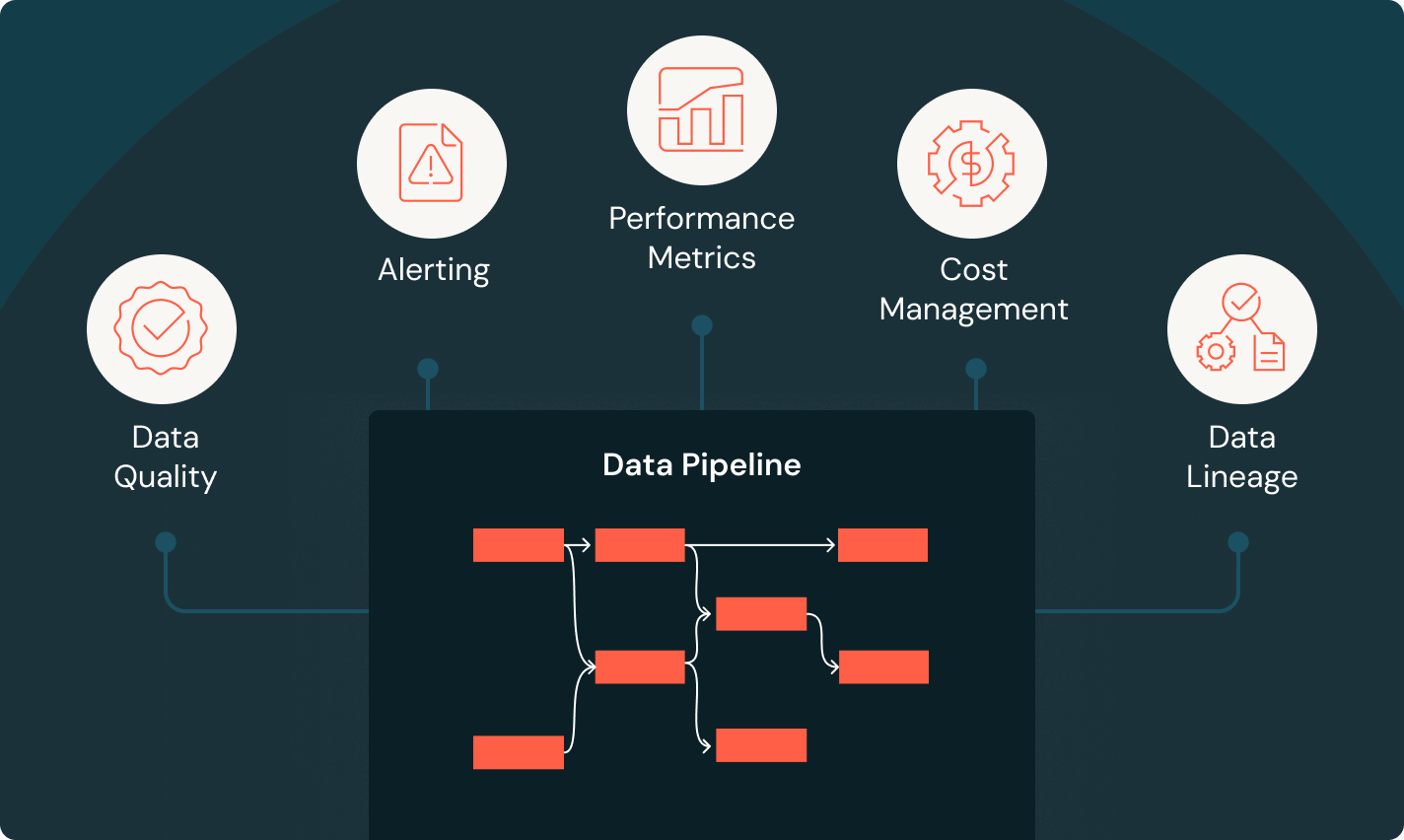

Monitor every step of every pipeline

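Get full visibility into pipeline health with comprehensive, real-time metrics. Custom alerts ensure you know exactly when issues occur, and detailed visuals pinpoint the root cause of failures so you can troubleshoot quickly. With access to end-to-end monitoring, you stay in control of your data and pipelines.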
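One way to picture custom alerts is as threshold rules evaluated against a snapshot of pipeline metrics. The sketch below is plain Python under that assumption. The `AlertRule` class and the metric names `latency_seconds` and `failed_rows` are hypothetical and do not come from Lakeflow.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class AlertRule:
    name: str                          # label shown when the alert fires
    metric: str                        # key to look up in the metrics snapshot
    breached: Callable[[float], bool]  # returns True when the value is unhealthy


def evaluate(rules, metrics):
    """Return the names of rules whose metric is present and breaches its threshold."""
    return [
        rule.name
        for rule in rules
        if rule.metric in metrics and rule.breached(metrics[rule.metric])
    ]


rules = [
    AlertRule("high latency", "latency_seconds", lambda value: value > 60),
    AlertRule("failed rows", "failed_rows", lambda value: value > 0),
]
fired = evaluate(rules, {"latency_seconds": 12.5, "failed_rows": 3})
```

In this snapshot only the failed-rows rule fires, since latency is under its threshold.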