Data Lakehouse

What is a data lakehouse?

A data lakehouse is a new, open data management architecture. It combines the flexibility, low cost, and scale of data lakes with the data management capabilities and ACID transactions of data warehouses, so that business intelligence (BI) and machine learning (ML) can run on all of an organization's data.


Data lakehouse: simple, flexible, and cost-efficient

Data lakehouses rely on a new, open system design: data structures and data management features similar to those of a data warehouse are implemented directly on the kind of low-cost storage used for data lakes. Because both live in a single system, teams can work with data without accessing multiple systems, which speeds up their work considerably. Data lakehouses also ensure that teams always have the most complete and up-to-date data available for their data science, machine learning, and business analytics projects.

Key technologies behind the data lakehouse

Several key technological advances have made the data lakehouse possible:

- metadata layers for data lakes

- new query engine designs that provide high-performance SQL execution on data lakes

- optimized access for data science and machine learning tools.

Metadata layers, such as the open source Delta Lake, sit on top of open file formats (Parquet files, for example) and track which files belong to which table version. This is what lets them offer rich management features such as ACID transactions. Metadata layers also enable other features common in data lakehouses: streaming I/O support (which removes the need for a separate message bus such as Kafka), time travel to earlier table versions, schema enforcement and evolution, and data validation.

Performance is essential if the data lakehouse is to become the predominant data architecture in the enterprise, since it is one of the main reasons data warehouses still exist in the two-tier architecture. Data lakes built on low-cost object stores have historically been slow to query, but new query engine designs now deliver high-performance SQL analytics. They rely on several optimizations: caching hot data in RAM or on SSDs (possibly transcoded into more efficient formats), data layout optimizations that cluster data frequently accessed together, auxiliary data structures such as statistics and indexes, and vectorized execution on modern CPUs. Combined, these technologies let data lakehouses reach performance on large datasets that rivals popular data warehouses, as measured by TPC-DS benchmarks.

Because data lakehouses use open data formats such as Parquet, data scientists and machine learning engineers can access lakehouse data very easily. They can use popular DS/ML tools such as pandas, TensorFlow, and PyTorch, which can already read sources like Parquet and ORC.
Spark DataFrames even provide declarative interfaces for these open formats, which opens the door to further I/O optimization. Other lakehouse features, such as audit history and time travel, also help improve reproducibility in machine learning.

To learn more about the technological advances behind the data lakehouse, read the CIDR paper "Lakehouse: A New Generation of Open Platforms that Unify Data Warehousing and Advanced Analytics" and the research paper "Delta Lake: High-Performance ACID Table Storage over Cloud Object Stores".
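To make the metadata-layer idea more concrete, here is a minimal Python sketch of a table transaction log. It is a hypothetical toy, not Delta Lake's format or API: the names `ToyTableLog`, `AddFile`, `RemoveFile`, `files_to_scan`, and the single-column min/max statistics are assumptions made for illustration. It shows three ideas described above: each commit records which files are added to or removed from the table, so any earlier version can be rebuilt by replaying the log (time travel); a writer that read a stale version is rejected instead of silently overwriting a concurrent change; and per-file statistics let a reader skip files that cannot match a predicate. The sketch is single-threaded and in-memory, whereas a real metadata layer persists its log next to the data files and needs guarantees from the storage layer to arbitrate between concurrent writers.

```python
from dataclasses import dataclass, field


class ConcurrentCommitError(Exception):
    """Raised when another writer committed first (optimistic concurrency conflict)."""


@dataclass(frozen=True)
class AddFile:
    # Hypothetical action: a data file added to the table, with min/max stats for one column.
    path: str
    min_value: int
    max_value: int


@dataclass(frozen=True)
class RemoveFile:
    # Hypothetical action: a data file logically removed from the table.
    path: str


@dataclass
class ToyTableLog:
    """Hypothetical in-memory transaction log: entry i holds the actions of table version i."""
    entries: list = field(default_factory=list)

    def latest_version(self) -> int:
        return len(self.entries) - 1

    def commit(self, read_version: int, actions: list) -> int:
        # Optimistic concurrency: reject the commit if the log moved since the writer read it.
        if read_version != self.latest_version():
            raise ConcurrentCommitError(
                f"read v{read_version}, but the log is at v{self.latest_version()}"
            )
        # A single append publishes all actions together: readers see all of them or none.
        self.entries.append(list(actions))
        return self.latest_version()

    def snapshot(self, version: int) -> dict:
        # Time travel: replay the log up to `version` to get the live files of that version.
        files = {}
        for actions in self.entries[: version + 1]:
            for action in actions:
                if isinstance(action, AddFile):
                    files[action.path] = action
                else:
                    files.pop(action.path, None)
        return files

    def files_to_scan(self, version: int, low: int, high: int) -> list:
        # Data skipping: keep only files whose [min, max] range can overlap [low, high].
        return sorted(
            f.path
            for f in self.snapshot(version).values()
            if f.max_value >= low and f.min_value <= high
        )


log = ToyTableLog()
v0 = log.commit(-1, [AddFile("part-000.parquet", 1, 100)])
v1 = log.commit(v0, [AddFile("part-001.parquet", 101, 200)])
# A rewrite (e.g. compaction) replaces both files with one, in a single commit.
v2 = log.commit(v1, [RemoveFile("part-000.parquet"),
                     RemoveFile("part-001.parquet"),
                     AddFile("part-002.parquet", 1, 200)])

print(log.files_to_scan(v1, 150, 180))  # ['part-001.parquet']
print(log.files_to_scan(v2, 150, 180))  # ['part-002.parquet']
print(sorted(log.snapshot(v1)))         # ['part-000.parquet', 'part-001.parquet']

try:
    log.commit(v1, [AddFile("part-003.parquet", 201, 300)])  # a writer holding a stale version
except ConcurrentCommitError as err:
    print("rejected:", err)  # rejected: read v1, but the log is at v2
```

Keeping statistics in the log, rather than opening each file, lets the reader decide which files to read before touching any data. Layout optimizations that cluster related rows make these min/max ranges narrower, so more files can be skipped.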

A brief history of data architectures

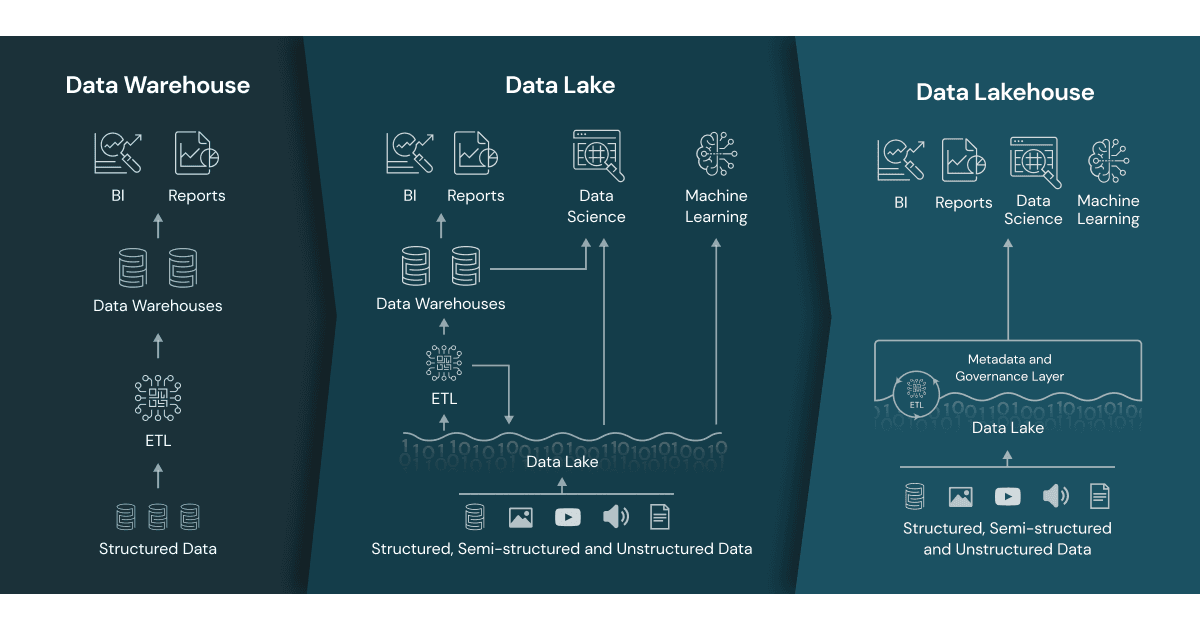

Background on data warehouses

Data warehouses have long supported decision-making and business intelligence applications, but they are ill-suited, or too expensive, for handling unstructured, semi-structured, and highly varied data, as well as data arriving at high velocity or in large volumes.

The emergence of data lakes

Data lakes emerged to store, on low-cost storage, the raw data in varied formats used for data science and machine learning. However, they lacked critical data warehouse features, such as support for transactions and enforcement of data quality rules. In addition, their lack of consistency and isolation makes it almost impossible to mix appends and reads, or batch and streaming jobs.

The common two-tier data architecture

As a result, data teams stitch these systems together to run BI and ML across the data held in both, which causes a number of problems: duplicate data, extra infrastructure costs, security challenges, and significant operational costs. In a two-tier data architecture, data is moved by ETL from operational databases into a data lake. The lake stores data from the entire enterprise in low-cost object storage, in a format compatible with common machine learning tools, but that data is rarely well organized or maintained. Next, a small segment of the critical business data goes through ETL once again to be loaded into the data warehouse, where it is used for business intelligence and analytics. Because of these multiple ETL steps, the two-tier architecture requires regular maintenance and often leaves data stale, a serious concern for data analysts and data scientists alike, according to a recent survey by Kaggle and Fivetran.
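The staleness problem can be illustrated with a small, hypothetical Python model of the two-tier flow. Nothing here corresponds to a real ETL tool: `TwoTierPipeline`, `is_critical`, and the order records are invented for the example. Each tier keeps its own copy of the data (the duplication mentioned above), and a record only becomes visible to BI queries on the warehouse once both ETL hops have run.

```python
from dataclasses import dataclass, field


@dataclass
class TwoTierPipeline:
    """Hypothetical model of a two-tier architecture: operational DB -> data lake -> data warehouse.

    Each tier holds its own copy of the records.
    """
    operational: list = field(default_factory=list)
    lake: list = field(default_factory=list)
    warehouse: list = field(default_factory=list)

    def etl_to_lake(self) -> None:
        # First ETL hop: copy every operational record into the lake.
        self.lake = list(self.operational)

    def etl_to_warehouse(self, keep) -> None:
        # Second ETL hop: only a curated subset of the lake reaches the warehouse.
        self.warehouse = [r for r in self.lake if keep(r)]

    def missing_from_warehouse(self, keep) -> list:
        # Records that exist in the source system and should be in the warehouse,
        # but are not visible there yet: the staleness gap.
        return [r for r in self.operational if keep(r) and r not in self.warehouse]


def is_critical(order: dict) -> bool:
    # Hypothetical rule deciding which records are copied into the warehouse.
    return order["amount"] >= 100


pipeline = TwoTierPipeline()
pipeline.operational.append({"id": 1, "amount": 250})
pipeline.etl_to_lake()
pipeline.etl_to_warehouse(is_critical)

# A new order lands after both ETL jobs have run.
pipeline.operational.append({"id": 2, "amount": 500})
pipeline.etl_to_lake()  # the lake catches up, but the warehouse job has not run again

print(pipeline.missing_from_warehouse(is_critical))  # [{'id': 2, 'amount': 500}]
```

In a lakehouse, BI queries would read the lake tables directly, which removes the second hop and the lag it introduces. The toy only illustrates the mechanism; it says nothing about how large that lag is in practice.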

Additional resources

- What Is a Lakehouse? - Blog

- The Lakehouse Architecture: From Vision to Reality

- Introduction to the Lakehouse and SQL Analytics

- Lakehouse: A New Generation of Open Platforms that Unify Data Warehousing and Advanced Analytics

- Delta Lake: The Foundation of Your Lakehouse

- The Databricks Lakehouse Platform

- Data Brew Webcast, Season 1: Data Lakehouses

- The Rise of the Lakehouse Paradigm

- Building the Data Lakehouse, by Bill Inmon

- The Data Lakehouse Platform For Dummies