Lakeflow

Ingest, transform and orchestrate your data with a unified data engineering solution

LEADING COMPANIES USE LAKEFLOW

An end-to-end solution for high-quality data.

Tools that help every team build reliable data pipelines for analytics and AI.

85% faster development

50% lower costs

99% lower pipeline latency

2,500 job runs per day

Corning uses Lakeflow to simplify data orchestration and give teams across the organization an automated, repeatable process. These automated workflows move large volumes of data from bronze to gold tables within a medallion architecture.

Unified tools for data engineers

Lakeflow Connect

With efficient data ingestion connectors and native integration with the Data Intelligence Platform, teams get straightforward access to analytics and AI under unified governance.

Spark Declarative Pipelines

Simplify batch and streaming ETL with automated data quality, change data capture (CDC), data ingestion and transformation, and unified governance.

Lakeflow Jobs

Help teams automate and orchestrate all their ETL, analytics and AI workflows, with deep observability, high reliability and support for a wide range of task types.

Unity Catalog

Effortlessly govern all your data assets with the industry's only unified, open governance solution for data and AI, built into the Databricks Data Intelligence Platform.

Lakehouse storage

Unify the data in your lakehouse, whatever its format or type, and make it available to all your analytics and AI workloads.

Data Intelligence Platform

Explore the full range of tools on the Databricks Data Intelligence Platform to integrate data and AI seamlessly across your organization.

Build reliable data pipelines

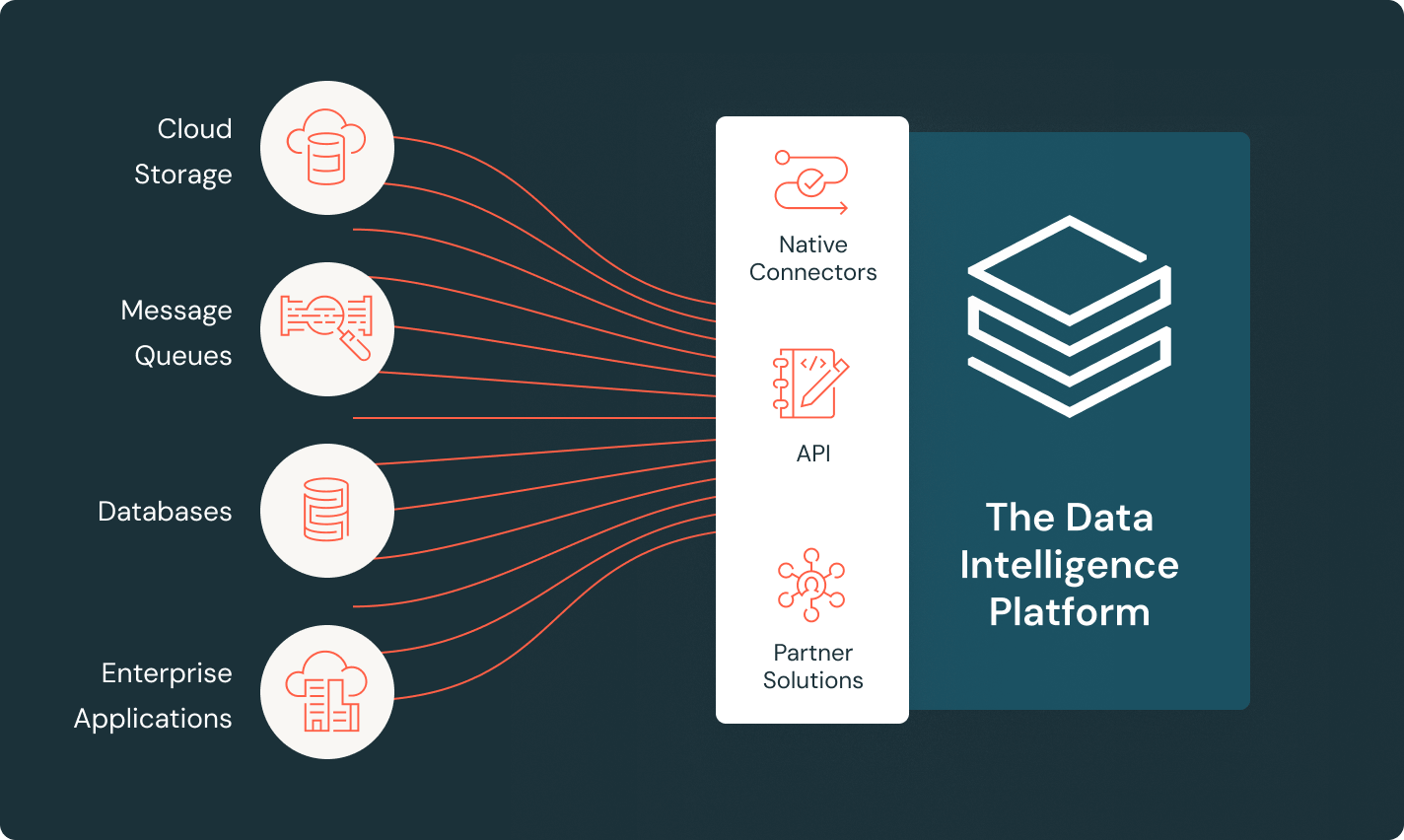

Unlock the value of your data, wherever it lives

Bring all your data into the Data Intelligence Platform with Lakeflow connectors for file sources, enterprise applications and databases. These fully managed connectors process data incrementally for efficient ingestion and come with built-in governance, so you stay in control of your data throughout the pipeline.
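To make "incremental processing" concrete, here is a minimal plain-Python sketch of the general idea: remember which source files earlier runs already ingested and load only the new ones. It does not use the Lakeflow Connect API; `ingest_incrementally`, the JSON checkpoint file and the `*.csv` landing directory are hypothetical names chosen purely for illustration.

```python
import json
import tempfile
from pathlib import Path


def ingest_incrementally(source_dir: Path, checkpoint_file: Path, sink: list) -> list:
    """Hypothetical helper: ingest only files that earlier runs have not processed."""
    # Load the names of files already ingested (empty set on the first run).
    if checkpoint_file.exists():
        seen = set(json.loads(checkpoint_file.read_text()))
    else:
        seen = set()

    # Only files not recorded in the checkpoint are read on this run.
    new_files = sorted(p for p in source_dir.glob("*.csv") if p.name not in seen)
    for path in new_files:
        # Illustrative "load": append each non-empty line to an in-memory sink.
        sink.extend(line for line in path.read_text().splitlines() if line)
        seen.add(path.name)

    # Persist the checkpoint so the next run skips these files.
    checkpoint_file.write_text(json.dumps(sorted(seen)))
    return [p.name for p in new_files]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        landing = Path(tmp) / "landing"
        landing.mkdir()
        checkpoint = Path(tmp) / "checkpoint.json"
        rows = []
        (landing / "a.csv").write_text("1,foo\n2,bar\n")
        print(ingest_incrementally(landing, checkpoint, rows))
        (landing / "b.csv").write_text("3,baz\n")
        print(ingest_incrementally(landing, checkpoint, rows))
        print(rows)
```

The second call picks up only `b.csv`, because `a.csv` is already listed in the checkpoint. A managed connector handles this bookkeeping (plus schema changes, retries and governance) for you; the sketch only shows why incremental loads avoid re-reading data.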

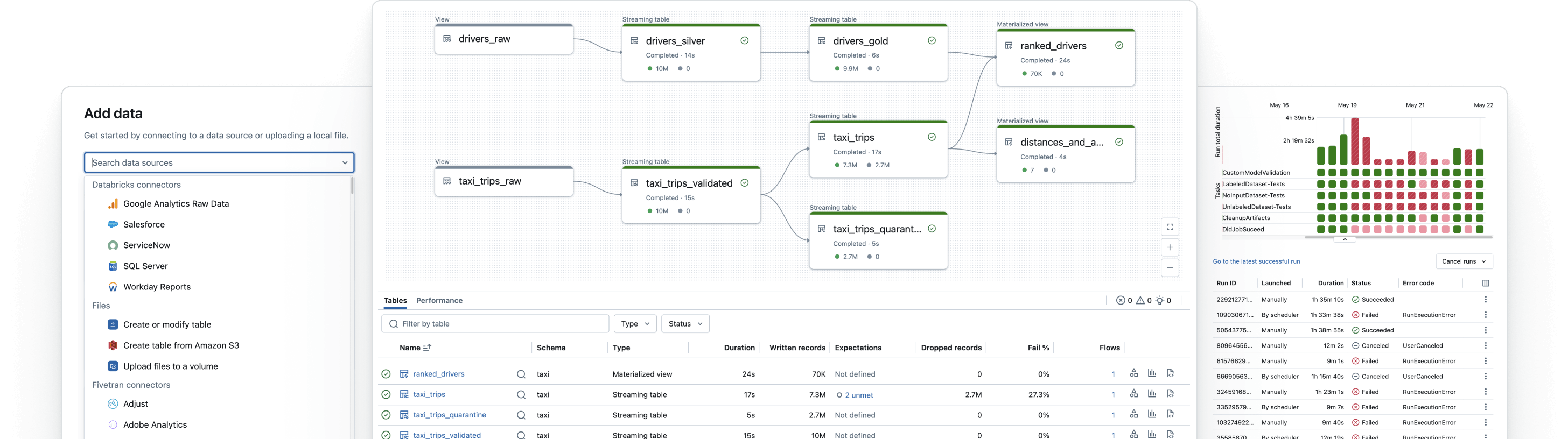

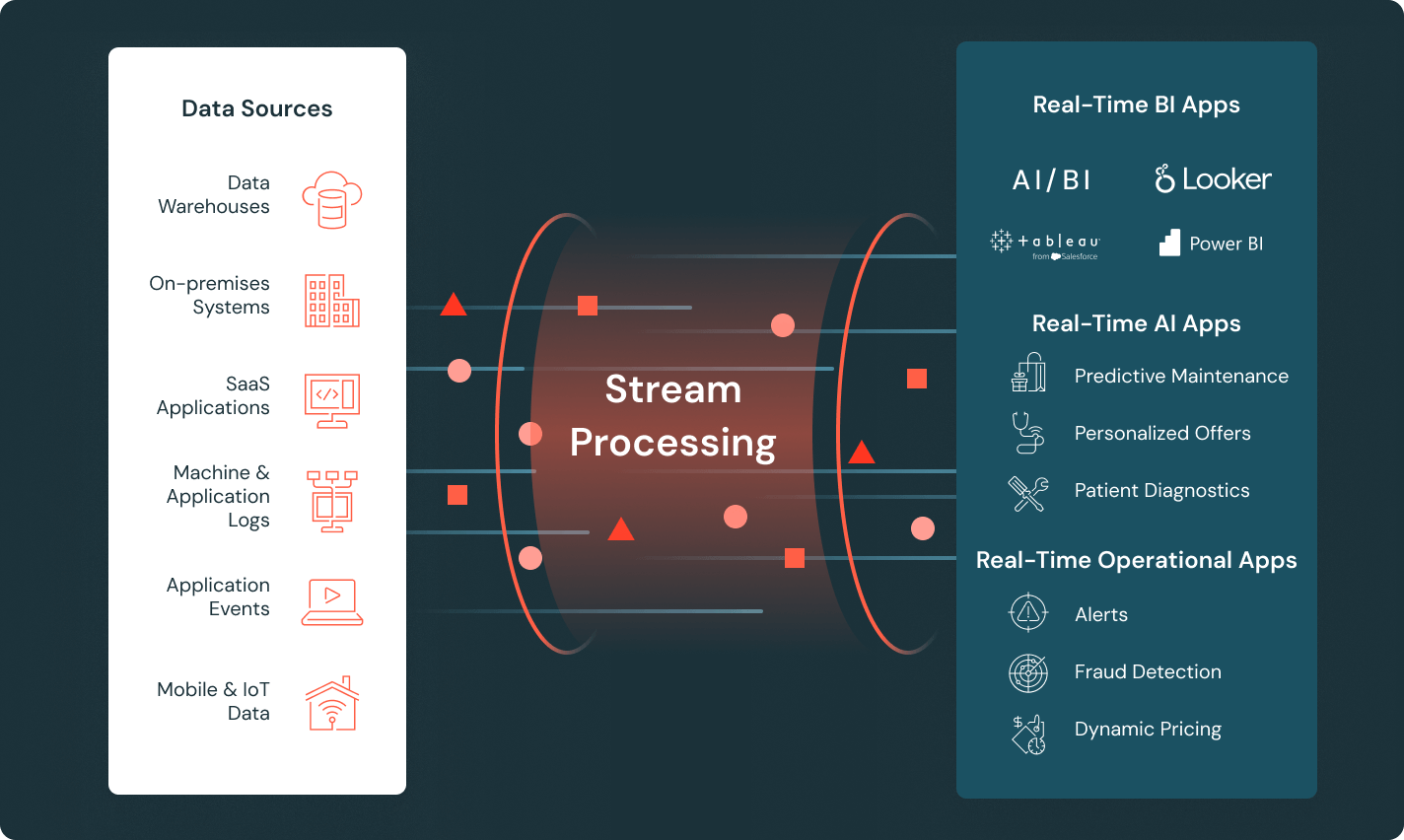

Fresh data for real-time insights

Build pipelines that process real-time data from sensors, clickstreams and IoT devices to power real-time applications. Reduce operational complexity with Spark Declarative Pipelines, and use streaming tables to simplify pipeline development.

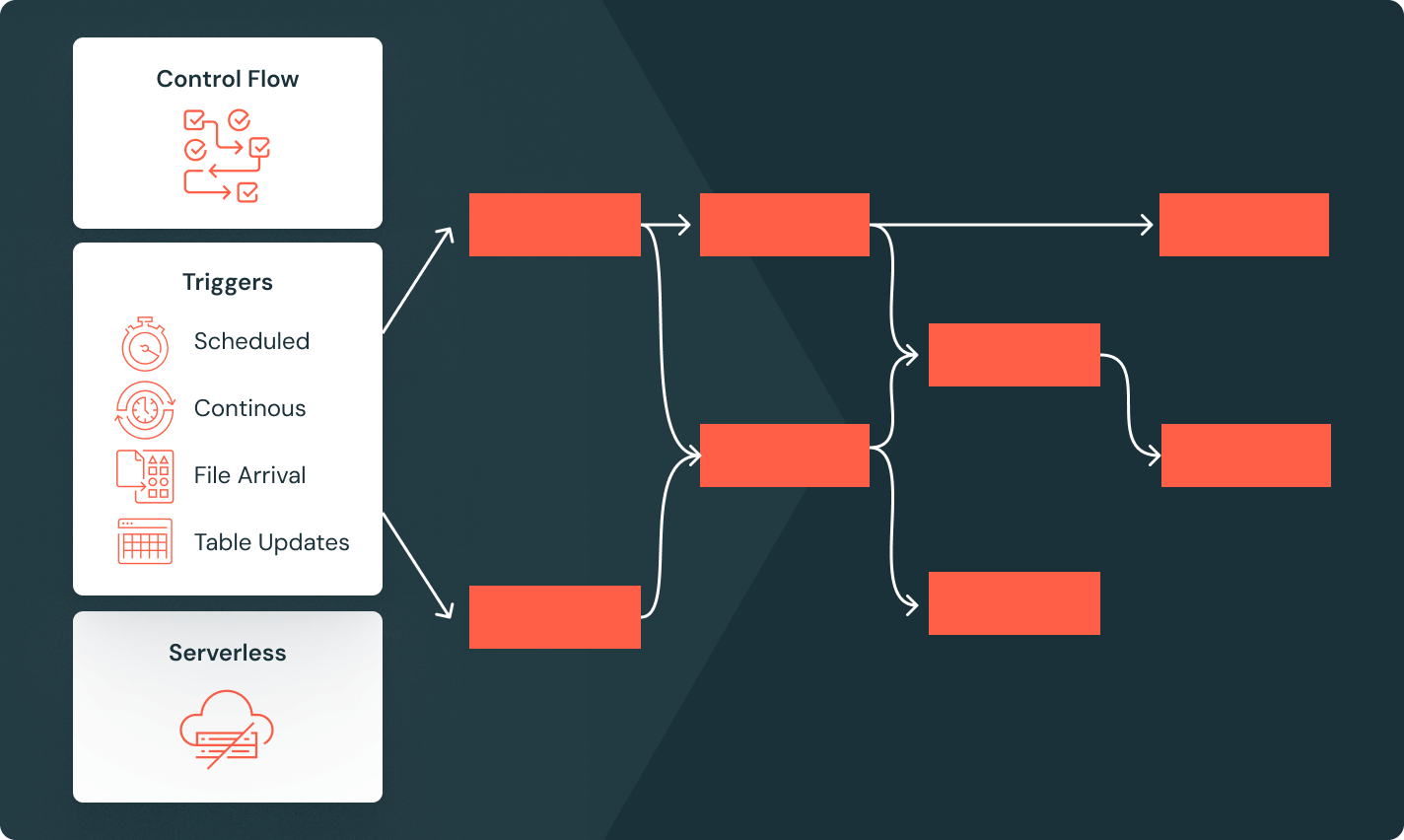

Orchestrate complex workflows with ease

Build reliable analytics and AI workflows with a managed orchestrator built into your data platform. Implement complex DAGs easily using advanced control-flow features such as conditional execution, loops and a wide range of triggers.
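As a rough illustration of what conditional execution and looping inside a DAG mean, here is a minimal plain-Python sketch built on the standard library's `graphlib.TopologicalSorter` (Python 3.9+). It is not the Lakeflow Jobs API; `run_dag`, the task names and the sample values are hypothetical.

```python
from graphlib import TopologicalSorter


def run_dag(tasks, deps, conditions=None):
    """Hypothetical runner: execute tasks in dependency order with optional conditions.

    tasks: task name -> callable(results) returning that task's output
    deps: task name -> set of upstream task names
    conditions: task name -> predicate(results) checked before the task runs
    """
    conditions = conditions or {}
    # Make sure every task appears in the graph, even without upstream tasks.
    graph = {name: set(deps.get(name, set())) for name in tasks}
    results, skipped = {}, set()
    for name in TopologicalSorter(graph).static_order():
        condition = conditions.get(name, lambda r: True)
        # Skip a task if any upstream task was skipped or its own condition is false.
        if graph[name] & skipped or not condition(results):
            skipped.add(name)
            continue
        results[name] = tasks[name](results)
    return results, skipped


tasks = {
    "ingest": lambda r: [10, 0, 25],  # hypothetical row counts per source
    "validate": lambda r: all(n >= 0 for n in r["ingest"]),
    "transform": lambda r: [n * 2 for n in r["ingest"]],  # loop over each input
    "alert": lambda r: "validation failed",
}
deps = {"validate": {"ingest"}, "transform": {"validate"}, "alert": {"validate"}}
conditions = {
    "transform": lambda r: r["validate"] is True,  # run only if validation passed
    "alert": lambda r: r["validate"] is False,  # run only if validation failed
}

results, skipped = run_dag(tasks, deps, conditions)
print(results)
print(skipped)
```

Here validation passes, so `transform` runs and `alert` is skipped. Propagating skips to downstream tasks is just one possible convention; a managed orchestrator typically lets you choose how upstream outcomes affect each task.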

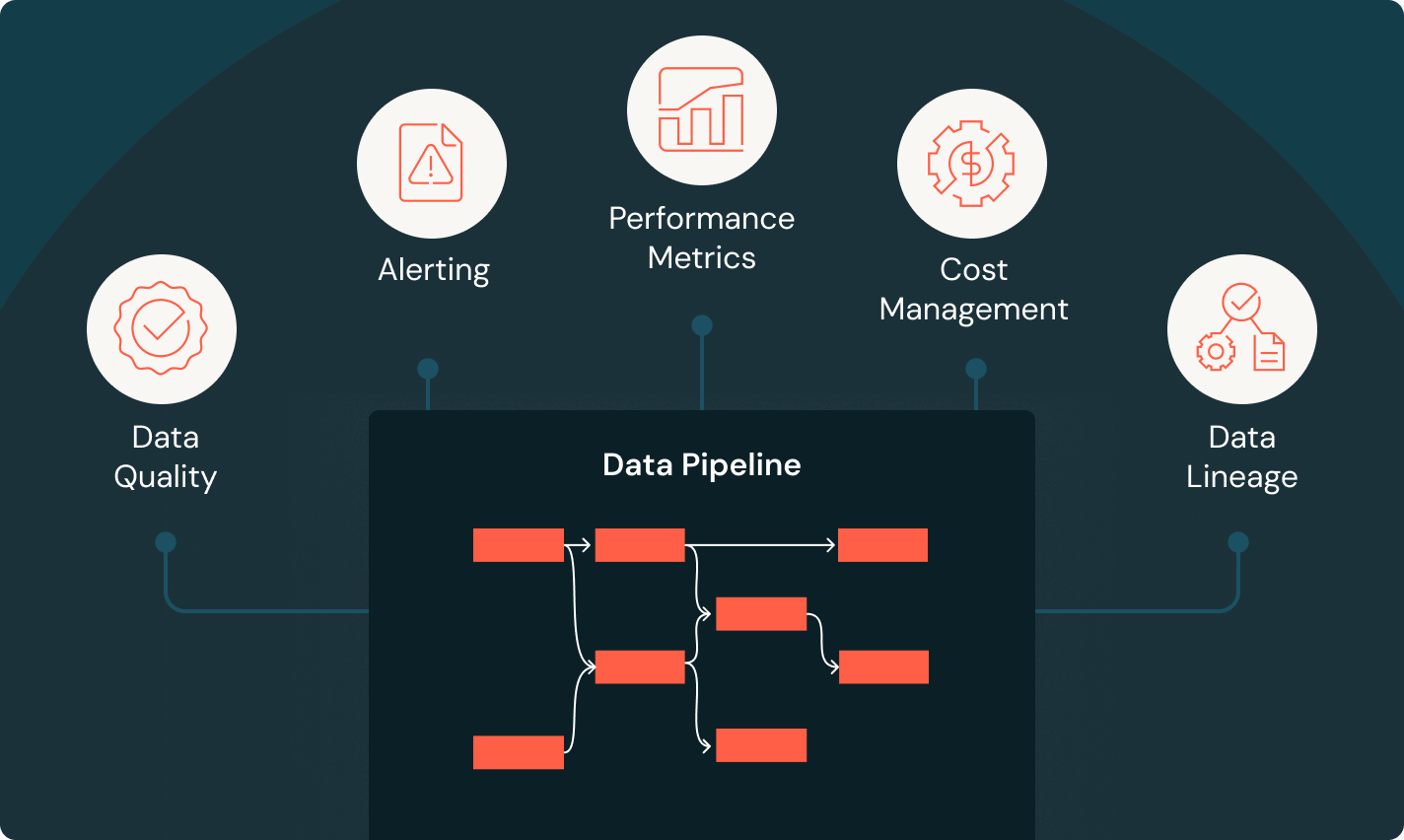

Monitor every step of every pipeline

Get full visibility into pipeline health with detailed real-time metrics. Custom alerts tell you exactly when problems occur, and detailed visuals pinpoint the root cause of failures to speed up troubleshooting. With end-to-end monitoring, you stay in control of your data and your pipelines.


Ready to become a data and AI company?

Take the first step in your data transformation.