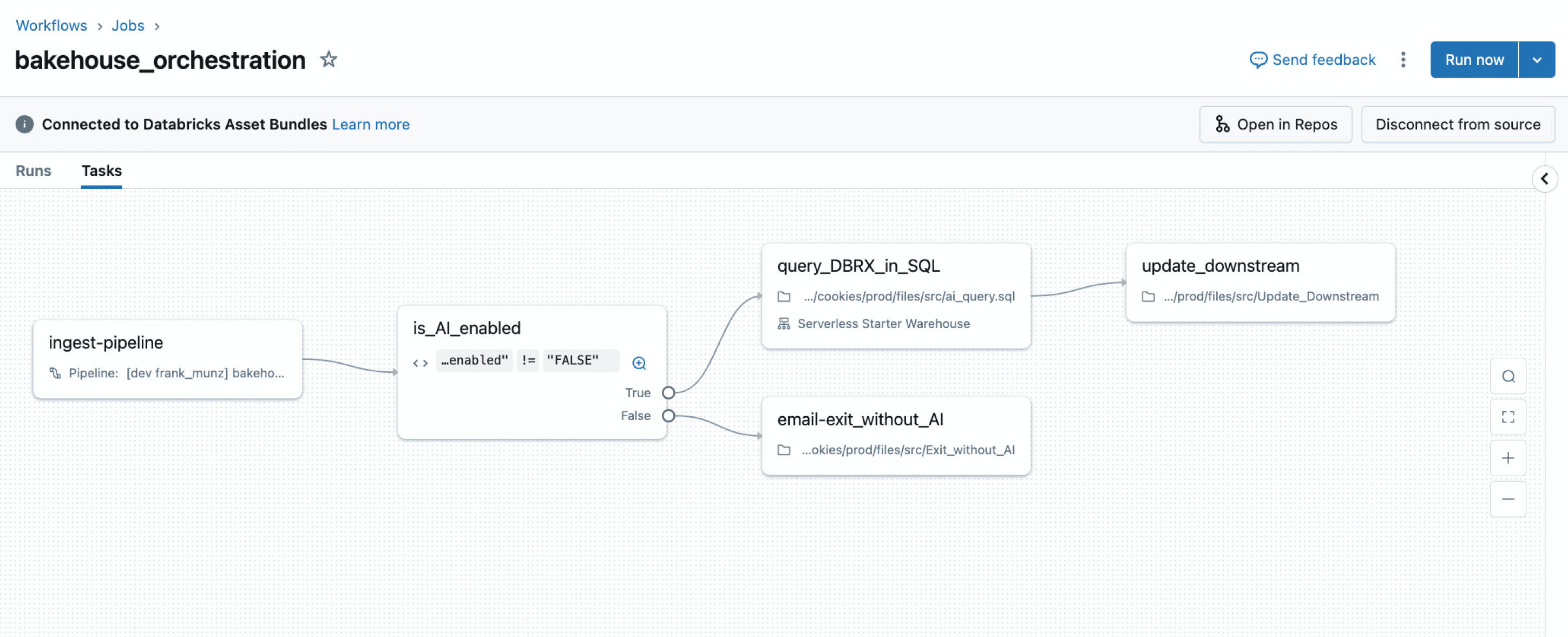

Natively managed orchestration for any workload

Unified orchestration for data, analytics and AI on the Data Intelligence Platform.

CUSTOMERS USING LAKEFLOW JOBS

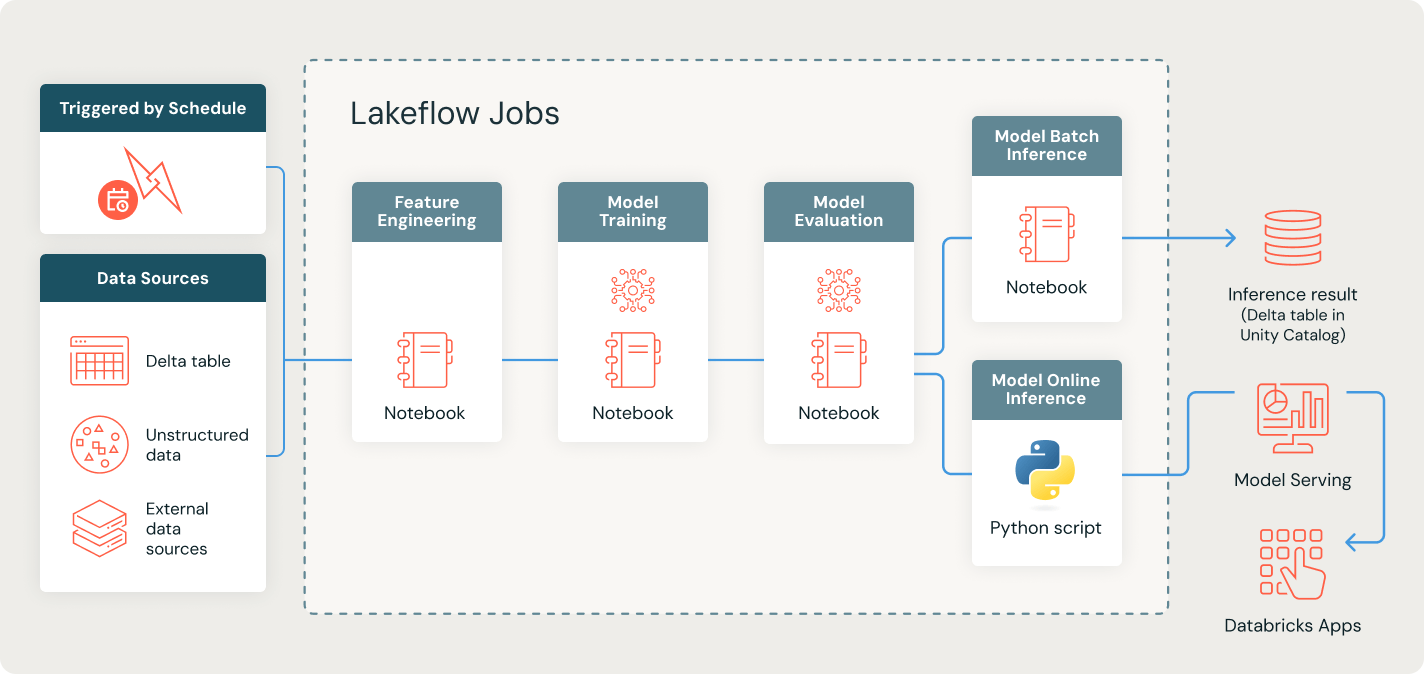

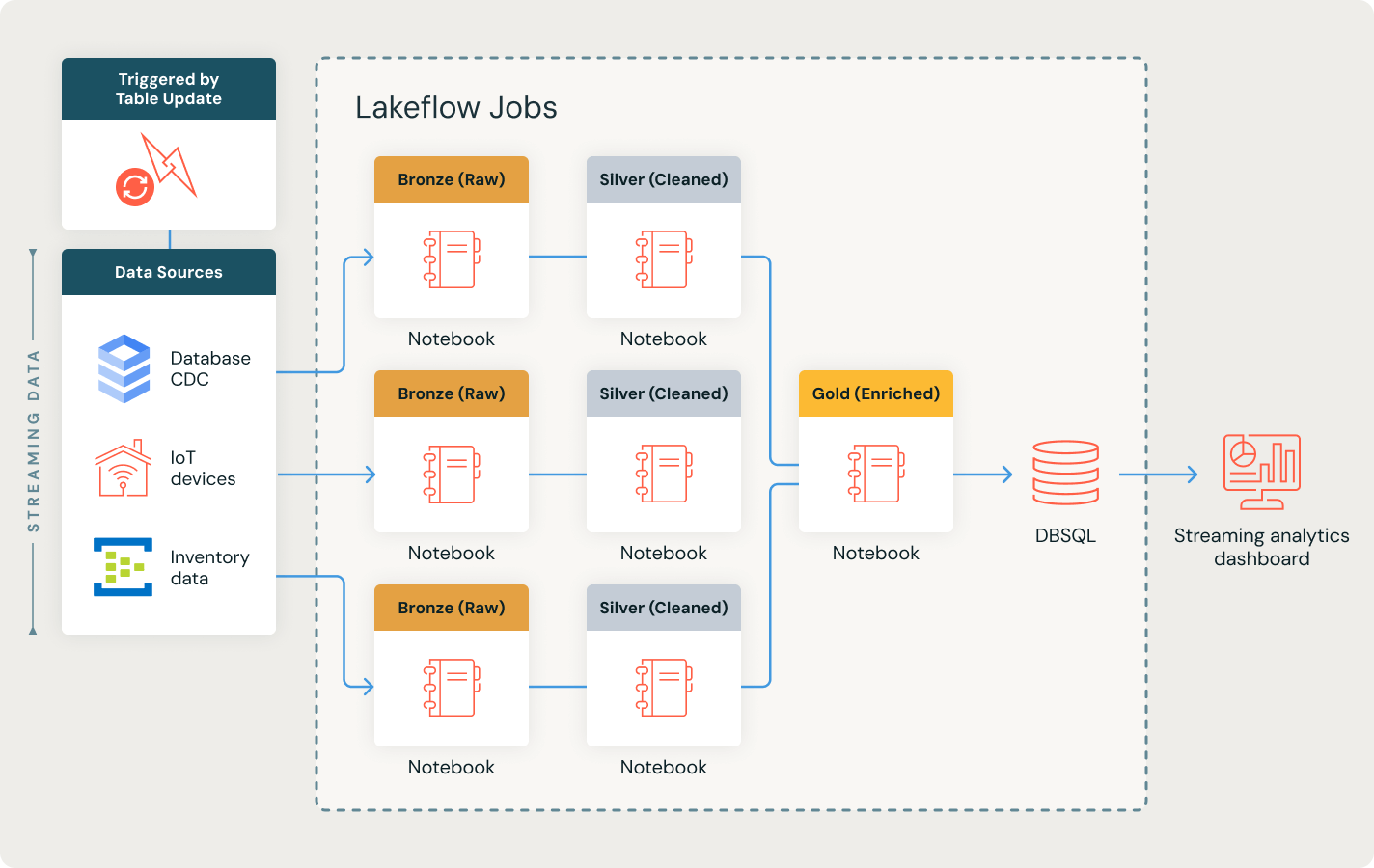

Orchestrate any data, analytics or AI workflow

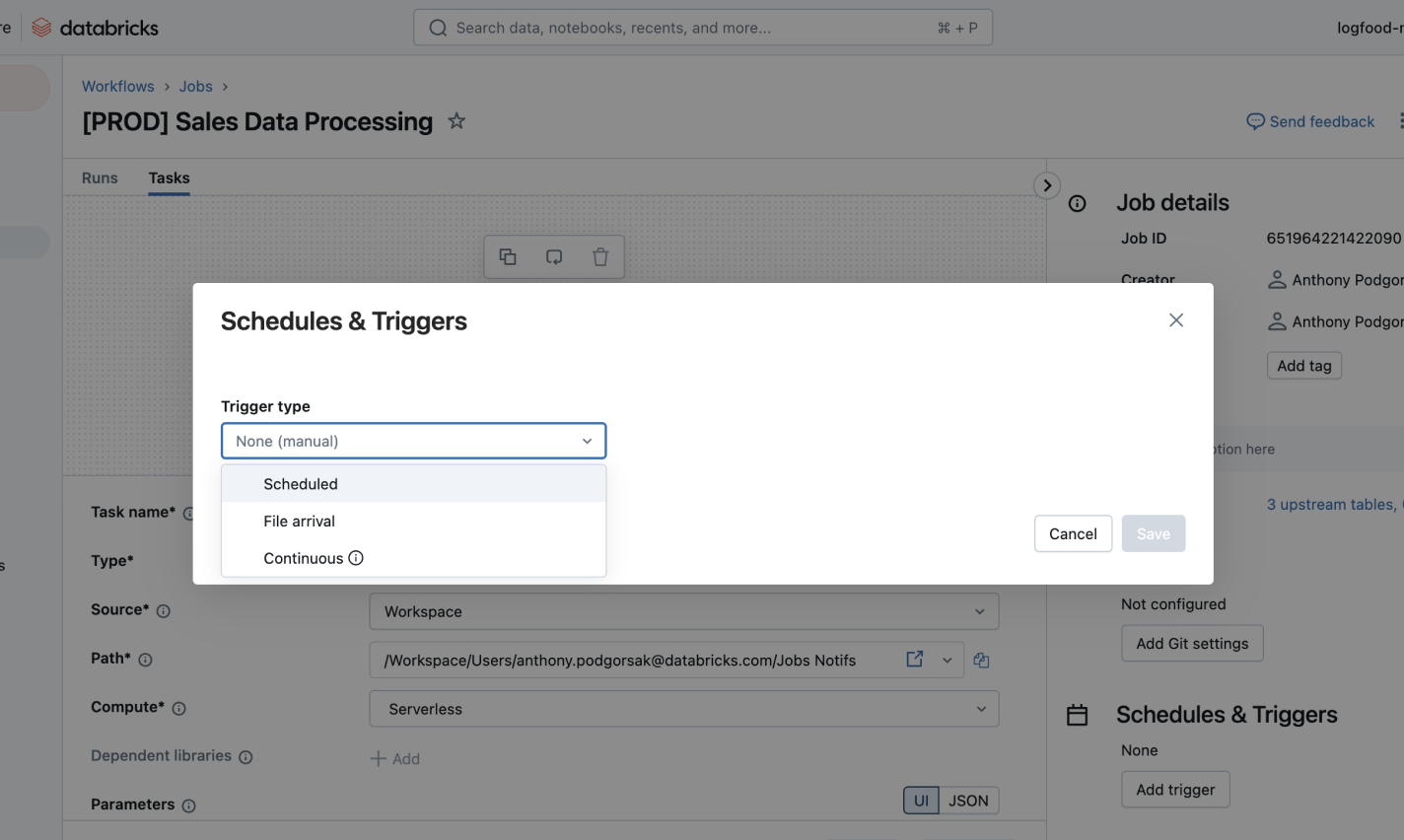

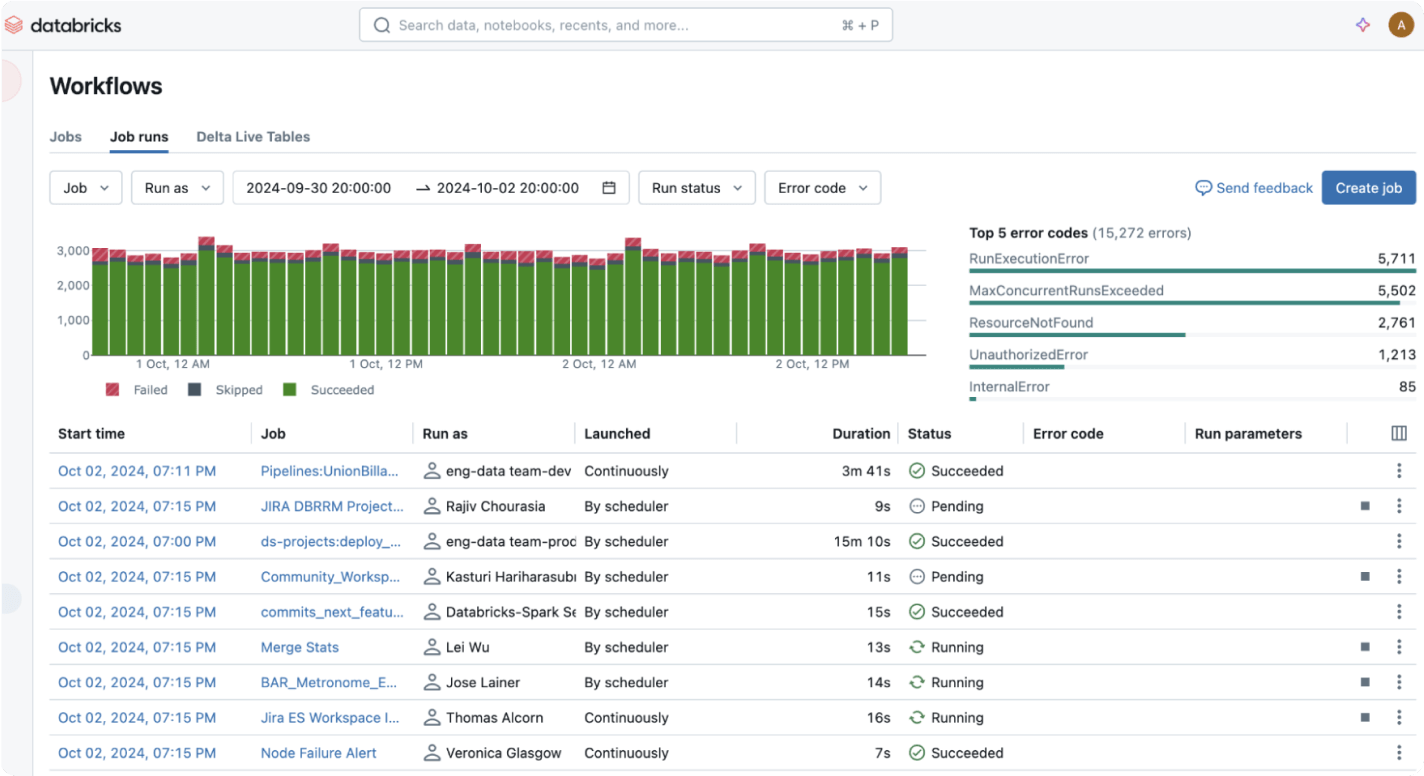

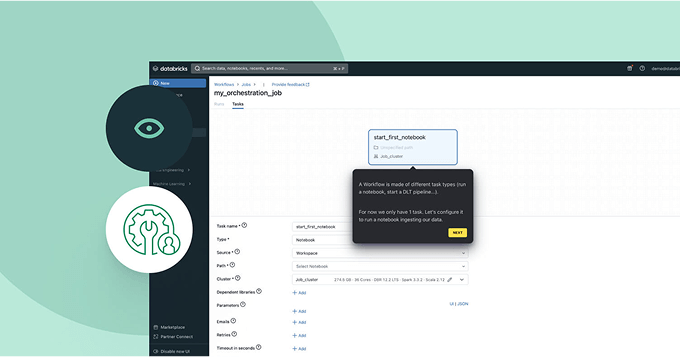

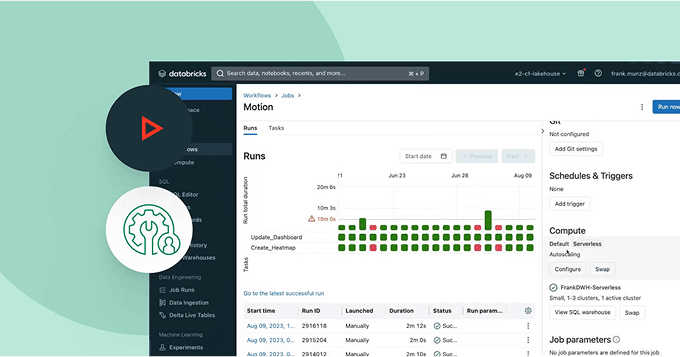

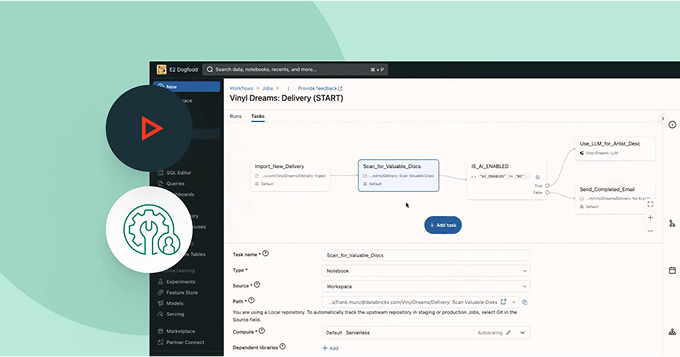

Streamline your pipelines with a managed orchestration service fully integrated into your data platform. Easily define, monitor and automate ETL, analytics and ML workflows with reliability and deep observability.

Streamline and orchestrate your data pipelines

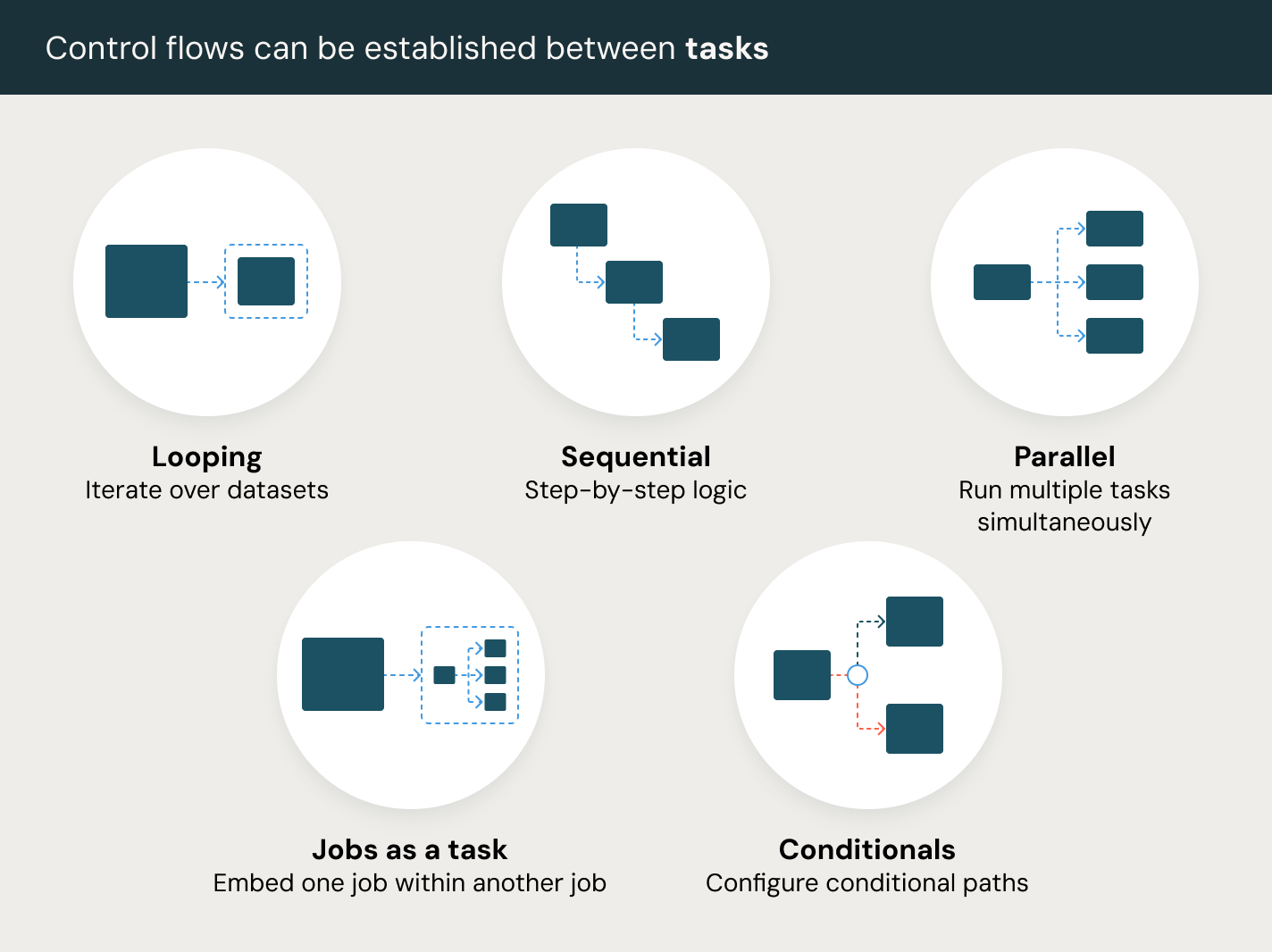

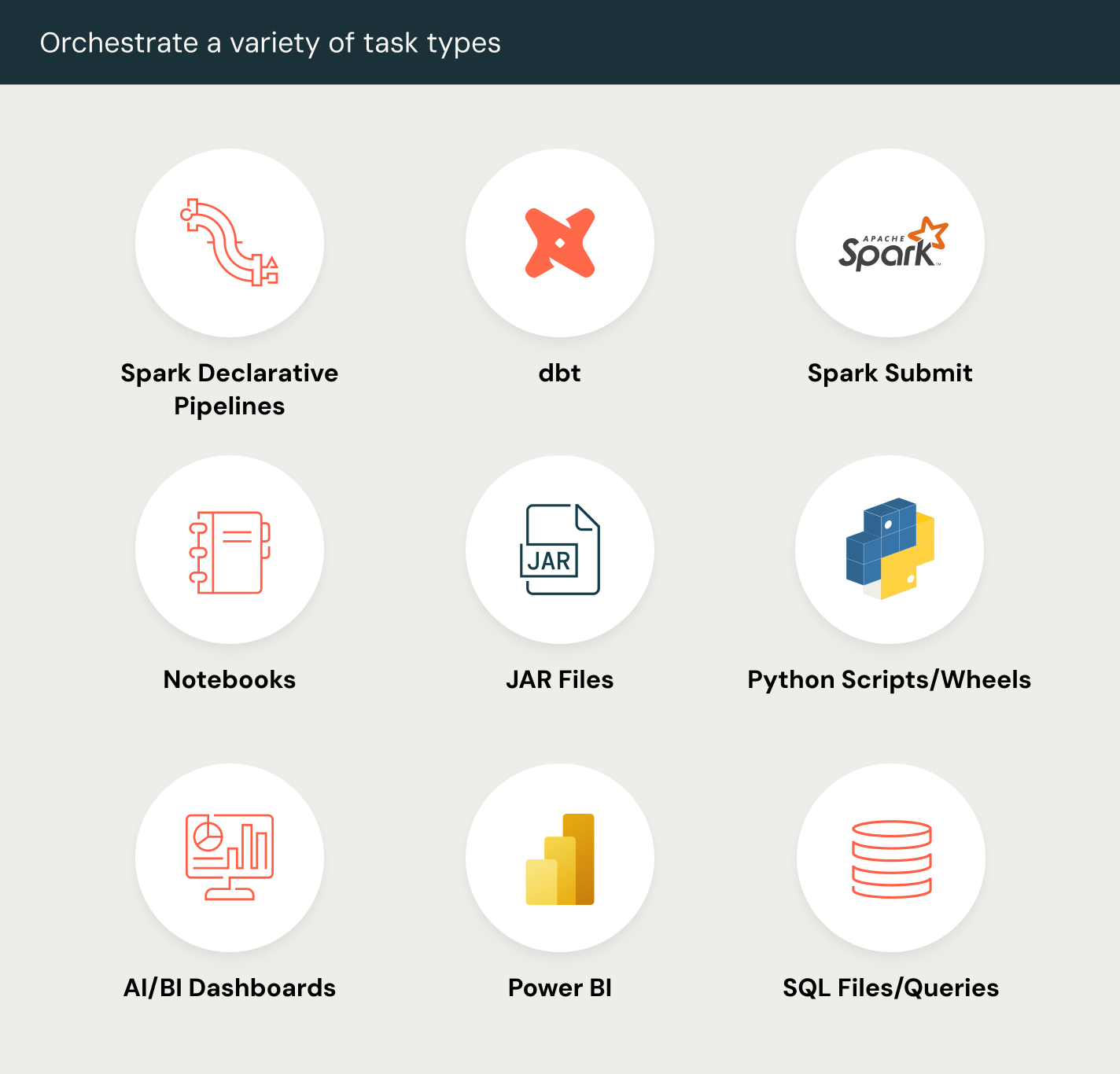

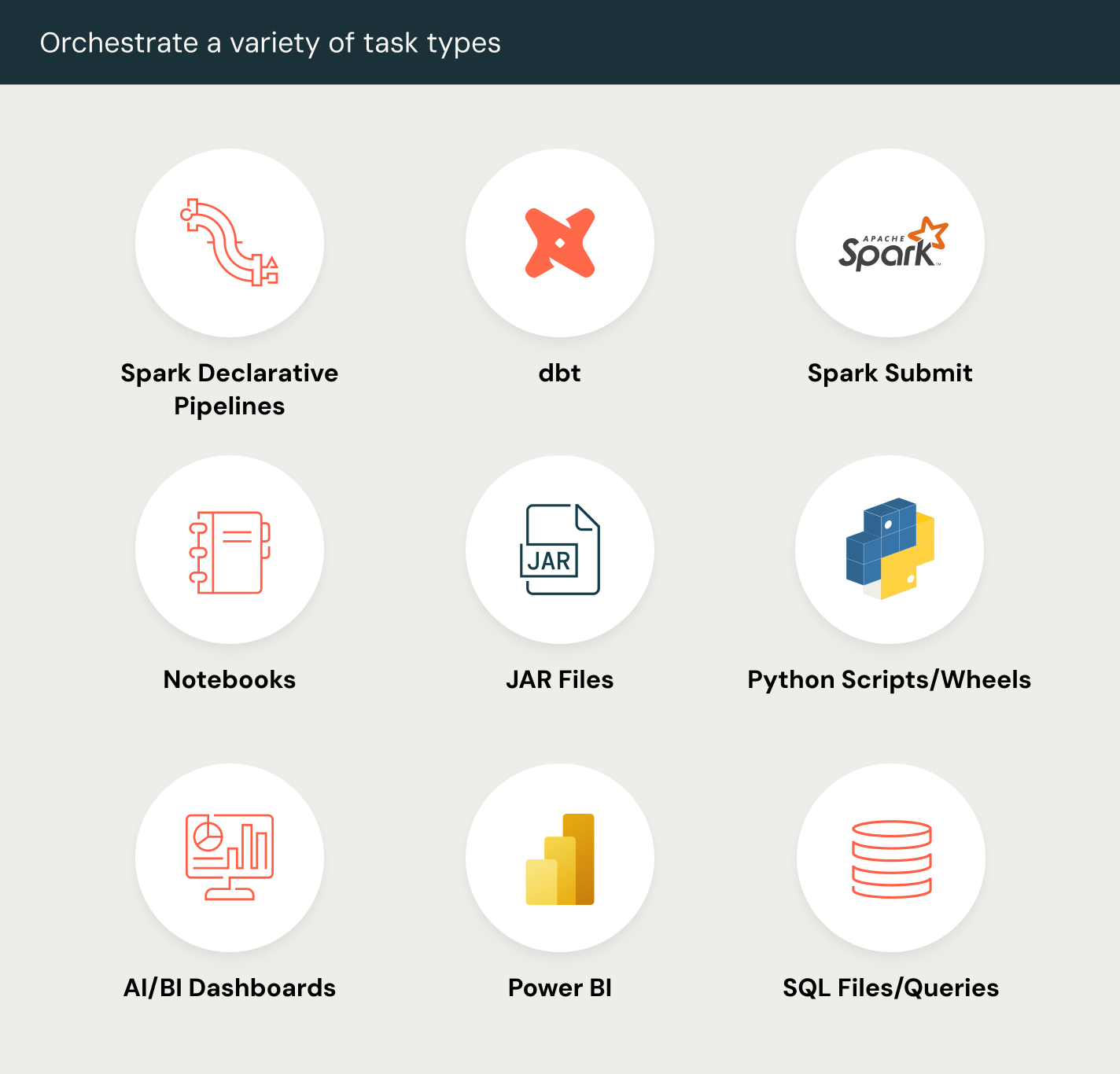

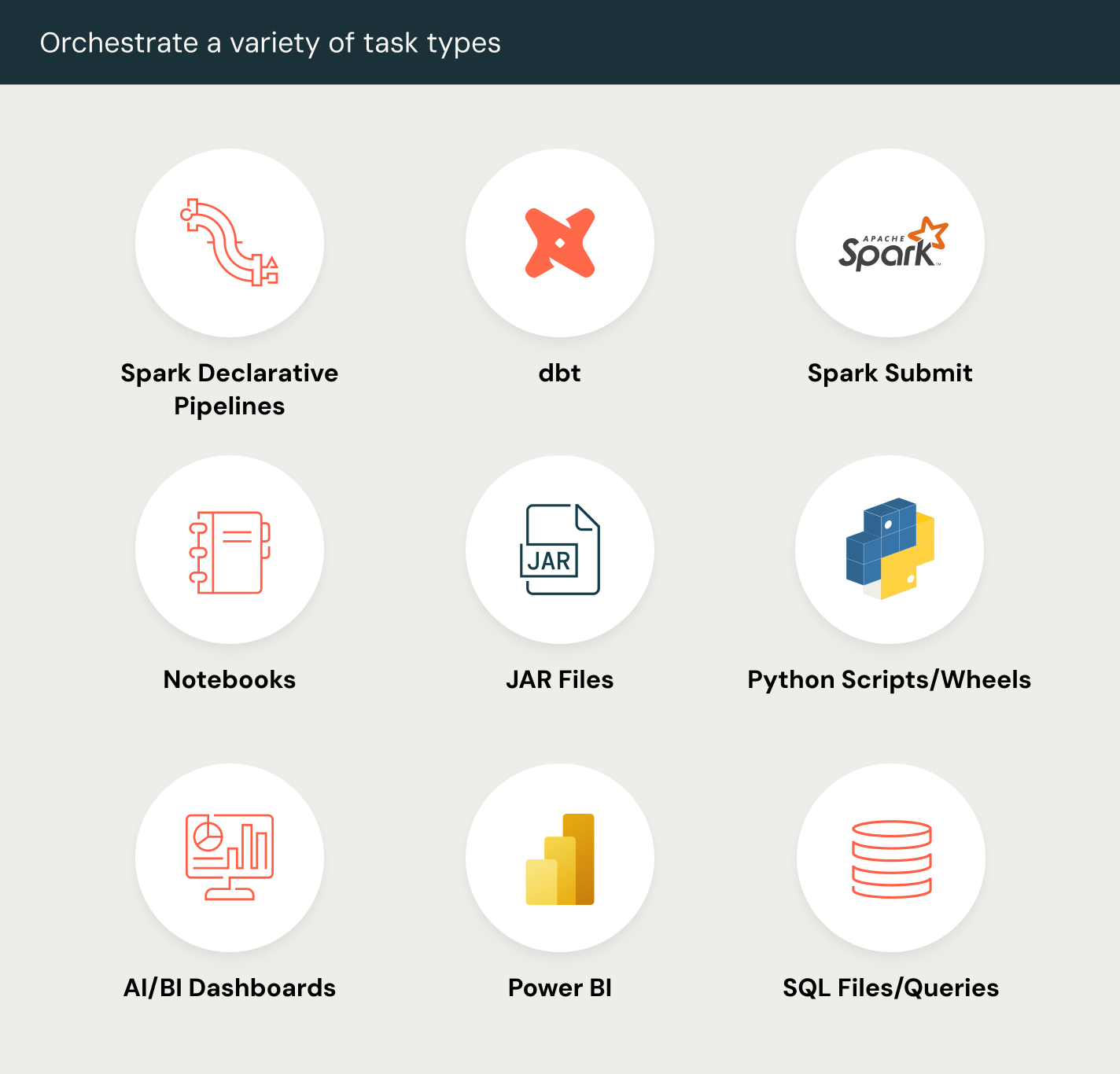

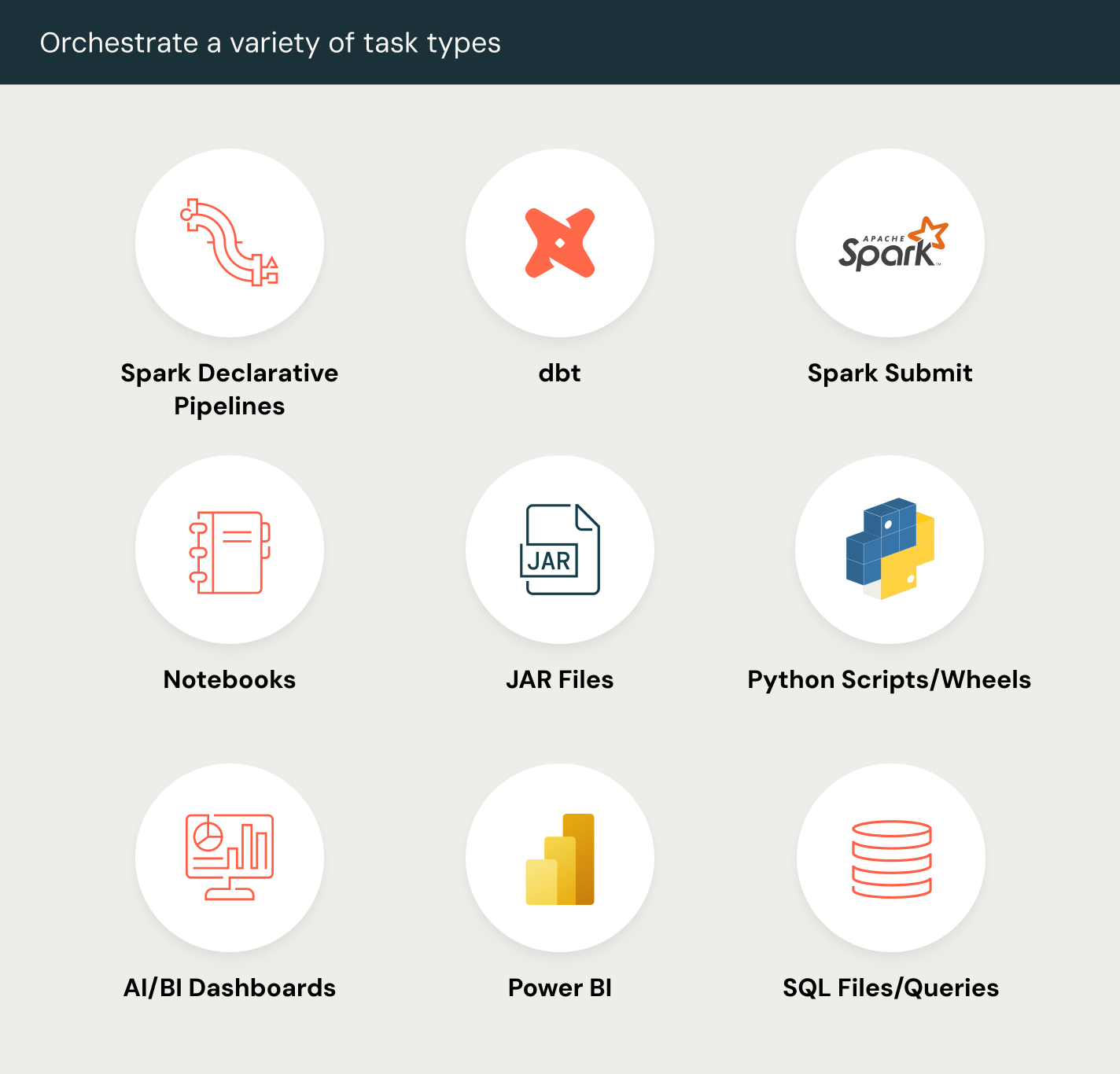

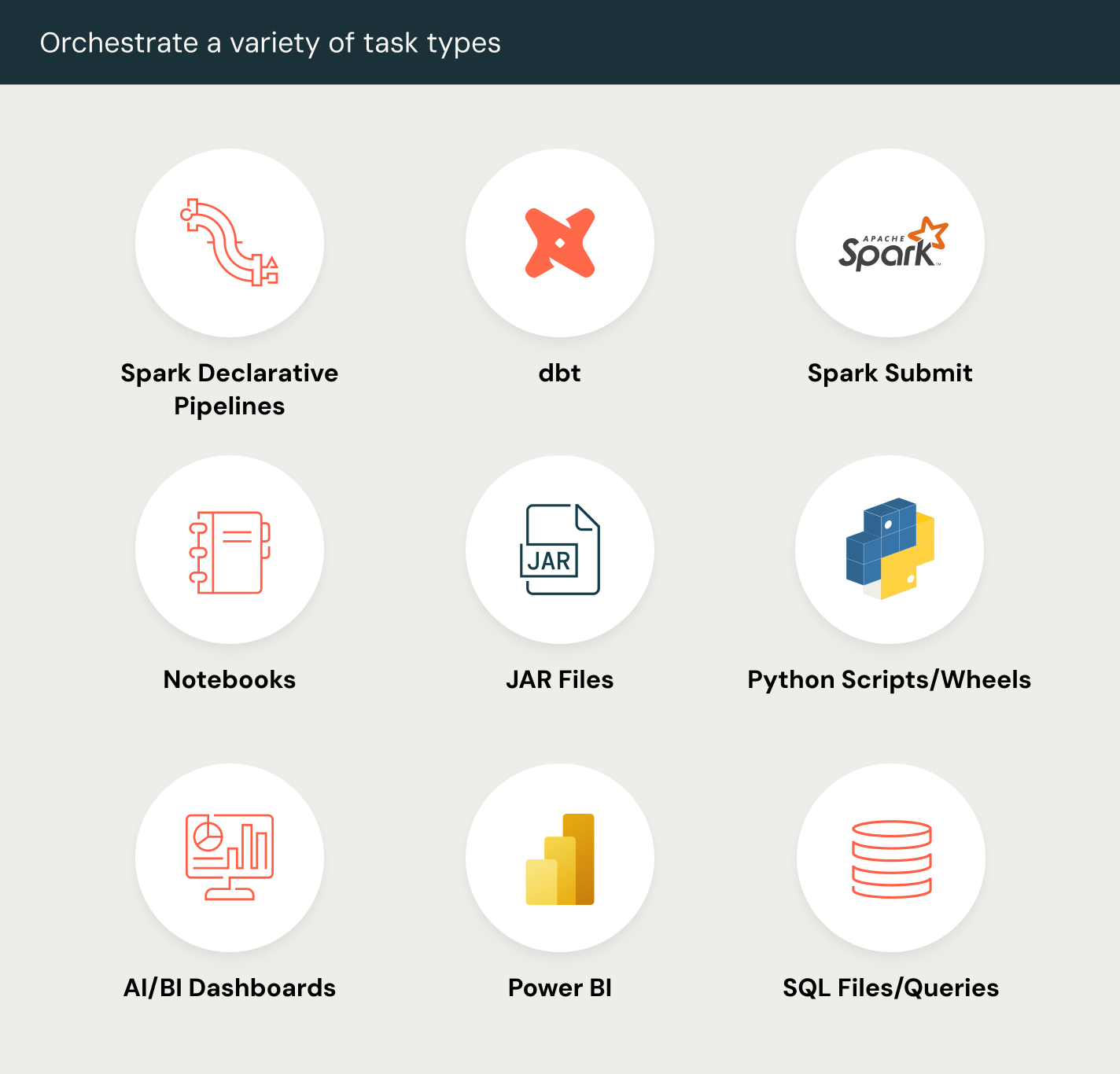

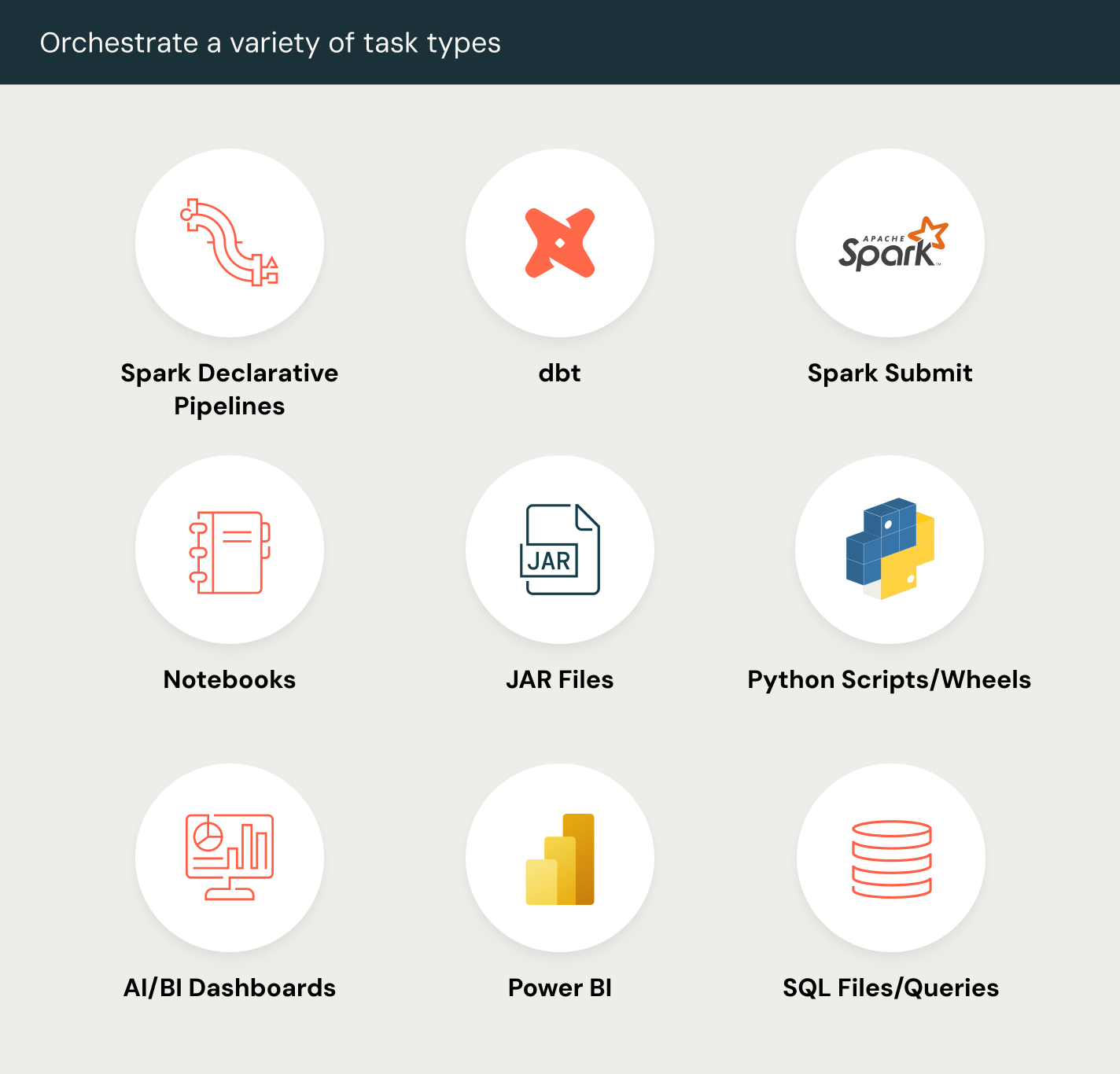

Easily define, manage and monitor jobs across ETL, analytics and AI pipelines. Unlock seamless orchestration with versatile task types, including notebooks, SQL, Python and more, to power any data workflow.


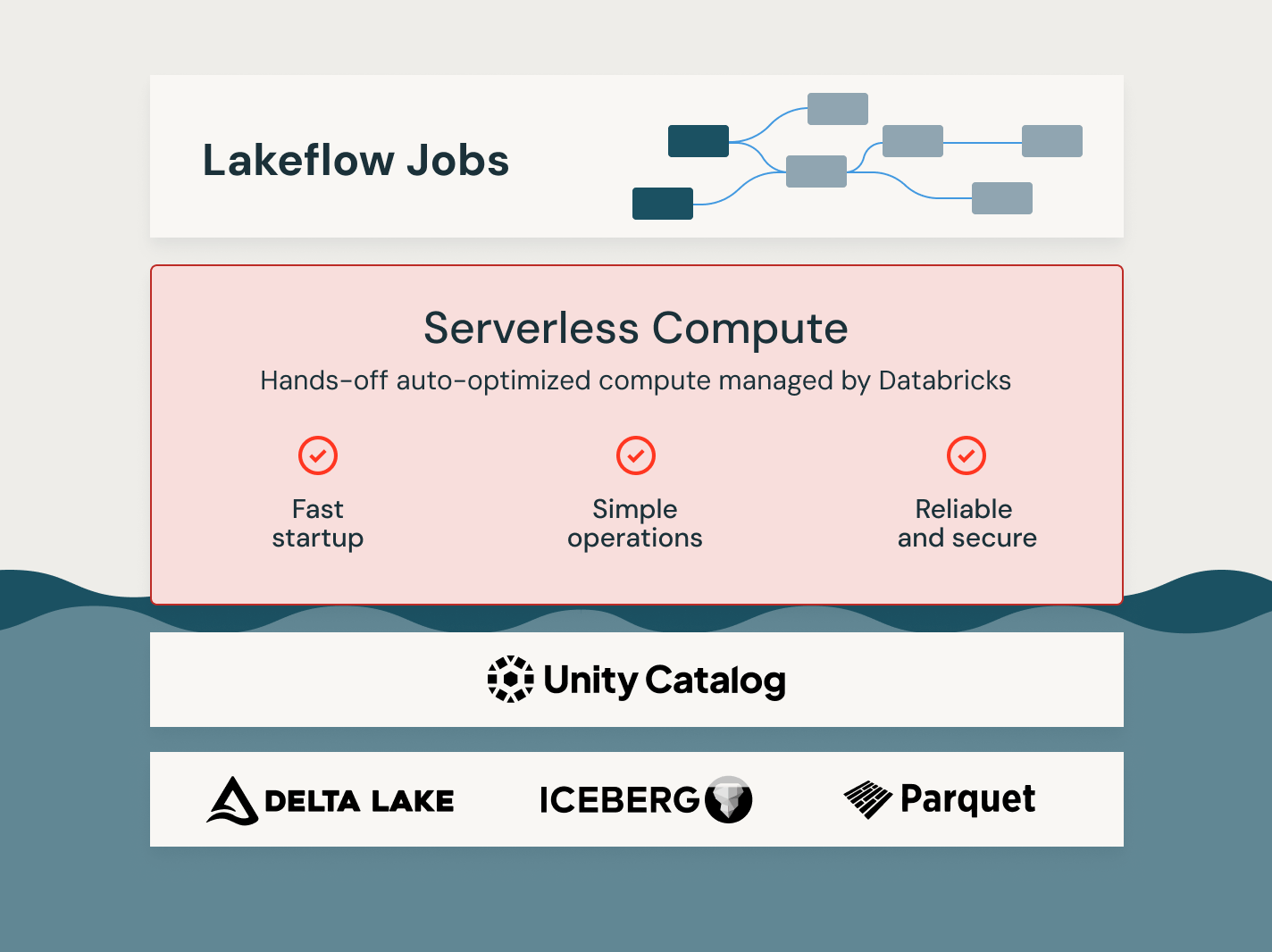

Unlock the power of Lakeflow Jobs

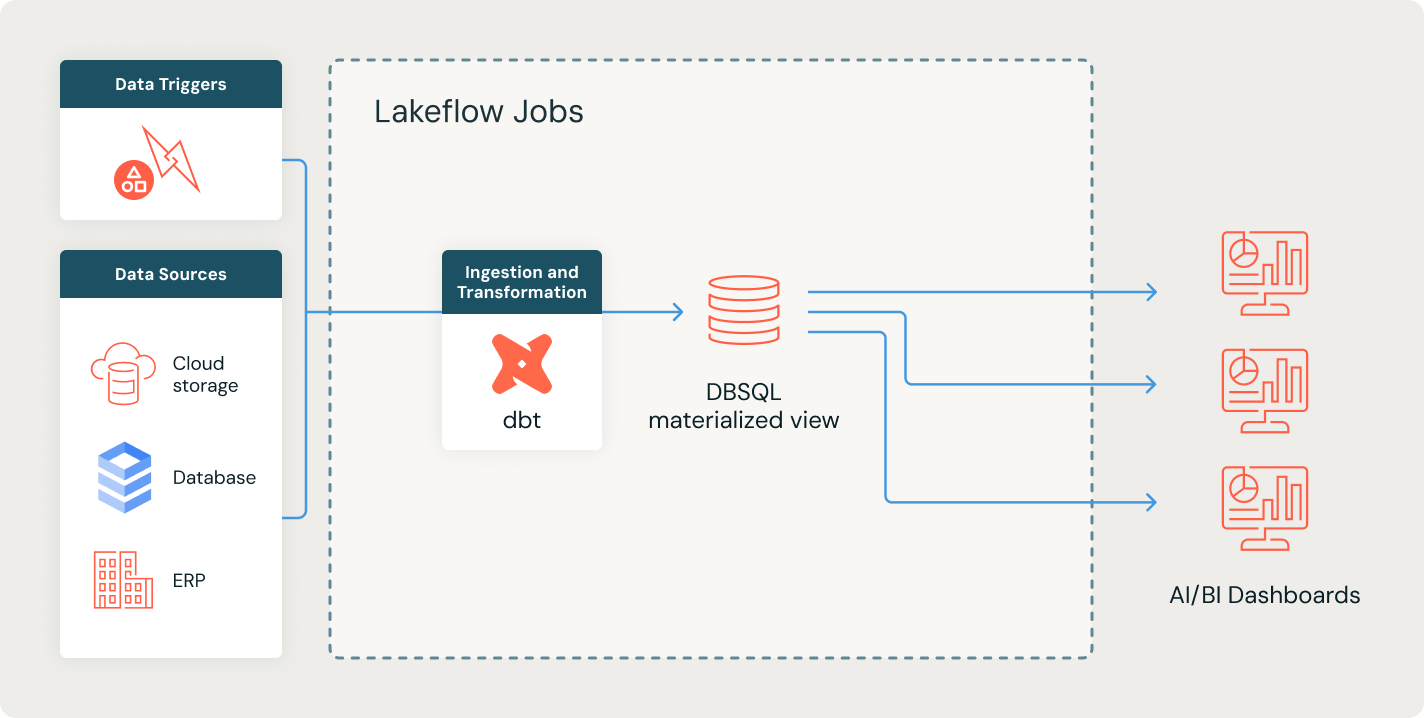

Automate data orchestration for extract, transform, load (ETL) processes

Automate data ingestion and transformation from various sources into Databricks, ensuring accurate and consistent data preparation for any workload.

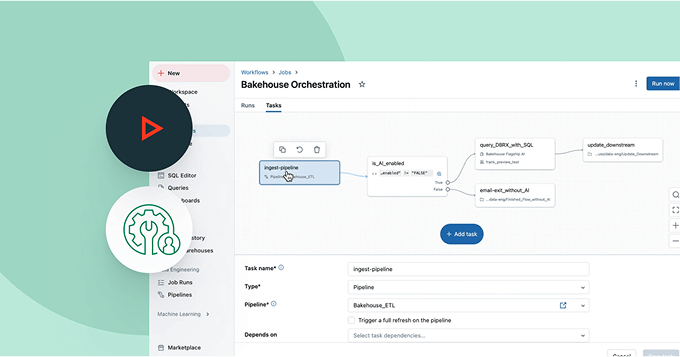

Explore Lakeflow Jobs demos

Discover more

Learn more about how the Databricks Data Intelligence Platform empowers data teams across all your data and AI workloads.

Lakeflow Connect

Efficient data ingestion connectors from any source and native integration with the Data Intelligence Platform unlock easy access to analytics and AI, with unified governance.

Spark Declarative Pipelines

Delivers simplified, declarative pipelines for batch and streaming data processing, ensuring automated, reliable transformations for analytics and AI/ML tasks.

Databricks Assistant

Leverage AI-powered assistance to simplify coding, debug workflows and optimize queries using natural language for faster and more efficient data engineering and analysis.

Lakehouse Storage

Unify the data in your lakehouse, across all formats and types, for all your analytics and AI workloads.

Unity Catalog

Seamlessly govern all your data assets with the industry’s only unified and open governance solution for data and AI, built into the Databricks Data Intelligence Platform.

The Data Intelligence Platform

Find out how the Databricks Data Intelligence Platform enables your data and AI workloads.

Take the next step


Ready to become a data + AI company?

Take the first steps in your data transformation