Built for open, intelligent data storage

Choose your storage location and format, with full ownership and portability of your data.

TOP TEAMS SUCCEED WITH DATA INTELLIGENCE

Lakehouse storage that’s flexible and fast

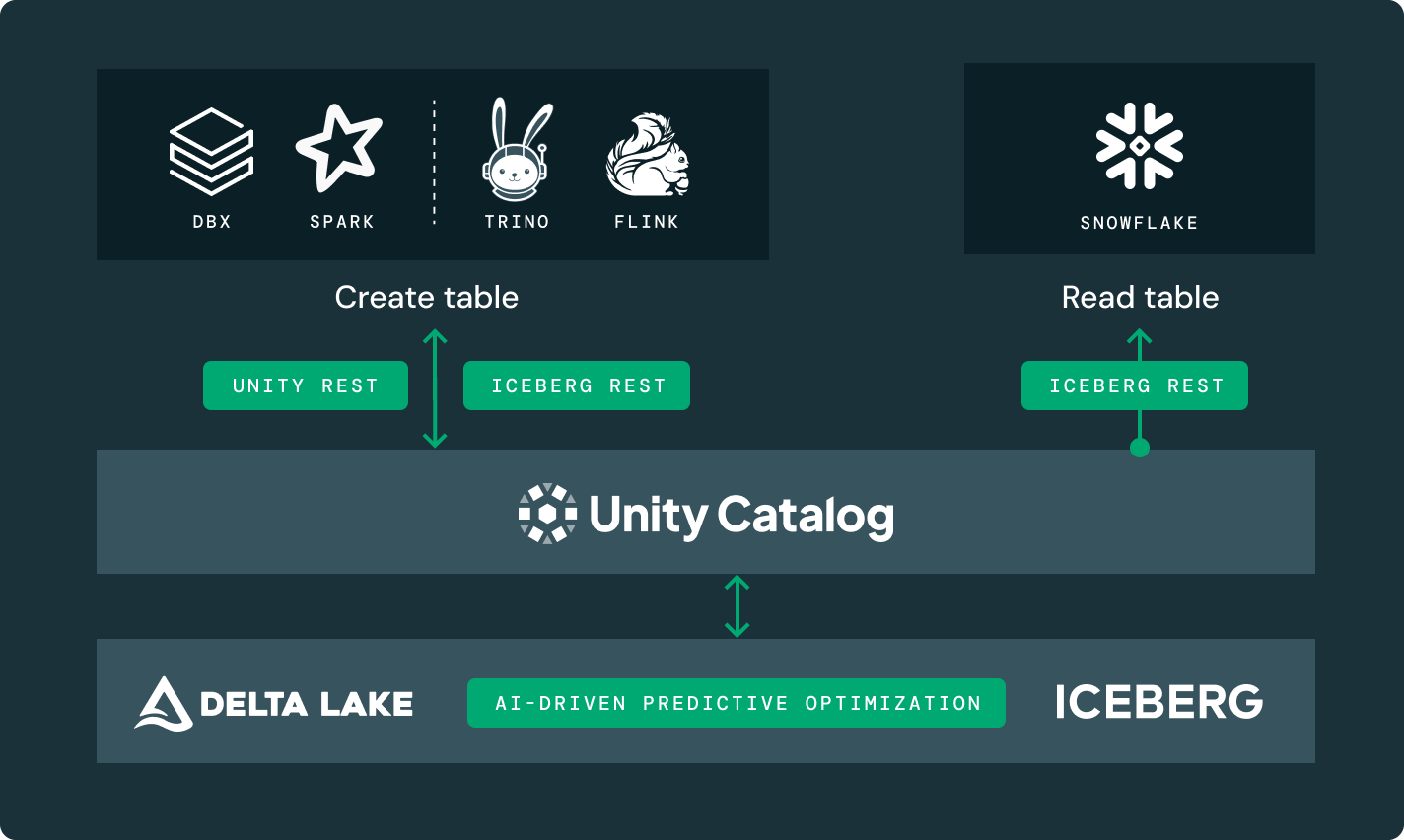

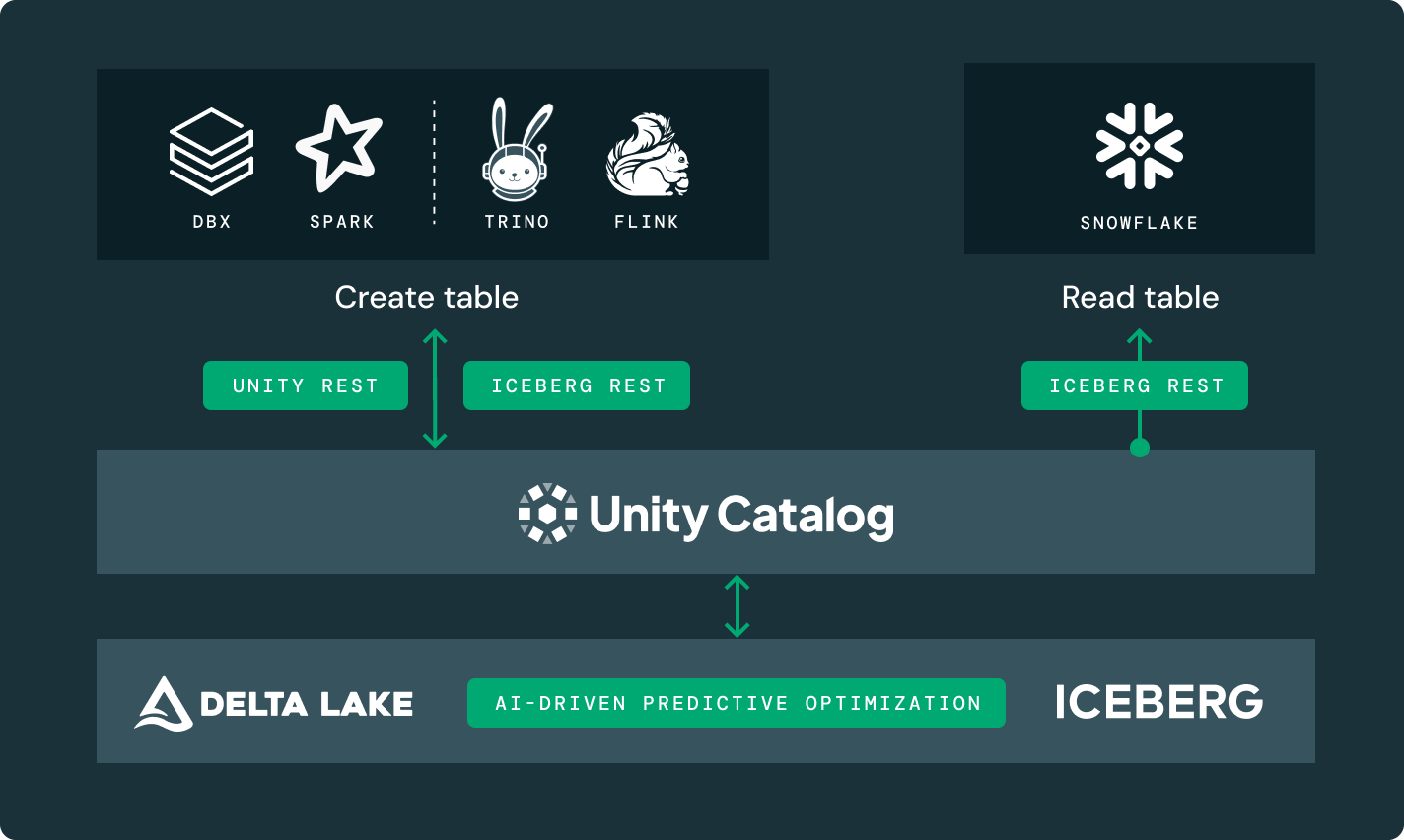

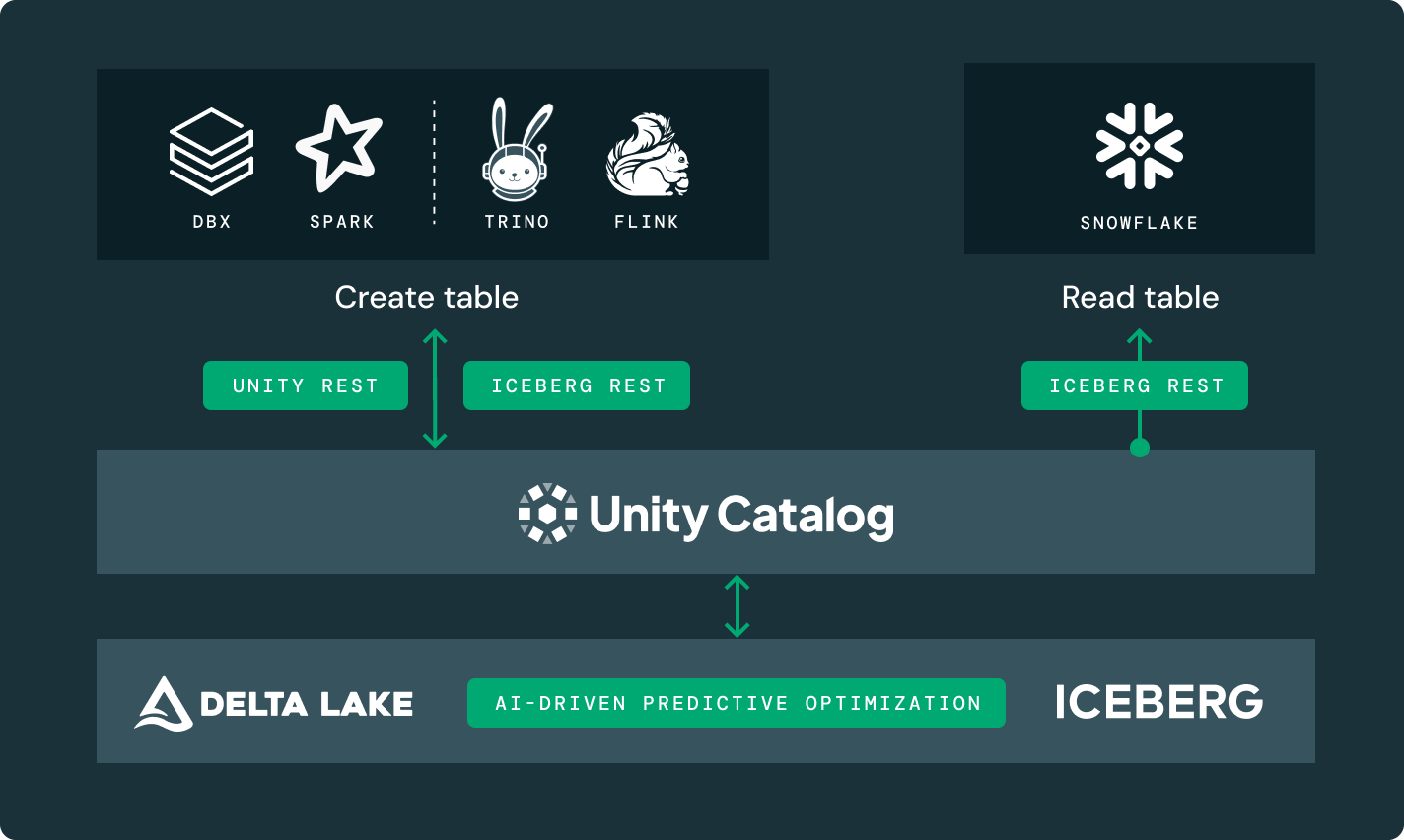

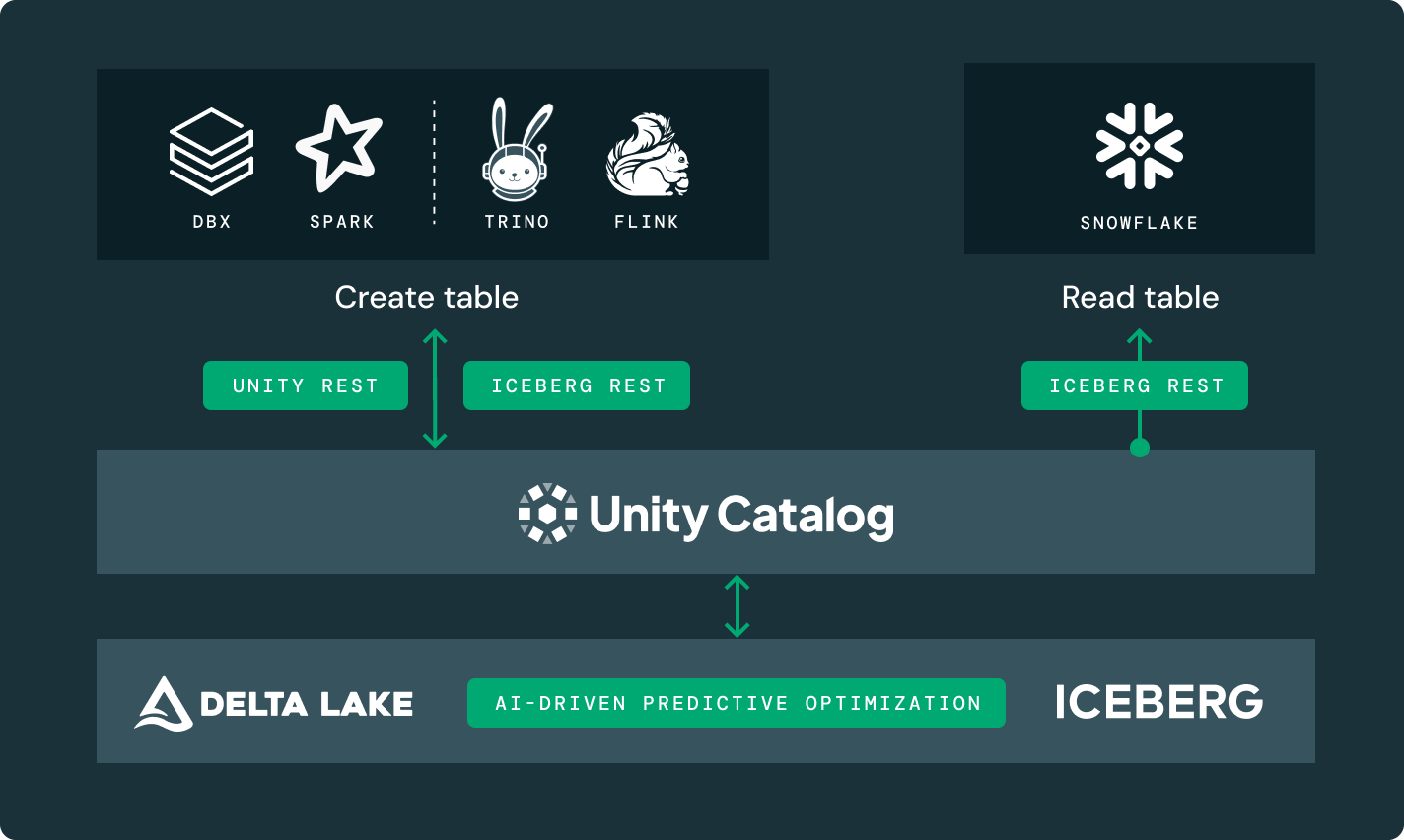

Eliminate data management headaches with open table formats, centralized governance and automatic data optimizations.

Your data, your way

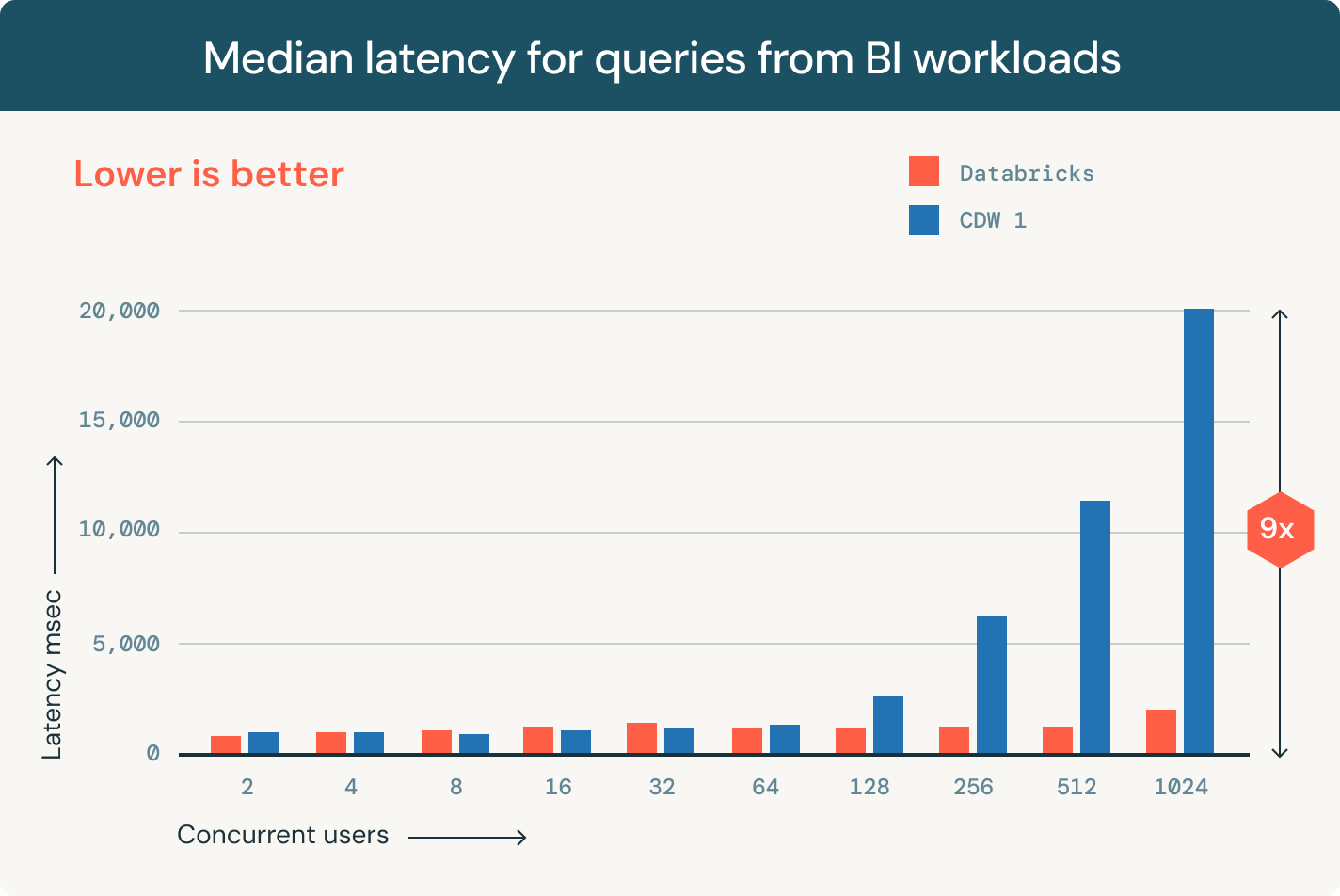

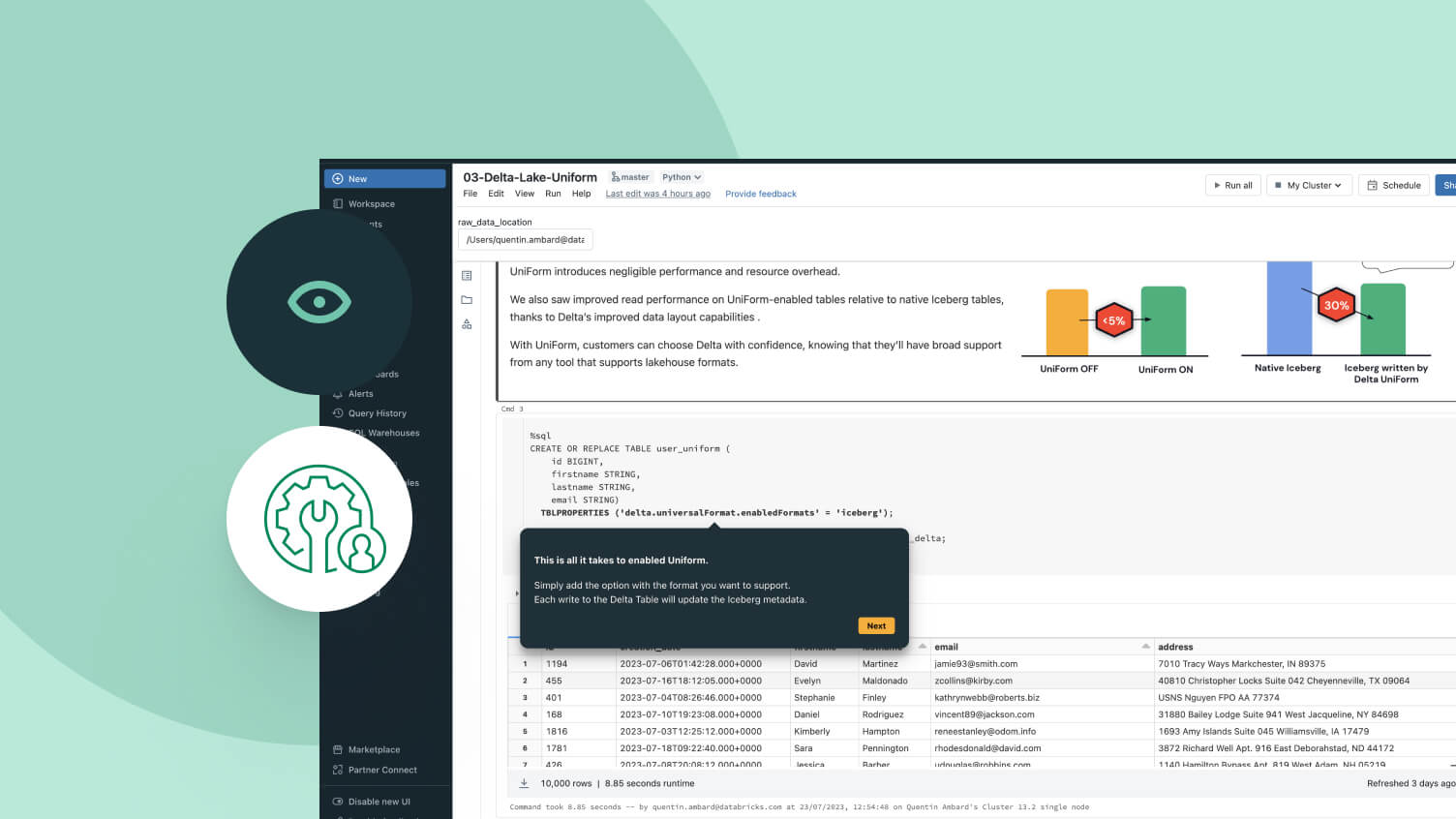

Choose the storage location and open format that works for you. Keep your data portable, without vendor lock-in.

Best-in-class read and write performance for Delta Lake and Apache Iceberg™ tables, out of the box, with storage optimizations not available in any other lakehouse.

More features

For all your analytics and AI workloads

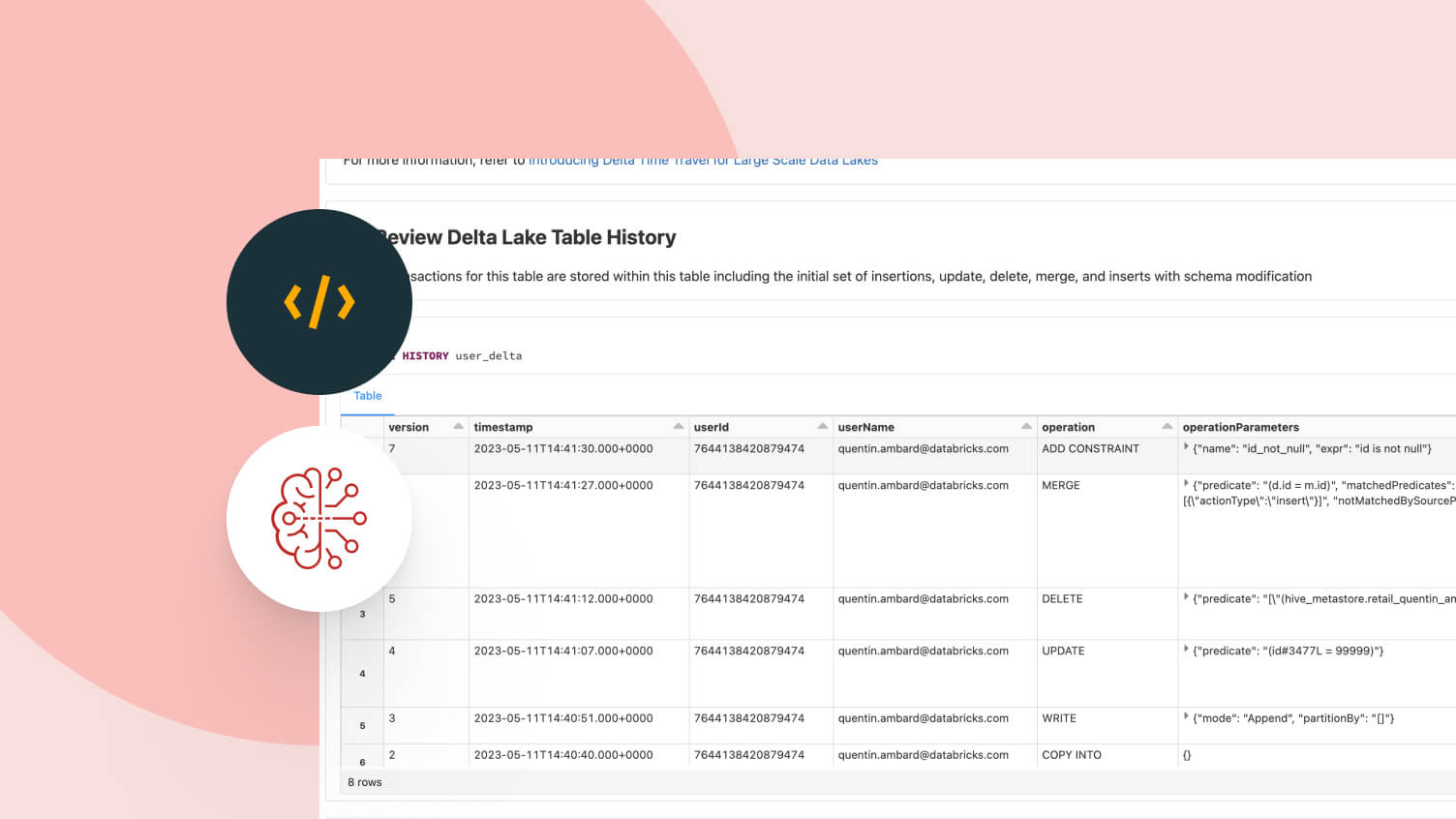

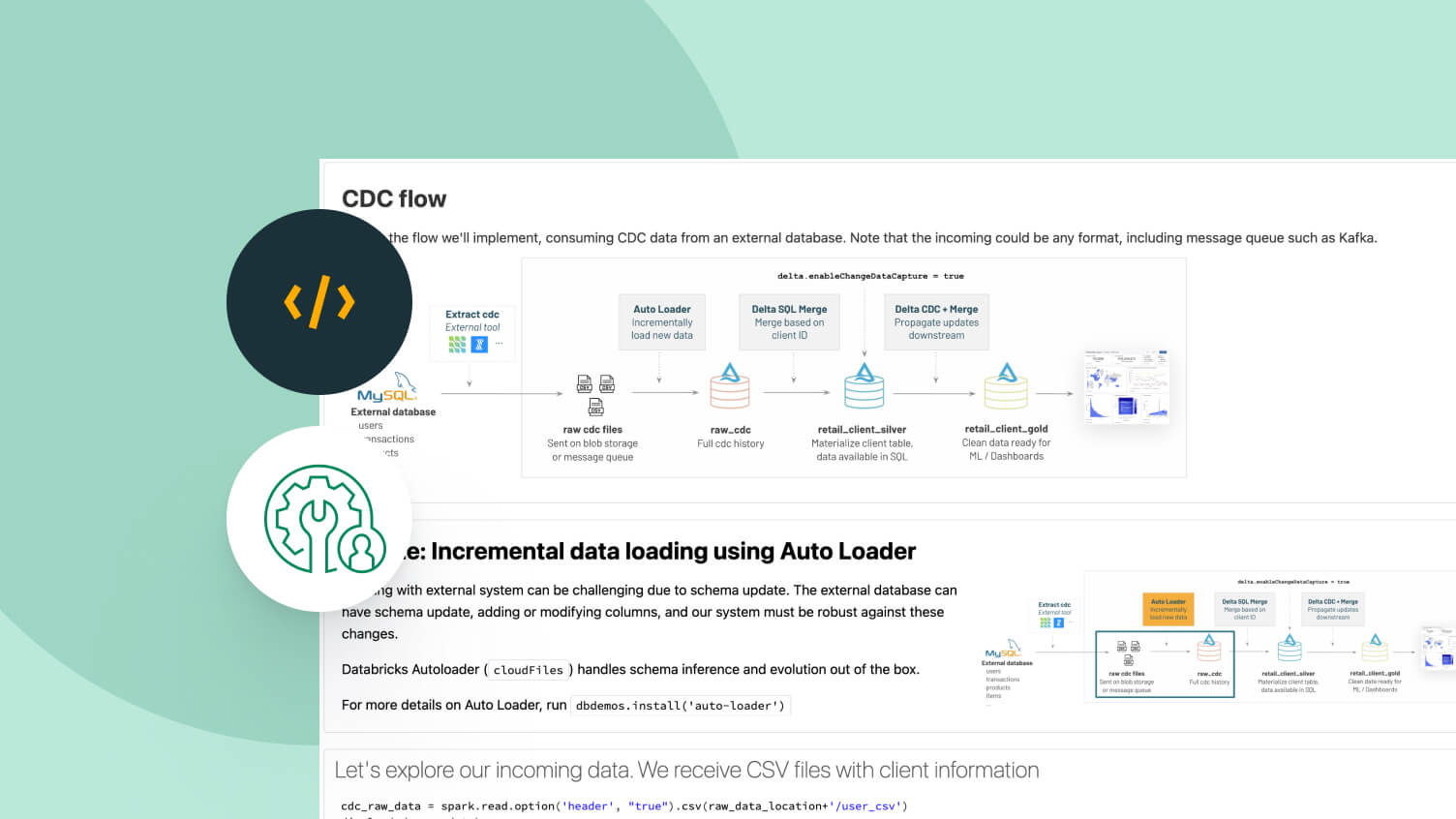

Build and manage reliable data pipelines

Managed tables act as batch tables and as streaming sources and sinks. Streaming ingest, historical batch backfill and interactive queries all work out of the box and integrate directly with Spark Structured Streaming.
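As a rough illustration of one table serving batch and streaming roles, here is a minimal PySpark sketch. The table name, checkpoint path and helper function names are hypothetical placeholders, and the sketch assumes a Spark 3.1+ session with Delta Lake available; treat it as one possible shape, not a reference implementation.

```python
# Sketch only: TABLE and CHECKPOINT are hypothetical placeholders,
# and the helper names below are illustrative, not part of any library.
TABLE = "main.default.events"
CHECKPOINT = "/tmp/checkpoints/events"


def backfill_batch(historic_df):
    """Append historical rows to the table with an ordinary batch write."""
    historic_df.write.format("delta").mode("append").saveAsTable(TABLE)


def stream_into_table(source_stream_df):
    """Use the same table as a streaming sink (returns the StreamingQuery)."""
    return (
        source_stream_df.writeStream
        .format("delta")
        .outputMode("append")
        .option("checkpointLocation", CHECKPOINT)
        .toTable(TABLE)
    )


def read_as_stream(spark):
    """Use the same table as a streaming source."""
    return spark.readStream.table(TABLE)


def read_as_batch(spark):
    """Read the same table as a batch DataFrame for interactive queries."""
    return spark.read.table(TABLE)
```

Each helper takes an existing `SparkSession` or DataFrame, so the same table name can be passed through a backfill job, a streaming job and an ad hoc query without any copy of the data.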

Explore Delta Lake demos

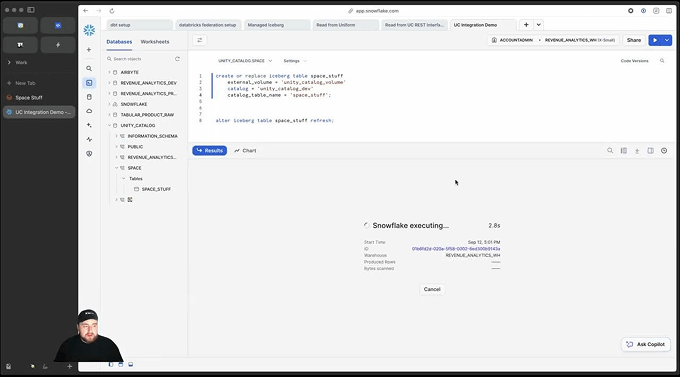

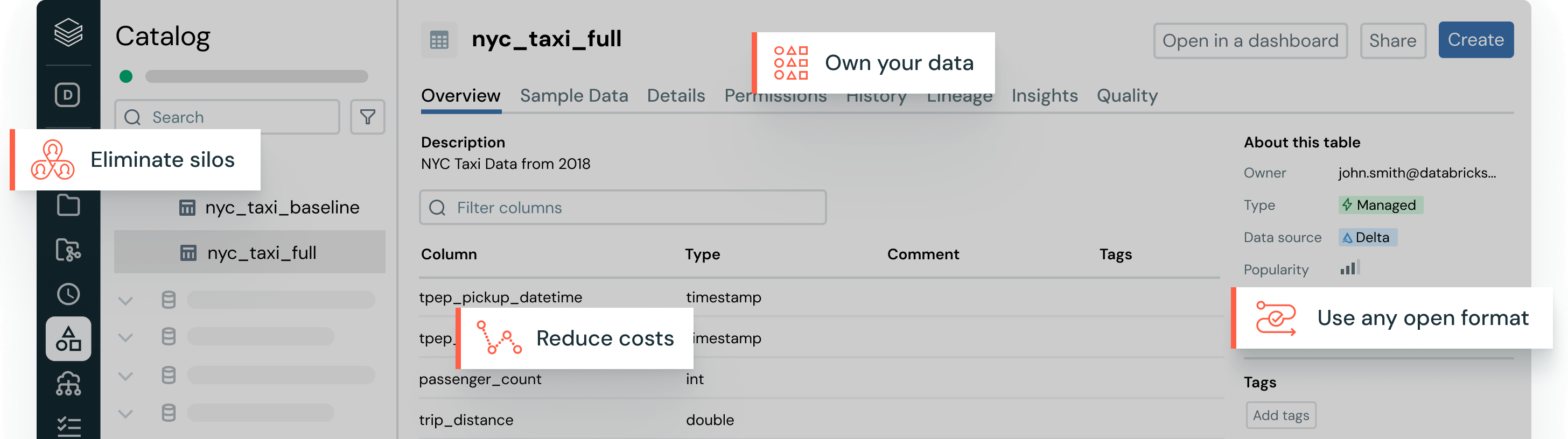

Discover, govern and share your data and AI assets

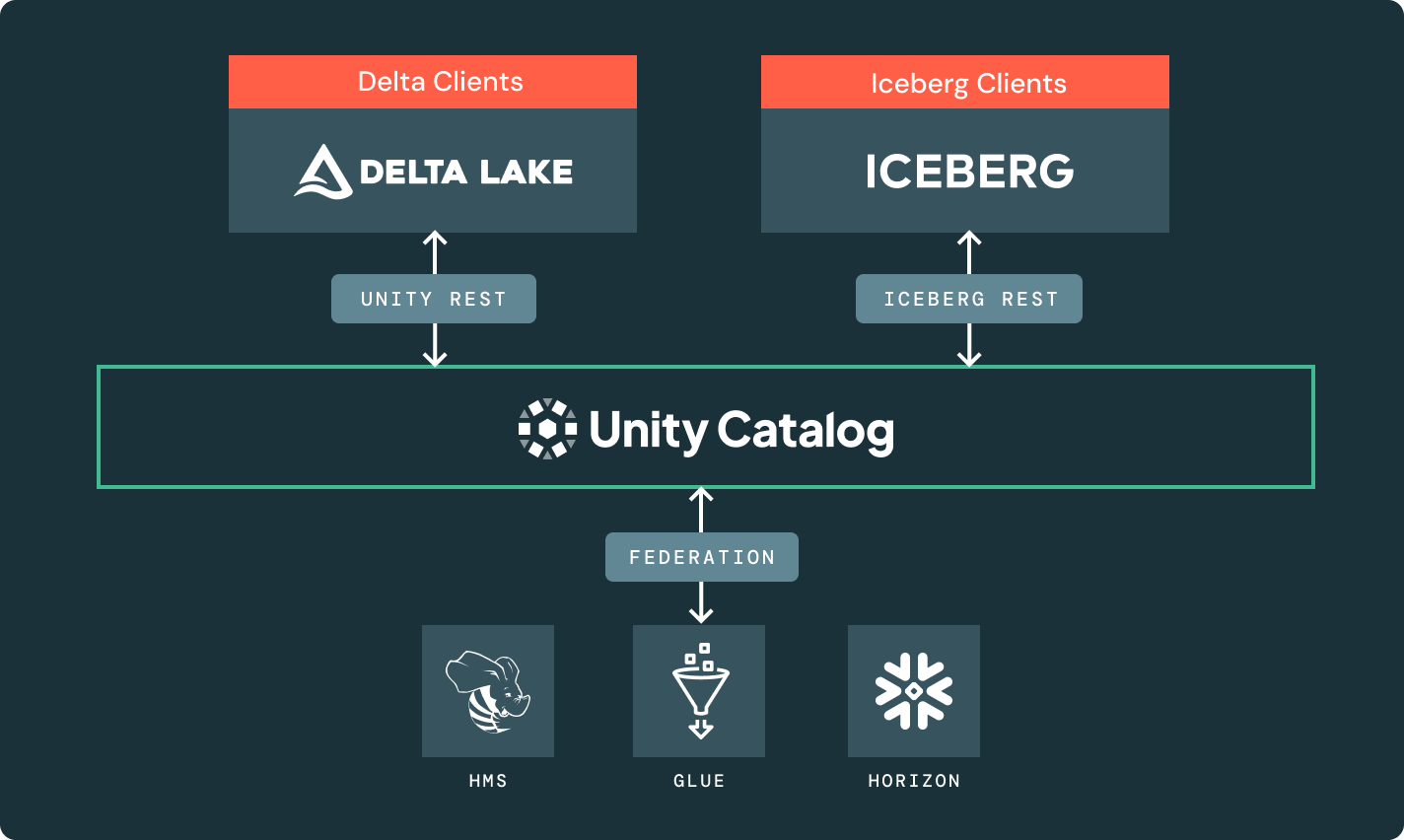

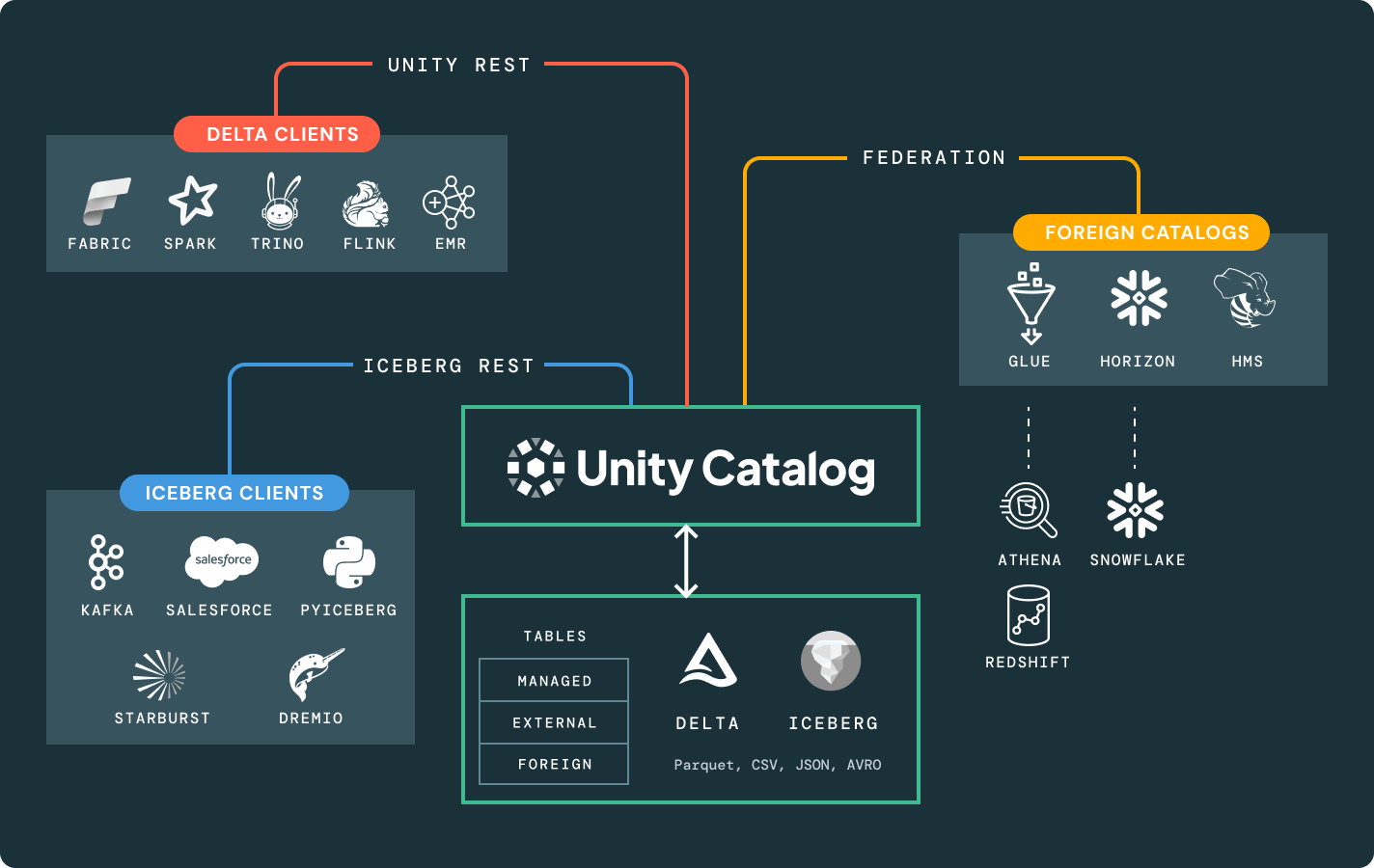

Learn more about how the Databricks Data Intelligence Platform empowers your data teams across all your data and AI workloads.

Unity Catalog

The industry’s only unified and open governance solution for data and AI, built into the Databricks Data Intelligence Platform.

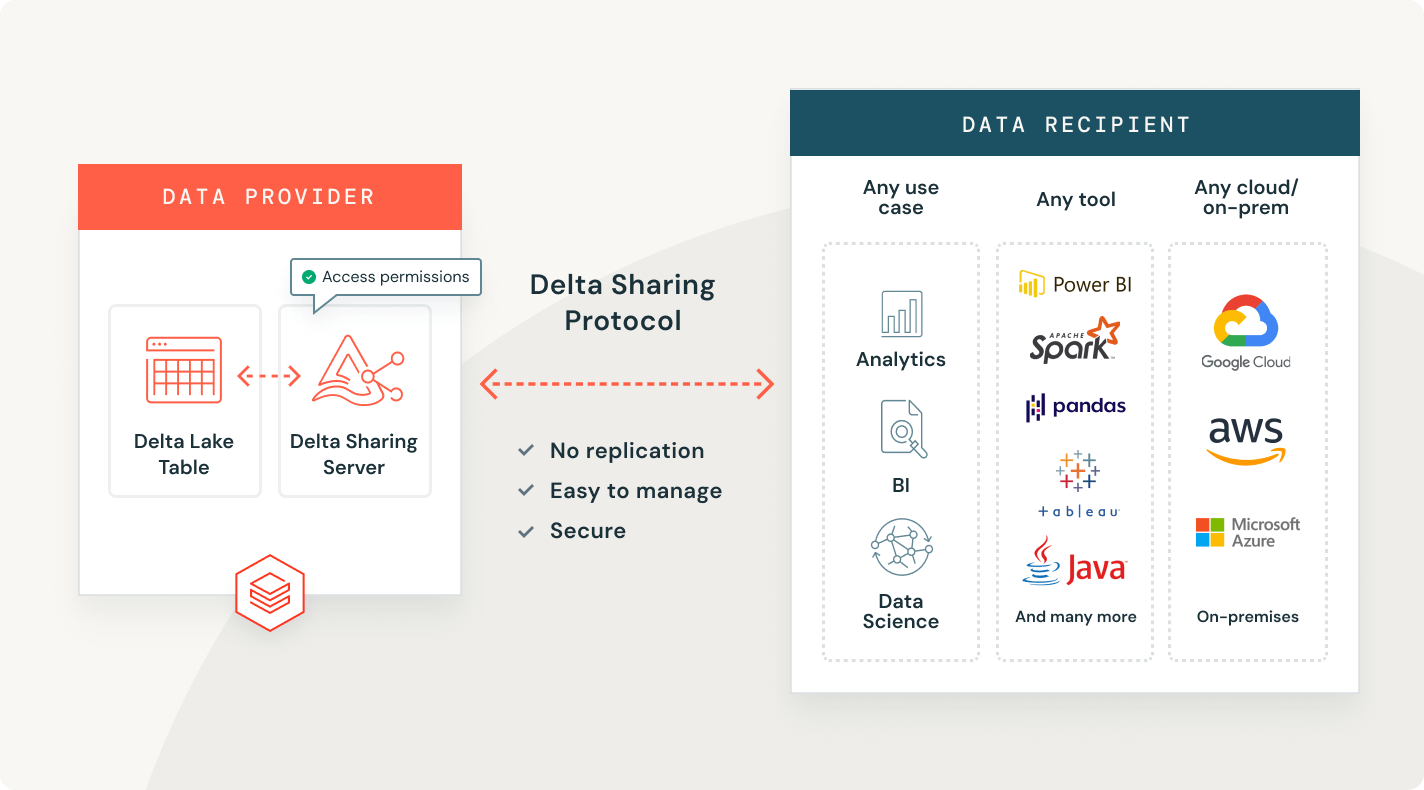

Delta Sharing

The first open source approach to data sharing across data, analytics and AI. Securely share live data across platforms, clouds and regions.

Data Intelligence Platform

Explore the full range of tools available on the Databricks Data Intelligence Platform to seamlessly integrate data and AI across your organization.
