SQL Subqueries in Apache Spark 2.0

Try this notebook in Databricks

In the upcoming Apache Spark 2.0 release, we have substantially expanded the SQL standard capabilities. In this brief blog post, we introduce subqueries in Apache Spark 2.0, including their limitations, potential pitfalls, and future expansions. Through a notebook, we explore both scalar and predicate subqueries, with short examples that you can try yourself.

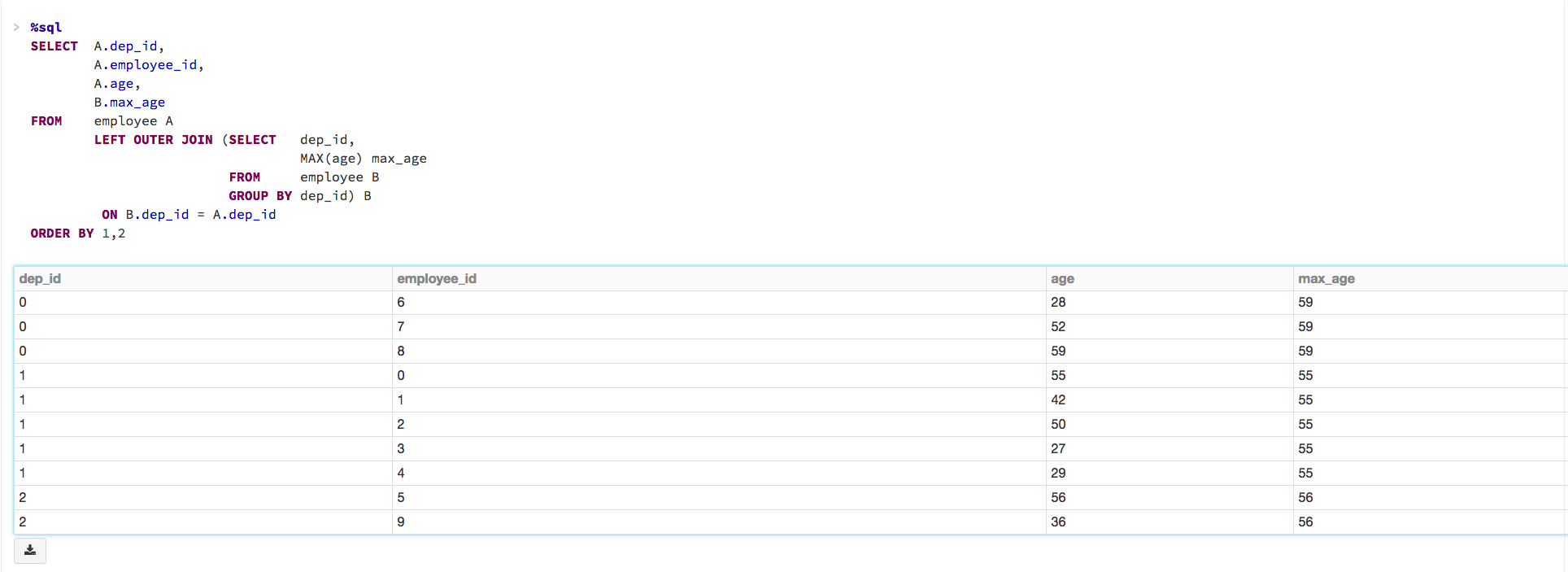

A subquery is a query nested inside another query. A query used as a source (inside a SQL FROM clause) is technically also a subquery, but it is beyond the scope of this post. There are two main kinds of subqueries: scalar and predicate. A scalar subquery returns a single value, while a predicate subquery is used as a condition, for example with IN or EXISTS. Scalar subqueries can be uncorrelated or correlated, and predicate subqueries can be nested.
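To make these categories concrete, here is a minimal sketch of each kind. It is not taken from the notebook: the `employee` and `department` tables and their data are hypothetical, and the queries run against Python's built-in `sqlite3` module as a stand-in engine so the example is self-contained. The SQL itself is plain enough that we would expect it to behave the same way in Spark SQL, but treat that as an assumption rather than a tested claim.

```python
import sqlite3

# Hypothetical sample data, invented for illustration only.
# SQLite is used here as a stand-in engine, not Spark.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employee (id INTEGER, name TEXT, dept_id INTEGER, salary INTEGER);
    CREATE TABLE department (id INTEGER, name TEXT);
    INSERT INTO employee VALUES
        (1, 'Ann', 10, 100), (2, 'Bob', 10, 80),
        (3, 'Cid', 20, 60),  (4, 'Dee', 30, 90);
    INSERT INTO department VALUES (10, 'Eng'), (20, 'Ops');
""")

# Uncorrelated scalar subquery: the inner query does not reference the
# outer query and yields a single value.
uncorrelated_scalar = conn.execute("""
    SELECT name, salary, (SELECT MAX(salary) FROM employee) AS max_salary
    FROM employee
    ORDER BY id
""").fetchall()

# Correlated scalar subquery: the inner query references a column of the
# outer row (e.dept_id), so its value depends on that row.
correlated_scalar = conn.execute("""
    SELECT e.name
    FROM employee e
    WHERE e.salary = (SELECT MAX(e2.salary)
                      FROM employee e2
                      WHERE e2.dept_id = e.dept_id)
    ORDER BY e.id
""").fetchall()

# Predicate subquery with IN: keep employees whose department exists.
in_predicate = conn.execute("""
    SELECT name
    FROM employee
    WHERE dept_id IN (SELECT id FROM department)
    ORDER BY id
""").fetchall()

# Predicate subquery with NOT EXISTS: keep employees without a department.
not_exists_predicate = conn.execute("""
    SELECT e.name
    FROM employee e
    WHERE NOT EXISTS (SELECT 1 FROM department d WHERE d.id = e.dept_id)
    ORDER BY e.id
""").fetchall()

# Nested predicate subquery: a predicate subquery inside another one.
nested_predicate = conn.execute("""
    SELECT name
    FROM employee
    WHERE dept_id IN (SELECT id
                      FROM department
                      WHERE id IN (SELECT dept_id
                                   FROM employee
                                   WHERE salary > 90))
    ORDER BY id
""").fetchall()

for label, rows in [
    ("uncorrelated scalar", uncorrelated_scalar),
    ("correlated scalar", correlated_scalar),
    ("IN predicate", in_predicate),
    ("NOT EXISTS predicate", not_exists_predicate),
    ("nested predicate", nested_predicate),
]:
    print(label, rows)
```

The correlated scalar query uses an aggregate (`MAX`) so that the inner query is guaranteed to return one value per outer row, which is what makes it a scalar subquery.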

For brevity, we will let you jump in and explore the notebook, which offers a more interactive experience than an exposition here in the blog. Click on the diagram to view and explore the subquery notebook with the Apache Spark 2.0 preview on Databricks.

What’s Next

Subquery support in Apache Spark 2.0 provides a solid solution for the most common subquery usage scenarios. However, there is room for improvement in the areas noted in detail at the end of the notebook.

To try this notebook on Databricks, sign up now.