Improving Threat Detection in a Big Data World

High-profile cybersecurity breaches dominated headlines in 2017. In the first half of the year, over 1.9B records were stolen. That’s more than 7,000 records breached every minute. And the fallout from a single event can be staggering: customer attrition, negative PR and regulatory fines can amount to millions in financial losses. In fact, according to recent research from IBM, the average cost of a data breach is $3.62M.

With thousands of records being stolen every minute, this raises the question: why is this happening, and what can be done to help prevent it?

The complex threat environment

Cybercriminals have become more sophisticated over the years. No longer relying on a single tactic to penetrate the enterprise firewall, most criminals employ a coordinated, multi-pronged attack. Verizon recently published a list of the most common tactics used by cybercriminals and it’s clear the methods are diverse:

What tactics do cybercriminals use?

- 62% - hacking

- 51% - malware

- 43% - social attacks

- 14% - misuse of privileged rights

- 14% - the result of an employee mistake

- 8% - loss/theft of physical media

Preventing one type of attack is simply not enough. To make matters more complicated, cybercriminals have begun to make use of AI-supported systems to rapidly scale attacks, personalize phishing emails, identify system vulnerabilities, and mutate malware and ransomware on the fly. Staying ahead of these increasingly complex attacks requires cybersecurity teams to monitor their network for a broad range of threats that may or may not resemble traditional threat patterns.

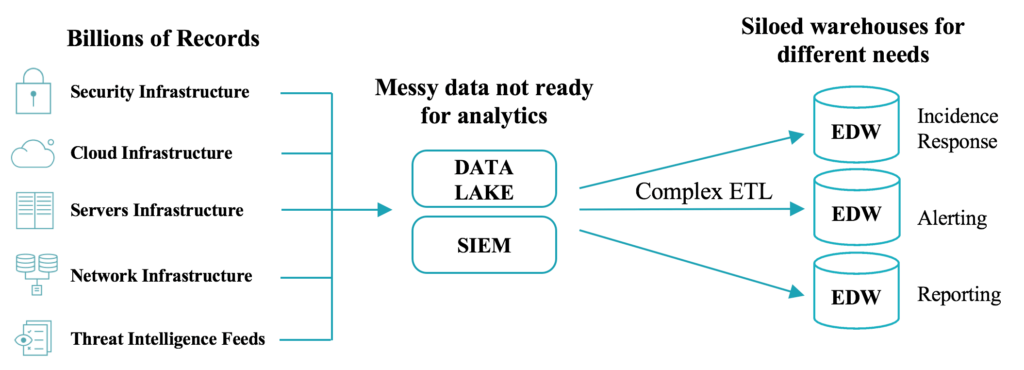

The challenge of managing threats in a big data world

Staying abreast of the latest threat isn’t the only challenge. The increasing volume and complexity of threats require security teams to capture and mine mountains of data in order to avoid a breach. Yet the Security Information and Event Management (SIEM) and threat detection tools they’ve come to rely on were not built with big data in mind, resulting in a number of challenges:

- Inability to scale cost-efficiently - companies deploy logging and monitoring devices across their networks, end-user devices and production machines to help detect suspicious behavior. These tools produce petabytes of log data that need to be contextualized and analyzed in real time. Processing petabytes of data takes significant compute power. Unfortunately, most SIEM tools were built for on-premises environments, so meeting processing demands requires significant build-outs. Additionally, most SIEM tools charge customers per GB of data ingested. This makes scaling threat detection tools for large volumes of data cost-prohibitive.

- Inability to conduct historical reviews in real time - identifying a cybersecurity breach as soon as it happens is critical to minimizing data theft, damages and the creation of backlogs. As soon as an event occurs, security analysts need to conduct deep historical analyses to fully investigate the validity and breadth of an attack. Without a means to efficiently scale existing tools, most security teams only have access to a few weeks of historical data. This limits their ability to identify attacks over long time horizons or conduct forensic reviews in real time.

- Abundance of false positives - another common challenge is the high volume of false positives produced by SIEM tools. The massive amounts of data captured in OS logs, cloud infrastructure logs, intrusion detection systems and other monitoring devices produce events that, in isolation or in connection with other events, may signify a compromised network. Most events need further investigation to determine if the threat is legitimate. Relying on individuals to review hundreds of alerts, including a large number of false positives, results in alert fatigue. Eventually, overwhelmed security teams disregard or overlook events that are actually legitimate threats.

In order to effectively detect and remediate threats in today’s environment, security teams need to find a better way to process and correlate massive amounts of real-time and historical data, detect patterns that exist outside pre-defined rules and reduce the number of false positives.
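To make the long-time-horizon point concrete, here is a minimal Python sketch of how a retention window can hide a slow attack. It is an illustration only, not how any particular SIEM or Databricks works: the function name `flag_slow_attackers`, the IP addresses, the event data and the threshold of five failed logins are all hypothetical.

```python
from collections import Counter
from datetime import datetime, timedelta

def flag_slow_attackers(events, now, lookback_days, threshold):
    """Return source IPs with at least `threshold` failed logins in the lookback window."""
    cutoff = now - timedelta(days=lookback_days)
    counts = Counter(ip for ts, ip in events if cutoff <= ts <= now)
    return sorted(ip for ip, n in counts.items() if n >= threshold)

now = datetime(2017, 12, 31)

# Hypothetical data: one source fails a login once every 10 days for 120 days.
events = [(now - timedelta(days=d), "203.0.113.7") for d in range(0, 120, 10)]
# A second source with only two recent failures (likely a mistyped password).
events += [
    (now - timedelta(days=1), "198.51.100.4"),
    (now - timedelta(days=2), "198.51.100.4"),
]

# With only two weeks of retained data, the slow attacker stays under the threshold.
two_weeks = flag_slow_attackers(events, now, lookback_days=14, threshold=5)
# With six months of history, the same rule surfaces it.
six_months = flag_slow_attackers(events, now, lookback_days=180, threshold=5)

print(two_weeks)
print(six_months)
```

The detection rule is identical in both calls; only the amount of history available to it changes.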

Enhancing threat detection with scalable analytics and AI

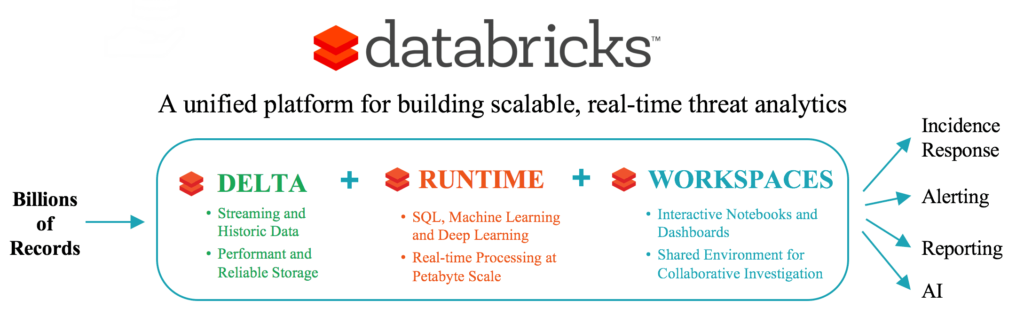

Databricks offers security teams a new set of tools to combat the growing challenges of big data and sophisticated threats. Where existing tools fall short, the Databricks Unified Analytics Platform fills the void with a platform for data scientists and cybersecurity analysts to easily build, scale, and deploy real-time analytics and machine learning models in minutes, leading to better detection and remediation.

Databricks complements existing threat detection efforts with the following capabilities:

- Full enterprise visibility - native to the cloud and built on Apache Spark, Databricks is optimized to process large volumes of streaming and historical data for real-time threat analysis and review. Security teams can query petabytes of historical data stretching months or years into the past, making it possible to profile long-term threats and conduct deep forensic reviews to uncover backdoors left behind by hackers. Security teams can also integrate all types of enterprise data (SIEM logs, cloud logs, system security logs, threat feeds, etc.) for a more complete view of the threat environment.

- Proactive threat analytics - Databricks enables security teams to build predictive threat intelligence with a powerful, easy-to-use platform for developing AI and machine learning models. Data scientists can build machine learning models that better score alerts from SIEM tools, reducing the reviewer fatigue caused by too many false positives. They can also build models that detect anomalous behaviors that fall outside pre-defined rules and known threat patterns.

- Collaborative investigations - interactive notebooks and dashboards enable data scientists, analysts and security teams to collaborate in real-time. Multiple users can run queries, share visualizations and make comments within the same workspace to keep investigations moving forward without interruption.

- Cost-efficient scale - the Databricks platform is fully managed in the cloud with cost-efficient pricing designed for big data processing. Security teams don’t need to absorb the costly burden of building and maintaining a homegrown cybersecurity analytics platform or paying per GB of data ingested and retained.
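As a rough illustration of detecting behavior "outside pre-defined rules", the sketch below scores each host against its own history using a simple z-score. This is a deliberately basic statistical baseline, not a Databricks API or a production model; the host names, the metric (outbound MB per hour) and the cutoff of 3.0 are assumptions made for the example.

```python
from statistics import mean, pstdev

def anomaly_scores(history, current):
    """Z-score of each host's current value against that host's own past values."""
    scores = {}
    for host, value in current.items():
        past = history.get(host, [])
        if len(past) < 2:
            scores[host] = 0.0  # not enough history to judge
            continue
        mu, sigma = mean(past), pstdev(past)
        if sigma == 0:
            # Perfectly flat history: any change at all is maximally unusual.
            scores[host] = 0.0 if value == mu else float("inf")
        else:
            scores[host] = (value - mu) / sigma
    return scores

# Hypothetical outbound traffic (MB per hour) for two hosts.
history = {
    "web-01": [100, 110, 90, 105, 95],
    "db-01": [20, 22, 18, 21, 19],
}
current = {"web-01": 104, "db-01": 60}

scores = anomaly_scores(history, current)
# Flag hosts more than 3 standard deviations from their own baseline.
flagged = sorted(h for h, s in scores.items() if abs(s) > 3.0)

print(flagged)
```

A fixed rule such as "alert above 100 MB per hour" would fire on `web-01` and miss `db-01`. Scoring each host against its own baseline does the opposite, which is the kind of prioritization that can cut false positives.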

How a Fortune 100 company uses Databricks and advanced cybersecurity analytics to combat threats

A leading technology company employs a large cybersecurity operations center to monitor, analyze and investigate trillions of threat signals each day. Data flows in from a diverse set of sources including intrusion detection systems, network infrastructure and server logs, application logs and more, totaling petabytes in size.

When a suspicious event is identified, threat response teams need to run queries in real time against large historical datasets to verify the extent and validity of a potential breach. To keep pace with the threat environment, the team needed a solution that provides:

- Large data volumes at low latency: Analyze billions of records within seconds

- Correct and consistent data: Partial and failed writes cannot show up in user queries

- Fast, flexible queries on current and historical data: Security analysts need to explore petabytes of data with multiple languages (e.g. Python, SQL)
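The second requirement, that partial and failed writes never appear in queries, can be illustrated with a generic write-then-rename pattern. The source does not describe how the company met this requirement, so treat the following as a small local-filesystem sketch of the idea rather than their implementation; `commit_batch`, `read_committed` and the `.jsonl` file layout are hypothetical.

```python
import json
import os
import tempfile

def commit_batch(directory, name, records):
    """Write records to a temp file, then atomically rename it into place."""
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            for record in records:
                f.write(json.dumps(record) + "\n")
            f.flush()
            os.fsync(f.fileno())
        # The batch becomes visible to readers only at this rename.
        os.replace(tmp_path, os.path.join(directory, name))
    except BaseException:
        # A failed write leaves nothing behind for readers to see.
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise

def read_committed(directory):
    """Read only committed .jsonl files; in-progress .tmp files are ignored."""
    rows = []
    for fname in sorted(os.listdir(directory)):
        if fname.endswith(".jsonl"):
            with open(os.path.join(directory, fname)) as f:
                rows.extend(json.loads(line) for line in f)
    return rows

with tempfile.TemporaryDirectory() as d:
    commit_batch(d, "batch-0001.jsonl", [{"event": "login_failed", "host": "web-01"}])
    try:
        # The second record cannot be serialized, so this write fails halfway through.
        commit_batch(d, "batch-0002.jsonl", [{"event": "ok"}, {"event": object()}])
    except TypeError:
        pass
    visible = read_committed(d)
    leftover = sorted(os.listdir(d))

print(visible)
print(leftover)
```

Readers see either a whole batch or none of it. Cloud object stores do not necessarily offer the same rename guarantees as a local filesystem, so a real system would need a different commit mechanism.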

The Challenge

It took a team of twenty engineers over six months to build the company’s legacy architecture, which combined various data lakes, data warehouses and ETL tools to try to meet these requirements. Even then, the team was only able to store two weeks of data in its data warehouses due to cost, limiting its ability to look backward in time. Furthermore, the chosen data warehouses could not run machine learning workloads.

The Solution

Using the Databricks Unified Analytics Platform, the company was able to put its new architecture into production in just two weeks with a team of five engineers.

The new architecture is simple and performant. End-to-end latency is low (seconds to minutes), and the threat response team saw up to 100x query speed improvements over open source Apache Spark on Parquet. Moreover, using Databricks, the team is now able to run interactive queries on all of its historical data, not just two weeks’ worth, making it possible to better detect threats over longer time horizons and conduct deep forensic reviews. The team also gained the ability to leverage Apache Spark for machine learning and advanced analytics.

Final Thoughts

As cybercriminals continue to evolve their techniques, cybersecurity teams must evolve how they detect and prevent threats. Big data analytics and AI offer new hope for organizations looking to improve their security posture, but choosing the right platform is critical to success.

Download our Cybersecurity Analytics Solution Brief or watch the replay of our recent webinar Enhancing Threat Detection with Big Data and AI to learn how Databricks can enhance your security posture.
