A Guide to Data Science, Developer, and Deep Dive Talks at Spark + AI Summit Europe

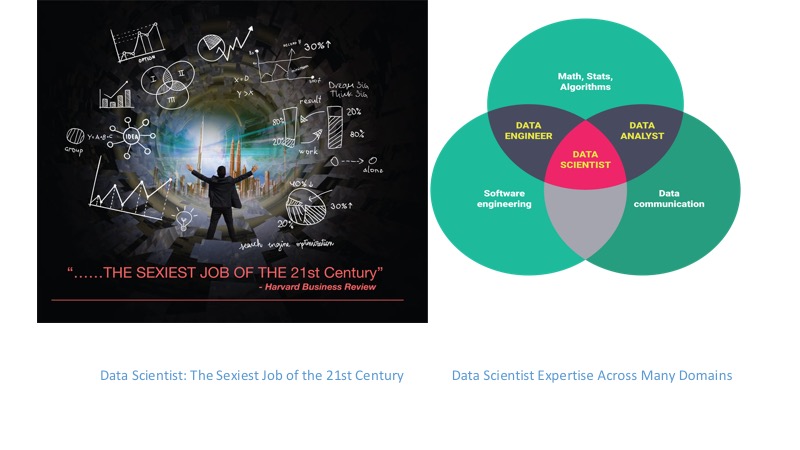

In October 2012, Harvard Business Review put a spotlight on the data science career with a dedicated issue and a catchy claim: Data Scientist: The Sexiest Job of the 21st Century.

Last year in October, five years on, Forbes recast an answer on Quora, Why Data Science Is Such A Hot Career Right Now?

Recent technical literature seems to suggest that data scientists are unique and that their expertise span across domains. Because of this unique combination of skills spanning domains—from software engineering to data exploration and communication to strong knowledge of maths and statistics—the demand for this new career exceeds the supply—even today.

“Data scientists’ most basic, universal skill is the ability to write code,” writes DJ Patil and Thomas Davenport. To that I would add, while they may be developers at the core, they are also data explorers and data storytellers at heart.

And this October, you can join these data scientists and developers at Spark + AI Summit Europe and learn from them as they offer their insight into use cases in how they combine data and AI, to build models, convey data stories, and garner insights.

In this blog, we highlight selected sessions that speak to their endeavors in combining the immense value of data and AI from three specific tracks that cover knowledge across the domains shown in the Venn diagram above.

Let’s consider what does it take to extract features from data to convey data stories. Shlomi Babluki of SimilarWeb will introduce how to use extracted matrices together with additional datasets to generate valuable features to train different regression and classification models in his talk, Interaction-Based Feature Extraction: How to Convert Your Users’ Activity into Valuable Features.

If you are a data storyteller and you revel in the notion of how story shapes and influences decisions or shifts opinions, then this topical talk, Rejecting the Null Hypothesis of Apathetic Retweeting of US Politicians and SPLC-defined Hate Groups in the 2016 US Presidential Election, from Raazesh Sainudiin of Canterbury University, reveals how to analyze social tweets and build geometric models to portray a visual summary of neighbor-joined population ideological trees. Similarly, Jerry Schirmer of SparkCognition in his topical talk, Time-Series Anomaly Detection in Plaintext Using Apache Spark, will share how to identify shifts in public sentiments and identify anomalies in unstructured time-series plaintext data such as news articles, tweets, and publications using Apache Spark.

What makes data science projects successful? Are there any identifiable patterns or practices to adhere to? Bill Chambers of Databricks will explore these questions in his talk, Patterns for Successful Data Science Projects. Furthermore, if you are a new data scientist and wish to avoid learning pitfalls, Sean Owen of Databricks will offer some wisdom in his talk: Three Stats Pitfalls Facing the New Data Scientist

Now if you ever wonder what data science techniques and algorithms lie behind recommendation engines that we constantly interact with today at high-volume sites like Amazon, Netflix or Spotify, etc? Rui Vieira of Red Hat will reveal the mystery in his talk: Building Streaming Recommendation Engines on Apache Spark

Extracting features, doing sentiment analysis, developing data science projects, and building recommendations engines are many challenges data scientists and developers face. But another other challenge is how to manage a model’s lifecycle: how to track experiments, train, reproduce, and deploy models. Mani Parkhe of Databricks will give a deep dive in his talk, MLflow: Infrastructure for a Complete Machine Learning Life Cycle.

For many European data scientists and developers, GDPR compliance is a law, especially when dealing with data models employing sensitive or private data. In their deep-dive talk, Great Models with Great Privacy: Optimizing ML and AI Under GDPR, Messrs Sim Simeonov (Swoop) and Slater VIctoroff (Indico Data Solutions) will discuss how to grapple with issues of privacy and yet be innovative.

Structured Streaming has garnered a lot of interest in building end-to-end data pipelines. Two deep-dive talks will give you insight into how. First is from Tathagata Das (Databricks): Deep Dive into Stateful Stream Processing in Structured Streaming. Second is from Sandy May (Elastacloud and Renewables.AI): Using Azure Databricks, Structured Streaming & Deep Learning Pipelines, to Monitor 1,000+ Solar Farms in Real-Time.

As a developer of big data applications, you worry often about scale. At CERN scale is the norm. Luca Canali (CERN) will share his passion: Apache Spark for RDBMS Practitioners: How I Learned to Stop Worrying and Love to Scale. Similarly, Baruk Yavuz (Databricks) will share about scale: Designing and Building Next Generation Data Pipelines at Scale with Structured Streaming.

If you worry about performance and want to garner knowledge about Apache Spark internals and Spark SQL optimization, three talks we recommend: first, Towards Writing Scalable Big Data Applications from Philipp Brunenberg (consultant); second, A Framework for Evaluating the Performance and the Correctness of the Spark SQL Engine from Messrs Bogdan Ghit and Nicolas Poggi (Databricks); and third, Bucketing in Spark SQL 2.3 from Jacek Laskowski (consultant).

And finally, check out two training courses for big data developers to extend your knowledge of Apache Spark programming and performance and tunning respectively: APACHE SPARK™ PROGRAMMING and APACHE SPARK™ TUNING AND BEST PRACTICES.

What’s Next

You can also peruse and pick sessions from the schedule, too. In the next blog, we will share our picks from sessions related to Research and Spark Ecosystem and Use Cases and Experience.

If you have not registered yet, use this code JulesPicks and get 20% discount.

Never miss a Databricks post

What's next?

Data Science and ML

October 1, 2024/5 min read