Make Your Data Lake CCPA Compliant with a Unified Approach to Data and Analytics


With more digital data being captured every day, regulatory standards such as the General Data Protection Regulation (GDPR) and, more recently, the California Consumer Privacy Act (CCPA) have emerged. These privacy laws aim to protect consumers from businesses that improperly collect, use, or share their personal information, and they are changing the way businesses must manage and protect the consumer data they collect and store.

Similar to the GDPR, the CCPA empowers individuals to request:

- disclosure of what personal information is being collected,

- disclosure of how that personal information is being used, and

- deletion of that personal information.

Additionally, the CCPA covers information about ‘households’, which can significantly expand the scope of personal information subject to these requests. Failure to comply in a timely manner can result in statutory fines and statutory damages (for which a consumer need not even prove actual damages) that can add up quickly. The challenge for companies doing business in California, or otherwise subject to the CCPA, is therefore to ensure they can quickly find, secure, and delete that personal information.

Many companies mistakenly assume that the data privacy processes and controls they put in place for GDPR compliance will guarantee complete compliance with the CCPA. While the steps you took to prepare for the GDPR are helpful and a great start, they are unlikely to be sufficient. Companies need to understand which CCPA requirements apply to them and determine which processes and controls can effectively prevent the misuse and unauthorized sale of consumer data.

Are you prepared for CCPA?

The CCPA can require a business to delete all personal information it holds about a consumer upon request. Many organizations today use, or plan to use, a data lake to store the vast majority of their data, both to get a comprehensive view of their customers and business and to power downstream data science, machine learning, and business analytics. However, the lack of structure in a data lake makes it challenging to locate and remove the individual records that these regulatory requirements target.

Locating records quickly is critical when responding to a consumer’s deletion request. If a business receives more than a few consumer rights requests in a short period of time, the resources spent complying with them can be significant. Businesses that fail to comply with CCPA requirements by January 1, 2020 could be subject to lawsuits and civil penalties. The CCPA also contains a “lookback” period that applies it to actions and personal information dating back to January 1, 2019, making it vital to put these solutions in place quickly.

Taking your data security beyond the data lake

When it comes to adhering to CCPA requirements, your data lake should enable you to respond to consumer rights requests within prescribed timelines without handicapping your business. Unfortunately, most data lakes lack the data management and data manipulation capabilities needed to quickly locate and remove individual records.

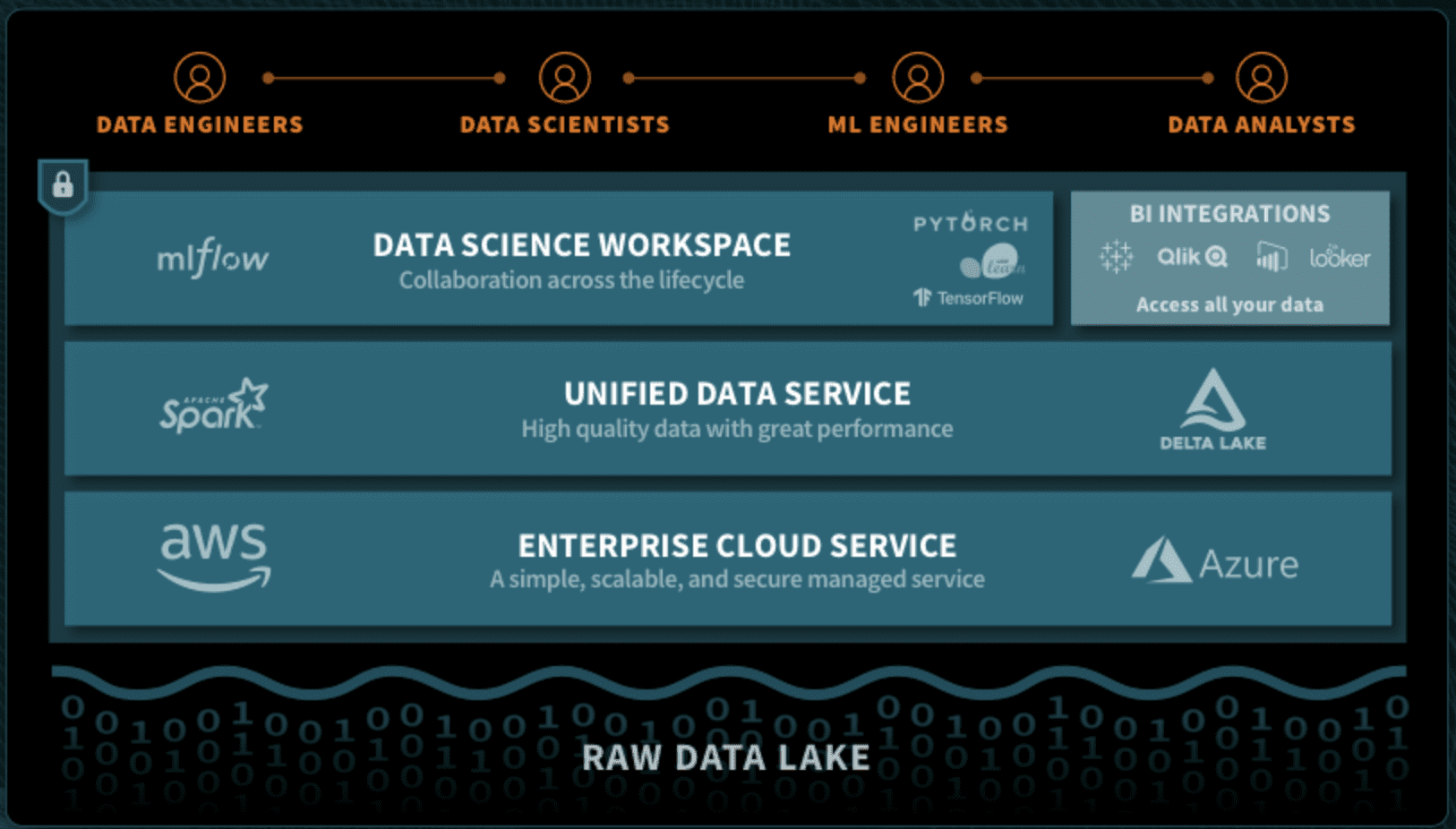

Fortunately, Databricks offers a solution. The Databricks Unified Data Analytics Platform simplifies data access and engineering, while fostering a collaborative environment that supports analytics and machine learning. As part of the platform, Databricks offers a Unified Data Service that ensures reliability and scalability for your data pipelines, data lakes, and data analytics workflows.

One of the main components of the Databricks Unified Data Service is Delta Lake, an open-source storage layer that brings enhanced data reliability, performance, and lifecycle management to your data lake. With improved data management, organizations can start to think “beyond the data lake” and leverage more advanced analytics techniques and technologies to extend their data for downstream business needs, including data privacy protection and CCPA compliance.

Start building a CCPA-friendly data lake with Delta Lake

Delta Lake adds a structured data management layer, including ACID transactions, to your data lake. This lets you quickly search, modify, and clean your data using standard DML statements (e.g., DELETE, UPDATE, MERGE INTO).

To get started, ingest your raw data with the Spark APIs you’re already familiar with and write it out as Delta Lake tables. Doing this also adds a transaction log of metadata alongside your data files. If your data is already in Parquet format, you can instead convert the Parquet files in place to a Delta Lake table without rewriting any of the data. Because Delta Lake stores data in the open Parquet format, you are not locked in: you can quickly convert your data back into another format if you need to.

Once the data is ingested, you can easily search and modify individual records within your Delta Lake tables. The final step is to make Delta Lake your single source of truth by erasing the underlying raw data, which removes any lingering copies of consumer records from your raw data sets. We suggest setting up a retention policy in AWS or Azure that automatically removes raw data after thirty days or less, so that no further action is needed to delete raw consumer data within CCPA response timelines.
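For AWS, one way to express such a retention policy is an S3 lifecycle rule. The sketch below builds the rule as a plain dictionary and applies it with `boto3`'s `put_bucket_lifecycle_configuration`. It assumes the raw landing data sits under a hypothetical `raw/` prefix that is separate from your Delta table storage; a rule matching Delta table files would delete live table data. Azure Blob Storage has its own lifecycle management policies, which are not shown here. Note that the `boto3` call replaces any lifecycle rules already configured on the bucket.

```python
def raw_data_lifecycle_config(prefix: str, days: int = 30) -> dict:
    """Build an S3 lifecycle configuration that expires raw objects under `prefix`.

    `prefix` must cover only raw landing data, never Delta table storage.
    """
    if days < 1:
        raise ValueError("S3 lifecycle expiration must be at least 1 day")
    return {
        "Rules": [
            {
                "ID": f"expire-raw-data-after-{days}-days",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                # Expire the current version of each raw object after `days`.
                "Expiration": {"Days": days},
                # On versioned buckets, expiration leaves noncurrent versions
                # behind; purge those too so old copies do not survive.
                "NoncurrentVersionExpiration": {"NoncurrentDays": days},
            }
        ]
    }


def apply_lifecycle_config(bucket: str, config: dict) -> None:
    """Install `config` on `bucket`, replacing its existing lifecycle rules."""
    import boto3  # imported lazily so building the config needs no AWS setup

    boto3.client("s3").put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=config
    )


config = raw_data_lifecycle_config("raw/", days=30)
# apply_lifecycle_config("my-landing-bucket", config)  # hypothetical bucket name
```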


How do I delete data in my data lake using Delta Lake?

You can find and delete any personal information related to a consumer by running two commands:

- DELETE FROM data WHERE email = '[email protected]';

- VACUUM data;

The first command finds the records whose email column contains the string “[email protected]” and deletes them. Delta Lake rewrites each underlying file that contains a matching record, leaving that consumer’s personal data out of the new file, and marks the old file as deleted in the table’s transaction log.

The second command cleans up the Delta table by permanently removing stale files that have been logically deleted and are older than the retention period. With the default retention period of 7 days, files marked for deletion remain on storage until you run VACUUM at least 7 days after they were marked. You can set up a scheduled job with the Databricks Job Scheduler to run VACUUM automatically. You might also be familiar with Delta Lake’s time travel capabilities, which let you query earlier versions of a Delta Lake table. Note that after you run VACUUM, you can no longer time travel back to a version older than the 7-day default retention period.

After running these commands, and once VACUUM has physically removed the old files at the end of the retention period, you can state with confidence that you have removed the necessary consumer data and records from your data lake.

How else does Databricks help me with CCPA consumer rights requests?

Once a user's personal information has been removed from the data lake, it is also important to remove it from the tools your data teams use. Often these tools run locally on a data scientist’s or engineer’s laptop. A better, more secure solution is Databricks’ hosted Data Science Workspace, where data teams can prep, explore, and model data collaboratively in a shared notebook environment. This improves team productivity while creating a secure, centralized environment for the entire analytics workflow.

To help you meet CCPA compliance requirements, Databricks provides you with privacy protection tools to permanently remove personal information, either on a per-command or per-notebook level.

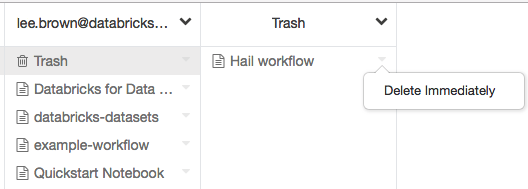

After you delete a notebook, it is moved to the trash. If you take no further action, it is permanently deleted within 30 days, so you can be confident it has been deleted within the prescribed timelines for both the CCPA and GDPR.

If for any reason you need to do this more quickly, we also offer the ability to permanently delete individual items in the trash:

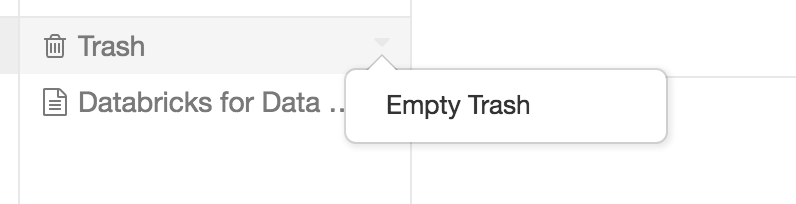

deleting all items in a particular user’s trash:

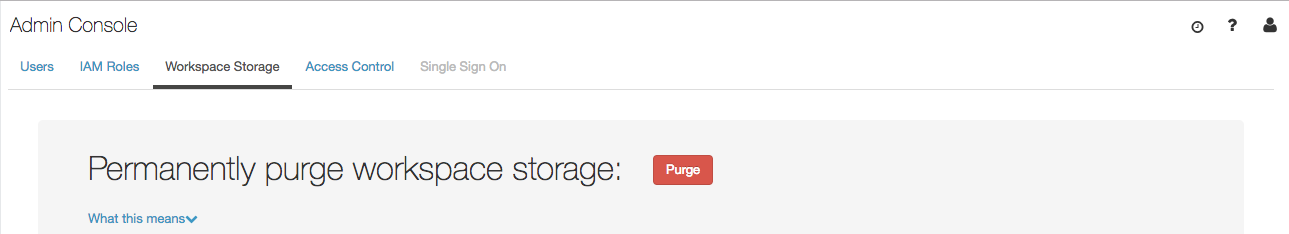

or purging all deleted items in a workspace on demand, including deleted notebook cells, notebook comments, and MLflow experiments:

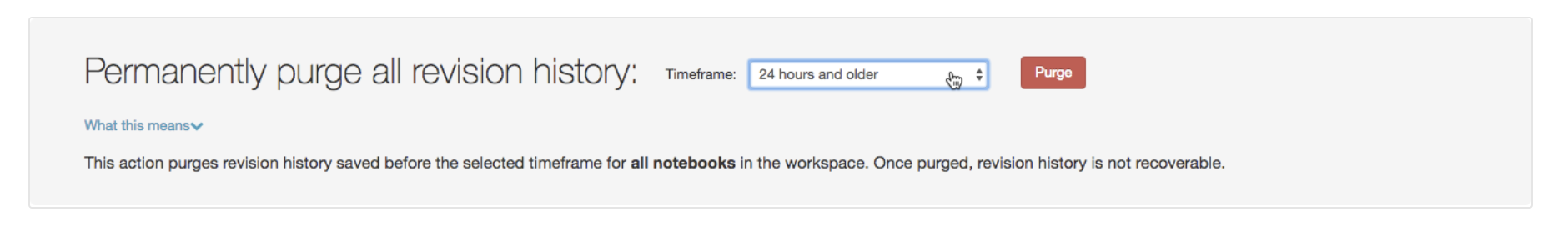

You also have the option to purge Databricks notebook revision history, which is useful to ensure that old query results are permanently deleted.

Getting Started with CCPA Compliance for Data and Analytics

With the Databricks Unified Data Analytics Platform and Delta Lake, you can bring enhanced data security, reliability, performance, and lifecycle management to your data lake while delivering on all your analytics needs. Organizations can now quickly find and remove individual records from a data lake to meet CCPA access requests and compliance requirements without hindering their business.

Learn more about Delta Lake and the Databricks Unified Data Analytics Platform. Sign up for your free Databricks trial now.
