Trust but Verify with Databricks

As enterprises modernize their data infrastructure to make data-driven decisions, teams across the organization become consumers of that platform. Data workloads grow exponentially, the cloud data lake becomes the centralized storage for enterprise-wide functions, and a variety of tools and technologies are used to gain insights from it. For cloud security teams, more services and more users mean more potential vulnerabilities and security threats. These teams need to ensure that all data access adheres to enterprise governance controls and can be easily monitored and audited. Organizations face the challenge of balancing broader access to data, which enables better business decisions, against the many controls and regulations meant to prevent unauthorized access and data leaks. Databricks helps address this challenge by providing visibility into all platform activities through Audit Logs, which, combined with cloud provider activity logging, become a powerful tracking tool in the hands of security and admin teams.

Databricks Audit Logs

Audit Logging allows enterprise security and admin teams to monitor all access to data and other cloud resources, which helps establish an increased level of trust with users. Security teams gain insight into a host of activities occurring within or from a Databricks workspace, such as:

- Cluster administration

- Permission management

- Workspace access via the Web Application or the API

- And much more…

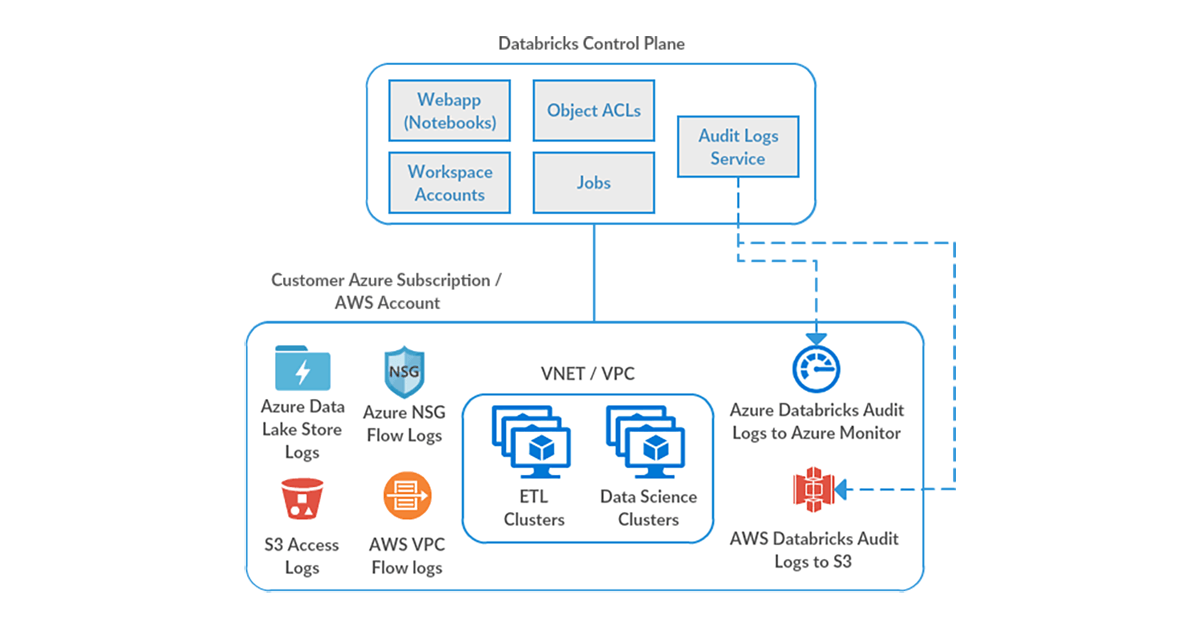

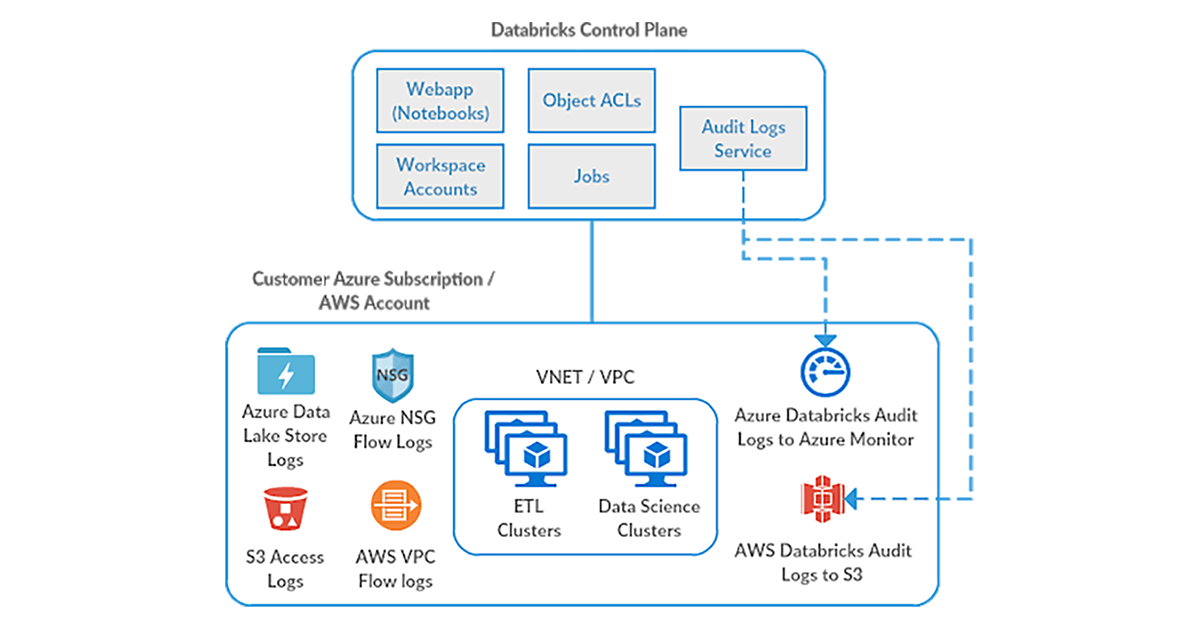

Audit logs can be configured for delivery to your cloud storage (Azure / AWS). From there, Databricks or any other log analysis service can be used to find anomalies of interest, and can be integrated with cloud-based notification services to create a seamless alerting workflow. Below, we discuss some scenarios where Databricks audit logs could prove to be a critical security asset.

Workspace Access Control

Issue: An admin accidentally adds a new user to a group that has an elevated access level that the user should not have been granted. They realize the mistake a few days later and remove the user from the relevant group.

Vulnerability: The admin is worried that, during the period of elevated access, the user may have used the workspace in ways they were not entitled to. This particular group has the ability to grant permissions across Workspace objects. The admin needs to ensure that this user did not share clusters or jobs with other users, which would cause cascading access control issues.

Solution: The admin can use Databricks Audit Logs to determine exactly how long the user was in the wrong group. They can then analyze every action the user took during that period to see whether the user took advantage of the higher privileges in the workspace. If the user did not behave maliciously, the Audit Logs can prove it.

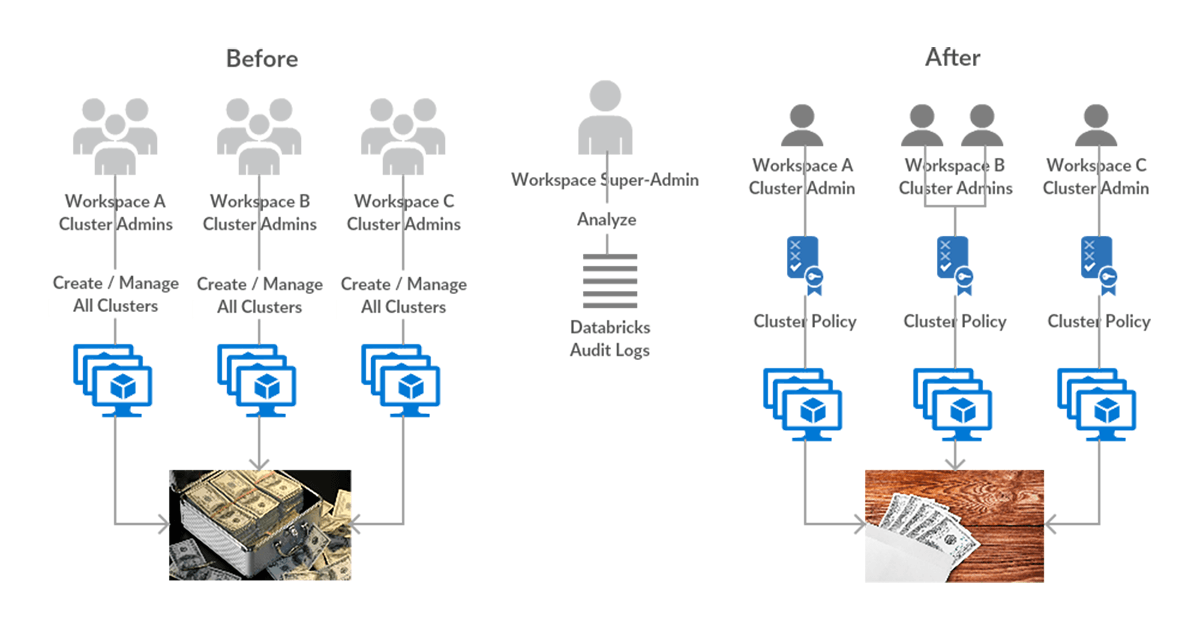
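The analysis described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the records are hypothetical, simplified samples, and the field names (`serviceName`, `actionName`, `userIdentity`, `requestParams`), action names, and the helper functions `elevated_window` and `actions_in_window` are assumptions modeled on the general shape of audit log records, not a confirmed schema. Check the audit log documentation for your cloud before relying on them.

```python
# Hypothetical, simplified audit records. Field and action names are assumptions
# modeled on the general shape of Databricks audit logs, not a confirmed schema.
ACCESS_LOGS = [
    {"timestamp": 1588000000000, "serviceName": "clusters", "actionName": "start",
     "userIdentity": {"email": "user@example.com"}, "requestParams": {}},
    {"timestamp": 1588100000000, "serviceName": "accounts", "actionName": "addPrincipalToGroup",
     "userIdentity": {"email": "admin@example.com"},
     "requestParams": {"targetUserName": "user@example.com", "targetGroupName": "power-users"}},
    {"timestamp": 1588200000000, "serviceName": "clusters", "actionName": "changeClusterAcl",
     "userIdentity": {"email": "user@example.com"}, "requestParams": {"resourceId": "cluster-1"}},
    {"timestamp": 1588300000000, "serviceName": "accounts", "actionName": "removePrincipalFromGroup",
     "userIdentity": {"email": "admin@example.com"},
     "requestParams": {"targetUserName": "user@example.com", "targetGroupName": "power-users"}},
    {"timestamp": 1588400000000, "serviceName": "notebook", "actionName": "createNotebook",
     "userIdentity": {"email": "user@example.com"}, "requestParams": {}},
]


def elevated_window(logs, user, group):
    """Return (start_ms, end_ms) of the user's group membership.

    end_ms is None if no removal event was found (the user is still a member).
    """
    start = end = None
    for rec in sorted(logs, key=lambda r: r["timestamp"]):
        params = rec.get("requestParams") or {}
        if rec.get("serviceName") != "accounts":
            continue
        if params.get("targetUserName") != user or params.get("targetGroupName") != group:
            continue
        if rec["actionName"] == "addPrincipalToGroup" and start is None:
            start = rec["timestamp"]
        elif rec["actionName"] == "removePrincipalFromGroup" and start is not None:
            end = rec["timestamp"]
            break
    return start, end


def actions_in_window(logs, user, start, end):
    """List (timestamp, service, action) for everything the user did inside the window."""
    if start is None:
        return []
    upper = float("inf") if end is None else end
    return [
        (r["timestamp"], r["serviceName"], r["actionName"])
        for r in sorted(logs, key=lambda r: r["timestamp"])
        if r["userIdentity"]["email"] == user and start <= r["timestamp"] <= upper
    ]


start, end = elevated_window(ACCESS_LOGS, "user@example.com", "power-users")
suspect = actions_in_window(ACCESS_LOGS, "user@example.com", start, end)
print(suspect)
```

In this sample the only action inside the window is a cluster permission change, which is exactly the kind of event the admin would want to review. At real volumes the same filter would more naturally be expressed as a query over the delivered log files.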

Workspace Budget Control

Issue: Databricks admins want to ensure that teams are using the service within allocated budgets. But a few workspace admins have given many of their users broad controls to create and manage clusters.

Vulnerability: The admin is worried that large clusters are being created or existing clusters are being resized due to elevated cluster provisioning controls. This could put relevant teams over their allocated budgets.

Solution: The admin can use Audit Logs to watch over cluster activities and see when cluster creation or resizing occurs. This enables them to notify users to stay within their teams' allocated budgets. Additionally, the admin can create Cluster Policies to address this concern in the future.
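As a rough sketch of that monitoring step, the snippet below flags cluster create, resize, and edit events that request more workers than a team's limit. The sample records, the action names in `WATCHED_ACTIONS`, the `num_workers` and `autoscale` request parameters, and the function names are all assumptions for illustration; real audit records may encode these values differently (for example, as strings or nested JSON, which the sketch tries to tolerate).

```python
import json

# Assumed action names for cluster lifecycle events; verify against your audit logs.
WATCHED_ACTIONS = {"create", "resize", "edit"}

# Hypothetical sample records; values are often delivered as strings.
CLUSTER_LOGS = [
    {"serviceName": "clusters", "actionName": "create",
     "userIdentity": {"email": "a@example.com"}, "requestParams": {"num_workers": "4"}},
    {"serviceName": "clusters", "actionName": "resize",
     "userIdentity": {"email": "b@example.com"}, "requestParams": {"num_workers": "32"}},
    {"serviceName": "clusters", "actionName": "edit",
     "userIdentity": {"email": "c@example.com"},
     "requestParams": {"autoscale": "{\"min_workers\": 2, \"max_workers\": 50}"}},
    {"serviceName": "jobs", "actionName": "runNow",
     "userIdentity": {"email": "a@example.com"}, "requestParams": {}},
]


def requested_workers(params):
    """Return the largest worker count a request could reach, or None if unknown."""
    autoscale = params.get("autoscale")
    if autoscale:
        if isinstance(autoscale, str):
            autoscale = json.loads(autoscale)  # nested JSON delivered as a string
        return int(autoscale["max_workers"])
    if params.get("num_workers") is not None:
        return int(params["num_workers"])
    return None


def oversized_cluster_events(logs, max_workers):
    """Return (user, action, workers) for cluster events above the worker limit."""
    flagged = []
    for rec in logs:
        if rec.get("serviceName") != "clusters":
            continue
        if rec.get("actionName") not in WATCHED_ACTIONS:
            continue
        workers = requested_workers(rec.get("requestParams") or {})
        if workers is not None and workers > max_workers:
            flagged.append((rec["userIdentity"]["email"], rec["actionName"], workers))
    return flagged


flagged = oversized_cluster_events(CLUSTER_LOGS, max_workers=10)
print(flagged)
```

The flagged list could then feed a notification service so that team owners hear about oversized clusters soon after they are requested.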

Cloud Provider Infrastructure Logs

Databricks logging allows security and admin teams to demonstrate conformance to data governance standards within or from a Databricks workspace. Customers, especially those in regulated industries, also need records of activities like:

- User access control to cloud data storage

- Cloud Identity and Access Management roles

- User access to cloud network and compute

- And much more...

Databricks audit logs and integrations with different cloud provider logs provide the necessary proof to meet varying degrees of compliance. We’ll now discuss some of the scenarios where such integrations could be useful.

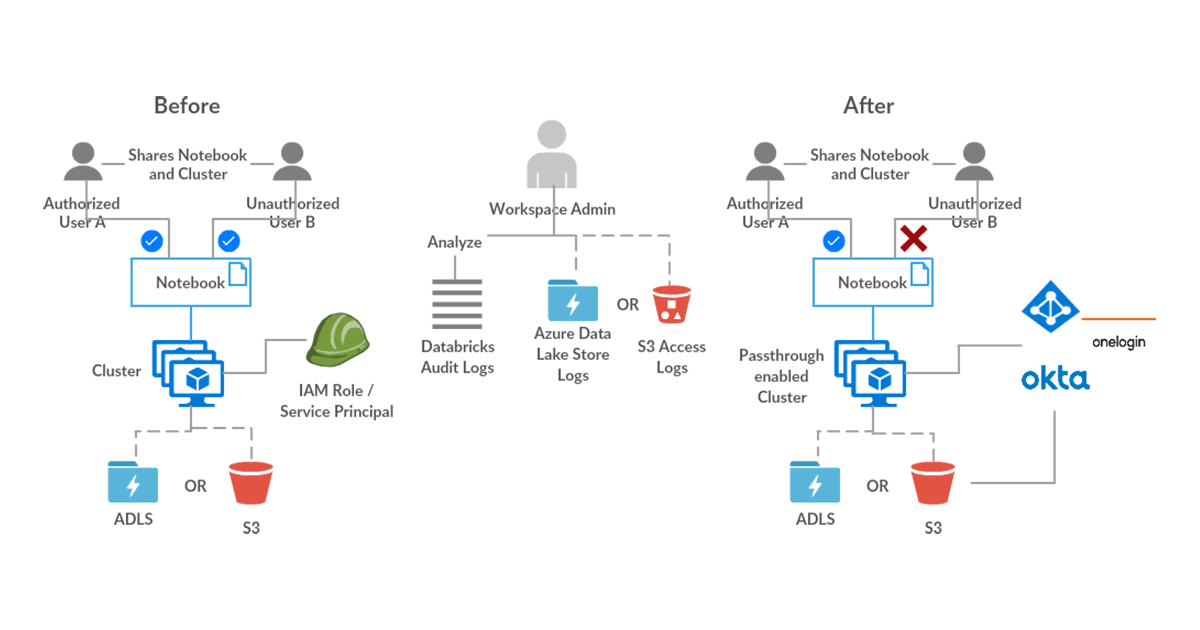

Data Access Security Controls

Issue: A healthcare company wants to ensure that only verified or allowed users access its sensitive data. This data is in a cloud storage bucket to which a group of verified users has access. An issue could occur if a verified user shares the Databricks notebook and the cluster used to access this data with a non-verified user.

Vulnerability: The non-verified user could access the sensitive data and potentially use it for nefarious purposes. Administrators need to ensure that there are no loopholes in the data access security controls and verify this with an audit of who is accessing the cloud storage.

Solution: The admin could require that Databricks users access the cloud storage only from a passthrough-enabled (Azure / AWS) cluster. This ensures that even if a user accidentally shares access to this cluster with a non-verified user, that user will not be able to access the underlying data. An audit of which user is accessing which file or folder can be produced using the cloud storage access logs capability (Azure / AWS). These logs can serve as evidence that only verified users are accessing the classified data, which helps meet compliance requirements.

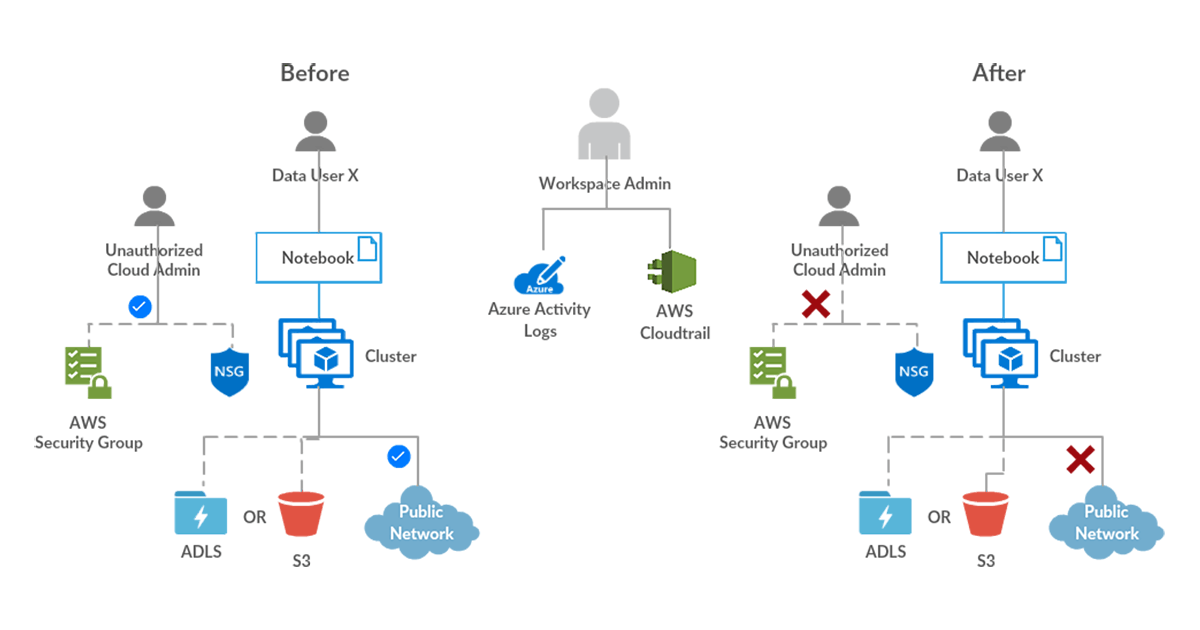
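To illustrate the audit step, here is a minimal sketch that checks storage access records against a list of verified users. Cloud storage access logs differ in format between providers, so the records below use a hypothetical, already-normalized shape (`principal`, `operation`, `path`); the field names, sample values, and the `unverified_access` helper are all assumptions made for this example.

```python
# Hypothetical allow-list of verified users.
VERIFIED_USERS = {"alice@example.com", "bob@example.com"}

# Hypothetical, normalized storage access records. Real provider logs use
# different field names and would need to be parsed into this shape first.
STORAGE_ACCESS = [
    {"principal": "alice@example.com", "operation": "GetObject",
     "path": "phi/records/2020/01.parquet"},
    {"principal": "mallory@example.com", "operation": "GetObject",
     "path": "phi/records/2020/01.parquet"},
    {"principal": "mallory@example.com", "operation": "GetObject",
     "path": "public/readme.txt"},
]


def unverified_access(records, verified, sensitive_prefix):
    """Return records where a non-verified principal touched a sensitive path."""
    return [
        r for r in records
        if r["path"].startswith(sensitive_prefix) and r["principal"] not in verified
    ]


violations = unverified_access(STORAGE_ACCESS, VERIFIED_USERS, "phi/")
print(violations)
```

An empty result over the audit period is the evidence a compliance reviewer would look for; any returned record points to a specific principal and path to investigate.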

Data Exfiltration Controls

Issue: A group of admins is allowed elevated permissions in the cloud account / subscription to provision Databricks workspaces and manage related cloud infrastructure such as security groups and subnets. One of these admins updates the outbound security group rules to allow extra egress locations.

Vulnerability: Users can now access the Databricks workspace and exfiltrate data to this new location that was added to the outbound security group rules.

Solution: Admins should always follow the best practices of the cloud shared responsibility model and assign elevated cloud account / subscription permissions only to a minimal set of authorized superusers. In this case, cloud provider activity logs (Azure / AWS) can be used to monitor whether such changes are being made and by whom. Additionally, admins should configure appropriate access control in the Databricks workspace (Azure / AWS) and monitor that access in Databricks Audit Logs.
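For the AWS case, a minimal sketch of that monitoring might scan CloudTrail records for changes to outbound security group rules. The sample log content, account number, and the `egress_rule_changes` helper are hypothetical, and the sketch assumes the usual CloudTrail layout of a top-level `Records` array with `eventSource`, `eventName`, `eventTime`, `userIdentity`, and `requestParameters` fields. The Azure equivalent would use the Azure activity log and is not shown here.

```python
# EC2 API calls that add or remove outbound security group rules.
EGRESS_EVENTS = {"AuthorizeSecurityGroupEgress", "RevokeSecurityGroupEgress"}

# Hypothetical CloudTrail log file content (already parsed from JSON).
TRAIL = {"Records": [
    {"eventTime": "2020-05-01T10:00:00Z", "eventSource": "ec2.amazonaws.com",
     "eventName": "AuthorizeSecurityGroupEgress",
     "userIdentity": {"arn": "arn:aws:iam::123456789012:user/admin-a"},
     "requestParameters": {"groupId": "sg-0abc"}},
    {"eventTime": "2020-05-01T10:05:00Z", "eventSource": "ec2.amazonaws.com",
     "eventName": "DescribeInstances",
     "userIdentity": {"arn": "arn:aws:iam::123456789012:user/admin-b"},
     "requestParameters": {}},
    {"eventTime": "2020-05-01T10:10:00Z", "eventSource": "ec2.amazonaws.com",
     "eventName": "AuthorizeSecurityGroupIngress",
     "userIdentity": {"arn": "arn:aws:iam::123456789012:user/admin-b"},
     "requestParameters": {"groupId": "sg-0def"}},
]}


def egress_rule_changes(trail):
    """Return (time, caller ARN, event name, security group id) for egress rule changes."""
    changes = []
    for rec in trail.get("Records", []):
        if rec.get("eventSource") != "ec2.amazonaws.com":
            continue
        if rec.get("eventName") not in EGRESS_EVENTS:
            continue
        params = rec.get("requestParameters") or {}
        identity = rec.get("userIdentity") or {}
        changes.append(
            (rec["eventTime"], identity.get("arn"), rec["eventName"], params.get("groupId"))
        )
    return changes


changes = egress_rule_changes(TRAIL)
print(changes)
```

Each returned tuple answers the two questions raised above: what was changed and by whom. Wiring the same filter into an alerting service would surface new egress locations shortly after they are added.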

Getting started with workspace auditing

Tracking workspace activity using Databricks audit logs and various cloud provider logs gives security and admin teams the insights they need to let their users access the required data while conforming to enterprise governance controls. Databricks users, in turn, can be confident that everything that needs to be audited is being audited, and that they are working in a safe and secure cloud environment. This enables more and better data-driven decisions throughout the organization. To recap, these are the types of logs worth looking into:

- Databricks Audit Logs (Azure / AWS)

- Cloud Storage Access Logs (Azure / AWS)

- Cloud Provider Activity Logs / CloudTrail (Azure / AWS)

- Virtual Network Traffic Flow Logs (Azure / AWS)

We plan to publish deep dives into how to analyze the above types of logs in the near future. Until then, please feel free to reach out to your Databricks account team for any questions.