Data Exfiltration Protection With Databricks on AWS

In this blog, you will learn a series of steps you can take to harden your Databricks deployment from a network security standpoint, reducing the risk of data exfiltration in your organization.

Data exfiltration is every company's worst nightmare, and in some cases even the largest companies never recover from it. It's one of the last steps in the cyber kill chain, and with maximum penalties under the General Data Protection Regulation (GDPR) of €20 million (~ $23 million) or 4% of annual global turnover, whichever is higher, it's arguably the most costly.

But first, let's define what data exfiltration is. Data exfiltration, or data extrusion, is a type of security breach that leads to the unauthorized transfer of data. This data often contains sensitive customer information, the loss of which can lead to massive fines, reputational damage, and an irreparable breach of trust. What makes it especially difficult to protect against is that it can be caused by both external and internal actors, and their motives can be either malicious or accidental. It can also be extremely difficult to detect, with organizations often not knowing that it's happened until their data is already in the public domain and their logo is all over the evening news.

There are many reasons why preventing data exfiltration is top of mind for organizations across industries. One that we often hear about is concern over platform-as-a-service (PaaS). Over the last few years, more and more companies have seen the benefits of adopting a PaaS model for their enterprise data and analytics needs. Outsourcing the management of your data and analytics service can certainly free up your data engineers and data scientists to deliver even more value to your organization. But if the PaaS provider requires you to store all of your data with them, or if it processes the data in their network, solving for data exfiltration can become an unmanageable problem. In that scenario, the only assurances you really have are whatever industry-standard compliance certifications they can share with you.

The Databricks Lakehouse platform enables customers to store their sensitive data in their existing AWS account and process it in their own private virtual network(s), all while preserving the PaaS nature of the fastest growing Data & AI service in the cloud. And now, following the announcement of Private Workspaces with AWS PrivateLink, in conjunction with a cloud-native managed firewall service on AWS, customers can benefit from a new data exfiltration protection architecture, one that's been informed by years of work with the world's most security-conscious customers.

Data exfiltration protection architecture

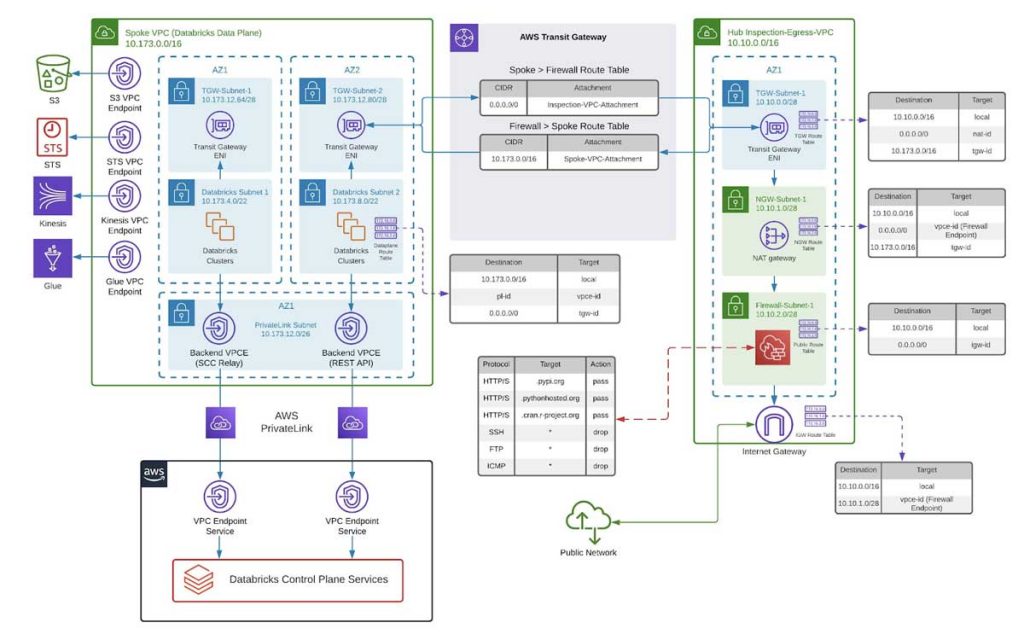

We recommend a hub and spoke topology reference architecture, powered by AWS Transit Gateway. The hub will consist of a central inspection and egress virtual private cloud (VPC), while the Spoke VPC houses federated Databricks workspaces for different business units or segregated teams. In this way, you create your own version of a centralized deployment model for your egress architecture, as is recommended for large enterprises.

A high-level view of this architecture and the steps required to implement it are provided below:

- Deploy a Databricks Workspace into your own Spoke VPC

- Set up VPC endpoints for in-scope AWS Services in the Spoke VPC

- (Optional) Set up AWS Glue or an External Hive Metastore

- Create a Central Inspection/Egress VPC

- Deploy AWS Network Firewall to the Inspection/Egress VPC

- Link the Hub & Spoke with AWS Transit Gateway

- Validate Deployment

- Clear up the Spoke VPC resources

Secure AWS Databricks deployment details

Step 1: Deploy a Databricks Workspace in your own spoke VPC

Databricks enterprise security and admin features allow you to deploy Databricks into your own Customer Managed VPC, which gives you greater flexibility and control over the configuration of your spoke architecture. You can also leverage our feature-rich integration with HashiCorp Terraform to create or manage deployments via Infrastructure-as-Code, so that you can rinse and repeat the operation across the wider organization.

Prior to deploying the workspace, you'll need to create the following prerequisite resources in your AWS account:

- A cross-account IAM role

- An S3 bucket (used for the default storage)

- A Customer Managed VPC that meets the VPC requirements

- VPC Endpoints in their own dedicated subnet for the Databricks backend services (one each for the Web Application and SCC Relay). Please see the Enable AWS PrivateLink documentation for full details, including the endpoint service names for your target region.

Once you've done that, you would need to register the VPC Endpoints for Databricks backend services by following steps 3-6 of the Enable AWS PrivateLink documentation, before creating a new workspace using the workspace API.

In the example below, a Databricks workspace has been deployed into a spoke VPC with a CIDR range of 10.173.0.0/16 and two subnets in different availability zones with the ranges 10.173.4.0/22 and 10.173.8.0/22. VPC Endpoints for the Databricks backend services have also been deployed into a dedicated subnet with a smaller CIDR range: 10.173.12.0/26. You can use these IP ranges to follow the deployment steps and diagrams below.
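Before creating anything, it can help to sanity-check the address plan. The following is a minimal sketch using only the Python standard library; the CIDR ranges come from the example above, while the subnet names are hypothetical labels chosen for illustration.

```python
import ipaddress

# CIDR ranges from the example above; the dictionary keys are hypothetical labels.
SPOKE_VPC = ipaddress.ip_network("10.173.0.0/16")
SUBNETS = {
    "workspace-subnet-1": ipaddress.ip_network("10.173.4.0/22"),
    "workspace-subnet-2": ipaddress.ip_network("10.173.8.0/22"),
    "privatelink-subnet": ipaddress.ip_network("10.173.12.0/26"),
}


def validate_layout(vpc, subnets):
    """Return a list of problems; an empty list means the layout is consistent."""
    problems = []
    items = list(subnets.items())
    # Every subnet must sit inside the VPC range.
    for name, net in items:
        if not net.subnet_of(vpc):
            problems.append(f"{name} ({net}) is outside {vpc}")
    # No two subnets may share addresses.
    for i, (name_a, net_a) in enumerate(items):
        for name_b, net_b in items[i + 1:]:
            if net_a.overlaps(net_b):
                problems.append(f"{name_a} overlaps {name_b}")
    return problems


print(validate_layout(SPOKE_VPC, SUBNETS) or "layout OK")
```

If you substitute your own ranges, any subnet that falls outside the VPC or collides with another subnet is reported by name.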

Step 2: Set up VPC endpoints for in-scope AWS Services in the Spoke VPC

Over the last decade, there have been many well-publicized data breaches from incorrectly configured cloud storage containers. So, in terms of major threat vectors and mitigating them, there's no better place to start than by setting up your VPC Endpoints.

As well as setting up your VPC endpoints, it's also well worth considering how these might be locked down further. Amazon S3 has a host of ways you can further protect your data, and we recommend you use these wherever possible.

In the AWS console:

- Go to Services > Virtual Private Cloud > Endpoints.

- Select Create endpoint and create VPC endpoints for S3, STS and Kinesis, choosing the VPC, subnets/route table and (where applicable) Security Group of the Customer Managed VPC created as part of Step 1 above:

| Service Name | Endpoint Type | Policy | Security Group |

| com.amazonaws. | Gateway | Leave as "Full Access" for now | N/A |

| com.amazonaws. | Interface | Leave as "Full Access" for now | The Security Group for the Customer Managed VPC created above |

| com.amazonaws. | Interface | Leave as "Full Access" for now | The Security Group for the Customer Managed VPC created above |

Note - If you want to add VPC endpoint policies so that users can only access the AWS resources that you specify, please contact your Databricks account team as you will need to add the Databricks AMI and container S3 buckets to the Endpoint Policy for S3.

Please note that applying a regional endpoint to your VPC will prevent cross-region access to any AWS services, for example S3 buckets in other AWS regions. If cross-region access is required, you will need to allow-list the global AWS endpoints for S3 and STS in the AWS Network Firewall rules below.
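As a rough illustration of the endpoint plan in this step, the sketch below builds one entry per service for a given region. It assumes that AWS endpoint service names follow the pattern com.amazonaws.REGION.SERVICE and that the suffixes for S3, STS and Kinesis are s3, sts and kinesis-streams; these suffixes are our assumption rather than something stated in this post, so confirm them in the console for your region. The security group ID is a made-up placeholder.

```python
# Endpoint type per service, mirroring the table above. The service suffixes
# are assumptions based on AWS naming conventions, not taken from this post.
ENDPOINT_TYPES = {
    "s3": "Gateway",
    "sts": "Interface",
    "kinesis-streams": "Interface",
}


def endpoint_plan(region, security_group_id):
    """Return one planned VPC endpoint per in-scope AWS service."""
    plan = []
    for suffix, endpoint_type in ENDPOINT_TYPES.items():
        plan.append({
            "ServiceName": f"com.amazonaws.{region}.{suffix}",
            "VpcEndpointType": endpoint_type,
            # Gateway endpoints attach to route tables, so no security group applies.
            "SecurityGroupId": security_group_id if endpoint_type == "Interface" else None,
        })
    return plan


# "sg-0123456789abcdef0" is a hypothetical security group ID.
for entry in endpoint_plan("eu-west-1", "sg-0123456789abcdef0"):
    print(entry)
```

The point of the structure is the asymmetry: the gateway endpoint is wired in through route tables, while the interface endpoints take the security group of the Customer Managed VPC.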

Step 3: (Optional) set up AWS Glue or an external metastore

For data cataloging and discovery, you can either leverage a managed Hive Metastore running in the Databricks Control Plane, host your own, or use AWS Glue. The steps for setting these up are fully documented in the links below.

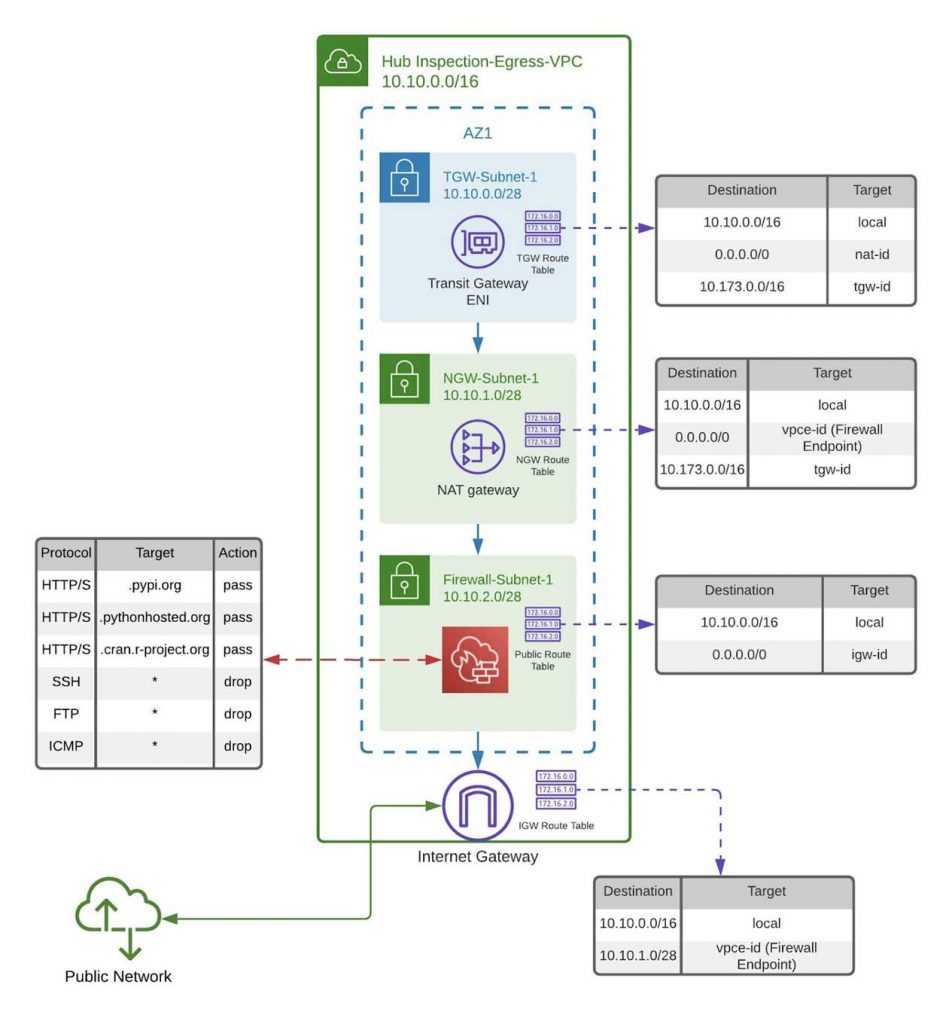

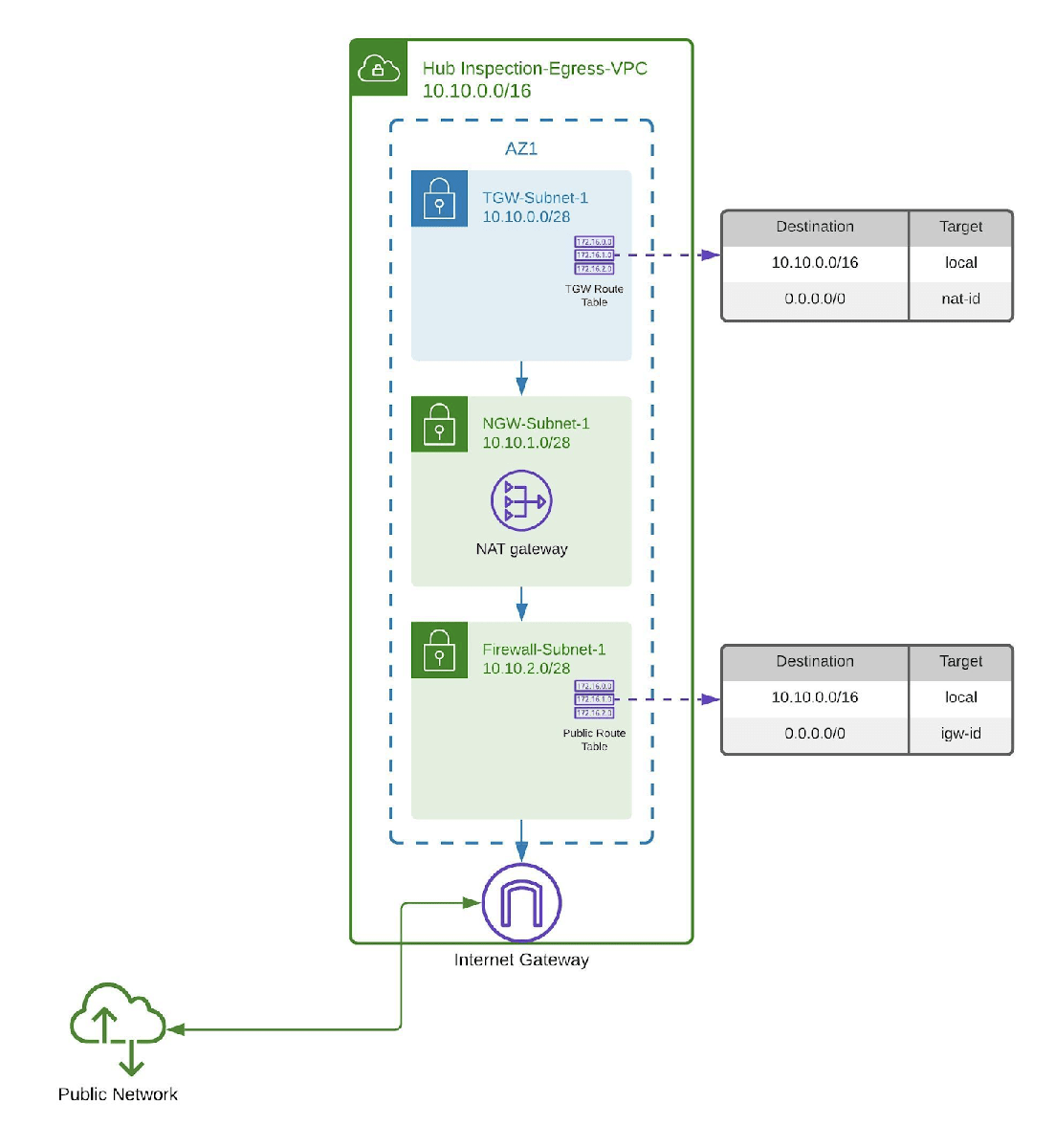

Step 4: Create a Central Inspection/Egress VPC

Next, you'll create a central inspection/egress VPC, which once we've finished should look like this:

For simplicity, we'll demonstrate the deployment into a single availability zone. For a high availability solution, you would need to replicate this deployment across each availability zone within the same region.

- Go to Your VPCs and select Create VPC

- As per the Inspection VPC diagram above, create a VPC called "Inspection-Egress-VPC" with the CIDR range 10.10.0.0/16

- Go to Subnets and select Create subnet

- As per the Inspection-Egress VPC diagram above, create 3 subnets in the above VPC with the CIDR ranges 10.10.0.0/28, 10.10.1.0/28 and 10.10.2.0/28. Call them TGW-Subnet-1, NGW-Subnet-1 and Firewall-Subnet-1 respectively

- Go to Security Groups and select Create security group. Create a new Security Group for the Inspection/Egress VPC as follows:

| Name | Description | Inbound rules | Outbound rules |

| Inspection-Egress-VPC-SG | SG for the Inspection/Egress VPC | Add a new rule for All traffic from 10.173.0.0/16 (the Spoke VPC) | Leave as All traffic to 0.0.0.0/0 |

Because you're going from private to public networks you will need to add both a NAT and Internet Gateway. This helps from a security point of view because the NAT will sit on the trusted side of the AWS Network Firewall, giving an additional layer of protection (a NAT GW not only gives us a single external IP address, it will also refuse unsolicited inbound connections from the internet).


- Go to Internet Gateways and select Create internet gateway

- Enter a name like "Egress-IGW" and select Create internet gateway

- On the Egress-IGW page, select Actions > Attach to VPC

- Select Inspection-Egress-VPC and then click Attach internet gateway

- Go to Route Tables and select Create route table

- Create a new route table called "Public Route Table" and associate it with the Inspection-Egress-VPC created above

- Select the route table > Routes > Edit routes and add a new route to Destination 0.0.0.0/0 with a Target of igw-* (if you start typing it, Egress-IGW created above should appear)

- Select Save routes and then Subnet associations > Edit subnet associations

- Add an association to Firewall-Subnet-1 created above

- Go to NAT Gateways and select Create NAT gateway

- Complete the NAT gateway settings page as follows:

| Name | Subnet | Elastic IP allocation ID |

| Egress-NGW-1 | NGW-Subnet-1 | Allocate Elastic IP |

- Select Create NAT gateway

- Go to Route Tables and select Create route table

- Create a new route table called "TGW Route Table" and associate it with the Inspection-Egress-VPC created above

- Select the route table > Routes > Edit routes and add a new route to Destination 0.0.0.0/0 with a Target of nat-* (if you start typing it, Egress-NGW-1 created above should appear)

- Select Save routes and then Subnet associations > Edit subnet associations

- Add an association to TGW-Subnet-1 created above

At the end of this step, your central inspection/egress VPC should look like this:

Step 5: Deploy AWS Network Firewall to the Inspection/Egress VPC

Now that you've created the networks, it's time to deploy and configure your AWS Network Firewall.

- Go to Firewalls and select Create firewall

- Create a Firewall as follows (Select Create and associate an empty firewall policy under Associated firewall policy):

| Name | VPC | Firewall subnets | New firewall policy name |

| Hub-Inspection-Firewall | Inspection-Egress-VPC | Firewall-Subnet-1 | Egress-Policy |

- Go to Firewall details and scroll down to Firewall endpoints. Make a note of the Endpoint ID. You'll need this later to route traffic to and from the firewall.

In order to configure our firewall rules, you're going to use the AWS CLI. The reason for this is that in order for AWS Network Firewall to work in a hub & spoke model, you need to provide it with a HOME_NET variable - that is the CIDR ranges of the networks you want to protect. Currently, this is only configurable via the CLI.

- Download, install and configure the AWS CLI.

- Test that it works as expected by running the command

aws network-firewall list-firewalls

- Create a JSON file for your allow-list rules. A template for all of the files you need to create can be found on GitHub here. You'll need to replace the capitalized placeholder values in the template. For example, the two CIDR range placeholders should be replaced with the CIDR ranges for your Customer Managed VPC and central Inspection/Egress VPC as appropriate.

- In the example below, we've included the managed Hive Metastore URL. If you chose to use Glue or host your own in step 3, this can be omitted.

- Note also that the HOME_NET variable should contain all of your spoke CIDR ranges, as well as the CIDR range of the Inspection/Egress VPC itself. Save the file as "allow-list-fqdns.json". An example of a valid rule group configuration for the eu-west-1 region would be as follows:

- Use the AWS create-rule-group command to create a rule group, for example:

aws network-firewall create-rule-group --rule-group-name Databricks-FQDNs --rule-group file://allow-list-fqdns.json --type STATEFUL --capacity 100
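To make the shape of the allow-list file more concrete, here is a minimal sketch that generates a rule group document with a HOME_NET variable and a domain allow-list. The field names follow the AWS Network Firewall rule group schema as we understand it, so check them against the GitHub template and the AWS documentation before use. The two CIDR ranges are the spoke and Inspection/Egress ranges used in this post; the FQDNs are hypothetical placeholders and must be replaced with the URLs required for your region.

```python
import json


def build_allow_list(home_net_cidrs, fqdns):
    """Build a stateful domain-list rule group that only allows the given FQDNs."""
    return {
        "RuleVariables": {
            # HOME_NET lists every network the firewall should treat as protected.
            "IPSets": {"HOME_NET": {"Definition": list(home_net_cidrs)}}
        },
        "RulesSource": {
            "RulesSourceList": {
                # Inspect both the TLS SNI (HTTPS) and the HTTP Host header.
                "TargetTypes": ["TLS_SNI", "HTTP_HOST"],
                "Targets": list(fqdns),
                "GeneratedRulesType": "ALLOWLIST",
            }
        },
    }


rule_group = build_allow_list(
    home_net_cidrs=["10.173.0.0/16", "10.10.0.0/16"],
    # Hypothetical FQDNs for illustration only.
    fqdns=["workspace.example.com", "relay.example.com"],
)
print(json.dumps(rule_group, indent=2))
```

Redirecting the output to allow-list-fqdns.json gives a file in the shape expected by the create-rule-group command above. Generating it from code makes it easier to keep HOME_NET in sync as you add more spoke VPCs.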

Finally, add some basic deny rules to cater for common firewall scenarios such as preventing the use of protocols like SSH/SFTP, FTP and ICMP. Create another JSON file, this time called "deny-list.json". An example of a valid rule group configuration would be as follows:

- Again, use the AWS create-rule-group command to create a rule group, for example:

aws network-firewall create-rule-group --rule-group-name Deny-Protocols --rule-group file://deny-list.json --type STATEFUL --capacity 100
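The deny rules can be sketched in the same way. The example below emits one stateful DROP rule per protocol, using the structured StatefulRules form of the rule group schema as we understand it. The protocol list reflects the ones named above (SFTP runs over SSH, so it is covered by the SSH rule); the sid numbering is an arbitrary choice for illustration, and the exact field values should be verified against the GitHub template.

```python
import json

# Protocols named in this step; SFTP is carried over SSH.
DENIED_PROTOCOLS = ["SSH", "FTP", "ICMP"]


def build_deny_list(protocols):
    """Build a stateful rule group that drops all traffic for each protocol."""
    rules = []
    for sid, protocol in enumerate(protocols, start=1):
        rules.append({
            "Action": "DROP",
            "Header": {
                "Protocol": protocol,
                "Source": "ANY",
                "SourcePort": "ANY",
                "Direction": "ANY",
                "Destination": "ANY",
                "DestinationPort": "ANY",
            },
            # Each rule needs a unique signature ID; sequential IDs are an assumption.
            "RuleOptions": [{"Keyword": "sid", "Settings": [str(sid)]}],
        })
    return {"RulesSource": {"StatefulRules": rules}}


print(json.dumps(build_deny_list(DENIED_PROTOCOLS), indent=2))
```

As with the allow-list, the output can be saved as deny-list.json and passed to the create-rule-group command above.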

Now add the following rule groups to the Egress-Policy created above.


- Go to Firewalls and select Firewall policies

- Select the Egress-Policy created above

- In Stateless default actions, select Edit and change Choose how to treat fragmented packets to Use the same actions for all packets

- You should now have Forward to stateful rule groups for all types of packets

- Scroll down to Stateful rule groups and select Add rule groups > Add stateful rule groups to the firewall policy

- Select both rule groups created above (Databricks-FQDNs and Deny-Protocols) and then select Add stateful rule group

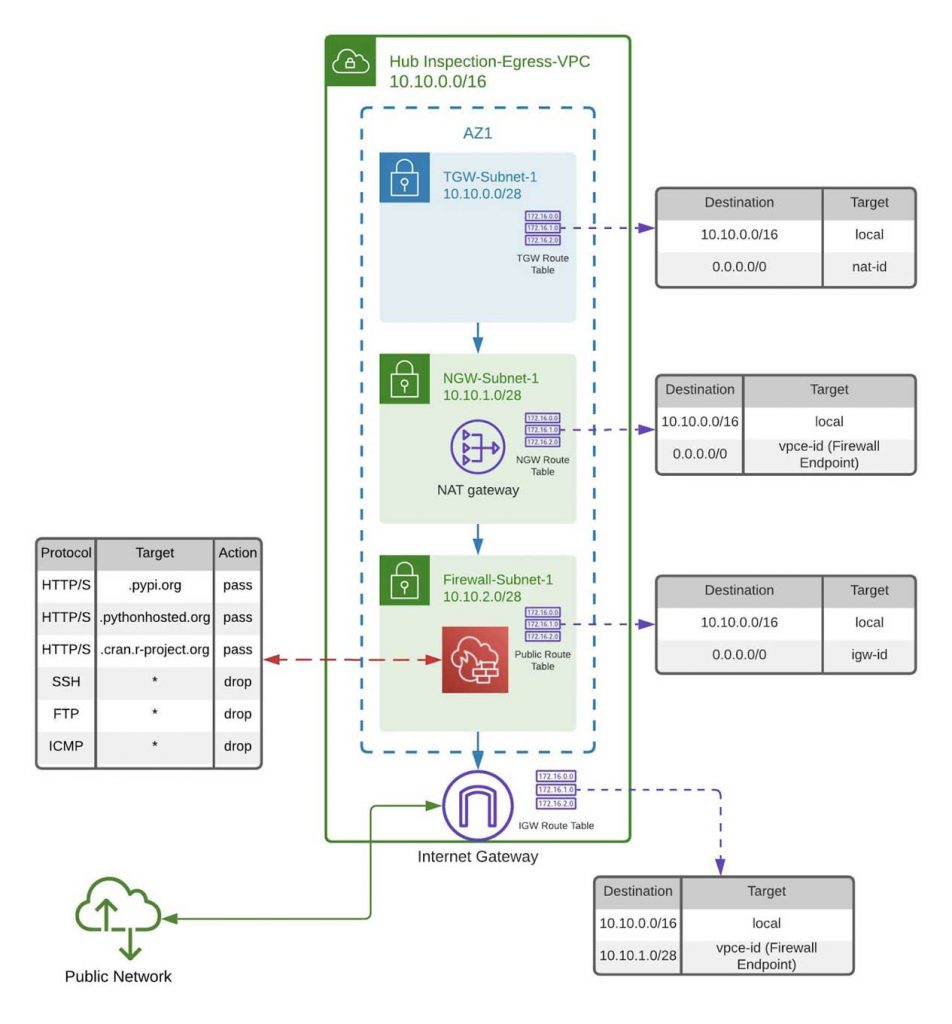

Our AWS Network Firewall is now deployed and configured. All you need to do now is route traffic to it.

- Go to Route Tables and select Create route table

- Create a new route table called "NGW Route Table" and associate it with the Inspection-Egress-VPC created above

- Select the route table > Routes > Edit routes and add a new route to Destination 0.0.0.0/0 with a Target of vpce-* (if you start typing it, the VPC endpoint id for the firewall created above should appear).

- Select Save routes and then Subnet associations > Edit subnet associations

- Add an association to NGW-Subnet-1 created above

- Select Create route table again

- Create a new route table called "Ingress Route Table" and associate it with the Inspection-Egress-VPC created above

- Select the route table > Routes > Edit routes and add a new route to Destination 10.10.1.0/28 (NGW-Subnet-1 created above) with a Target of vpce-* (if you start typing it, the VPC endpoint id for the firewall created above should appear).

- Select Save routes and then Edge Associations > Edit edge associations

- Add an association to the Egress-IGW created above

These steps walk through creating a firewall configuration that restricts outbound HTTP/S traffic to an approved set of Fully Qualified Domain Names (FQDNs). So far, this blog has focused a lot on this last line of defense, but it's also worth taking a step back and considering the multi-layered approach taken here. For example, the security group for the Spoke VPC only allows outbound traffic. Nothing can access this VPC unless it is in response to a request that originates from that VPC. This approach is enabled by the Secure Cluster Connectivity feature offered by Databricks and allows us to protect resources from the inside out.

At the end of this step, your central inspection/egress VPC should look like this:

Now that our spoke and inspection/egress VPCs are ready to go, all you need to do is link them all together, and AWS Transit Gateway is the perfect solution for that.

Step 6: Link the spoke, inspection and hub VPCs with AWS Transit Gateway

First, let's create a Transit Gateway and link our Databricks data plane via TGW subnets:


- Go to Transit Gateways > Create Transit Gateway

- Enter a Name tag of "Hub-TGW" and uncheck the following:

- Default route table association

- Default route table propagation

- Select Create Transit Gateway

- Go to Subnets and select Create subnet

- Create a new subnet for each availability zone in the Customer Managed VPC with the next available CIDR ranges (for example 10.173.12.64/28 and 10.173.12.80/28) and name them appropriately (for example "TGW-Subnet-1" and "TGW-Subnet-2")

- Go to Transit Gateway Attachments > Create Transit Gateway Attachment

- Complete the Create Transit Gateway Attachment page as follows:

| Transit Gateway ID | Attachment type | Attachment name tag | VPC ID | Subnet IDs |

| Hub-TGW | VPC | Spoke-VPC-Attachment | Customer Managed VPC created above | TGW-Subnet-1 and TGW-Subnet-2 created above |

- Select Create attachment
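The "next available CIDR ranges" for the Transit Gateway subnets can be worked out by hand, but a small helper avoids arithmetic slips. This is a sketch under one assumption: new subnets are carved out immediately after the highest range already allocated in the spoke VPC, which is how the example ranges above were chosen.

```python
import ipaddress


def next_free_subnets(vpc, allocated, prefix_len, count):
    """Return `count` /prefix_len networks starting after the highest allocated range."""
    highest_end = max(int(net.broadcast_address) for net in allocated)
    block = 2 ** (32 - prefix_len)
    # Round up to the next address aligned on a /prefix_len boundary.
    start = ((highest_end + block) // block) * block
    result = []
    for i in range(count):
        first = ipaddress.ip_address(start + i * block)
        net = ipaddress.ip_network(f"{first}/{prefix_len}")
        if not net.subnet_of(vpc):
            raise ValueError(f"{net} does not fit inside {vpc}")
        result.append(net)
    return result


spoke_vpc = ipaddress.ip_network("10.173.0.0/16")
in_use = [
    ipaddress.ip_network("10.173.4.0/22"),
    ipaddress.ip_network("10.173.8.0/22"),
    ipaddress.ip_network("10.173.12.0/26"),
]
print([str(n) for n in next_free_subnets(spoke_vpc, in_use, 28, 2)])
# ['10.173.12.64/28', '10.173.12.80/28']
```

With the ranges from Step 1 as input, the result matches the TGW-Subnet-1 and TGW-Subnet-2 ranges suggested above.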

Repeat the process to create Transit Gateway attachments for the TGW to Inspection/Egress-VPC:

- Select Create Transit Gateway Attachment

- Complete the Create Transit Gateway Attachment page as follows:

| Transit Gateway ID | Attachment type | Attachment name tag | VPC ID | Subnet IDs |

| Hub-TGW | VPC | Inspection-Egress-VPC-Attachment | Inspection-Egress-VPC | TGW-Subnet-1 |

- Select Create attachment

All of the logic that determines what routes where via a Transit Gateway is encapsulated within Transit Gateway Route Tables. Next we're going to create some TGW route tables for our Hub & Spoke networks.

- Go to Transit Gateway Route Tables > Create Transit Gateway Route Table

- Give it a Name tag of "Spoke > Firewall Route Table" and associate it with Transit Gateway ID Hub-TGW

- Select Create Transit Gateway Route Table

- Repeat the process, this time creating a route table with a Name tag of "Firewall > Spoke Route Table" and again associating it with Hub-TGW

Now associate these route tables and, just as importantly, create some routes:


- Select "Spoke > Firewall Route Table" created above > the Association tab > Create association

- In Choose attachment to associate, select Spoke-VPC-Attachment and then Create association

- For the same route table, select the Routes tab and then Create static route

- In CIDR enter 0.0.0.0/0 and in Choose attachment select Inspection-Egress-VPC-Attachment. Select Create static route.


- Select "Firewall > Spoke Route Table" created above > the Association tab > Create association

- In Choose attachment to associate, select Inspection-Egress-VPC-Attachment and select Create association

- Select the Routes tab for "Firewall > Spoke Route Table"

- Select Create static route

- In CIDR, enter 10.173.0.0/16 and in Choose attachment, select Spoke-VPC-Attachment. Select Create static route. This route will be used to return traffic to the Spoke-VPC.

The Transit Gateway should now be set up and ready to go. All that remains is to update the route tables in each of the subnets so that traffic flows through it.

- Go to Route Tables and find the NGW Route Table that you created in the Inspection-Egress-VPC during Step 5 earlier

- Select the Routes tab > Edit routes and add a new route with a Destination of 10.173.0.0/16 (the Spoke-VPC) and a Target of Hub-TGW

- Select Save routes

- Now find the TGW Route Table that you created in the Inspection-Egress-VPC during Step 4 earlier

- Again, select the Routes tab > Edit routes and add a new route with a Destination of 10.173.0.0/16 (the Spoke-VPC) and a Target of Hub-TGW

- Select Save routes

- Go to Route Tables and find the route table associated with the subnets that make up your Databricks data plane. It will have been created as part of the Customer Managed VPC in Step 1 above.

- Select the Routes tab > Edit routes. You should see a route to pl-* for com.amazonaws.<region>.s3 and potentially a route to 0.0.0.0/0 to a nat-*

- Replace the target in the route for Destination 0.0.0.0/0 to be the Hub-TGW created above.
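With all of the routes in place, it can be useful to trace how a packet should travel. The sketch below models the route tables from Steps 4 to 6 as plain data and applies longest-prefix matching, which is how AWS selects a route. The "local" entries are the routes AWS adds to every VPC route table automatically; 203.0.113.10 is a documentation-only address standing in for an internet destination, and 10.10.1.5 is an assumed private IP for the NAT gateway.

```python
import ipaddress

# (destination CIDR, target) pairs per route table, as configured by the end of Step 6.
ROUTE_TABLES = {
    "Spoke data plane": [("10.173.0.0/16", "local"), ("0.0.0.0/0", "Hub-TGW")],
    "Spoke > Firewall Route Table": [("0.0.0.0/0", "Inspection-Egress-VPC-Attachment")],
    "Firewall > Spoke Route Table": [("10.173.0.0/16", "Spoke-VPC-Attachment")],
    "TGW Route Table": [
        ("10.10.0.0/16", "local"),
        ("10.173.0.0/16", "Hub-TGW"),
        ("0.0.0.0/0", "Egress-NGW-1"),
    ],
    "NGW Route Table": [
        ("10.10.0.0/16", "local"),
        ("10.173.0.0/16", "Hub-TGW"),
        ("0.0.0.0/0", "firewall endpoint"),
    ],
    "Public Route Table": [("10.10.0.0/16", "local"), ("0.0.0.0/0", "Egress-IGW")],
    "Ingress Route Table": [("10.10.0.0/16", "local"), ("10.10.1.0/28", "firewall endpoint")],
}


def lookup(table_name, destination):
    """Return the target of the most specific matching route, or None if nothing matches."""
    address = ipaddress.ip_address(destination)
    best = None
    for cidr, target in ROUTE_TABLES[table_name]:
        network = ipaddress.ip_network(cidr)
        if address in network and (best is None or network.prefixlen > best[0].prefixlen):
            best = (network, target)
    return best[1] if best else None


# Outbound: a cluster node sends a packet to an internet address.
outbound = ["Spoke data plane", "Spoke > Firewall Route Table", "TGW Route Table",
            "NGW Route Table", "Public Route Table"]
for table in outbound:
    print(f"{table}: {lookup(table, '203.0.113.10')}")

# Return: the reply reaches the IGW addressed to the NAT gateway (assumed 10.10.1.5).
print(f"Ingress Route Table: {lookup('Ingress Route Table', '10.10.1.5')}")
```

Reading the output top to bottom gives the intended egress path: spoke subnet, Transit Gateway, NAT gateway, firewall endpoint, internet gateway. The final line shows why the Ingress Route Table matters: without its more specific route, return traffic would reach the NAT gateway without passing back through the firewall.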

Step 7: Validate the deployment

To ensure there are no errors, we recommend some thorough testing before handing the environment over to end-users.

First you need to create a cluster. If that works, you can be confident that your connection to the Databricks secure cluster connectivity relay works as expected.

Next, check out Get started as a Databricks Workspace user, particularly the Explore the Quickstart Tutorial notebook, as this is a great way to test the connectivity to a number of different sources, from the Hive Metastore to S3.

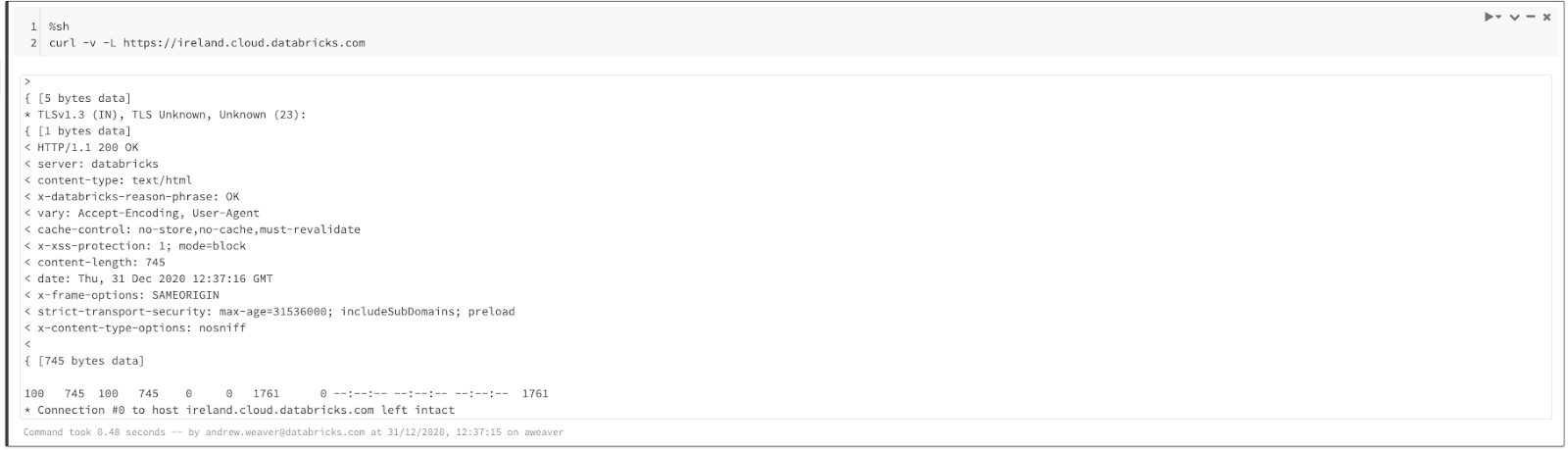

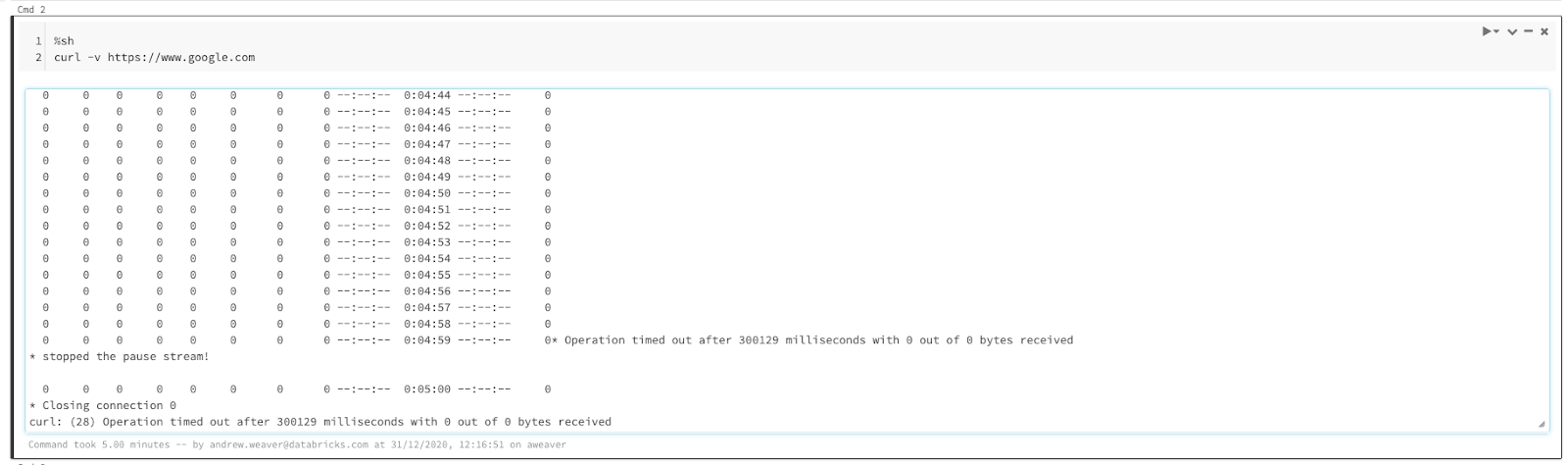

As an additional test, you could use %sh in a notebook to invoke curl and test connectivity to each of the required URLs.

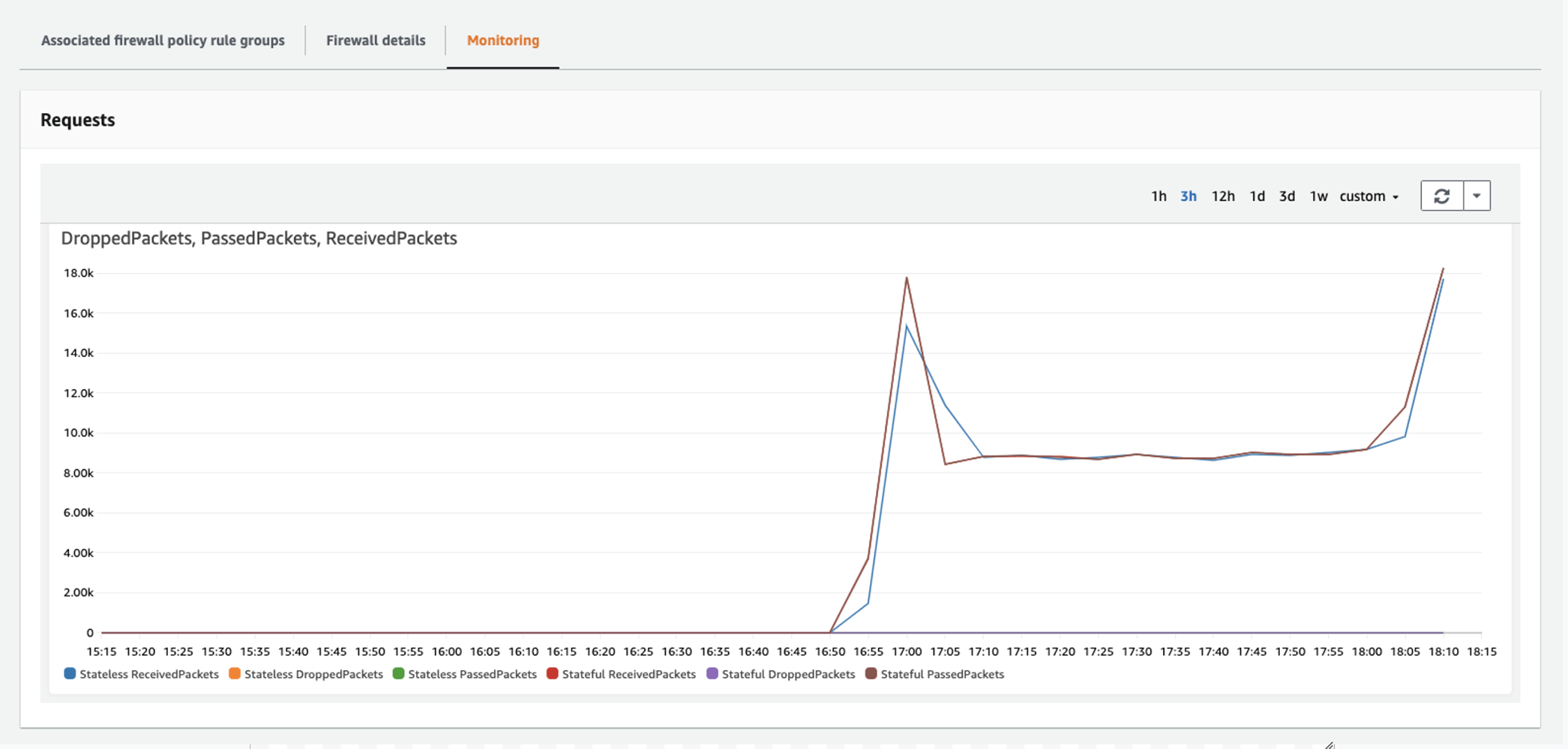

Now go to Firewalls in the AWS console and select the Hub-Inspection-Firewall you created above. On the Monitoring tab you should see the traffic generated above being routed through the firewall:

If you want a more granular level of detail, you can set up specific logging & monitoring configurations for your firewall, sending information about the network traffic flowing through your firewall and any actions applied to it to sinks such as CloudWatch or S3. What's more, by combining these with Databricks audit logs, you can build a 360-degree view of exactly how users are using their Databricks environment, and set up alerts on any potential breaches of the acceptable use policy.

As well as positive testing, we recommend doing some negative tests of the firewall too. For example:

HTTPS requests to the Databricks Web App URL are allowed.

Whereas HTTPS requests to google.com fail.
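The positive and negative checks can also be scripted from a notebook cell. The following is a minimal sketch, not a definitive test harness: it attempts a full TLS handshake rather than a bare TCP connection, on the assumption that domain-based rules are evaluated against the TLS SNI and so a TCP connect alone may not reveal whether a host is blocked. The workspace hostname is a hypothetical placeholder for your own Web App URL.

```python
import socket
import ssl


def probe_https(host, timeout=5.0):
    """Return True if a TLS handshake with host:443 completes within the timeout."""
    context = ssl.create_default_context()
    try:
        with socket.create_connection((host, 443), timeout=timeout) as sock:
            # server_hostname sets the SNI value that the firewall inspects.
            with context.wrap_socket(sock, server_hostname=host):
                return True
    except OSError:
        # Covers DNS failures, timeouts, resets and TLS errors.
        return False


def unexpected_results(expected, probe_fn=probe_https):
    """Return {host: actual} for every host whose reachability differs from expected."""
    mismatches = {}
    for host, should_be_allowed in expected.items():
        actual = probe_fn(host)
        if actual != should_be_allowed:
            mismatches[host] = actual
    return mismatches


# Example usage from a notebook (the first hostname is hypothetical):
# expected = {"my-workspace.example.com": True, "google.com": False}
# print(unexpected_results(expected) or "firewall behaves as expected")
```

An empty result means every allow-listed host was reachable and every other host was blocked. Passing a custom probe_fn makes it easy to swap in a different check, such as one based on curl.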

Finally, it's worth testing the "doomsday scenario" as far as data exfiltration protection is concerned: that data could be leaked to an S3 bucket outside of your account. Since the global S3 URL has not been allow-listed, attempts to connect to S3 buckets outside of your region will fail:

And if you combine this with endpoint policies for Amazon S3, you can tightly enforce which S3 buckets a user can access from Databricks within your region too.

Step 8: Clear up the Spoke VPC resources

Depending on how you set up the Customer Managed VPC, you might find that there are now some unused resources in it, namely:

- A public subnet

- A NAT GW and EIP

- An IGW

Once you have completed your testing, it should be safe to detach and delete these resources. Before you do, it's worth double-checking that your traffic is routing through the AWS Network Firewall as expected, and not via the default NAT Gateway. You can do this in any of the following ways:

- Find the Route Table that applies to the subnets in your Customer Managed VPC. Make sure that the 0.0.0.0/0 route has a target of your TGW rather than the default NAT Gateway.

- As described above, go to Firewalls in the AWS console and select the Hub-Inspection-Firewall created. On the Monitoring tab you should see all of the traffic that's been routed through the firewall.

- If you set up logging & monitoring configurations for your firewall, you can see all flow and alert log entries in either CloudWatch or S3. This should include the traffic that is being routed back to the Databricks control plane.
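The first of these checks lends itself to a quick script. The sketch below inspects a route table in the shape returned by the EC2 describe-route-tables call and reports where the default route points. The sample data and all resource IDs are hypothetical; in practice you would load the JSON output of the call for your own route table.

```python
def default_route_target(route_table):
    """Return the target ID of the 0.0.0.0/0 route, or None if there is no such route."""
    for route in route_table.get("Routes", []):
        if route.get("DestinationCidrBlock") == "0.0.0.0/0":
            # A route carries exactly one of these target fields.
            for key in ("TransitGatewayId", "NatGatewayId", "GatewayId"):
                if key in route:
                    return route[key]
    return None


def routes_via_transit_gateway(route_table):
    """Return True if the default route targets a Transit Gateway."""
    target = default_route_target(route_table)
    return target is not None and target.startswith("tgw-")


# Hypothetical sample in the shape of a describe-route-tables entry.
sample = {
    "RouteTableId": "rtb-0123456789abcdef0",
    "Routes": [
        {"DestinationCidrBlock": "10.173.0.0/16", "GatewayId": "local"},
        {"DestinationPrefixListId": "pl-0123abcd", "GatewayId": "vpce-0123456789abcdef0"},
        {"DestinationCidrBlock": "0.0.0.0/0", "TransitGatewayId": "tgw-0123456789abcdef0"},
    ],
}
print(default_route_target(sample), routes_via_transit_gateway(sample))
```

If the function reports a nat-* target instead, traffic is still leaving through the default NAT Gateway and the spoke resources should not be deleted yet.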

If your Databricks workspace continues to function as expected (for example you can start clusters and run notebook commands), you can be confident that everything is working correctly. In the event of a configuration error, you might see one of these issues:

| # | Issue | Things to check |

| 1 | Cluster creation fails after a few minutes with an error saying that it has Failed Fast | |

| 2 | Cluster creation takes a long time and eventually fails with Container Launch Failure | |

| 3 | Cluster creation takes a long time and eventually times out with Network Configuration Failure | |

If clusters won't start, and more in-depth troubleshooting is required, you could create a test EC2 instance in one of your Customer Managed VPC subnets and use commands like curl to test network connectivity to the necessary URLs.

Next Steps

You can no longer put a price on data security. The cost of lost or exfiltrated data is often just the tip of the iceberg, compounded by the cost of long-term reputational damage, regulatory backlash, loss of IP, and more.

This blog shows an example firewall configuration and how security teams can use it to restrict outbound traffic based on a set of allowed FQDNs. It's important to note, however, that a one-size-fits-all approach will not work for every organization based on risk profile or sensitivity of data. There's plenty that can be done to lock this down further. As an example, this blog has focused on how to prevent data exfiltration from the data plane, which is where the vast majority of the data is processed and resides. But you could equally implement an architecture involving Front-end (user to workspace) AWS PrivateLink connections to restrict access to locked down VMs or Amazon Workspaces, therefore helping to mitigate any risk associated with the subsets of data that are returned to the Control Plane.

Customers should always engage the right security and risk professionals in their organizations to determine the appropriate access controls for each individual use case. This guide should be seen as a starting point, not the finishing line.

The war against cybercriminals and the many cyber threats faced in this connected, data-driven world is never won, but there are step-wise approaches like protecting against data exfiltration that you can take to fortify your defense.

This blog has focused on how to prevent data exfiltration with an extra-secure architecture on AWS. But the best security is always based on a defense-in-depth approach. Learn more about the other platform features you can leverage in Databricks to protect your intellectual property, data and models. And learn how other customers are using Databricks to transform their business, and better still, how you can too!