Productionize Data Science With Repos on Databricks

Most data science solutions make data teams choose between flexibility for exploration and rigidity for production. As a result, data scientists often need to hand off their work to engineering teams that use a different technology stack and essentially rewrite it in a new environment. This is not only costly but also delays the point at which a data scientist’s work delivers value to the business.

The next-generation Data Science Workspace on Databricks navigates these trade-offs to provide an open and unified experience for modern data teams. As part of this Databricks Workspace, we are excited to announce public availability of the new Repos feature, which delivers repository-level integration with Git providers, enabling any member of the data team to follow best practices. Databricks Repos integrate with your developer toolkit and support a wide range of Git providers, including GitHub, Bitbucket, GitLab, and Microsoft Azure DevOps.

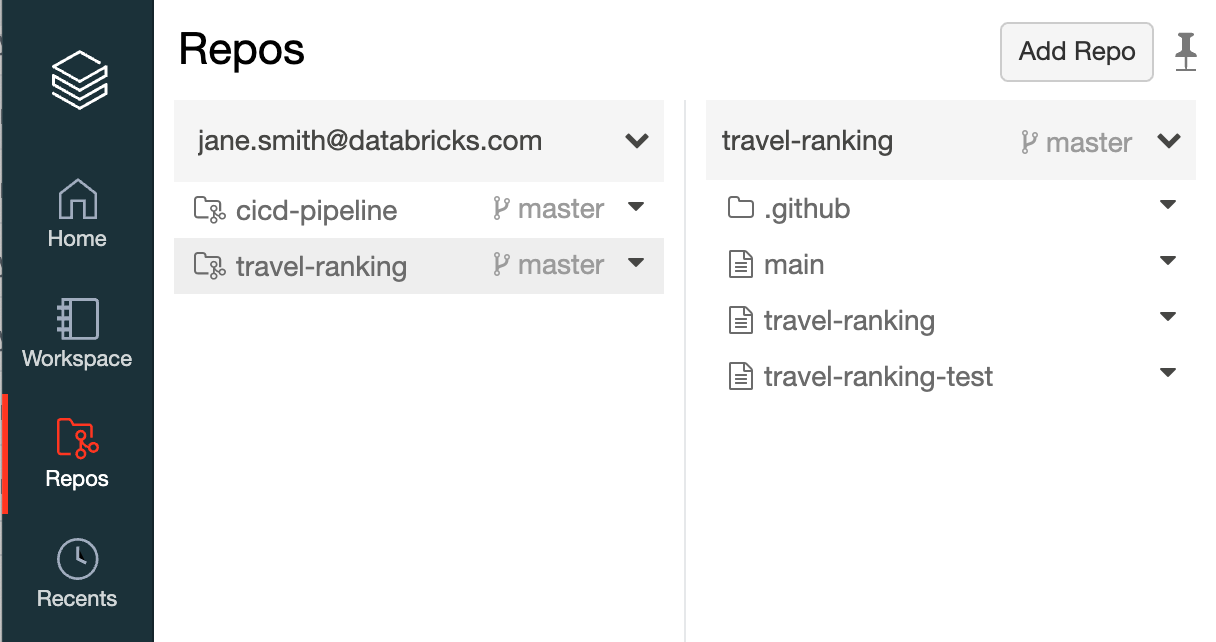

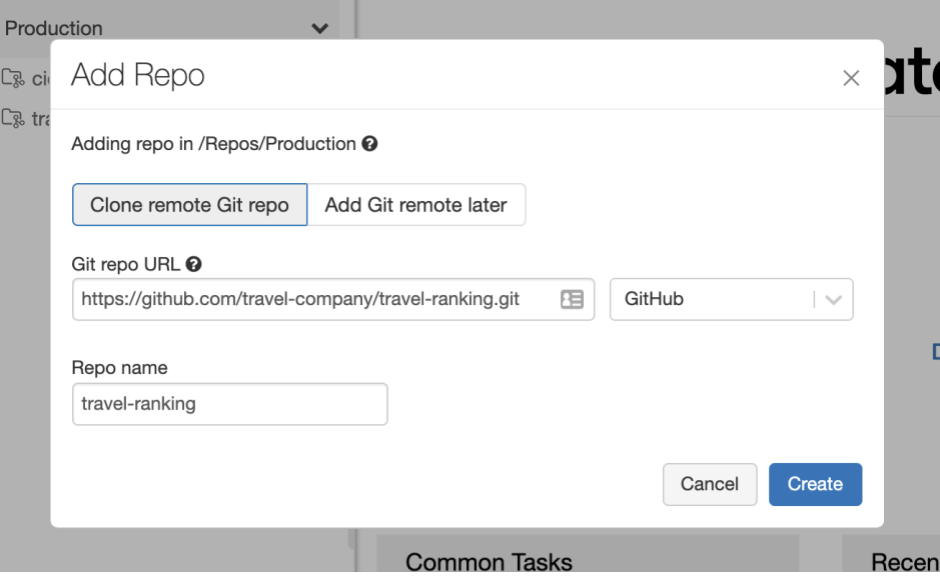

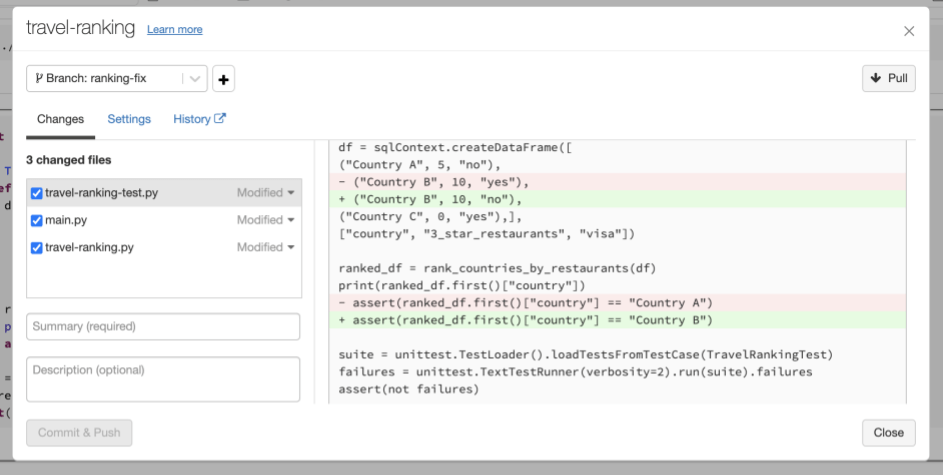

By integrating with Git, Databricks Repos provide a best-of-breed developer environment for data science and data engineering. You can enforce standards for code developed in Databricks, such as code reviews and tests, before deploying your code to production. Developers will find familiar Git functionality in Repos, including the ability to clone remote Git repos (Figure 1), manage branches, pull remote changes, and visually inspect outstanding changes before committing them (Figure 2).


With the public launch of Repos, we are adding functionality to satisfy the most demanding enterprise use cases:

- Allow lists enable admins to configure the URL prefixes of the Git repositories to which users can commit code. This ensures that code cannot accidentally be pushed to repositories that are not on the list.

- Secret detection identifies clear-text secrets in your source code before they are committed, helping data teams follow the best practice of keeping secrets in a secret manager.
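To make these two controls concrete, here is a minimal sketch of what a prefix-based allow list and a pattern-based secret scan might look like as a local pre-push check. It is not how Databricks implements either feature: the function names, the example prefixes, and the two regular expressions (an AWS-style access key ID and a hard-coded `password = "..."` assignment) are hypothetical illustrations.

```python
from __future__ import annotations

import re

# Hypothetical allow list: a remote URL is permitted only if it starts with one of these prefixes.
# The trailing slash matters: without it, "my-org" would also match "my-org-evil".
ALLOWED_PREFIXES = [
    "https://github.com/my-org/",
    "https://dev.azure.com/my-org/",
]

# Hypothetical secret patterns; real scanners ship many more rules.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_password": re.compile(r"""(?i)\bpassword\s*=\s*["'][^"']+["']"""),
}


def is_allowed_remote(url: str, prefixes: list[str]) -> bool:
    """Return True if the remote URL starts with any allowed prefix."""
    return any(url.startswith(prefix) for prefix in prefixes)


def find_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) for every line that matches a secret pattern."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


def check_push(url: str, files: dict[str, str]) -> list[str]:
    """Collect human-readable problems that should block the commit or push."""
    problems = []
    if not is_allowed_remote(url, ALLOWED_PREFIXES):
        problems.append(f"remote not on allow list: {url}")
    for path, source in files.items():
        for lineno, rule in find_secrets(source):
            problems.append(f"{path}:{lineno}: possible secret ({rule})")
    return problems
```

For example, `check_push("https://github.com/someone-else/fork", {"etl.py": 'password = "hunter2"\n'})` reports both a disallowed remote and a possible secret on line 1 of `etl.py`. Matching on URL prefixes rather than exact URLs lets an admin approve an entire organization with one entry.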

Repos can also be integrated with your CI/CD pipelines, allowing data teams to take data science and machine learning (ML) code from experimentation to production seamlessly. With the Repos API (currently in private preview; contact your Databricks representative for access), you can programmatically update a Databricks repo to the latest version of a remote branch. This makes it straightforward to implement CI/CD pipelines such as the following best-practice workflow:

- Development: Developers work on feature branches on personal checkouts of a remote repo in their user folders.

- Review & Testing: When a feature is ready for review and a PR is created, your CI/CD system can use the Repos API to automatically update a test environment in Databricks with the changes on the feature branch and then run a set of tests to validate the changes.

- Production: Finally, once all the tests have passed and the PR has been approved and merged, your CI/CD system can use the Repos API to update the production environment in Databricks with the changes. Your production jobs will now run against the latest code.
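The workflow above might be driven by a small script that a CI/CD system runs on pull-request events. This sketch assumes the `PATCH /api/2.0/repos/{repo_id}` endpoint with a JSON body such as `{"branch": "main"}`, which is how the current Databricks REST API reference describes updating a repo to the latest commit of a branch; the private-preview API mentioned here may differ, so check the documentation for your workspace. The workspace URL, repo IDs, `DATABRICKS_TOKEN` variable, and the shape of the `ci_event` dictionary are hypothetical placeholders.

```python
from __future__ import annotations

import json
import os
import urllib.request

# Hypothetical workspace URL and repo IDs; substitute your own.
WORKSPACE_URL = "https://example.cloud.databricks.com"
TEST_REPO_ID = 111  # checkout used by the "Review & Testing" stage
PROD_REPO_ID = 222  # checkout used by production jobs


def build_update_request(repo_id: int, branch: str, token: str) -> urllib.request.Request:
    """Build a PATCH request that moves a Databricks repo to the latest commit of `branch`."""
    body = json.dumps({"branch": branch}).encode("utf-8")
    return urllib.request.Request(
        url=f"{WORKSPACE_URL}/api/2.0/repos/{repo_id}",
        data=body,
        method="PATCH",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


def plan_update(ci_event: dict) -> tuple[int, str] | None:
    """Map a (hypothetical) CI event to the repo checkout and branch to update."""
    if ci_event["type"] in ("pull_request_opened", "pull_request_updated"):
        # Review & Testing: point the test checkout at the feature branch.
        return TEST_REPO_ID, ci_event["source_branch"]
    if ci_event["type"] == "pull_request_merged":
        # Production: point the production checkout at the branch the PR merged into.
        return PROD_REPO_ID, ci_event["target_branch"]
    # Other events (e.g. pushes to personal feature branches) need no update.
    return None


def handle(ci_event: dict) -> None:
    """Send the update request for an event, if one is needed."""
    plan = plan_update(ci_event)
    if plan is None:
        return
    repo_id, branch = plan
    request = build_update_request(repo_id, branch, os.environ["DATABRICKS_TOKEN"])
    with urllib.request.urlopen(request) as response:
        print(f"repo {repo_id} -> {branch}: HTTP {response.status}")
```

Keeping request construction separate from sending makes the routing logic testable without network access. Triggering the test suite after the test checkout is updated is left to the CI system and is outside this sketch.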

The Repos feature is a part of the Next Generation Workspace and, with this public release, enables data teams to easily follow best practices and accelerate the path from exploration to production.

Get started

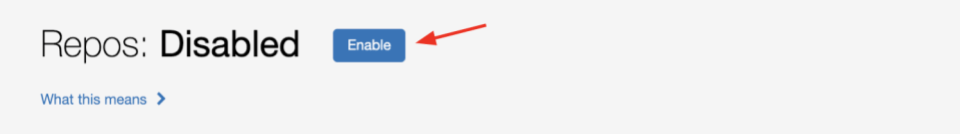

Repos are in Public Preview and can be enabled for Databricks Workspaces! To enable Repos, go to Admin Panel -> Advanced and click the “Enable” button next to “Repos.” Learn more in our developer documentation.