It’s Time to Re-evaluate Your Relationship With Hadoop

With companies forced to adapt to a remote, distributed workforce this past year, cloud adoption has accelerated at an unprecedented pace by +14% resulting in 2% or $13B above pre-pandemic forecasts for 2020 - with possibly more than $600B in on-prem to cloud migrations within the next few years. This shift to the cloud places growing importance on a new generation of data and analytics platforms to fuel innovation and deliver on enterprise digital transformation strategies. However, many organizations still struggle with the complexity, unscalable infrastructure and severe maintenance overheads of their legacy Hadoop environments and eventually sacrifice the value of their data and, in turn, risk their competitive edge. To tackle this challenge and unlock more (sometimes hidden) opportunities in their data, organizations are turning to open, simple and collaborative cloud-based data and analytics platforms like the Databricks Lakehouse Platform. In this blog, you’ll learn about the challenges driving organizations to explore modern cloud-based solutions and the role the lakehouse architecture plays in sparking the next wave of data-driven innovation.

Unfulfilled promises of Hadoop

Hadoop’s distributed file system (HDFS) was a game-changing technology when it launched and will remain an icon in the halls of data history. Because of its advent, organizations were no longer bound by the confines of relational databases, and it gave rise to modern big data storage and eventually cloud data lakes. For all its glory and fanfare leading up to 2015, Hadoop struggled to support the evolving potential of all data types – especially at enterprise scale. Ultimately, as the data landscape and accompanying business needs evolved, Hadoop struggled to continue to deliver on its promises. As a result, enterprises have begun exploring cloud-based alternatives and the rate of migration from Hadoop to the cloud is only increasing.

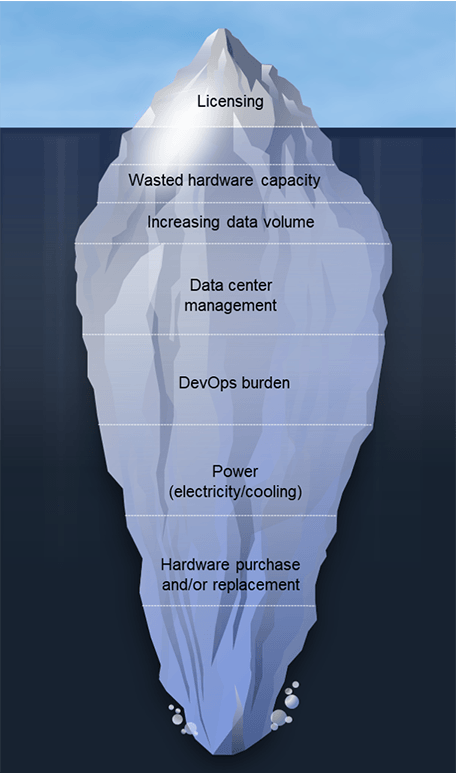

Teams migrate from Hadoop for a variety of reasons; it’s often a combination of “push” and “pull.” Limitations with existing Hadoop systems and high licensing and administration costs are pushing teams to explore alternatives. They’re also being pulled by the new possibilities enabled by modern cloud data architectures. While the architecture requirements vary by organization, we see several common factors that lead customers to realize it’s time to start saying goodbye. These include:

- Wasted hardware capacity: Over-capacity is a given in on-premises implementations so that you can scale up to your peak time needs, but the result is that much of that capacity sits idle but continues to add to the operational and maintenance costs.

- Scaling costs add up fast: Decoupling storage and compute is not possible in an on-premises Hadoop environment, so costs grow with data sets. Factor that in with the rapid digitization resulting from the COVID-19 pandemic and the global growth rate. Research indicates that the total amount of data created, captured, copied, and consumed in the world forecast to increase by 152.5% from 2020 to 2024 to 149 Zettabytes. In a hyperdata growth world, runaway costs can balloon rapidly.

- DevOps burden: Based on our customers’ experience, you can assume 4 to 8 full-time employees for every 100 nodes.

- Increased power costs: Expect to pay as much as $800 per server annually based on consumption and cooling. That’s $80K per year for a 100 node Hadoop cluster!

- New and replacement hardware costs: This accounts for ~20% of TCO, which is equal to the Hadoop clusters’ administration costs.

- Software version upgrades: These upgrades are often mandated to ensure the support contract is retained, and those projects take months at a time, deliver little new functionality and take up precious bandwidth of the data teams.

In addition to the full range of challenges above, there’s genuine concern about the long-term viability of Hadoop. In 2019, the world saw a massive unraveling within the Hadoop sphere. Google, whose seminal 2004 paper on MapReduce underpinned the creation of Apache Hadoop, stopped using MapReduce altogether, as tweeted by Google SVP of Technical Infrastructure, Urs Hölzle. There were also some very high-profile mergers and acquisitions in the world of Hadoop. Furthermore, in 2020, a leading Hadoop provider shifted its product set away from being Hadoop-centric, as Hadoop is now thought of as “more of a philosophy than a technology”. Lastly, in 2021, the Apache Software Foundation announced the retirement of ten projects from the Hadoop ecosystem. This growing collection of concerns paired with the accelerated need to digitize has encouraged many companies to re-evaluate their relationship with Hadoop.

Gartner®: Databricks Cloud Database Leader

The shift toward lakehouse architecture

A lakehouse architecture is the ideal data architecture for data-driven organizations. It combines the best qualities of data warehouses and data lakes to provide a single high-performance solution for all data workloads. Lakehouse architecture supports a variety of use cases, such as streaming data analytics to BI, data science and AI. Why do customers love the Databricks Lakehouse Platform?

- It’s simple. Unify your data, analytics and AI on one platform.

- It’s open. Unify your data ecosystem with open standards and formats.

- It’s collaborative. Unify your data teams to collaborate across the entire data and AI workflow.

A lakehouse architecture can deliver significant gains compared to legacy Hadoop environments, which “pull” companies into their cloud adoption. This also includes customers who have tried to use Hadoop in the cloud but aren’t getting the same results as expected or desired. As R. Tyler Croy, Director of Engineering at Scribd, explains “Databricks claimed an optimization of 30%–50% for most traditional Apache Spark™ workloads. Out of curiosity, I refactored my cost model to account for the price of Databricks and the potential Spark job optimizations. After tweaking the numbers, I discovered that at a 17% optimization rate, Databricks would reduce our Amazon Web Services (AWS) infrastructure cost so much that it would pay for the cost of the Databricks platform itself. After our initial evaluation, I was already sold on the features and developer velocity improvements Databricks would offer. When I ran the numbers in my model, I learned that I couldn’t afford not to adopt Databricks!”

Scribd isn’t alone; additional customers that have migrated from Hadoop to the Databricks Lakehouse Platform include:

- H&M processes massive volumes of data from over 5,000 stores in over 70 markets with millions of customers every day. Their Hadoop-based architecture created challenges for data. It became resource-intensive and costly to scale, presented data security issues, struggled to scale operations to support data science efforts from various siloed data sources and slowed down time-to-market because of significant DevOps delays. It would take a whole year to go from ideation to production. With Databricks, H&M benefits from improved operational efficiency by reducing operating costs by 70%, improving cross-team collaboration, and boosting business impact with faster time-to-insight.

- Viacom18 needs to process terabytes of daily viewer data to optimize programming. Their on-premises Hadoop data lake could not process 90 days of rolling data within SLAs, limiting their ability to deliver on business needs. With Databricks, they significantly lowered costs with faster querying times and less DevOps despite increasing data volumes. Viacom18 also improved team productivity by 26% with a fully managed platform that supports ETL, analytics and ML at scale.

- Reckitt Benckiser Group (RB) struggled with the complexity of forecasting demand across 500,000 stores. They process over 2TB of data every day across 250 pipelines. The legacy Hadoop infrastructure proved to be complex, cumbersome, costly to scale and struggled with performance. With Databricks, RB realized 10x more capacity to support business volume, 98% data compression from 80TB to 2TB, reducing operational costs, and 2x faster data pipeline performance for 24x7 jobs.

Hadoop was never built to run in cloud environments. While cloud-based Hadoop services make incremental improvements compared to their on-premises counterparts, both still lag compared to the lakehouse architecture. Both Hadoop instances yield low performance, low productivity, high costs and their inability to address more sophisticated data use cases at scale.

Future-proofing your data, analytics and AI-driven growth

Cloud migration decisions are business decisions. They force companies to take a hard look at the reality of the delivery of their current systems and evaluate what they need to achieve for both near-term and long-term goals. As AI investment continues to gain momentum, data, analytics, and technology leaders need to play a critical role in thinking beyond the existing Hadoop architecture with the question “will this get us where we need to go?”

With clarity on the goals come critical technical details, such as technology mapping, evaluating cloud resource utilization and cost-to-performance, and structuring a migration project that minimizes errors and risks. But most importantly, you need to have the data-driven conviction that it’s time to re-evaluate your relationship with Hadoop. Learn more how migration from Hadoop can accelerate business outcomes across your data use cases.

1. Source: Gartner Market Databook, Goldman Sachs Global Investment Research

Never miss a Databricks post

What's next?

Product

August 30, 2024/6 min read