Simplifying Data and ML Job Construction With a Streamlined UI

Databricks Jobs make it simple to run notebooks, JARs, and Python eggs on a schedule. Our customers use Jobs to extract and transform data (ETL), train models, and even email reports to their teams. Today, we are happy to announce a streamlined UI for Jobs, along with new features designed to make your life easier.

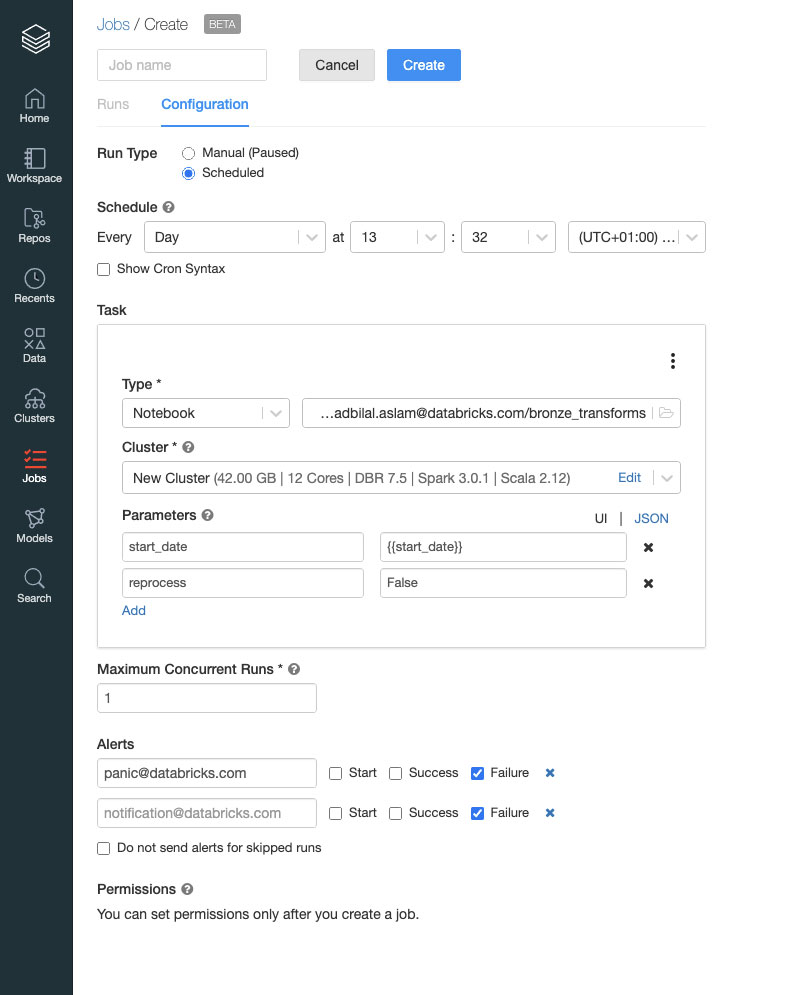

The most obvious change is that instead of a single page containing all the information, there are now two tabs: Runs and Configuration. You use the Configuration tab to define the job, while the Runs tab lists active and historical runs. This small change also made room for new features.

While doing the facelift, we added a few of them. You can now easily clone a job, which is useful if you want to try, say, a different cluster type without touching a production job. We also added the ability to pause a job’s schedule, so you can make changes to a job while preventing new runs until the changes are done. Lastly, as shown above, we added parameter variables such as {{start_date}}, which are interpreted when a job run starts and replaced with an actual value, e.g. “2021-03-17”. A handful of parameter variables are already available, and we plan to expand this list.
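To make the run-start substitution concrete, here is a minimal sketch of how `{{variable}}` placeholders in job parameters could be resolved into actual values. It is an illustration under stated assumptions, not Databricks' implementation: the function `resolve_parameters`, the example parameter names, and the choice to leave unknown variables untouched are all hypothetical. Only the `{{start_date}}` variable and its date-style value come from the text above.

```python
import re
from datetime import date

# Hypothetical sketch: the {{name}} syntax mirrors the post, but this
# substitution logic is an assumption, not Databricks' implementation.
_VARIABLE = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def resolve_parameters(params: dict[str, str], run_start: date) -> dict[str, str]:
    """Replace {{variable}} placeholders in job parameters when a run starts."""
    # Values available to placeholders; only start_date is named in the post.
    values = {"start_date": run_start.isoformat()}

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        # Assumption: unknown variables are left as-is rather than failing the run.
        return values.get(name, match.group(0))

    return {key: _VARIABLE.sub(substitute, value) for key, value in params.items()}


# Usage with hypothetical parameter names.
params = {"input_path": "/data/events/{{start_date}}", "mode": "full"}
print(resolve_parameters(params, date(2021, 3, 17)))
# {'input_path': '/data/events/2021-03-17', 'mode': 'full'}
```

Resolving placeholders once, at the moment a run starts, means each run sees a fixed value for its whole duration, and the stored job definition keeps the template unchanged for the next run.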

We are excited to be improving the experience of developing jobs while adding useful features for data engineers and data scientists. The update to the Jobs UI also sets the stage for some exciting new capabilities we will announce in the coming months. Finally, we would love to hear from you: please use the feedback button in the UI to let us know what you think. Stay tuned for more updates!
