Curating More Inclusive and Safer Online Communities With Databricks and Labelbox

This is a guest authored post by JT Vega, Support Engineering Manager, Labelbox.

While video games and digital content are a source of entertainment, connection, and fun for many people around the world, they are also frequently a destination for toxic behavior, including flaming, trolling, cyberbullying, and hate speech in user-generated content. Social media platforms and video game developers alike are turning to the latest advances in AI to fight toxic behavior online. In practice, however, AI training often begins with manual, laborious labeling, where teams sift through piles of toxic and benign user comments to categorize content for model training.

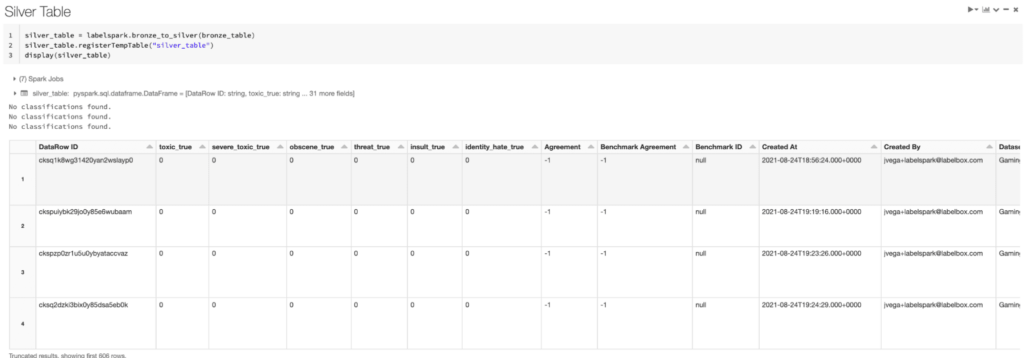

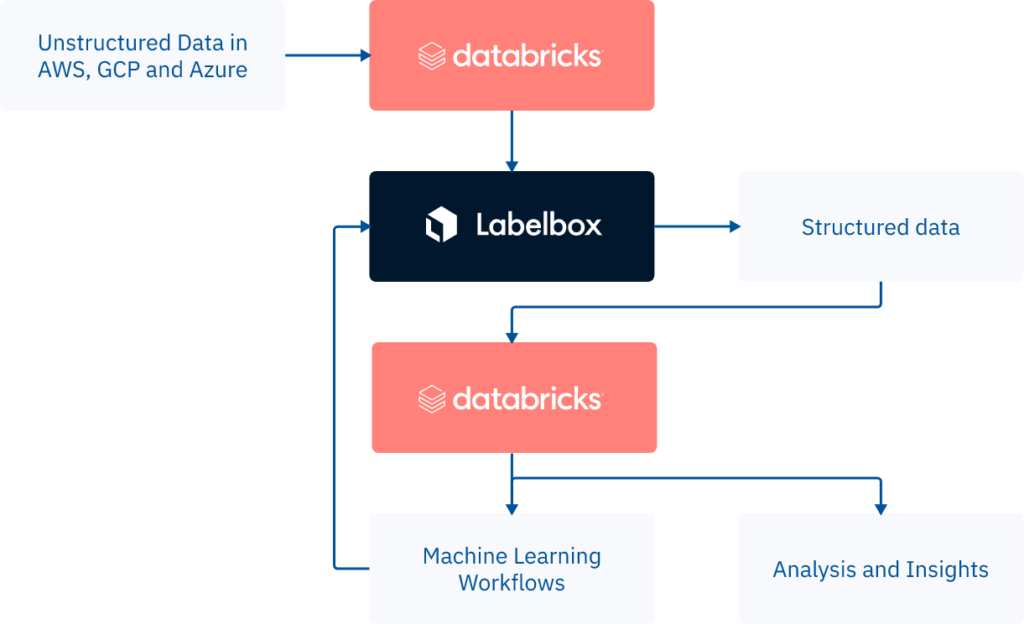

Finding faster and more cost-effective ways to convert unstructured text data into structured, labeled data makes it possible to support more advanced use cases for identifying and removing unwanted content. For the business, this means making human moderators more effective and efficient while building online communities where members can engage with each other free from harassment.

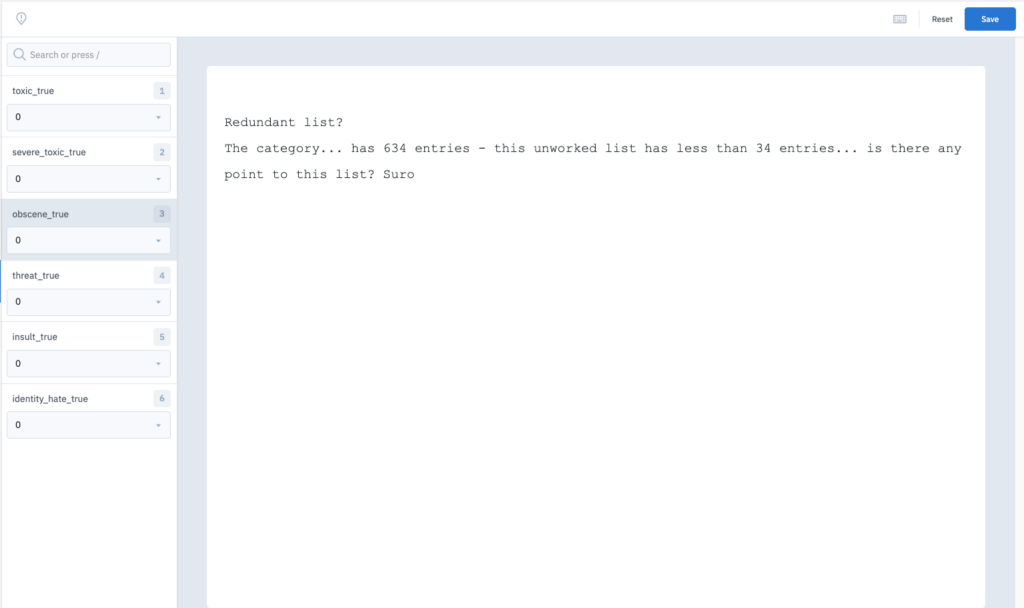

In-game toxicity models can actually hurt the gamer experience if they have high false-negative or false-positive rates: false negatives allow toxic behavior to continue unabated, while false positives can flag healthy players for removal. Active Learning is an efficient process for reducing both kinds of error. To facilitate Active Learning, Labelbox lets you quickly inspect your model's predictions and approve or correct them. You can then use the corrected labels to retrain your model so that it is less likely to make the same mistakes in the future.
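The approve-or-correct loop above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not Labelbox's API: the `Prediction` record and the `select_for_review`, `error_rates`, and `training_rows` helpers are hypothetical names, and the sketch assumes a binary toxic/benign model that outputs a probability with a 0.5 decision threshold. It uses uncertainty sampling (one common Active Learning strategy) to pick the predictions the model is least sure about for human review, then measures false-positive and false-negative rates on the reviewed items.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Prediction:
    # Hypothetical record for illustration; not a Labelbox data type.
    comment_id: str
    toxic_prob: float                   # model's probability that the comment is toxic
    human_label: Optional[bool] = None  # set once a reviewer approves or corrects it


def select_for_review(preds: List[Prediction], k: int) -> List[Prediction]:
    """Uncertainty sampling: return the k unreviewed predictions closest to 0.5."""
    unreviewed = [p for p in preds if p.human_label is None]
    return sorted(unreviewed, key=lambda p: abs(p.toxic_prob - 0.5))[:k]


def error_rates(preds: List[Prediction], threshold: float = 0.5) -> Tuple[float, float]:
    """(false-positive rate, false-negative rate) over human-reviewed predictions."""
    fp = fn = positives = negatives = 0
    for p in preds:
        if p.human_label is None:
            continue  # only reviewed items have ground truth
        predicted_toxic = p.toxic_prob >= threshold
        if p.human_label:
            positives += 1
            fn += not predicted_toxic  # toxic comment the model let through
        else:
            negatives += 1
            fp += predicted_toxic      # benign comment the model flagged
    fpr = fp / negatives if negatives else 0.0
    fnr = fn / positives if positives else 0.0
    return fpr, fnr


def training_rows(preds: List[Prediction]) -> List[Tuple[str, bool]]:
    """Reviewed (approved or corrected) labels to feed into the next retraining round."""
    return [(p.comment_id, p.human_label) for p in preds if p.human_label is not None]


if __name__ == "__main__":
    preds = [
        Prediction("c1", 0.95),
        Prediction("c2", 0.52),
        Prediction("c3", 0.10),
        Prediction("c4", 0.47),
    ]
    batch = select_for_review(preds, k=2)
    print([p.comment_id for p in batch])  # ['c2', 'c4']

    # Simulated reviewer decisions: c2 is actually benign, c4 is actually toxic.
    batch[0].human_label = False
    batch[1].human_label = True
    print(error_rates(preds))  # (1.0, 1.0)
```

Each round, the reviewed rows from `training_rows` would be added to the training set before retraining; uncertainty sampling is only one way to choose what to review, and the threshold is an assumption you would tune for your own model.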

(Disclaimer: the content shown is for illustrative purposes only and may be considered offensive or objectionable.)

You can also store model embeddings in Labelbox to support analysis through dimensionality reduction. For example, your model embeddings may reveal groupings of data you had not previously considered, or specific types of data where your model has a high false-negative or false-positive rate.
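To make the dimensionality-reduction idea concrete, here is a minimal pure-Python sketch that projects embedding vectors to two dimensions with principal component analysis (PCA), computed by power iteration with deflation. PCA is a stand-in chosen for illustration: the post does not say which projection Labelbox uses, and the function names and toy embeddings below are hypothetical. It assumes at least two vectors of equal length.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


def _center(vectors: List[Vector]) -> List[Vector]:
    """Subtract the per-dimension mean from every vector."""
    n, d = len(vectors), len(vectors[0])
    mean = [sum(v[j] for v in vectors) / n for j in range(d)]
    return [[v[j] - mean[j] for j in range(d)] for v in vectors]


def _covariance(centered: List[Vector]) -> List[Vector]:
    """Sample covariance matrix (d x d) of already-centered vectors."""
    n, d = len(centered), len(centered[0])
    return [[sum(v[i] * v[j] for v in centered) / (n - 1) for j in range(d)]
            for i in range(d)]


def _top_eigenpair(mat: List[Vector], iters: int = 500, seed: int = 0) -> Tuple[Vector, float]:
    """Power iteration: dominant eigenvector and eigenvalue of a symmetric matrix."""
    d = len(mat)
    rng = random.Random(seed)
    vec = [rng.random() + 0.1 for _ in range(d)]
    norm = math.sqrt(sum(x * x for x in vec))
    vec = [x / norm for x in vec]
    for _ in range(iters):
        nxt = [sum(mat[i][j] * vec[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in nxt))
        if norm == 0.0:
            break  # matrix maps vec to zero; keep the current unit vector
        vec = [x / norm for x in nxt]
    # The Rayleigh quotient of a unit vector gives the eigenvalue estimate.
    eigval = sum(vec[i] * sum(mat[i][j] * vec[j] for j in range(d)) for i in range(d))
    return vec, eigval


def pca_2d(vectors: List[Vector]) -> List[Tuple[float, float]]:
    """Project each vector onto the first two principal components."""
    centered = _center(vectors)
    cov = _covariance(centered)
    d = len(cov)
    pc1, lam1 = _top_eigenpair(cov)
    # Deflation: remove pc1's contribution so the next dominant direction is pc2.
    deflated = [[cov[i][j] - lam1 * pc1[i] * pc1[j] for j in range(d)] for i in range(d)]
    pc2, _ = _top_eigenpair(deflated, seed=1)
    return [(sum(v[j] * pc1[j] for j in range(d)), sum(v[j] * pc2[j] for j in range(d)))
            for v in centered]


if __name__ == "__main__":
    # Toy 3-d "embeddings": two groups of comments that sit far apart on one axis.
    embeddings = [
        [1.0, 0.0, 0.1], [1.1, 0.1, 0.0], [0.9, -0.1, 0.0],
        [-1.0, 0.0, 0.1], [-1.1, 0.1, 0.0], [-0.9, -0.1, -0.1],
    ]
    for point in pca_2d(embeddings):
        print(f"({point[0]:+.2f}, {point[1]:+.2f})")
```

In practice you would plot the 2-D points colored by model prediction and human label, so that regions dense with corrections stand out; a library implementation such as scikit-learn's `PCA`, or a nonlinear method like t-SNE or UMAP, would normally replace this hand-rolled version. Note that the sign of each principal component is arbitrary.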

You can check out the project featured in this blog post here. While these demo notebooks are tailored for battling toxic content, you can broadly apply them to other NLP use cases where high-quality training data is required to train AI models. You can learn more about the Databricks and Labelbox integration by watching this talk from Data & AI Summit 2021. Questions? Reach out to us at [email protected].

Download the Toxicity Solutions Accelerator and try Model Assisted Labeling with Labelbox today! To enable Model Assisted Labeling on your free trial of Labelbox, please reach out to [email protected].
