Simplifying Data + AI, One Line of TypeScript at a Time

Today, Databricks is known for our backend engineering, building and operating cloud systems that span millions of virtual machines processing exabytes of data each day. What's less obvious is our focus on crafting user experiences that make data more accessible and usable.

We thought it was time to highlight that focus through a series of posts on the people, their work, and the impact they have had on our customers and the ecosystem. In this first post, we cover the founders’ stories in this area and some of the technical and product challenges we face. In future posts, we will cover newer work such as visualization and micro frontends.

Let’s get started.

Databricks’ founding thesis: can’t simplify data without great UI/UX

Ever since we started doing research on large-scale computing at UC Berkeley, our goal has been to make it accessible to many more people. The state of the art back then required a team of engineers working in Java (or C++) for weeks to process terabytes of data. Our work on Apache Spark made it possible for everyone to run distributed computations with just a few lines of Python or SQL.

But that wasn’t enough. From the early days, we saw that many of the exciting early applications of Spark were interactive -- for example, one of the most mind-blowing was a group of neuroscientists visualizing zebrafish brain activity in real time to understand how the brain worked. Seeing these applications, we realized a great compute engine could only get us so far: further expanding access to big data would also require new, high-quality user interfaces for both highly trained developers and a broader group of citizen data consumers.

As our first product, we built the world’s first collaborative and interactive notebook for data science, designing a frontend that could display and visualize large amounts of data, a backend that automatically sliced and recomputed data as users manipulated their visualizations, and a full collaborative editing system that allowed our users to work on the same notebook simultaneously and to visualize streaming updates of the data.

Our funding pitch demo to Andreessen Horowitz contained no changes to Spark -- it just showed how an interactive, cloud-based interface based on it could make terabytes of data usable in seconds. Our pitches to customers were the same, and they loved it!

Everybody, even the “business person”, had to learn JavaScript

The founding team had strong pedigrees in backend systems (all of us had systems/database PhDs), but we didn’t know how to attract frontend engineers. So we had to take matters into our own hands; the founders each got copies of "JavaScript: The Definitive Guide" and "JavaScript: The Good Parts," and read them cover-to-cover over summer break before we started the company. (We were happy that the “Good Parts” was only 176 pages.)

Among our 7 cofounders, Arsalan was our “business guy.” He had received his PhD in computer networks from Berkeley a few years back, and had been working at McKinsey as a partner. We thought negotiating partnerships and striking deals wouldn’t quite fill 100% of his time, so we asked him to get up to speed on JavaScript before the company started.

Imagine this: after meeting some CEOs and CFOs, this McKinsey consultant in a suit boards first class, stows his Briggs & Riley carry-on, and then pulls out his copy of JavaScript: The Good Parts.

The whole team pitched in: our CEO wrote the original visualization in D3, Matei wrote the initial file browser, and Arsalan implemented the commenting feature in notebooks that our users still love today.

Although the founding team did not shy away from picking up JavaScript and building the initial product, over the past few years we have significantly expanded the team to bring on more frontend and UX experts. They have taught us a lot and have completely modernized our frontend stack (e.g. with Jest, React, Next.js, TypeScript, Yarn).

Challenges in UI/UX for data and AI

We have also found that we face many UX and engineering challenges that most frontend applications do not run into. These challenges include:

- Displaying large amounts of data efficiently. Our users want to explore and visualize massive datasets, showing as many records as possible on their screens. This meant that our table and plot controls all had to be as fast and robust as possible in the face of large, possibly irregular datasets. We also tested them heavily to make them robust -- early on, we found many customer workloads that could easily crash their web browser, from the table with 2000 columns to the row with a 100 MB text field. Our frontend and backend now handle all these cases. Even today, we are constantly pushing the boundary of what’s possible in the browser as our customers’ workloads are becoming ever more demanding.

- Designing UI for long-running parallel tasks. Sometimes users ask for something that will take a while to compute (e.g. running on petabytes of data), so how do we ensure they feel that the system is fast and responsive? By showing them meaningful progress, or even letting them see approximate results before the query completes. One example is our plot control’s ability to quickly render data based on a frontend sample, and then push the full query to the backend to run on all the data.

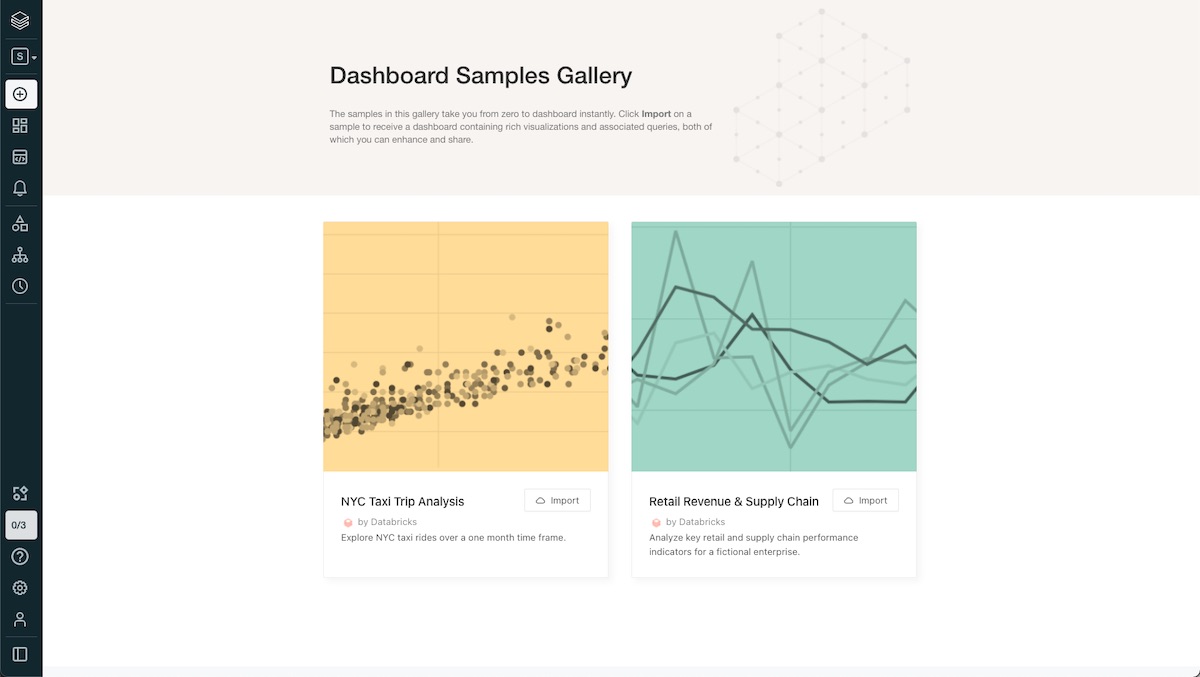

- Letting users rapidly create shareable production applications. We found that most users who do an analysis interactively then want to turn it into a dashboard and publish it to their team -- and they don’t want to leave their data analysis product to do it. Thus, we’ve built publish workflows in notebooks that let users combine their results into a usable, publishable report as quickly as possible. Our dashboards now reach hundreds of thousands of users worldwide. For example, when COVID started, our Amsterdam team spotted a Databricks dashboard tracking cases on their TV news.

- Integrating with engineering workflows. The data products built on Databricks are increasingly powering mission-critical applications. As a result, while data scientists and analysts want to explore their data quickly, they also want to follow engineering best practices to introduce rigor, such as managing code in Git or running CI/CD. A lot of our work focuses on enabling less technical users to leverage similar tools or concepts for engineering rigor in their own workflows.
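The sample-first rendering described in the list above can be sketched roughly as follows. This is a minimal illustration, not the actual plot control: the `Row` shape, the function names, and the callback-style `runFullQuery` stand-in for a backend call are all hypothetical, and the example assumes a simple per-category sum.

```typescript
// Hypothetical types and names; none of these come from the Databricks codebase.
type Row = { category: string; value: number };
type Totals = Record<string, number>;
type PlotUpdate = { totals: Totals; approximate: boolean };

// Take an evenly spaced sample of at most `limit` rows already held in the browser.
function sampleRows(rows: Row[], limit: number): Row[] {
  if (limit <= 0) return [];
  if (rows.length <= limit) return rows.slice();
  const step = rows.length / limit;
  const sample: Row[] = [];
  for (let i = 0; i < limit; i++) {
    sample.push(rows[Math.floor(i * step)]);
  }
  return sample;
}

// Sum values per category; `scale` extrapolates sample totals to the full row count.
function totalsByCategory(rows: Row[], scale = 1): Totals {
  const totals: Totals = {};
  for (const { category, value } of rows) {
    totals[category] = (totals[category] ?? 0) + value * scale;
  }
  return totals;
}

// Draw an estimate from the sample right away, then redraw once the
// (assumed) backend query reports exact totals computed over all data.
function renderProgressively(
  loadedRows: Row[],
  totalRowCount: number,
  runFullQuery: (onDone: (exact: Totals) => void) => void,
  draw: (update: PlotUpdate) => void,
  sampleSize = 1000,
): void {
  const sample = sampleRows(loadedRows, sampleSize);
  const scale = sample.length > 0 ? totalRowCount / sample.length : 1;
  draw({ totals: totalsByCategory(sample, scale), approximate: true });
  runFullQuery((exact) => draw({ totals: exact, approximate: false }));
}
```

Marking the first update as `approximate` lets the UI label the chart as an estimate until the exact result replaces it, so users see something useful immediately without mistaking it for the final answer.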

![The new Databricks workspace](https://www.databricks.com/wp-content/uploads/2021/10/get-started-jpg-retina.jpg)

We’ve started designing the new Databricks workspace, with spaces for each of the main user personas. These spaces address the critical user journeys [CUJs] for each persona, defined in collaboration with the UX team, engineers and product management.

We’re just getting started

We are humbled by the impact Databricks has had on our customers. Among them are neuroscientists trying to understand how the brain works, energy engineers reducing energy consumption for whole continents, and pharmaceutical researchers speeding up the discovery of the next important drugs.

But we haven’t solved all the problems. Our explosive growth has created even more challenges to solve, and we feel we are just getting started here. For too long, our industry has built the most sophisticated technologies for data behind code-based interfaces. The first step towards democratizing data and AI is to create graphical user interfaces to significantly simplify critical user journeys.

As a recent example, we built a new data explorer UI for easier exploration of data (with zero backend changes). Right after we shipped it, we received a message from our customer Jake: "Data Explorer is night and day better. Whatever witchcraft happened here is heavenly." We know that we can do the same in many other parts of users’ workflows.

Come build the future of data and AI with us. Your work might be used to create the next cancer drug, catch the next cyberattack, or even explain the next big story on the evening news.
