Databricks on Google Cloud Security Best Practices

The lakehouse paradigm enables organizations to store all of their data in one location for analytics, data science, machine learning (ML), and business intelligence (BI). Bringing all of the data together into a single location increases productivity, breaks down barriers to collaboration, and accelerates innovation.

As organizations prepare to deploy a data lakehouse, they often have questions about how to implement their policy-governed security and controls to ensure proper access and auditability. Some of the most common questions include:

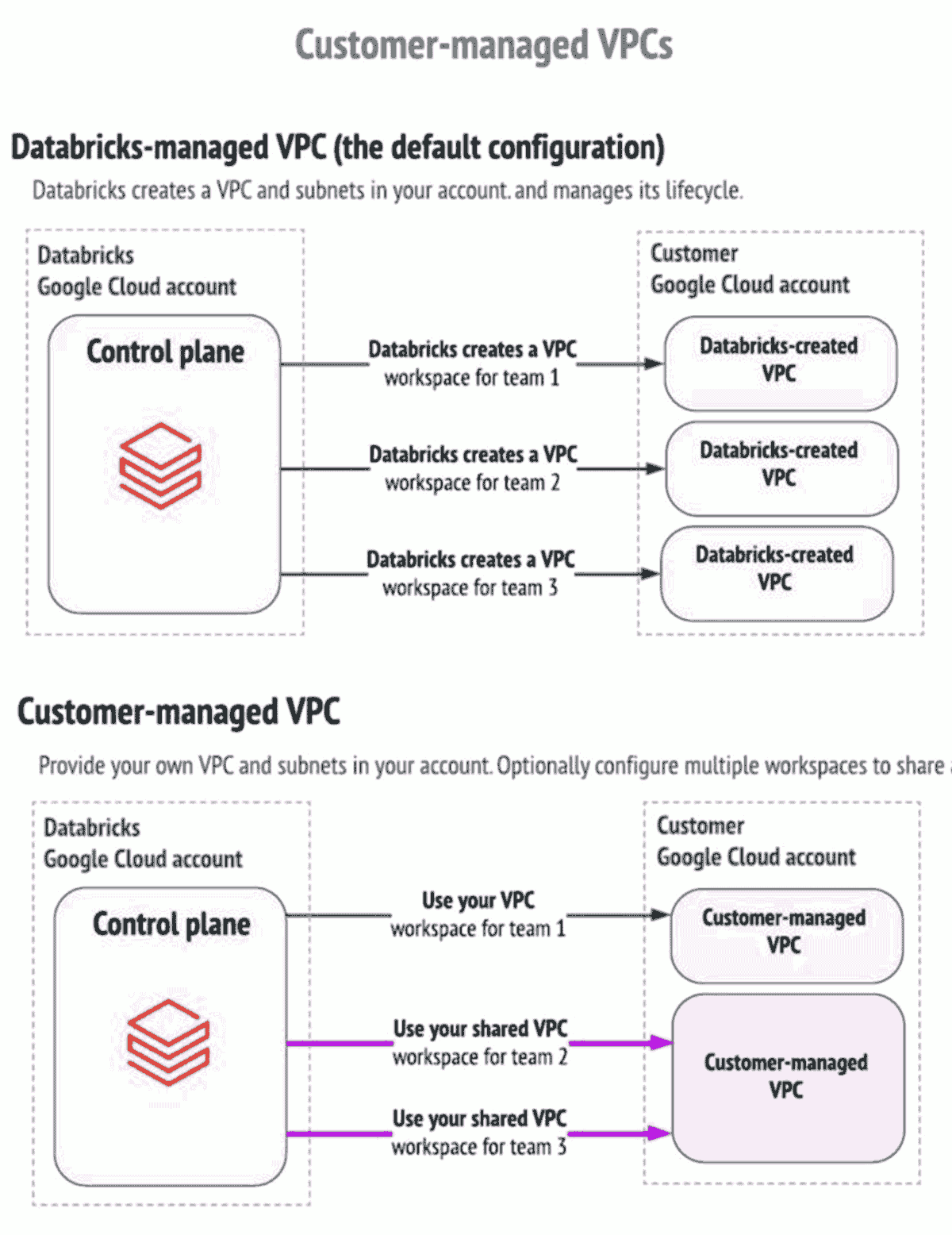

- Can I bring my own VPC network (e.g., a Shared VPC) for Databricks on Google Cloud?

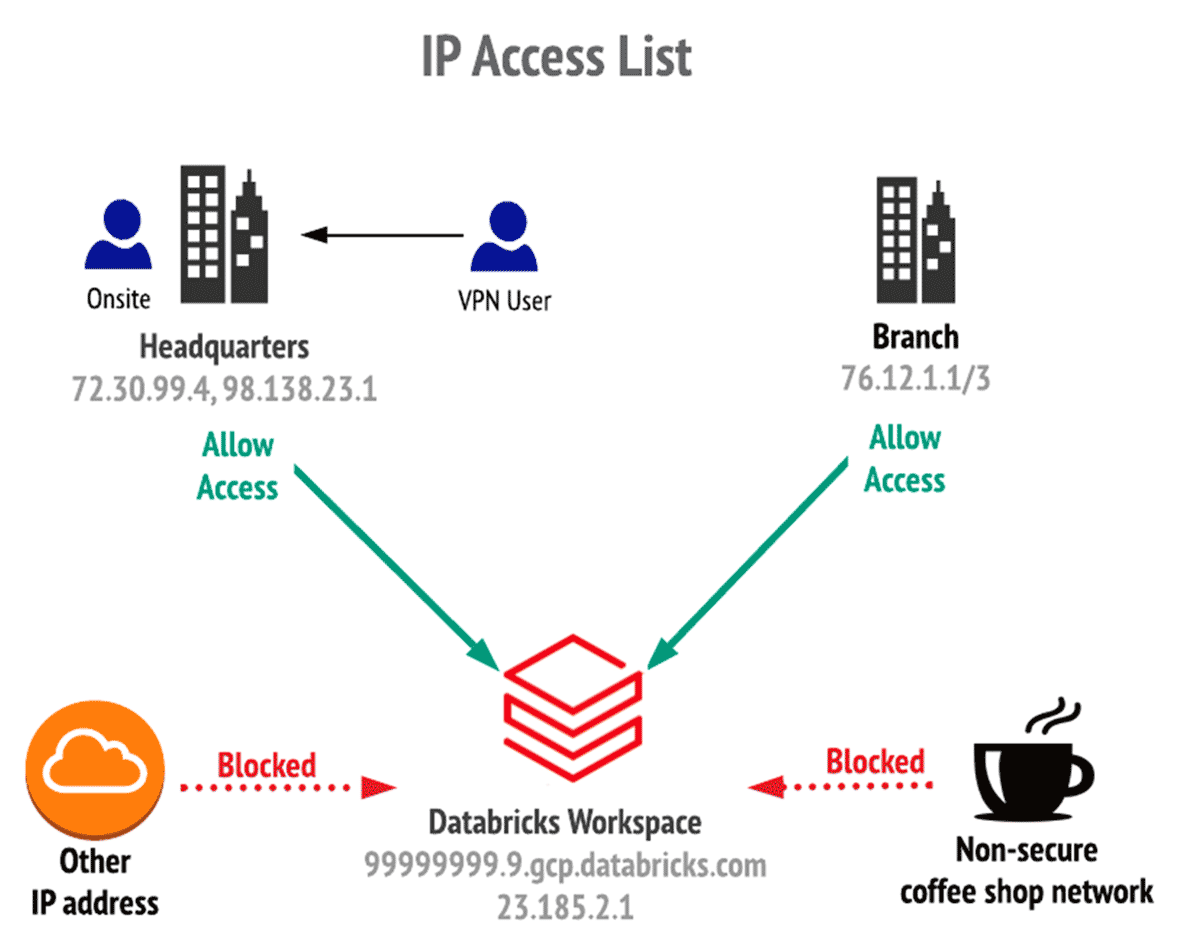

- How can I make sure requests to Databricks (the web app or the APIs) originate from within an approved network (e.g., users need to be on a corporate VPN while accessing a Databricks workspace)?

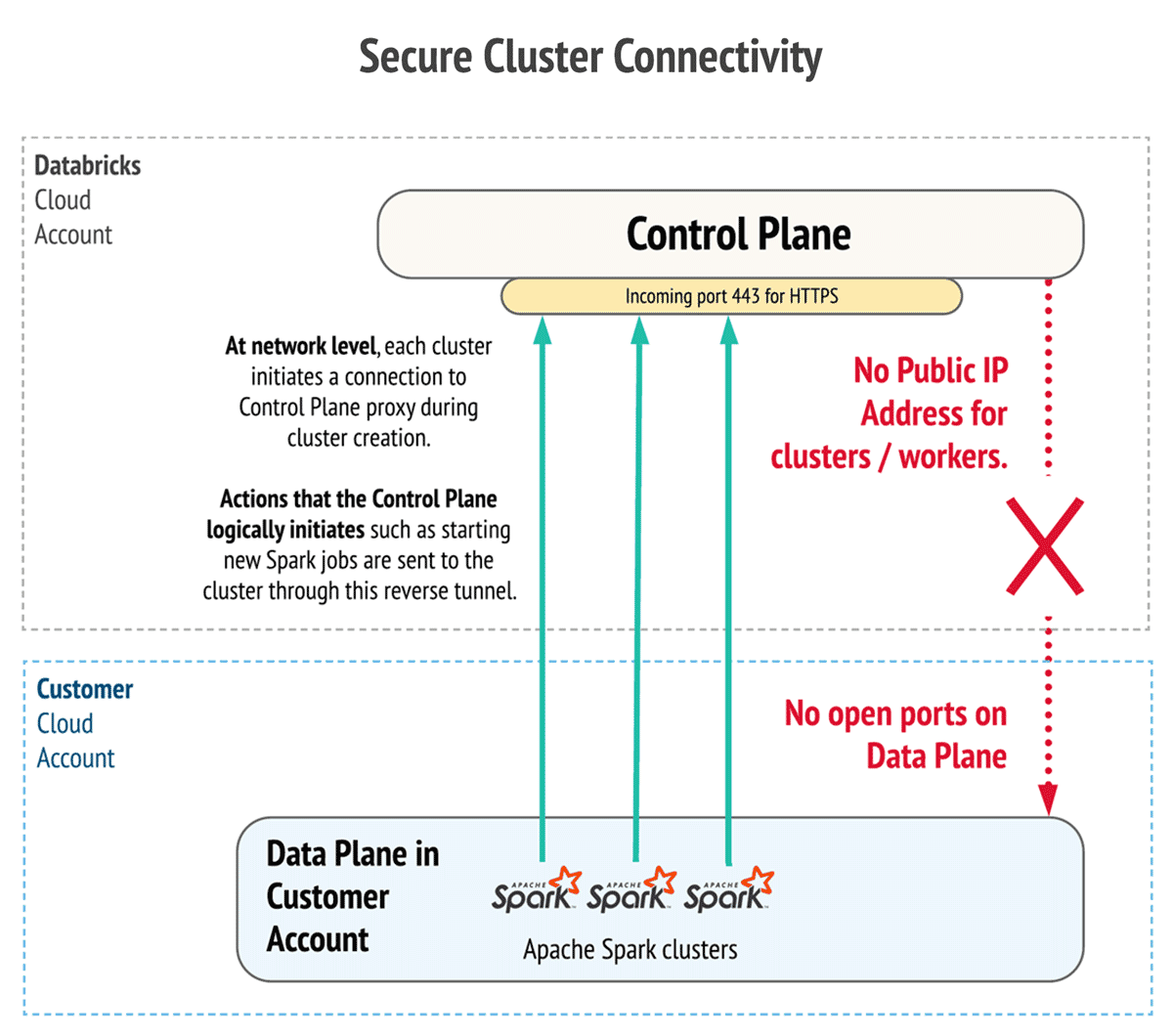

- How can Databricks compute instances have only private IPs?

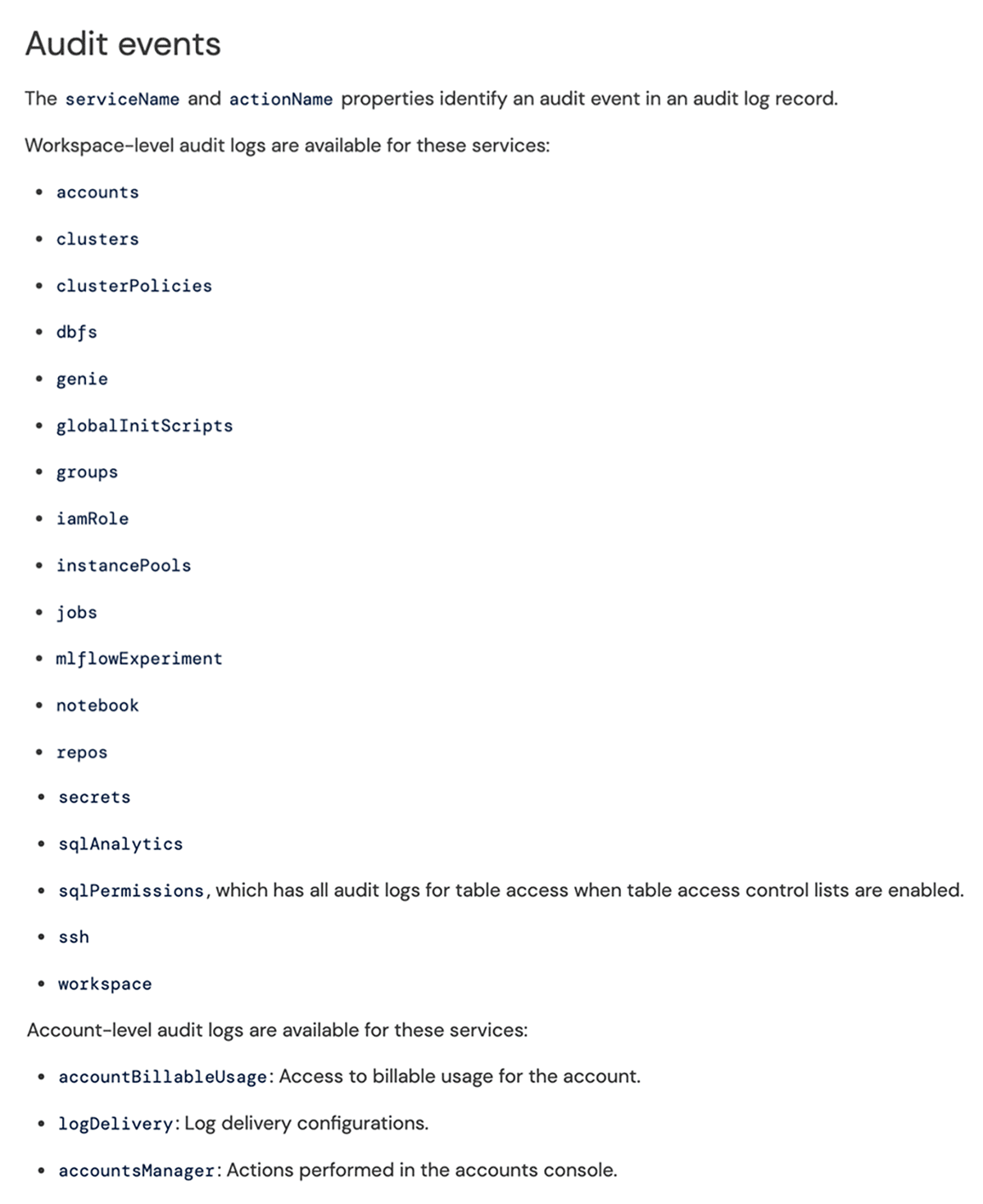

- Is it possible to audit Databricks-related events (e.g., who did what and when)?

- How do I prevent data exfiltration?

- How do I manage Databricks Personal Access Tokens?

In this article, we'll address these questions and walk through the cloud security features and capabilities that enterprise data teams can use to harden their Databricks environment in line with their governance policy.

Databricks on Google Cloud

Databricks on Google Cloud is a jointly developed service that allows you to store all your data on a simple, open lakehouse platform that combines the best of data warehouses and data lakes to unify all your analytics and AI workloads. It is hosted on the Google Cloud Platform (GCP), running on Google Kubernetes Engine (GKE) and providing built-in integration with Google Cloud Identity, Google Cloud Storage, BigQuery, and other Google Cloud technologies. The platform enables true collaboration between different data personas in any enterprise, including Data Engineers, Data Scientists, Data Analysts and SecOps / Cloud Engineering.

Built upon the foundations of Delta Lake, MLflow, Koalas, Databricks SQL and Apache Spark™, Databricks on Google Cloud is a GCP Marketplace offering that provides one-click setup, native integrations with other Google Cloud services, an interactive workspace, and enterprise-grade security controls and identity and access management (IAM) to power data and AI use cases for customers of any size. Databricks on Google Cloud leverages Kubernetes features like namespaces to isolate clusters within the same GKE cluster.

Bring your own network

How can you set up the Databricks Lakehouse Platform in your own enterprise-managed virtual network, in order to do necessary customizations as required by your network security team? Enterprise customers should begin using customer-managed virtual private cloud (VPC) capabilities for their deployments on the GCP environment. Customer-managed VPCs enable you to comply with a number of internal and external security policies and frameworks, while providing a Platform-as-a-Service approach to data and AI to combine the ease of use of a managed platform with secure-by-default deployment. Below is a diagram to illustrate the difference between Databricks-managed and customer-managed VPCs:

Enable secure cluster connectivity

Deploy your Databricks workspace in subnets without any inbound access to your network. Clusters will utilize a secure connectivity mechanism to communicate with the Databricks cloud infrastructure, without requiring public IP addresses for the nodes. Secure cluster connectivity is enabled by default at Databricks workspace creation on Google Cloud.

Control which networks are allowed to access a workspace

Configure allow-lists and block-lists to control the networks that are allowed to access your Databricks workspace.
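As a minimal sketch of what such a configuration could look like, the snippet below validates a set of CIDR ranges and builds a request body for the Databricks IP access lists REST endpoint (`/api/2.0/ip-access-lists`). The helper names, the `corp-vpn` label, and the example address ranges are hypothetical, and the sketch assumes the IP access list feature is already enabled for the workspace and that the caller holds an admin token.

```python
import ipaddress
import json
import urllib.request


def build_ip_access_list(label, cidrs, list_type="ALLOW"):
    """Validate addresses/CIDR ranges and build the JSON body for an IP access list."""
    if list_type not in ("ALLOW", "BLOCK"):
        raise ValueError(f"list_type must be ALLOW or BLOCK, got {list_type!r}")
    # ip_network() raises ValueError on malformed input or on ranges with host bits set,
    # so typos are caught before anything is sent to the workspace.
    normalized = [str(ipaddress.ip_network(c)) for c in cidrs]
    return {"label": label, "list_type": list_type, "ip_addresses": normalized}


def create_ip_access_list(workspace_url, token, body):
    """POST the body to the workspace (hypothetical helper; performs a network call)."""
    request = urllib.request.Request(
        f"{workspace_url.rstrip('/')}/api/2.0/ip-access-lists",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Validating the ranges locally first is a deliberate choice: a mistyped allow-list entry can lock users out, so it is cheaper to fail before the request is made.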

Trust but verify with Databricks

Get visibility into relevant platform activity in terms of who's doing what and when, by configuring Databricks audit logs and other related Google Cloud Audit Logs.
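Once audit logs are delivered, answering "who did what and when" is mostly a matter of reading a few fields from each record. The sketch below assumes the logs are available as JSON lines and that each record carries `timestamp`, `serviceName`, `actionName`, and `userIdentity.email` fields; treat those field names and the helper functions as assumptions to check against your own delivered logs.

```python
import json
from collections import Counter


def summarize_audit_events(lines):
    """Return (timestamp, user, service, action) tuples from JSON-lines audit records."""
    events = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between records
        record = json.loads(line)
        # Assumed field layout; a missing identity is reported as "unknown".
        user = (record.get("userIdentity") or {}).get("email", "unknown")
        events.append(
            (
                record.get("timestamp"),
                user,
                record.get("serviceName"),
                record.get("actionName"),
            )
        )
    return events


def actions_per_user(events):
    """Count how often each (user, action) pair occurs."""
    return Counter((user, action) for _, user, _, action in events)
```

Feeding the output of `summarize_audit_events` into `actions_per_user` gives a quick per-user activity count that can be compared against expectations or routed into an alerting pipeline.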

Securely accessing Google Cloud Data sources from Databricks

Understand the different ways of connecting Databricks clusters in your private virtual network to your Google Cloud data sources in a secure, cloud-native manner. Customers can choose from Private Google Access, VPC Service Controls, or Private Service Connect to read from and write to data sources such as BigQuery, Cloud SQL, and Google Cloud Storage (GCS).
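One way to sanity-check such a setup is to confirm that Google API hostnames resolve to the virtual IP ranges Google documents for private access: `199.36.153.4/30` for `restricted.googleapis.com` (the range typically used with VPC Service Controls) and `199.36.153.8/30` for `private.googleapis.com`. The following is a small sketch with a hypothetical helper name; it only classifies an address you have already resolved and does not perform DNS lookups itself.

```python
import ipaddress

# Virtual IP ranges documented by Google for private access to Google APIs.
RESTRICTED_VIP = ipaddress.ip_network("199.36.153.4/30")
PRIVATE_VIP = ipaddress.ip_network("199.36.153.8/30")


def classify_google_api_ip(ip):
    """Report which Google API virtual IP range, if any, an address belongs to."""
    address = ipaddress.ip_address(ip)
    if address in RESTRICTED_VIP:
        return "restricted.googleapis.com"
    if address in PRIVATE_VIP:
        return "private.googleapis.com"
    return "other"
```

Running this against the address a cluster node resolves for a Google API hostname shows whether traffic would take one of the private paths or fall through to a public endpoint.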

Data exfiltration protection with Databricks

Learn how to use cloud-native security constructs like VPC Service Controls to create a battle-tested, secure architecture for your Databricks environment that helps you prevent data exfiltration. This is most relevant for organizations working with personally identifiable information (PII), protected health information (PHI), and other types of sensitive data.

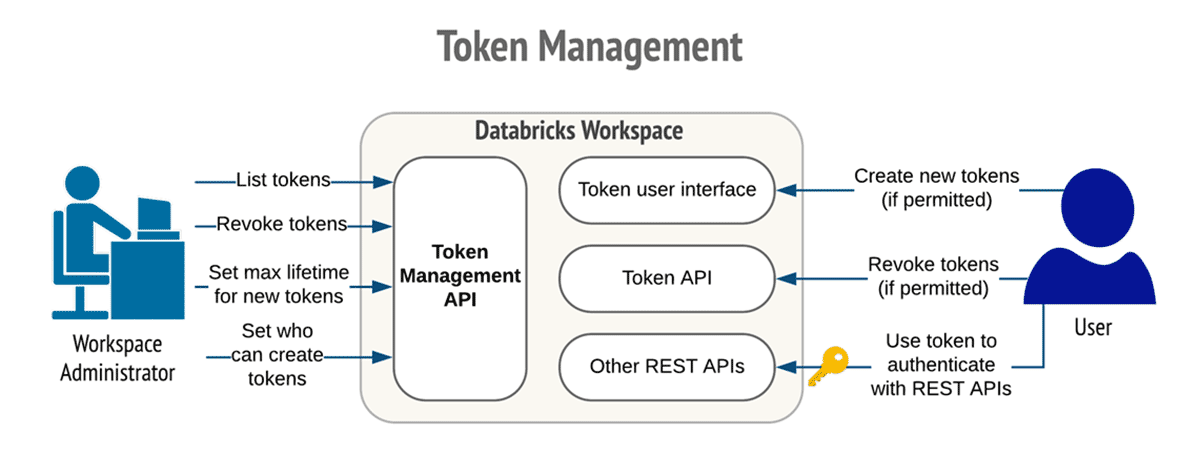

Token management for Personal Access Tokens

For use cases that require Databricks Personal Access Tokens (PATs), we recommend allowing only the users who need them to create and configure those tokens.
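A periodic review of existing tokens complements that restriction. The sketch below assumes you have already fetched the token list from the Token Management API (`GET /api/2.0/token-management/tokens`) and that each entry exposes `created_by_username` and `expiry_time`, with `-1` meaning the token never expires; the helper names and the approved-user list are hypothetical.

```python
def find_unapproved_tokens(token_infos, approved_users):
    """Return tokens whose creator is not on the approved list (case-insensitive)."""
    approved = {user.lower() for user in approved_users}
    return [
        token
        for token in token_infos
        if token.get("created_by_username", "").lower() not in approved
    ]


def find_non_expiring_tokens(token_infos):
    """Return tokens with no expiry (assumed to be reported as expiry_time == -1)."""
    return [token for token in token_infos if token.get("expiry_time", -1) == -1]
```

Tokens flagged by either function are candidates for revocation or for a follow-up with their owner.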

What's next?

The lakehouse architecture enables customers to take an integrated and consistent approach to data governance and access, giving organizations the ability to rapidly scale from a single use case to operationalizing a data and AI platform across many distributed data teams.

Bookmark this page, as we'll keep it updated with new security-related capabilities and controls. If you want to try out the features mentioned here, get started by creating a Databricks workspace in your own customer-managed VPC.
