Introducing Apache Spark™ 3.3 for Databricks Runtime 11.0

Published: June 15, 2022

by Maxim Gekk, Wenchen Fan, Hyukjin Kwon, Serge Rielau, Yingyi Bu, Xiao Li and Reynold Xin

Free Edition has replaced Community Edition, offering enhanced features at no cost. Start using Free Edition today.

Today we are happy to announce the availability of Apache Spark™ 3.3 on Databricks as part of Databricks Runtime 11.0. We want to thank the Apache Spark community for their valuable contributions to the Spark 3.3 release.

The number of monthly PyPI downloads of PySpark has rapidly increased to 21 million, and Python is now the most popular API language. This year-over-year growth rate represents a doubling of monthly PySpark downloads in the last year. Also, the number of monthly Maven downloads exceeded 24 million. Spark has become the most widely-used engine for scalable computing.

Continuing with the objectives to make Spark even more unified, simple, fast, and scalable, Spark 3.3 extends its scope with the following features:

- Improve join query performance via Bloom filters with up to 10x speedup.

- Increase the Pandas API coverage with the support of popular Pandas features such as datetime.timedelta and merge_asof.

- Simplify the migration from traditional data warehouses by improving ANSI compliance and supporting dozens of new built-in functions.

- Boost development productivity with better error handling, autocompletion, performance, and profiling.

Performance Improvement

Bloom Filter Joins (SPARK-32268): Spark can inject and push down Bloom filters in a query plan when appropriate, in order to filter data early on and reduce intermediate data sizes for shuffle and computation. Bloom filters are row-level runtime filters designed to complement dynamic partition pruning (DPP) and dynamic file pruning (DFP) for cases when dynamic file skipping is not sufficiently applicable or thorough. As shown in the following graphs, we ran the TPC-DS benchmark over three different variations of data sources: Delta Lake without tuning, Delta Lake with tuning, and raw Parquet files, and observed up to ~10x speedup by enabling this Bloom filter feature. Performance improvement ratios are larger for cases lacking storage tuning or accurate statistics, such as Delta Lake data sources before tuning or raw Parquet file based data sources. In these cases, Bloom filters make query performance more robust regardless of storage/statistics tuning.

Query Execution Enhancements: A few adaptive query execution (AQE) improvements have landed in this release:

- Propagating intermediate empty relations through Aggregate/Union (SPARK-35442)

- Optimizing one-row query plans in the normal and AQE optimizers (SPARK-38162)

- Supporting eliminating limits in the AQE optimizer (SPARK-36424).

Whole-stage codegen coverage is further improved in multiple areas, including:

- Full outer sort merge join (SPARK-35352, 20%~30% speedup)

- Full outer shuffled hash join (SPARK-32567, 10%~20% speedup)

- Existence sort merge join (SPARK-37316)

- Sort aggregate without grouping keys (SPARK-37564)

Parquet Complex Data Types (SPARK-34863): This improvement adds support in Spark's vectorized Parquet reader for complex types such as lists, maps, and arrays. As micro-benchmarks show, Spark obtains an average of ~15x performance improvement when scanning struct fields, and ~1.5x when reading arrays comprising elements of struct and map types.

Scale Pandas

Optimized Default Index: In this release, in the Pandas API on Spark (SPARK-37649), we switched the default index from 'sequence' to 'distributed-sequence', where the latter is amenable to optimization with the Catalyst Optimizer. Scanning data with the default index in Pandas API on Spark became 2 times faster in the benchmark of i3.xlarge 5 node cluster.

Pandas API Coverage:

PySpark now natively understands datetime.timedelta (SPARK-37275, SPARK-37525) across Spark SQL and Pandas API on Spark. This Python type now maps to the date-time interval type in Spark SQL. Also, many missing parameters and new API features are now supported for Pandas API on Spark in this release. Examples include endpoints like ps.merge_asof (SPARK-36813), ps.timedelta_range (SPARK-37673) and ps.to_timedelta (SPARK-37701).

Migration Simplification

ANSI Enhancements: This release completes the support of the ANSI interval data types (SPARK-27790). Now we can read/write interval values from/to tables, and use intervals in many functions/operators to do date/time arithmetic, including aggregation and comparison. Implicit casting in ANSI mode now supports safe casts between types while protecting against data loss. A growing library of "try" functions, such as "try_add" and "try_multiply", complement ANSI mode allowing users to embrace the safety of ANSI mode rules while also still allowing for fault tolerant queries.

Built-in Functions: Beyond the try_* functions (SPARK-35161), this new release now includes nine new linear regression functions and statistical functions, four new string processing functions, aes_encryption and decryption functions, generalized floor and ceiling functions, "to_number" formatting, and many others.

Boosting Productivity

Error Message Improvements: This release starts a journey wherein users observe the introduction of explicit error classes like "DIVIDE_BY_ZERO." These make it easier to search online for more context about errors, including in the formal documentation.

For many runtime errors Spark now returns the exact context where the error occurred, such as the line and column number in a specified nested view body.

Profiler for Python/Pandas UDFs (SPARK-37443): This release introduces a new Python/Pandas UDFs profiler, which provides deterministic profiling of UDFs with useful statistics. Below is an example by running PySpark with the new infrastructure:

Better Auto-Completion with Type Hint Inline Completion (SPARK-39370):

All type hints have migrated from stub files to inlined type hints in this release in order to enable better autocompletion. For example, showing the type of parameters can help provide useful context.

In this blog post, we summarize some of the higher-level features and improvements in Apache Spark 3.3.0. Please keep an eye out for upcoming posts that dive deeper into these features. For a comprehensive list of major features across all Spark components and JIRA tickets resolved, please visit the Apache Spark 3.3.0 release notes.

Get started with Spark 3.3 today

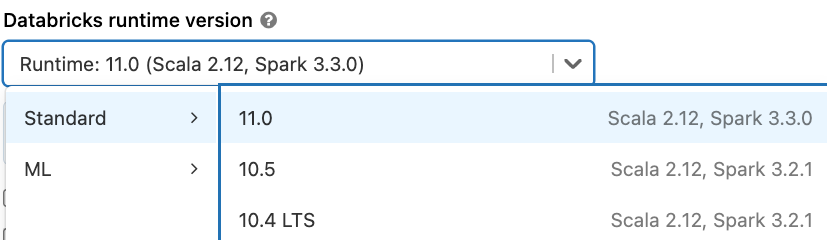

To try out Apache Spark 3.3 in Databricks Runtime 11.0, please sign up for the Databricks Community Edition or Databricks Trial, both of which are free, and get started in minutes. Using Spark 3.3 is as simple as selecting version "11.0" when launching a cluster.