Databricks Terraform Provider Is Now Generally Available

Today, we are thrilled to announce that Databricks Terraform Provider is generally available (GA)! HashiCorp Terraform is a popular open source infrastructure as code (IaC) tool for creating safe and reproducible cloud infrastructure across cloud providers.

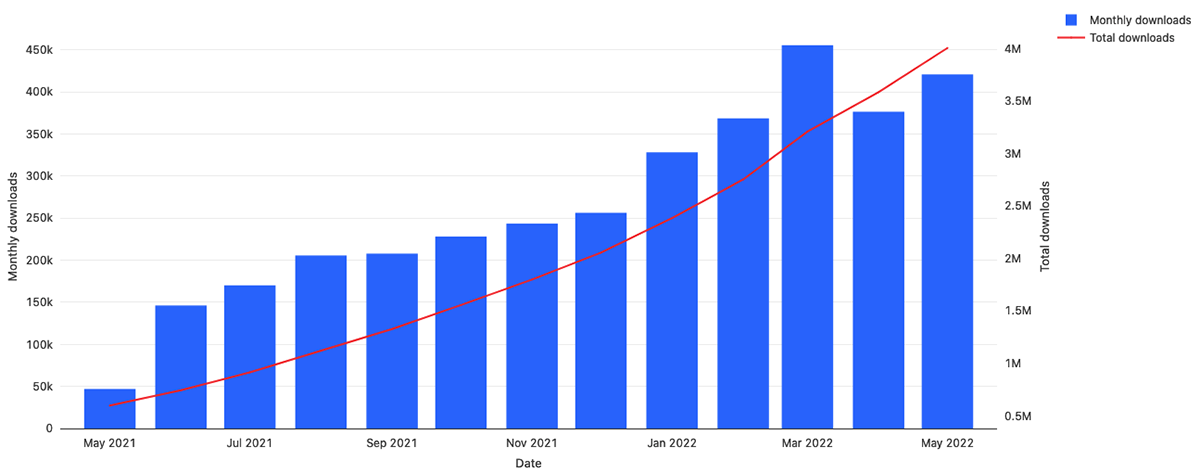

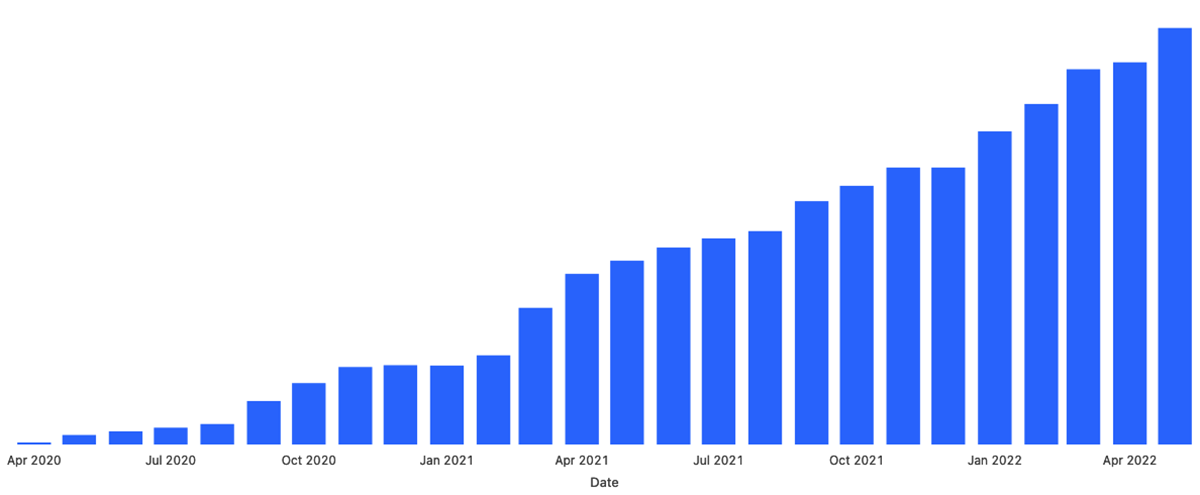

The first Databricks Terraform Provider was released more than two years ago, allowing engineers to automate all management aspects of their Databricks Lakehouse Platform. Since then, adoption has grown more than tenfold.

More importantly, we also see significant growth in the number of customers using Databricks Terraform Provider to manage their production and development environments.

Customers win with the Lakehouse as Code

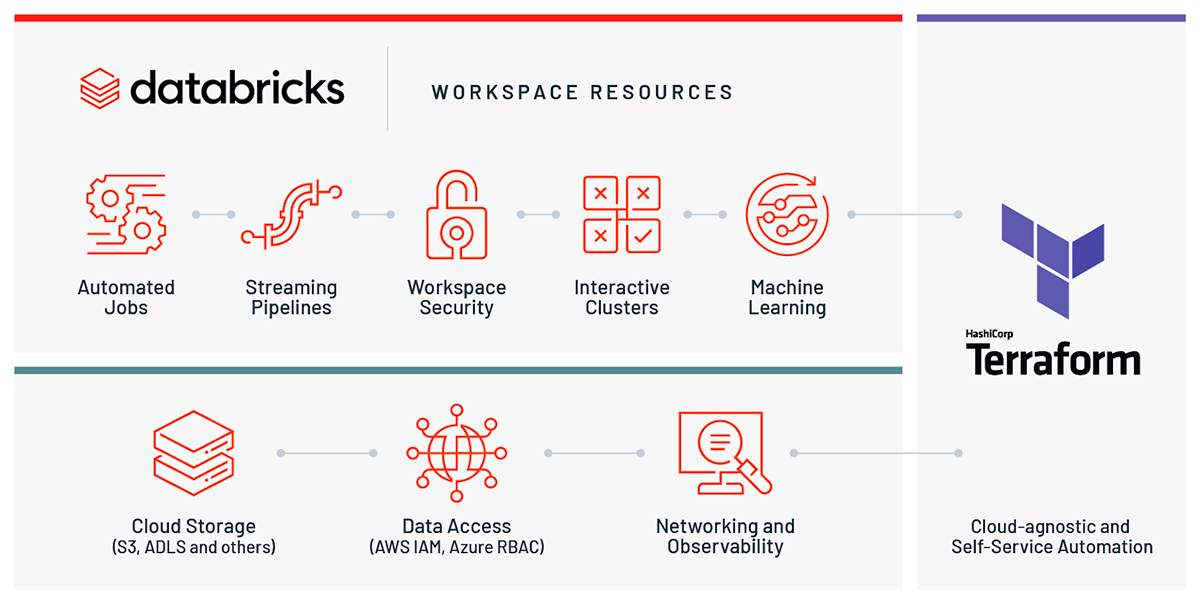

There are multiple areas where Databricks customers successfully use Databricks Terraform Provider.

Automating all aspects of provisioning the Lakehouse components and implementing DataOps/DevOps/MLOps

That covers multiple use cases: promoting jobs between dev/staging/prod environments, making sure that upgrades are safe, creating reproducible environments for new projects and teams, and more.
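As a rough illustration of promoting a job between environments, the sketch below parameterizes a single job definition by environment. The variable name, job name, notebook path, and worker counts are hypothetical and not taken from any customer setup; block layout may also differ between provider versions, so treat it as a starting point rather than a reference configuration.

```hcl
# Hypothetical input: set to "dev", "staging", or "prod" per workspace.
variable "environment" {
  description = "Target environment for this deployment"
  type        = string
}

# Look up a long-term-support runtime and a small node type
# instead of hard-coding cloud-specific values.
data "databricks_spark_version" "latest_lts" {
  long_term_support = true
}

data "databricks_node_type" "smallest" {
  local_disk = true
}

resource "databricks_job" "nightly_etl" {
  # Same definition everywhere; only the name and sizing change.
  name = "nightly-etl-${var.environment}"

  task {
    task_key = "main"

    new_cluster {
      # Illustrative sizing: larger cluster in prod only.
      num_workers   = var.environment == "prod" ? 4 : 1
      spark_version = data.databricks_spark_version.latest_lts.id
      node_type_id  = data.databricks_node_type.smallest.id
    }

    notebook_task {
      # Hypothetical notebook path.
      notebook_path = "/Shared/etl/nightly"
    }
  }
}
```

Applying the same configuration with a different value for the environment variable (for example through separate Terraform workspaces or variable files) keeps the job definition identical across environments while the change itself goes through a pull request.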

In our joint DAIS talk, Scribd talked about how their data platform relies on Platform Engineering to put tools in the hands of developers and data scientists to "choose their own adventure". With Databricks Terraform Provider, they can offer their internal customers flexibility without acting as gatekeepers. Just about anything they might need in Databricks is a pull request away.

Other customers are also very complimentary about their usage of Databricks Terraform Provider: "swift structure replication", "maintaining compliance standards", "allows us to automate everything", "democratized or changed to reduce the operational burden from our SRE team".

Implementing an automated disaster recovery strategy

Disaster recovery is a must-have for all regulated industries and for any company that recognizes the importance of data accessibility. Terraform plays a significant role in making sure that failover processes are correctly automated and complete in a predictable amount of time, without the errors that are common when no automation is in place.

For example, illimity's data platform is centered on Azure Databricks and its functionalities as noted in our previous blog post. They designed a data platform DR scenario using the Databricks Terraform Provider, ensuring RTOs and RPOs required by the regulatory body at illimity and Banca d'Italia (Italy's central bank). Stay tuned for more detailed blogs on disaster recovery preparation with our Terraform integration!

Implementing secure solutions

Security is a crucial requirement in the modern world. But making sure that your data is secure is not a simple task, especially for regulated industries. These solutions come with many requirements, such as preventing data exfiltration and controlling user access to data.

Let's take deployment of a workspace on AWS with data exfiltration protection as an example. It is generally recommended that customers deploy multiple Databricks workspaces alongside a hub and spoke topology reference architecture, powered by AWS Transit Gateway. As documented in our previous blog, that setup is done through the AWS UI and includes several manual steps. With the Databricks Terraform Provider, this can now be automated and deployed in just a few steps with a detailed guide.

Enabling data governance with Unity Catalog

Databricks Unity Catalog brings fine-grained governance and security to lakehouse data using a familiar, open interface. Combining Databricks Terraform Provider and Unity Catalog enables customers to govern their Lakehouse with ease and at scale through automation, which is critical for large enterprises.
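To make this more concrete, here is a minimal sketch of managing a catalog, a schema, and a set of grants as code. The catalog, schema, and group names are hypothetical, and the privilege names shown are an assumption: they depend on the Unity Catalog privilege model and provider version in use, so check the provider documentation before applying anything like this.

```hcl
# Hypothetical catalog for exploratory work.
resource "databricks_catalog" "sandbox" {
  name    = "sandbox"
  comment = "Managed by Terraform"
}

# Hypothetical schema inside that catalog.
resource "databricks_schema" "experiments" {
  catalog_name = databricks_catalog.sandbox.name
  name         = "experiments"
  comment      = "Managed by Terraform"
}

# Grants on the catalog. "data-scientists" is a hypothetical group,
# and the privilege names are assumed; verify them against the
# provider docs for your version.
resource "databricks_grants" "sandbox" {
  catalog = databricks_catalog.sandbox.name

  grant {
    principal  = "data-scientists"
    privileges = ["USE_CATALOG", "USE_SCHEMA", "SELECT"]
  }
}
```

Because access rules live in version control alongside the objects they protect, a change in who can read what becomes a reviewable diff rather than a manual action in the UI.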

Provider quality and support

The provider is now officially supported by Databricks and has established issue tracking through GitHub. Pull requests are always welcome. Code undergoes heavy integration testing each release and has significant unit test coverage.

Most used resources

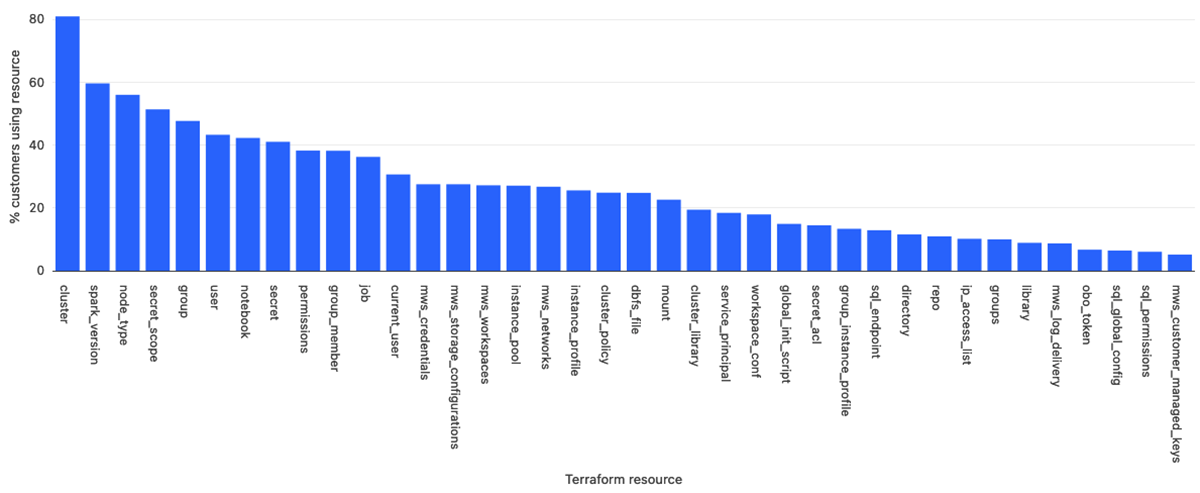

The use cases described above are the most typical ones, but customers implement many other things with Databricks Terraform Provider. The accompanying diagram gives another view, showing which resource types customers most often manage via Terraform.

Migrating to GA version of Databricks Terraform Provider

To make Databricks Terraform Provider generally available, we have moved it from https://github.com/databrickslabs to https://github.com/databricks. We have worked closely with the Terraform Registry team at HashiCorp to ensure a smooth migration. Existing Terraform deployments continue to work as expected without any action from your side. You should have a .terraform.lock.hcl file in your state directory that is checked into source control. Running terraform init will give you the following warning:

Warning: Additional provider information from registry

The remote registry returned warnings for registry.terraform.io/databrickslabs/databricks:

- For users on Terraform 0.13 or greater, this provider has moved to databricks/databricks. Please update your source in required_providers.

After you replace databrickslabs/databricks with databricks/databricks in the required_providers block, the warning will disappear. You can do a global search and replace across your *.tf files. Alternatively, you can run python3 -c "$(curl -Ls https://dbricks.co/updtfns)" from the command line, which does the replacement for you.
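For reference, a required_providers block pointing at the new source address might look like the sketch below. The version constraint is an assumption added for illustration; pin whichever version your team has tested.

```hcl
terraform {
  required_providers {
    databricks = {
      # Previously: source = "databrickslabs/databricks"
      source = "databricks/databricks"

      # Illustrative constraint only; adjust to your tested version.
      version = ">= 1.0.0"
    }
  }
}
```

The same change applies to every module that declares the provider, since each module carries its own required_providers block.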

However, you may run into one of the following problems when running the terraform init command: "Failed to install provider" or "Failed to query available provider packages". This happens when .terraform.lock.hcl was not checked in to source control.

Error: Failed to install provider

Error while installing databrickslabs/databricks: v1.0.0: checksum list has no SHA-256 hash for "https://github.com/databricks/terraform-provider-databricks/releases/download/v1.0.0/terraform-provider-databricks_1.0.0_darwin_amd64.zip"

You can fix it by following three simple steps:

1. Replace databrickslabs/databricks with databricks/databricks in all your .tf files via the python3 -c "$(curl -Ls https://dbricks.co/updtfns)" command.
2. Run the terraform state replace-provider databrickslabs/databricks databricks/databricks command and approve the changes. See Terraform CLI docs for more information.
3. Run terraform init to verify everything is working.

That's it. The terraform apply command should work as expected now.

You can check out the Databricks Terraform Provider documentation, and start automating your Databricks lakehouse management with Terraform beginning with this guide and examples repository. If you already have an existing Databricks setup not managed through Terraform, you can use the experimental exporter functionality to get a starting point.

Going forward, our engineers will continue to add Terraform Provider support for new Databricks features, as well as new modules, templates and walkthroughs.
