Security Best Practices for Delta Sharing

Best practices available to customers to harden Delta Sharing requests on their lakehouse

Published: August 1, 2022

by Andrew Weaver, Itai Weiss, Milos Colic and Som Natarajan

Update: Delta Sharing is now generally available on AWS and Azure.

The data lakehouse has enabled us to consolidate our data management architectures, eliminating silos and leveraging one common platform for all use cases. The unification of data warehousing and AI use cases on a single platform is a huge step forward for organizations, but once they've taken that step, the next question to consider is "how do we share that data simply and securely no matter which client, tool or platform the recipient is using to access it?" Luckily, the lakehouse has an answer to this question too: data sharing with Delta Sharing.

Delta Sharing

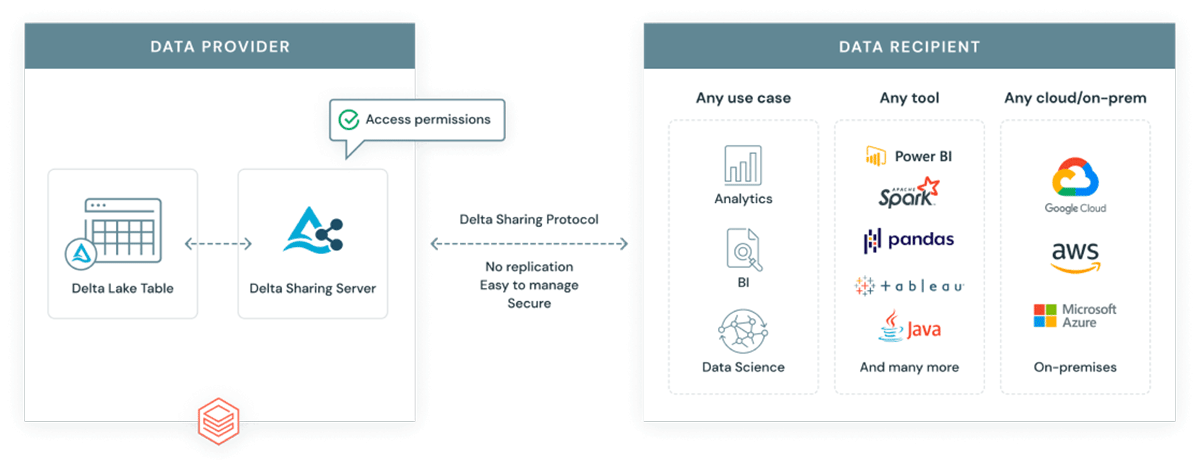

Delta Sharing is the world's first open protocol for securely sharing data internally and across organizations in real-time, independent of the platform on which the data resides. It's a key component of the openness of the lakehouse architecture, and a key enabler for organizing our data teams and access patterns in ways that haven't been possible before, such as data mesh.

Secure by Design

It's important to note that Delta Sharing has been built from the ground up with security in mind, allowing you to leverage the following features out of the box whether you use the open source version or its managed equivalent:

- End-to-end TLS encryption from client to server to storage account

- Short lived credentials such as pre-signed URLs are used to access the data

- Easily govern, track, and audit access to your shared data sets via Unity Catalog

The best practices that we'll share as part of this blog are additive, allowing customers to align the appropriate security controls to their risk profile and the sensitivity of their data.

Security Best Practices

Our best practice recommendations for using Delta Sharing to share sensitive data are as follows:

- Assess the open source versus the managed version based on your requirements

- Set the appropriate recipient token lifetime for every metastore

- Establish a process for rotating credentials

- Consider the right level of granularity for Shares, Recipients & Partitions

- Configure IP Access Lists

- Configure Databricks Audit logging

- Configure network restrictions on the Storage Account(s)

- Configure logging on the Storage Account(s)

1. Assess the open source versus the managed version based on your requirements

As we have established above, Delta Sharing has been built from the ground up with security top of mind. However, there are advantages to using the managed version:

- Delta Sharing on Databricks is provided by Unity Catalog, which allows you to provide fine-grained access to any data sets between different sets of users centrally from one place. With the open source version, you would need to separate data sets that have various data access rights amongst several sharing servers, and you would also need to impose access restrictions on those servers and the underlying storage accounts. For ease of deployment, a docker image is provided with the open source version, but it is important to note that scaling deployments across large enterprises will pose a non-trivial overhead on the teams responsible for managing them.

- Just like the rest of the Databricks Lakehouse Platform, Unity Catalog is provided as a managed service. You don't need to worry about things like the availability, uptime and maintenance of the service because we worry about that for you.

- Unity Catalog allows you to configure comprehensive audit logging capabilities out of the box.

- Data owners will be able to manage shares using SQL syntax. Additionally, REST APIs are available to manage shares. Using familiar SQL syntax simplifies the way we share data, reducing the administrative burden.

- Using the open source version, you're responsible for the configuration, infrastructure and management of data sharing, but with the managed version, all this functionality is available out of the box.

For these reasons, we recommend assessing both versions and making a decision based on your requirements. If ease of setup and use, out-of-the-box governance and auditing, and outsourced service management are important to you, the managed version will likely be the right choice.

2. Set the appropriate recipient token lifetime for every metastore

When you enable Delta Sharing, you configure the token lifetime for recipient credentials. If you set the token lifetime to 0, recipient tokens never expire.

Setting the appropriate token lifetime is critically important from a regulatory, compliance and reputational standpoint. A token that never expires is a huge risk; therefore, we recommend using short-lived tokens as a best practice. It is far easier to grant a new token to a recipient whose token has expired than it is to investigate the use of a token whose lifetime has been improperly set.

See the documentation (AWS, Azure) for configuring tokens to expire after the appropriate number of seconds, minutes, hours, or days.
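As a rough illustration of how a lifetime setting translates into an expiry time, here is a minimal Python sketch. The helper names `lifetime_in_seconds` and `token_expiry` are hypothetical and are not part of any Databricks API; the only behavior taken from the text above is that a lifetime of 0 means the token never expires.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def lifetime_in_seconds(days: int = 0, hours: int = 0, minutes: int = 0, seconds: int = 0) -> int:
    """Convert a human-readable lifetime into whole seconds."""
    delta = timedelta(days=days, hours=hours, minutes=minutes, seconds=seconds)
    return int(delta.total_seconds())


def token_expiry(issued_at: datetime, lifetime_seconds: int) -> Optional[datetime]:
    """Return the expiry time, or None when the lifetime is 0 (token never expires)."""
    if lifetime_seconds < 0:
        raise ValueError("token lifetime cannot be negative")
    if lifetime_seconds == 0:
        return None
    return issued_at + timedelta(seconds=lifetime_seconds)


issued = datetime(2022, 8, 1, tzinfo=timezone.utc)  # hypothetical issue time
one_week = lifetime_in_seconds(days=7)
print(one_week)                        # 604800
print(token_expiry(issued, one_week))  # 2022-08-08 00:00:00+00:00
print(token_expiry(issued, 0))         # None
```

A check like this could sit in a review script that flags any metastore whose configured lifetime is 0.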

3. Establish a process for rotating credentials

There are a number of reasons that you might want to rotate credentials: an existing token has expired, you are concerned that a credential may have been compromised, or you have simply modified the token lifetime and want to issue new credentials that respect that expiration time.

To ensure that such requests are fulfilled in a predictable and timely manner, it's important to establish a process, preferably with an established SLA. This could be integrated into your IT service management process, with the appropriate action completed by the designated data owner, data steward or DBA for that metastore.

See the documentation (AWS, Azure) for how to rotate credentials. In particular:

- If you need to rotate a credential immediately, set --existing-token-expire-in-seconds to 0, and the existing token will expire immediately.

- Databricks recommends the following actions when there are concerns that credentials may have been compromised:

  - Revoke the recipient's access to the share.

  - Rotate the recipient and set --existing-token-expire-in-seconds to 0 so that the existing token expires immediately.

  - Share the new activation link with the intended recipient over a secure channel.

  - After the activation URL has been accessed, grant the recipient access to the share again.
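The compromised-credential steps above can be sketched as an ordered runbook. This is only an illustration: the function name `compromised_credential_runbook` and the share and recipient names are hypothetical, the REVOKE and GRANT statements are assumed to follow Databricks SQL share syntax (check the documentation before use), and the rotation step is described in words because the exact command depends on your tooling.

```python
from typing import List


def compromised_credential_runbook(share: str, recipient: str) -> List[str]:
    """Return the four response steps, in order, for a suspected credential leak."""
    return [
        # 1. Cut off access first so the leaked token cannot read this share.
        f"REVOKE SELECT ON SHARE {share} FROM RECIPIENT {recipient};",
        # 2. Rotate and expire the old token immediately (flag taken from the text above).
        f"rotate recipient {recipient} with --existing-token-expire-in-seconds 0",
        # 3. Manual, out-of-band step.
        f"send the new activation link for {recipient} over a secure channel",
        # 4. Restore access only after the activation URL has been accessed.
        f"GRANT SELECT ON SHARE {share} TO RECIPIENT {recipient};",
    ]


# Hypothetical share and recipient names, for illustration only.
for step in compromised_credential_runbook("sales_share", "partner_a"):
    print(step)
```

Encoding the order explicitly makes it harder to skip the revoke step or to re-grant access before the new activation link has been used.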

4. Consider the right level of granularity for Shares, Recipients & Partitions

In the managed version, each share can contain one or more tables and can be associated with one or more recipients, with fine-grained controls over who can access each data set and how. This allows us to provide fine-grained access to multiple data sets in a way that would be much harder to achieve using open source alone. We can even go one step further, adding only part of a table to a share by providing a partition specification (see the documentation on AWS, Azure).

It's worth taking advantage of these features by implementing your shares and recipients to follow the principle of least privilege, such that if a recipient credential is compromised, it is associated with the fewest number of data sets or the smallest subset of the data possible.
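To make the least-privilege idea concrete, here is a minimal Python sketch that generates the SQL for one narrowly scoped share. The function name `least_privilege_statements` and all share, recipient, table and partition names are hypothetical, and the statements are assumed to follow Databricks SQL share syntax; verify them against the documentation for your cloud before running them.

```python
from typing import Dict, List


def least_privilege_statements(share: str, recipient: str, tables: Dict[str, str]) -> List[str]:
    """Build SQL for one narrowly scoped share granted to a single recipient.

    `tables` maps a fully qualified table name to a partition clause, or to ""
    when the whole table should be shared.
    """
    statements = [f"CREATE SHARE IF NOT EXISTS {share};"]
    for table, partition in tables.items():
        clause = f" PARTITION ({partition})" if partition else ""
        statements.append(f"ALTER SHARE {share} ADD TABLE {table}{clause};")
    statements.append(f"GRANT SELECT ON SHARE {share} TO RECIPIENT {recipient};")
    return statements


# Hypothetical example: share only the EMEA partition of one table.
for statement in least_privilege_statements(
    "emea_sales", "partner_a", {"main.sales.orders": "region = 'EMEA'"}
):
    print(statement)
```

One share per recipient and purpose, with partition clauses where possible, keeps the amount of data exposed by any single compromised credential small.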

5. Configure IP Access Lists

By default, all that is required to access your shares is a valid Delta Sharing Credential File. It's therefore critical to limit the damage a compromised credential can do by implementing network-level limits on where credentials can be used from.

Configure Delta Sharing IP access lists (see the docs for AWS, Azure) to restrict recipient access to trusted IP addresses, for example, the public IP of your corporate VPN.

Combining IP access lists with the access token considerably reduces the risk of unauthorized access. To access the data in an unauthorized manner, someone would need both to have acquired a copy of your token and to be on an authorized network, which is much harder than just acquiring the token itself.
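The two-factor effect described above (valid token and trusted network) can be illustrated with Python's standard `ipaddress` module. This sketch shows the logic only and is not how Databricks enforces access lists; the function names and the IP ranges (taken from the reserved documentation ranges) are hypothetical.

```python
import ipaddress
from typing import Iterable


def is_allowed(client_ip: str, allowed_cidrs: Iterable[str]) -> bool:
    """Return True when the client IP falls inside any allowed CIDR range."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in ipaddress.ip_network(cidr) for cidr in allowed_cidrs)


def may_access(token_valid: bool, client_ip: str, allowed_cidrs: Iterable[str]) -> bool:
    """Access requires both a valid token and a trusted source address."""
    return token_valid and is_allowed(client_ip, allowed_cidrs)


corporate_vpn = ["203.0.113.0/24"]  # hypothetical trusted range

print(may_access(True, "203.0.113.25", corporate_vpn))   # True
print(may_access(True, "198.51.100.7", corporate_vpn))   # False: stolen token, wrong network
print(may_access(False, "203.0.113.25", corporate_vpn))  # False: right network, no valid token
```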

6. Configure Databricks Audit Logging

Audit logs are your authoritative record of what's happening on your Databricks Lakehouse Platform, including all of the activities related to Delta Sharing. As such, we highly recommend that you configure Databricks audit logs for each cloud (see the docs for AWS, Azure) and set up automated pipelines to process those logs and monitor/alert on important events.

Check out our companion blog, Monitoring Your Databricks Lakehouse Platform with Audit Logs, for a deeper dive on this subject, including all the code you need to set up Delta Live Tables pipelines, configure Databricks SQL alerts and run SQL queries to answer important questions like:

- Which of my Delta Shares are the most popular?

- Which countries are my Delta Shares being accessed from?

- Are Delta Sharing Recipients being created without IP access list restrictions being applied?

- Are Delta Sharing Recipients being created with IP access list restrictions which are outside of my trusted IP address range?

- Are attempts to access my Delta Shares failing IP access list restrictions?

- Are attempts to access my Delta Shares repeatedly failing authentication?
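As a small illustration of the last question, the following Python sketch flags recipients with repeated authentication failures. The flat record shape (`recipient`, `status_code`) is a simplifying assumption made for this example; real Databricks audit logs use a richer, nested schema, so the field access would need to be adapted.

```python
from collections import Counter
from typing import Dict, Iterable, List


def repeated_auth_failures(events: Iterable[Dict], threshold: int = 3) -> List[str]:
    """Return recipients with at least `threshold` failed (401/403) requests."""
    failures = Counter(
        event["recipient"] for event in events if event["status_code"] in (401, 403)
    )
    return sorted(name for name, count in failures.items() if count >= threshold)


# Hypothetical, simplified audit events.
sample_events = [
    {"recipient": "partner_a", "status_code": 200},
    {"recipient": "partner_b", "status_code": 401},
    {"recipient": "partner_b", "status_code": 401},
    {"recipient": "partner_b", "status_code": 403},
    {"recipient": "partner_a", "status_code": 401},
]

print(repeated_auth_failures(sample_events))  # ['partner_b']
```

In practice the same aggregation would more likely run as a scheduled SQL query with an alert attached, as described in the companion blog.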

7. Configure network restrictions on the storage account(s)

Once a Delta Sharing request has been successfully authenticated by the sharing server, an array of short-lived credentials (pre-signed URLs) is generated and returned to the client. The client then uses these URLs to request the relevant files directly from the cloud provider. This design means that the transfer can happen in parallel at massive bandwidth, without streaming the results through the server. It also means that, from a security perspective, you're likely to want to implement network restrictions on the storage account similar to those applied to the Delta Sharing recipient itself. There's no point in protecting the share at the recipient level if the data itself is hosted in a storage account that can be accessed by anyone and from anywhere.

Azure

On Azure, Databricks recommends using Managed Identities (currently in Public Preview) to access the underlying Storage Account on behalf of Unity Catalog. Customers can then configure Storage firewalls to restrict all other access to the trusted private endpoints, virtual networks or public IP ranges that Delta Sharing clients may use to access the data. Please reach out to your Databricks representative for more information.

Important Note: It's important to consider all of the potential use cases when determining what network level restrictions to apply. For example, as well as accessing data via Delta Sharing, it's likely that one or more Databricks workspaces will also require access to the data, and therefore you should allow access from the relevant trusted private endpoints, virtual networks or public IP ranges used by those workspaces.

AWS

On AWS, Databricks recommends using S3 bucket policies to restrict access to your S3 buckets. For example, a Deny statement could be used to restrict access to trusted IP addresses and VPCs.
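A minimal sketch of what such a Deny statement might look like, built as a Python dictionary and serialized to JSON. The function name `deny_untrusted_networks`, the bucket name, the IP range and the VPC ID are hypothetical placeholders; the condition keys `aws:SourceIp` and `aws:SourceVpc` are standard AWS global condition keys. Treat it as a starting point to adapt and test, not as a definitive policy.

```python
import json
from typing import Dict, List


def deny_untrusted_networks(bucket: str, trusted_cidrs: List[str], trusted_vpcs: List[str]) -> Dict:
    """Build a bucket policy that denies S3 access from outside trusted networks."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyUntrustedNetworks",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
                # Condition operators are ANDed: the Deny applies only when the
                # request comes from neither a trusted IP nor a trusted VPC.
                "Condition": {
                    "NotIpAddress": {"aws:SourceIp": trusted_cidrs},
                    "StringNotEquals": {"aws:SourceVpc": trusted_vpcs},
                },
            }
        ],
    }


# Hypothetical values, for illustration only.
policy = deny_untrusted_networks(
    bucket="my-delta-sharing-bucket",
    trusted_cidrs=["203.0.113.0/24"],
    trusted_vpcs=["vpc-0123456789abcdef0"],
)
print(json.dumps(policy, indent=2))
```

Because a Deny of this kind overrides any Allow, the trusted lists should be reviewed against every legitimate access path before the policy is attached to a bucket.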

Important Note: It's important to consider all of the potential use cases when determining what network level restrictions to apply. For example:

- When using the managed version, the pre-signed URLs are generated by Unity Catalog, and therefore you will need to allow access from the Databricks Control Plane NAT IP for your region.

- It's likely that one or more Databricks workspaces will also require access to the data. You should therefore allow access from the relevant VPC IDs (if the underlying S3 bucket is in the same region and you're using VPC Endpoints to connect to S3) or from the public IP address that the data plane traffic resolves to (for example, via a NAT Gateway).

- To avoid losing connectivity from within your corporate network, Databricks recommends always allowing access from at least one known and trusted IP address, such as the public IP of your corporate VPN. This is because Deny conditions apply even within the AWS console.

In addition to network level restrictions, it is also recommended that you restrict access to the underlying S3 buckets to the IAM role used by Unity Catalog. The reason is that, as we have seen, Unity Catalog provides fine-grained access to your data in a way that is not possible with the coarse-grained permissions provided by AWS IAM/S3. Therefore, if someone were able to access the S3 bucket directly, they might be able to bypass those fine-grained permissions and access more of the data than you had intended.

Important Note: As above, Deny conditions apply even within the AWS console, so it is recommended that you also allow access to an administrator role that a small number of privileged users can use to access the AWS UI/APIs.

8. Configure logging on the storage account(s)

In addition to enforcing network-level restrictions on the underlying storage account(s), you're likely going to want to monitor whether anyone is trying to bypass them. As such, Databricks recommends:

- Setting up S3 server access logging on AWS as well as the appropriate monitoring and alerting around it

- Setting up Diagnostic logging on Azure, as well as the appropriate monitoring and alerting around it

Conclusion

The lakehouse has solved most of the data management issues that led to fragmented data architectures and access patterns, and that severely throttled the time to value an organization could expect to see from its data. Now that data teams have been freed from these problems, open but secure data sharing has become the next frontier.

Delta Sharing is the world's first open protocol for securely sharing data internally and across organizations in real-time, independent of the platform on which the data resides. And by using Delta Sharing in combination with the best practices outlined above, organizations can easily but safely exchange data with their users, partners and customers at enterprise scale.

Existing data marketplaces have failed to maximize business value for data providers and data consumers, but with Databricks Marketplace you can leverage the Databricks Lakehouse Platform to reach more customers, reduce costs and deliver more value across all of your data products.

If you're interested in becoming a Data Provider Partner, we'd love to hear from you!
