Orchestrating Data and ML Workloads at Scale: Create and Manage Up to 10k Jobs Per Workspace

Databricks Workflows is the fully managed orchestrator for data, analytics, and AI. Today, we are happy to announce several enhancements that make it easier to bring the most demanding data and ML/AI workloads to the cloud.

Workflows offers high reliability across multiple major cloud providers: GCP, AWS, and Azure. Until today, maintaining that reliability meant limiting the number of jobs that could be managed in a Databricks workspace to 1,000 (the exact number varied by tier). Customers running more data and ML/AI workloads had to partition jobs across workspaces to avoid running into platform limits. We are now raising this limit to 10,000 jobs per workspace. The new limit is automatically available in all customer workspaces (except single-tenant).

Thousands of customers rely on the Jobs API to create and manage jobs from their applications, including CI/CD systems. Together with the increased job limit, we have introduced a faster, paginated version of the jobs/list API and added pagination to the jobs page.
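As a rough illustration of what consuming a paginated list endpoint looks like from an application, here is a minimal Python sketch. It assumes the token-based pagination described in the public Jobs API 2.1 reference (`limit` and `page_token` request parameters; `jobs`, `has_more`, and `next_page_token` response fields); please check the current API reference for your workspace, since these details may differ or change. The helper names `iter_jobs` and `http_fetcher` are hypothetical and not part of any Databricks library.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable, Dict, Iterator, Optional


def iter_jobs(
    fetch_page: Callable[[Dict[str, str]], dict], limit: int = 25
) -> Iterator[dict]:
    """Yield every job, requesting one page at a time.

    `fetch_page` receives the query parameters and returns the decoded
    JSON response. Field names below are assumptions (see lead-in).
    """
    page_token: Optional[str] = None
    while True:
        params = {"limit": str(limit)}
        if page_token:
            params["page_token"] = page_token
        page = fetch_page(params)
        # A page with no jobs may omit the "jobs" key entirely.
        yield from page.get("jobs", [])
        page_token = page.get("next_page_token")
        # Stop when the service reports no further pages.
        if not page.get("has_more") or not page_token:
            break


def http_fetcher(host: str, token: str) -> Callable[[Dict[str, str]], dict]:
    """Build a `fetch_page` callable that queries a workspace over HTTPS.

    `host` is the workspace URL and `token` a personal access token;
    the endpoint path is assumed to be /api/2.1/jobs/list.
    """

    def fetch_page(params: Dict[str, str]) -> dict:
        query = urllib.parse.urlencode(params)
        request = urllib.request.Request(
            f"{host}/api/2.1/jobs/list?{query}",
            headers={"Authorization": f"Bearer {token}"},
        )
        with urllib.request.urlopen(request) as response:
            return json.load(response)

    return fetch_page
```

With a real workspace, usage would look something like `for job in iter_jobs(http_fetcher(host, token)): ...`. Separating the page-walking loop from the HTTP call keeps the loop easy to test with a stub and avoids loading thousands of job definitions into memory at once.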

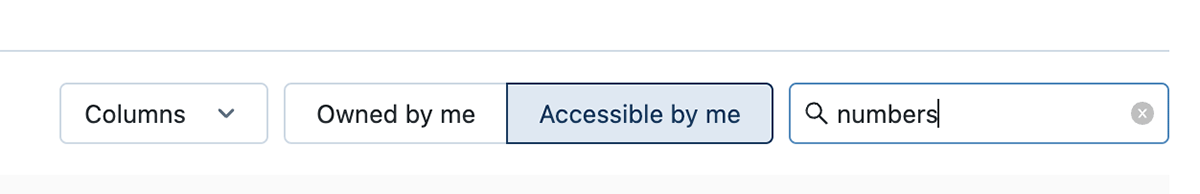

The higher workspace limit also comes with a streamlined search experience that supports searching by name, tags, and job ID.

Together, these features let a workspace scale to thousands of jobs. In the rare cases where the changes in behavior above are not desired, it is possible to revert to the old behavior via the Admin Console (only for workspaces with up to 3,000 jobs). We strongly recommend that all customers switch to the new paginated API to list jobs, especially in workspaces with thousands of saved jobs.

To get started with Databricks Workflows, see the quickstart guide. We'd also love to hear from you about your experience and any other features you'd like to see.
