Best practices for cross-government data sharing

Government data exchange is the practice of sharing data between different government agencies and, often, with partners in the commercial sector. Governments share data for various reasons, such as improving the efficiency of government operations, providing better services to the public, or supporting research and policy-making. Data exchange in the public sector can also involve sharing data with, or receiving data from, the private sector. The considerations span multiple jurisdictions and almost all industries. In this blog, we will address the needs set out in national data strategies and show how modern technologies, particularly Delta Sharing, Unity Catalog and data cleanrooms, can help you design, implement and manage a future-proof and sustainable data ecosystem.

Data sharing and the public sector

"The miracle is this: the more we share the more we have." - Leonard Nimoy.

This is probably the quote about sharing that applies most profoundly to data sharing, insofar as the purpose of sharing data is to create new information, new insights and new data. The importance of data sharing is amplified even further in the government context, where federation between departments allows for increased focus. Still, the very same federation introduces challenges around data completeness, data quality, data access, security and control, the FAIRness of data (findability, accessibility, interoperability and reusability), etc. These challenges are far from trivial and require a strategic, multi-faceted approach to be addressed appropriately. Technology, people, processes, legal frameworks, etc., all require dedicated consideration when designing a robust data sharing ecosystem.

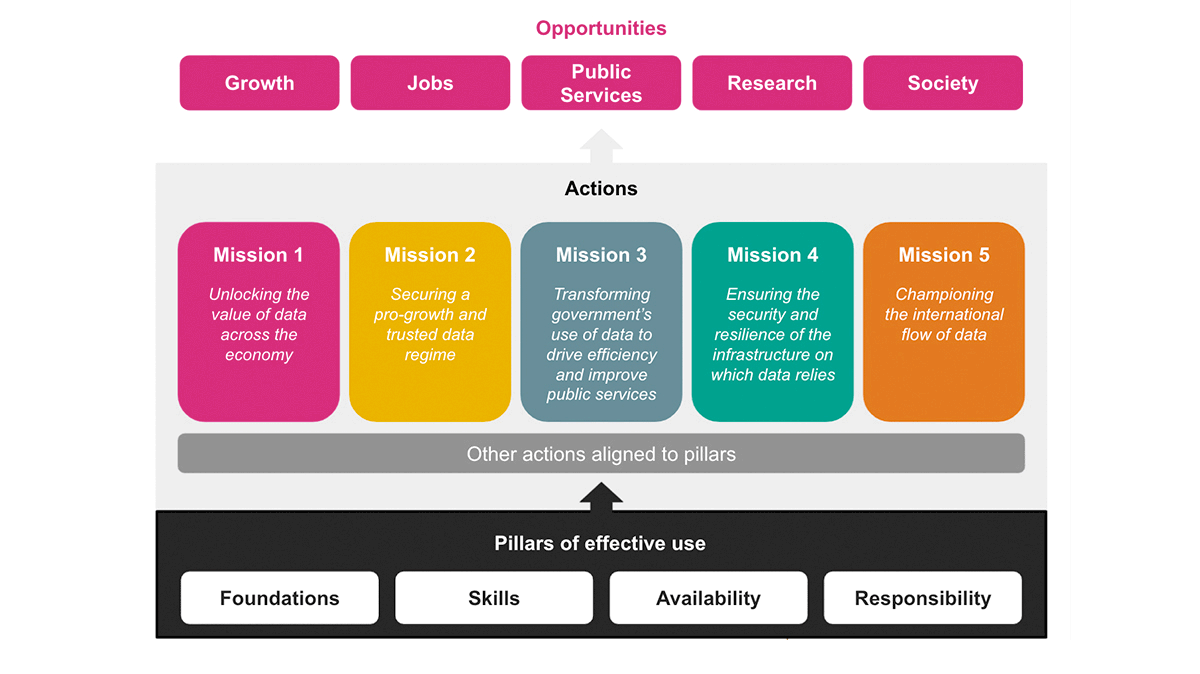

The National Data Strategy (NDS) by the UK Government outlines five actionable missions through which we can realize the value of data for citizens and for society as a whole.

It comes as no surprise that each and every one of the missions is strongly related to the concept of data sharing, or more broadly, data access both within and outside of government departments:

- Unlocking the value of data across the economy - Mission 1 of the NDS aims to position government and regulators as enablers of value extraction from data through the adoption of best practices. The UK data economy was estimated at nearly £125 billion in 2021, with an upward trend. In this context, it is essential to understand that open data collected and provided by the government can be crucial for addressing many challenges across all industries. For example, insurance providers can better assess the risk of insuring properties by ingesting and integrating flood area data provided by DEFRA. Similarly, capital market investors could better understand the risk of their investments by ingesting and integrating inflation data published by the ONS. Conversely, it is crucial for regulators to have well-defined data access and data sharing patterns for conducting their regulatory activities. This clarity truly enables the economic actors that interact with government data.

- Securing a pro-growth and trusted data regime - The key aspect of Mission 2 is data trust or, more broadly, adherence to data quality norms. Data quality considerations are further amplified in data sharing and data exchange use cases, where we consider the whole ecosystem at once and quality implications transcend the boundaries of our own platform. This is precisely why we have to adopt the principle of "data sustainability." By sustainable data products, we mean data products that harness existing sources rather than reinventing the same or similar assets or accumulating unnecessary data (data pollutants), and that anticipate future uses. Ungoverned and unbounded data sharing could negatively impact data quality and hinder the growth and value of data. How data is shared should therefore be a key consideration of data quality frameworks. For this reason, we require a solid set of standards and best practices for data sharing, with governance and quality assurance built into both the process and the technologies. Only in this way can we ensure the sustainability of our data and secure a pro-growth, trusted data regime.

- Transforming government's use of data to drive efficiency and improve public services - "By 2025 data assets are organized and supported as products, regardless of whether they're used by internal teams or external customers… Data products continuously evolve in an agile manner to meet the needs of consumers… these products provide data solutions that can more easily and repeatedly be used to meet various business challenges and reduce the time and cost of delivering new AI-driven capabilities." - The data-driven enterprise of 2025 by McKinsey. AI and ML can be powerful enablers of digital transformation for both the public and private sectors. AI, ML, reports and dashboards are just a few examples of data products and services that extract value from data. The quality of these solutions directly reflects the quality of the data used to build them and our ability to access and leverage available data assets, both internally and externally. Whilst there is a vast amount of data available for building new intelligent solutions that drive efficiency through better processes, better decision-making and better policies, numerous barriers can trap that data, such as legacy systems, data silos, fragmented standards and proprietary formats. Modeling data solutions as data products and standardizing them on a unified format allows us to abstract away such barriers and truly leverage the data ecosystem.

- Ensuring the security and resilience of the infrastructure on which data relies - The vision for 2025 is not far off, and even in the near future we will be required to rethink our approach to data and, more specifically, to ask: what is our digital supply chain infrastructure, our data sharing infrastructure? Data and data assets are products and should be managed as products. If data is a product, we need a coherent and unified way of providing those products. If data is to be used across industries and across both the private and public sectors, we need an open protocol that drives adoption and habit generation. To drive adoption, the technologies we use must be resilient, robust, trusted and usable by all. Vendor lock-in, platform lock-in and cloud lock-in are all barriers to achieving this vision.

- Championing the international flow of data - Data exchange between jurisdictions and across governments will likely be one of the most transformative applications of data at scale. Some of the world's toughest challenges depend on the efficient exchange of data between governments: prevention of criminal activities, counter-terrorism, net zero emission goals, international trade, and the list goes on. Some steps in this direction are already materializing: the US Federal Government and the UK Government have agreed on data exchange for countering serious crime. This is a true example of championing the international flow of data and using data for good. It is imperative that, for these use cases, we approach data sharing from a security-first angle. Data sharing standards and protocols need to adhere to security and privacy best practices.

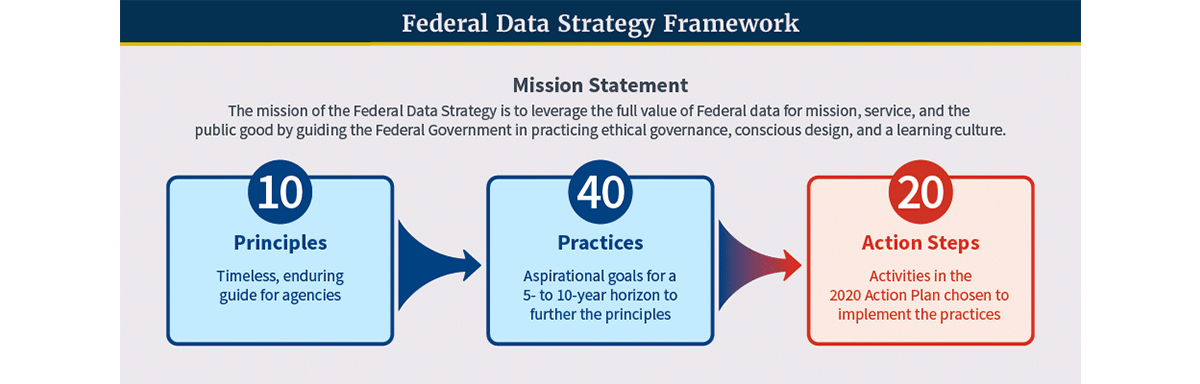

While originally built with a focus on the UK Government and how to better integrate data as a key asset of a modern government, these concepts apply in a much wider global public sector context. In the same spirit, the US Federal Government proposed the Federal Data Strategy as a collection of principles, practices, action steps and a timeline through which government can leverage the full value of Federal data for mission, service and the public good.

The principles are grouped into three primary topics:

- Ethical governance - Within the domain of ethics, the sharing of data is a fundamental tool for promoting transparency, accountability and explainability of decision-making. It is practically impossible to uphold ethics without some form of audit conducted by an independent party. Data (and metadata) exchange is a critical enabler for continuous robust processes that ensure we are using the data for good and we are using data we can trust.

- Conscious design - These principles are strongly aligned with the idea of data sustainability. The guidelines promote forward thinking around usability and interoperability of the data and user-centric design principles of sustainable data products.

- Learning culture - Data sharing, or alternatively knowledge sharing, has an important role in building a scalable learning ecosystem and learning culture. Data is front and center of knowledge synthesis and, from a scientific angle, data substantiates factual knowledge. Another critical component of knowledge is the "Why?", and data is what we need to address the "Why?" behind any decision we make: which policy to enforce, who to sanction, who to support with grants, how to improve the efficiency of government services, how to better serve citizens and society.

In contrast to the qualitative analysis of the value of data sharing across governments discussed above, the European Commission forecasts that the European data economy will exceed €800 billion by 2027, roughly the size of the Dutch economy in 2021! Furthermore, it predicts more than 10 million data professionals in Europe alone. The technology and infrastructure to support the data society have to be accessible to all, interoperable, extensible, flexible and open. Imagine a world in which you needed a different truck to transport products between different warehouses because each road required a different set of tires: the whole supply chain would collapse. When it comes to data, we often experience this "one set of tires for one road" paradox. REST APIs and data exchange protocols have been proposed in the past, but they have failed to address the need for simplicity, ease of use and a cost of scaling up that stays manageable as the number of data products grows.

Delta Sharing - the new data highway

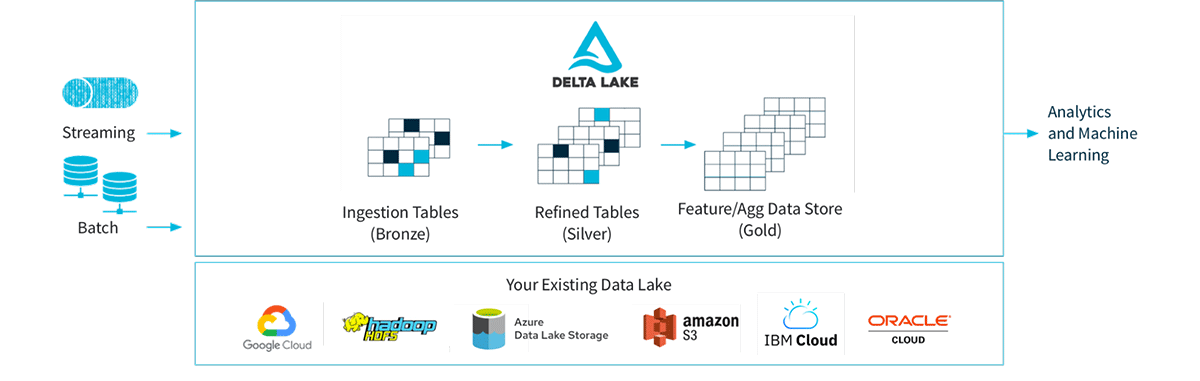

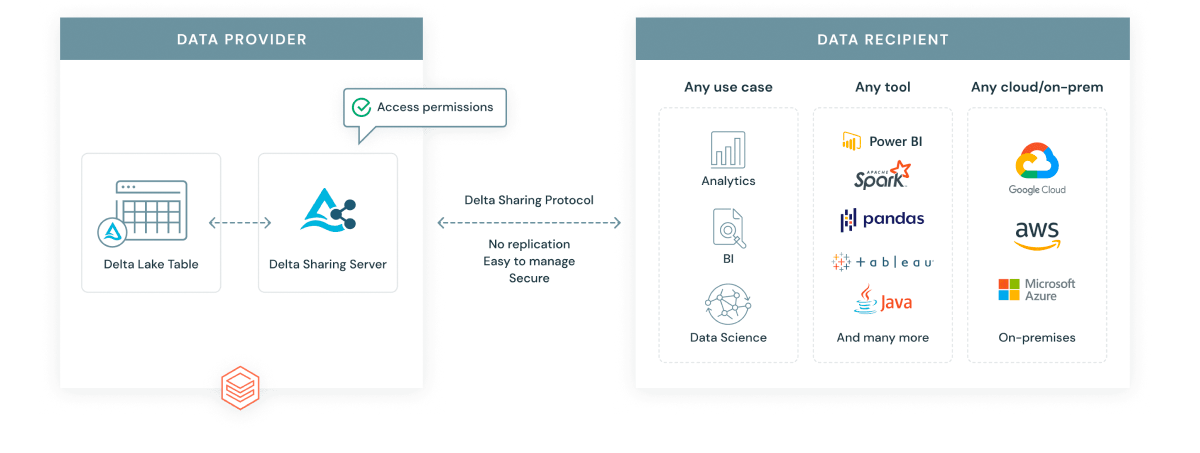

Delta Sharing provides an open protocol for secure data sharing to any computing platform. The protocol is based on the Delta data format and is cloud-agnostic.

Delta is an open source data format that avoids vendor, platform and cloud lock-in, thus fully adhering to the principles of data sustainability, the conscious design principles of the US Federal Data Strategy and Mission 4 of the UK National Data Strategy. Delta provides a governance layer on top of the Parquet data format, in the form of a transaction log that records every change made to a table. Furthermore, it provides many performance optimizations not available in Parquet out of the box. The openness of the data format is a critical consideration; it is the main factor driving habit generation and the adoption of best practices and standards.
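To make the "governance layer" more concrete: a Delta table is a directory of Parquet files plus a `_delta_log` folder of JSON commit files, and each commit records actions such as the protocol version, the table metadata (including its schema) and the data files it adds. The sketch below parses one hypothetical first commit using only the Python standard library; the table id, column names and file name are invented for illustration. A real reader replays every commit in order and also handles `remove` actions and checkpoints, so treat this as a simplified reading of the log format, not a working Delta reader.

```python
import json

# Hypothetical first commit of a small table, i.e. the contents of
# _delta_log/00000000000000000000.json. Each line is one JSON "action".
SCHEMA = {
    "type": "struct",
    "fields": [
        {"name": "area_code", "type": "string", "nullable": False, "metadata": {}},
        {"name": "flood_risk", "type": "double", "nullable": True, "metadata": {}},
    ],
}
FIRST_COMMIT = "\n".join(
    json.dumps(action)
    for action in [
        {"protocol": {"minReaderVersion": 1, "minWriterVersion": 2}},
        {
            "metaData": {
                "id": "example-table-id",  # hypothetical; real tables use a UUID
                "format": {"provider": "parquet", "options": {}},
                "schemaString": json.dumps(SCHEMA),  # schema travels with the data
                "partitionColumns": [],
                "configuration": {},
            }
        },
        {
            "add": {
                "path": "part-00000.snappy.parquet",  # hypothetical data file
                "partitionValues": {},
                "size": 1024,
                "modificationTime": 0,
                "dataChange": True,
            }
        },
    ]
)


def read_commit(commit_text):
    """Return the schema fields and the data files described by one commit."""
    fields, files = [], []
    for line in commit_text.splitlines():
        action = json.loads(line)
        if "metaData" in action:
            schema = json.loads(action["metaData"]["schemaString"])
            fields = [(f["name"], f["type"], f["nullable"]) for f in schema["fields"]]
        elif "add" in action:
            files.append(action["add"]["path"])
    return fields, files


fields, files = read_commit(FIRST_COMMIT)
print(fields)  # [('area_code', 'string', False), ('flood_risk', 'double', True)]
print(files)   # ['part-00000.snappy.parquet']
```

Because the schema and the list of data files live in the same log, any consumer that reads the log sees exactly the same table definition as the producer.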

Delta Sharing is a protocol based on a lean set of REST APIs to manage sharing, permissions and access to any data asset stored in Delta or Parquet format. The protocol defines two main actors: the data provider (data supplier, data owner) and the data recipient (data consumer). The recipient, by definition, is agnostic to the data format at the source. Delta Sharing provides the necessary abstractions for governed data access in many different languages and tools.
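The recipient's side of this lean REST surface can be sketched as follows. A recipient receives a profile file holding the sharing server endpoint, a bearer token and, optionally, an expiration time; it then issues authenticated calls such as `GET /shares`, `GET /shares/{share}/schemas/{schema}/tables` and `POST /shares/{share}/schemas/{schema}/tables/{table}/query`. This is a minimal sketch based on our reading of the open protocol specification: the endpoint, token and share, schema and table names are hypothetical placeholders, and the requests are only built, never sent.

```python
import json
import urllib.request
from datetime import datetime, timezone

# Hypothetical profile file handed to a recipient by the data provider.
# Field names follow the Delta Sharing profile format; values are placeholders.
PROFILE = {
    "shareCredentialsVersion": 1,
    "endpoint": "https://sharing.example.org/delta-sharing/",
    "bearerToken": "<token>",
    "expirationTime": "2030-01-01T00:00:00Z",
}


def is_expired(profile, now=None):
    """Return True once the recipient's bearer token has passed its expiry."""
    expires = profile.get("expirationTime")
    if expires is None:
        return False
    # Only the simple "YYYY-MM-DDTHH:MM:SSZ" form is handled in this sketch.
    expires_at = datetime.strptime(expires, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    return (now or datetime.now(timezone.utc)) >= expires_at


def build_request(profile, path, method="GET", body=None):
    """Build (but do not send) an authenticated call to the sharing server."""
    url = profile["endpoint"].rstrip("/") + path
    data = json.dumps(body).encode("utf-8") if body is not None else None
    request = urllib.request.Request(url, data=data, method=method)
    request.add_header("Authorization", f"Bearer {profile['bearerToken']}")
    if data is not None:
        request.add_header("Content-Type", "application/json")
    return request


# Discover what has been shared, then ask for the files of one table.
list_shares = build_request(PROFILE, "/shares")
list_tables = build_request(PROFILE, "/shares/flood_share/schemas/defra/tables")
query_table = build_request(
    PROFILE,
    "/shares/flood_share/schemas/defra/tables/flood_areas/query",
    method="POST",
    body={"limitHint": 1000},  # optional hint; a server may ignore it
)
print(list_shares.get_method(), list_shares.full_url)
print(query_table.get_method(), query_table.full_url)
```

In practice, recipients rarely call these endpoints by hand; the open source `delta-sharing` Python connector, for instance, wraps them behind helpers such as `delta_sharing.load_as_pandas`. The expiry check is a small nod to the short-lived credentials discussed below.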

Delta Sharing is uniquely positioned to address many of the challenges of data sharing at scale within highly regulated domains like the public sector:

- Privacy and security concerns - Personally identifiable data, or otherwise sensitive or restricted data, is a major part of the data exchange needs of a data-driven and modernized government. Given the sensitive nature of such data, it is paramount that the governance of data sharing is maintained in a coherent and unified manner. Any unnecessary process and technological complexity increases the risk of over-sharing data. With this in mind, Delta Sharing has been designed with security best practices from its very inception. The protocol provides end-to-end encryption, short-lived credentials, and accessible and intuitive audit and governance features. All of these capabilities are available centrally across all your Delta tables on all clouds.

- Quality and accuracy - Another challenge of data sharing is ensuring that the data being shared is of high quality and accuracy. Because the underlying data is stored as Delta tables, we can guarantee that the transactional nature of the data is respected: Delta ensures the ACID properties of data. Furthermore, Delta supports constraints that enforce data quality requirements at storage. Other formats, such as CSV, CSVW, ORC, Avro and XML, do not offer such properties without significant additional effort. The issue is compounded by the fact that data quality cannot be ensured in the same way on both the provider and the recipient side without exactly reimplementing the source systems. It is critical to embed quality and metadata together with the data so that quality travels with it. Managing data, metadata and quality separately increases the risk of sharing and can lead to undesirable outcomes.

- Lack of standardization - Another challenge of data sharing is the lack of standardization in how data is collected, organized and stored. This is particularly pronounced in the context of governmental activities. While governments have proposed standard formats (e.g., the Office for National Statistics promotes the use of CSVW), aligning all private and public sector organizations to the standards proposed by such initiatives is a massive challenge. Other industries may have different requirements for scalability, interoperability, format complexity, unstructured data, etc. Most of the currently advocated standards fall short on several of these aspects. Delta is the most mature candidate for assuming the central role in standardizing the data exchange format. It has been built as a transactional and scalable data format; it supports structured, semi-structured and unstructured data; it stores the data schema and metadata together with the data; and it provides a scalable, enterprise-grade sharing protocol through Delta Sharing. Finally, Delta is one of the most popular open source projects in the ecosystem and, since May 2022, has surpassed 7 million monthly downloads.

- Cultural and organizational barriers - These challenges can be summarized in one word: friction. Unfortunately, civil servants commonly struggle to obtain access to both internal and external data due to overly cumbersome processes, policies and outdated standards. The principles we use to build our data platforms and our data sharing platforms have to be self-promoting, drive adoption and generate habits that adhere to best practices. If there is friction in standard adoption, the only way to ensure standards are respected is enforcement, and that itself is yet another barrier to achieving data sustainability. Organizations in both the private and public sectors have already adopted Delta Sharing. For example, US Citizenship and Immigration Services (USCIS) uses Delta Sharing to satisfy several inter-agency data-sharing requirements. Similarly, Nasdaq describes Delta Sharing as the "future of financial data sharing", and that future is open and governed.

- Technical challenges - Federation at government scale, or even further across multiple industries and geographies, poses technical challenges. Each organization within this federation owns its platform and drives its own technological, architectural, platform and tooling choices. How can we promote interoperability and data exchange in this vast, diverse technological ecosystem? Data itself is the only viable integration vehicle. As long as the data formats we use are scalable, open and governed, we can use them to abstract away individual platforms and their intrinsic complexities.
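The quality and accuracy point above can be illustrated with a toy model. In Delta itself, constraints are declared in SQL, for example `ALTER TABLE flood_areas ADD CONSTRAINT valid_risk CHECK (flood_risk BETWEEN 0 AND 1)` (the table and column names here are hypothetical), and a write that violates them fails as a whole. The plain-Python sketch below only mimics that all-or-nothing behavior with an in-memory table; it is not Delta's implementation, and its constraint semantics (for instance, treating a missing value as a violation) are simplifications of our own.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]


class ConstraintViolation(Exception):
    """Raised when a write would break one of the table's constraints."""


class ConstrainedTable:
    """Toy in-memory table that checks CHECK-style constraints on every write."""

    def __init__(self, constraints: Dict[str, Callable[[Row], bool]]):
        self.constraints = constraints
        self.rows: List[Row] = []
        self.version = 0  # bumped once per successful write, like a commit

    def append(self, batch: List[Row]) -> int:
        # Validate the whole batch before touching the table, so a failed
        # write leaves no partial data behind (all-or-nothing).
        for i, row in enumerate(batch):
            for name, check in self.constraints.items():
                if not check(row):
                    raise ConstraintViolation(f"row {i} violates {name}: {row}")
        self.rows.extend(batch)
        self.version += 1
        return self.version


def valid_risk(row: Row) -> bool:
    risk = row.get("flood_risk")
    return isinstance(risk, (int, float)) and 0.0 <= risk <= 1.0


table = ConstrainedTable({
    "area_code_not_null": lambda row: row.get("area_code") is not None,
    "valid_risk": valid_risk,
})
table.append([{"area_code": "E01", "flood_risk": 0.2}])
try:
    # The second row breaks a constraint, so neither row is written.
    table.append([{"area_code": "E02", "flood_risk": 0.4},
                  {"area_code": None, "flood_risk": 0.9}])
except ConstraintViolation as err:
    print("rejected:", err)
print(len(table.rows), table.version)  # 1 1
```

Because the check runs where the data is stored, every recipient of the shared table inherits the same guarantee without reimplementing it.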

Delta format and Delta Sharing solve this wide array of requirements and challenges in a scalable, robust and open way. This positions Delta Sharing as the strongest choice for unification and simplification of the protocol and mechanism through which we share data across both private and public sectors.

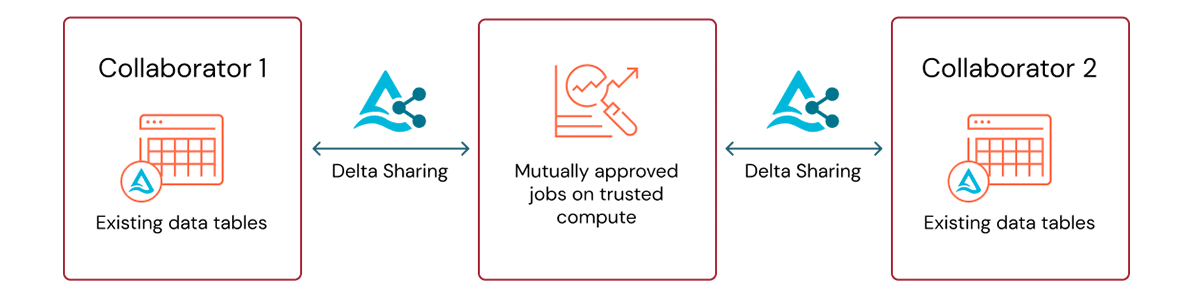

Data sharing through data cleanrooms

Taking the complexities of data sharing within highly regulated spaces and the public sector one step further: what if we need to share the knowledge contained in the data without ever granting external parties direct access to the source data? Such requirements can prove both achievable and desirable where the risk appetite for data sharing is very low.

In many public sector contexts, there are concerns that combining the data that describes citizens could lead to a "Big Brother" scenario, where simply too much data about an individual is concentrated in a single data asset. If it were to fall into the wrong hands, such a hypothetical data asset could have immeasurable consequences for individuals, and trust in public sector services could erode. On the other hand, a 360-degree view of the citizen could accelerate important decision-making and immensely improve the quality of the policies and services provided to citizens.

Data cleanrooms address this particular need. With data cleanrooms, you can share data with third parties in a privacy-safe environment. With Unity Catalog, you can enable fine-grained access controls on the data and meet your privacy requirements. In this architecture, participants never get access to the raw data. The only outputs from the cleanroom are data assets generated in a pre-agreed, governed and fully controlled manner that ensures compliance with the requirements of all parties involved.
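The core contract of a cleanroom, namely that raw data stays inside and only pre-agreed outputs leave, can be sketched in a few lines. The toy `CleanRoom` class below is a hypothetical illustration of the pattern, not the Databricks Clean Rooms or Unity Catalog API: each party contributes rows, an analysis only runs once every contributing party has approved it, and small groups are suppressed from the output using an assumed `MIN_GROUP_SIZE` threshold. The party names, columns and threshold are all invented.

```python
from collections import Counter
from typing import Callable, Dict, List, Set

Row = Dict[str, object]
Analysis = Callable[[Dict[str, List[Row]]], Dict[str, int]]
MIN_GROUP_SIZE = 3  # hypothetical disclosure threshold agreed by all parties


class CleanRoom:
    """Toy clean room: raw rows go in, only pre-agreed aggregates come out."""

    def __init__(self) -> None:
        self._data: Dict[str, List[Row]] = {}  # never returned to callers
        self._analyses: Dict[str, Analysis] = {}
        self._approvals: Dict[str, Set[str]] = {}

    def contribute(self, party: str, rows: List[Row]) -> None:
        self._data[party] = list(rows)

    def propose(self, name: str, analysis: Analysis) -> None:
        self._analyses[name] = analysis
        self._approvals[name] = set()  # a new proposal starts unapproved

    def approve(self, party: str, name: str) -> None:
        self._approvals[name].add(party)

    def run(self, name: str) -> Dict[str, int]:
        # Only analyses approved by every contributing party may run.
        if name not in self._analyses or self._approvals[name] != set(self._data):
            raise PermissionError(f"analysis {name!r} has not been pre-agreed")
        counts = self._analyses[name](self._data)
        # Suppress small groups so that individuals cannot be singled out.
        return {key: n for key, n in counts.items() if n >= MIN_GROUP_SIZE}


def overlap_by_region(data: Dict[str, List[Row]]) -> Dict[str, int]:
    """Count citizens known to both parties, per region."""
    health_ids = {row["citizen_id"] for row in data["health"]}
    return dict(Counter(row["region"] for row in data["benefits"]
                        if row["citizen_id"] in health_ids))


room = CleanRoom()
room.contribute("benefits", [{"citizen_id": i, "region": "North" if i <= 4 else "South"}
                             for i in range(1, 7)])
room.contribute("health", [{"citizen_id": i} for i in (1, 2, 3, 5)])
room.propose("overlap_by_region", overlap_by_region)
room.approve("benefits", "overlap_by_region")
room.approve("health", "overlap_by_region")
print(room.run("overlap_by_region"))  # {'North': 3}
```

The "South" group (a single matching citizen) never leaves the room, and any analysis that has not been approved by both parties is refused outright.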

Finally, data cleanrooms and Delta Sharing can address hybrid on-premises and cloud deployments, where the data with the most restricted access remains on premises while less restricted data is free to leverage the power of cloud offerings. In such a scenario, there may be a need to combine the power of the cloud with the restricted data to solve advanced use cases whose required capabilities are unavailable on the on-premises data platforms. Data cleanrooms can ensure that no physical copies of the raw restricted data are created, that results are produced within the cleanroom's controlled environment, and that results are either shared back to the on-premises environment (if the defined policies require them to remain restricted) or forwarded to any other compliant and predetermined destination system.
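Deciding where a cleanroom result may go is essentially a policy check. The sketch below assumes a hypothetical three-level sensitivity scale and a fixed list of predetermined destinations, each with the most sensitive level it is cleared to hold; a result can only be routed to a destination cleared at or above its own level. Real deployments would derive these rules from their own security classification policy rather than a hard-coded dictionary.

```python
from enum import IntEnum
from typing import Dict, List


class Level(IntEnum):
    """Hypothetical sensitivity levels, ordered from least to most restricted."""
    PUBLIC = 0
    RESTRICTED = 1
    HIGHLY_RESTRICTED = 2


# Hypothetical, predetermined destinations and the most sensitive level each may hold.
DESTINATIONS: Dict[str, Level] = {
    "on_prem_platform": Level.HIGHLY_RESTRICTED,
    "cloud_analytics": Level.RESTRICTED,
    "partner_portal": Level.PUBLIC,
}


def allowed_destinations(result_level: Level) -> List[str]:
    """List the predetermined destinations cleared to receive a result."""
    return sorted(name for name, cleared in DESTINATIONS.items() if cleared >= result_level)


def route(result_level: Level, destination: str) -> str:
    """Return the destination if the policy allows it, otherwise refuse."""
    if destination not in DESTINATIONS:
        raise PermissionError(f"{destination!r} is not a predetermined destination")
    if DESTINATIONS[destination] < result_level:
        raise PermissionError(f"{destination!r} is not cleared for {result_level.name} data")
    return destination


print(allowed_destinations(Level.HIGHLY_RESTRICTED))  # ['on_prem_platform']
print(allowed_destinations(Level.RESTRICTED))  # ['cloud_analytics', 'on_prem_platform']
```

Keeping the destination list closed, so that unknown destinations are refused outright, reflects the requirement that results only flow to compliant and predetermined systems.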

Citizen value of data sharing

Every decision made by the Government affects its citizens. Whether the decision is a change to a policy, granting a benefit or preventing crime, it can significantly influence the quality of our society. Data is a key factor in making the right decisions and justifying the decisions made. Simply put, we can't expect high-quality decisions without high-quality data and a complete view of that data (within the permitted context). Without data sharing, we will remain in a highly fragmented position where our ability to make those decisions is severely limited or even completely compromised. In this blog, we have covered several technological solutions available within the Lakehouse that can derisk and accelerate how the Government leverages the data ecosystem in a sustainable and scalable way.

For more details on the industry use cases that Delta Sharing is addressing, please consult the A New Approach to Data Sharing ebook.