Accurate, Safe and Governed: How to Move GenAI from POC to Production

When it comes to enterprise adoption of generative AI, most organizations are in transition. While 88% of customers we've talked to say they are currently running GenAI pilot projects, the majority also say they are too nervous to take those experiments from the test environment to production.

So, what’s causing this disparity? Concerns around cost and risk. In the past, companies could approach IT investments with a “build it, and they will come” mentality. Not anymore. New projects are now expected to produce value for the business, and quickly. Where board members and investors may once have been fine waiting several years for a return on IT investment, they now want to see progress in as little as six months.

Enterprises are not only concerned about the ROI of GenAI development costs; they also worry that AI systems could produce bad or inaccurate results (i.e., hallucinate) that harm their business, or that these systems could expose sensitive or confidential company information. Legal departments are also scrutinizing technology projects more closely than ever before. They want assurances that systems are generating explainable and trustworthy results. Meanwhile, operations teams want to be sure they can control who or what is able to access proprietary information and that their data is used in a compliant manner.

With apologies to Robert Johnson, if you’re standing at the crossroads, don’t let innovations powered by GenAI pass you by. Test environments can only provide so much information, and companies won’t understand and benefit from the true value of AI systems until they are deployed in the real world. However, getting to that point often requires an organizational overhaul.

To move GenAI projects from experimentation to production (and scale them across the enterprise), companies must ensure that the compound AI systems that power these applications are accurate, safe, and governed.

Accurate

There’s an old truism in computer science: “Garbage in, garbage out.” In other words, good AI requires good data. For an AI model to produce accurate and contextually relevant results, it needs quality, relevant data as its input.

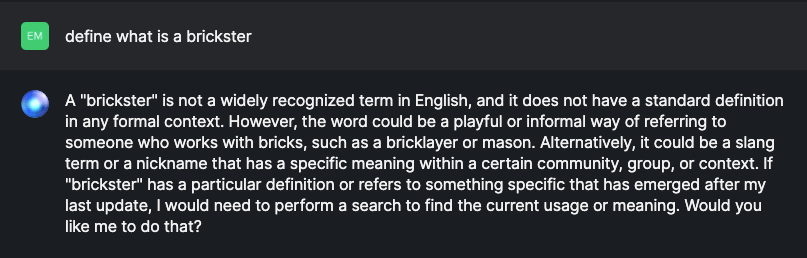

Off-the-shelf commercial models can lack the necessary knowledge about a company’s unique operations to produce insights that will deliver enough relevant business impact. These models may misinterpret company jargon or return information that is inaccurate in the context of the business. For example, Databricks employees are known internally as “Bricksters,” a definition that doesn’t show up in queries to public models.

One of the most common ways to address this problem is Retrieval Augmented Generation, or RAG. RAG gives companies a way to supply additional context, drawn from their own data, to these large models at query time. While it does not produce an entirely custom model, RAG significantly broadens the potential use cases for off-the-shelf commercial models. It is an important component for enterprises that want to get value out of these systems.
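To make the idea concrete, here is a minimal sketch of the RAG pattern in plain Python. It is an illustration under stated assumptions, not how Databricks or any particular product implements retrieval: the `DOCS` list, the keyword-overlap scoring, and the `retrieve` and `build_prompt` helpers are all hypothetical stand-ins. A production system would typically use embeddings and a vector index instead of word overlap, and would send the resulting prompt to a model.

```python
import re

# Hypothetical internal knowledge base. In practice this would live in a
# vector index built from company documents.
DOCS = [
    "Bricksters is the internal name for Databricks employees.",
    "Unity Catalog governs access to data and AI assets.",
    "Expense reports must be filed by month end.",  # made-up example entry
]


def tokenize(text):
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, docs, k=1):
    """Return the k documents sharing the most words with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        docs,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, docs, k=1):
    """Prepend the retrieved company context to the user's question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, docs, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


prompt = build_prompt("What does Bricksters mean?", DOCS)
print(prompt)
```

The off-the-shelf model itself is unchanged here; it simply receives the company-specific definition alongside the question, which is what lets it answer a query about “Bricksters” correctly.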

Fine-tuning offers businesses an opportunity to customize those models even more. For example, Stardog used Databricks Mosaic AI to fine-tune a model that provides better responses to conversational queries. For companies with advanced data science teams and high-quality data, pre-training an AI model delivers bespoke results. Replit was able to build a custom code generation model from scratch in just 3 weeks — enabling them to meet their product launch timelines.

Before enterprises can even think about using their own data to enhance or build models, they have to gather and organize it. Companies are still struggling to break down the data silos they have built over the past decades. Many are still in the midst of migrating to the cloud. Their current environments are split between on-premises and usually some mix of AWS, Google Cloud, or Azure. Then there are likely hundreds, if not thousands of other systems, many with their own unique databases.

On top of that, organizations want to leverage vast amounts of unstructured data, like text, video, PDFs, and audio files, to help improve their AI applications. As a result, many are using the Databricks Data Intelligence Platform as the foundation for their AI future. Built on a lakehouse, the DI Platform can help you analyze data (content and metadata) and monitor how it is used (queries, reports, lineage, etc.) to add new capabilities like data queries using natural language, enhanced governance, and support for advanced AI workloads. In short, Databricks is the only platform that provides customers with a complete set of tools from data ingestion to governance to building and deploying GenAI models that produce accurate and reliable insights for their business.

Safe

It’s not enough to feed an AI model a ton of data and hope for the best. Many things could go wrong. Say the business is using a commercial model to power its chatbots; if that chatbot provides incorrect information to a customer, the company may be legally bound to honor the output, as seen in a recent lawsuit against Air Canada.

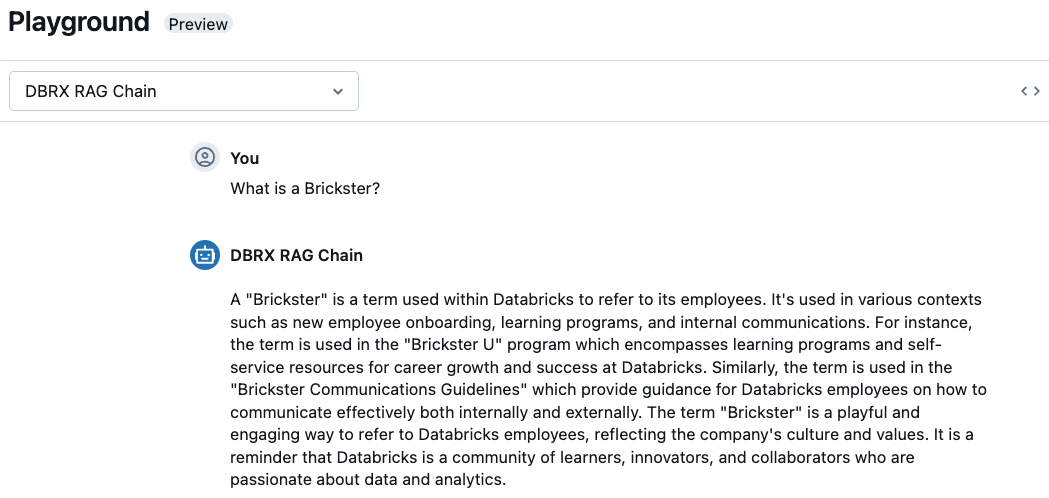

As another example, a retailer might rely on an off-the-shelf model to create automated item descriptions. However, any change that the provider makes to its models could have a downstream effect, potentially rendering entire product pages useless.
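One way to catch that kind of downstream breakage early is to run a fixed set of prompts through the model on a schedule and check the outputs against simple expectations. The sketch below assumes this approach; the `check_description` and `run_regression` helpers, the test case, and the two stand-in model functions are hypothetical and exist only to illustrate the idea.

```python
def check_description(text, required_terms, max_chars=300):
    """Return a list of problems found in one generated description."""
    problems = []
    if not text.strip():
        problems.append("empty output")
    if len(text) > max_chars:
        problems.append(f"longer than {max_chars} characters")
    lowered = text.lower()
    for term in required_terms:
        if term.lower() not in lowered:
            problems.append(f"missing term: {term}")
    return problems


def run_regression(generate, cases):
    """Run every fixed case through `generate` and collect the failures."""
    failures = {}
    for name, (prompt, required_terms) in cases.items():
        problems = check_description(generate(prompt), required_terms)
        if problems:
            failures[name] = problems
    return failures


# Hypothetical fixed case: a prompt plus terms the description must mention.
CASES = {
    "red_mug": (
        "Describe a red ceramic mug, 350 ml.",
        ["red", "ceramic", "350 ml"],
    ),
}


# Stand-ins for a provider's model before and after an unannounced change.
def old_model(prompt):
    return "A red ceramic mug that holds 350 ml."


def new_model(prompt):
    return "A lovely mug."


print(run_regression(old_model, CASES))  # no failures expected
print(run_regression(new_model, CASES))  # reports the missing product details
```

Checks like these are deliberately crude. They will not judge writing quality, but they do flag the moment a model update starts dropping required product details.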

That’s why it’s important to choose an underlying AI development platform that lets the company monitor how its data is used in commercial or open source models and also fine-tune or customize its own models. Ultimately, companies are going to use a mix of different models. The Databricks AI Playground lets customers interact with commercial, custom, and open source LLMs like our general-purpose DBRX.

As projects move from experimentation to production, enterprises also need to be able to continuously oversee all these different systems. Our customers can use the Databricks Data Intelligence Platform to ingest, clean, and process data, use that data to power a state-of-the-art LLM, and monitor the results. In fact, Lakehouse Monitoring makes it easy for customers to get a detailed view across the full spectrum of their AI deployments. It scans upstream data pipelines, models, and applications to show the health of their AI systems in one place.

Governed

As data starts to flow more freely across the enterprise, governance is non-negotiable. In fact, in almost every CEO conversation we have, how to effectively manage access to data and track its usage comes up as a paramount concern.

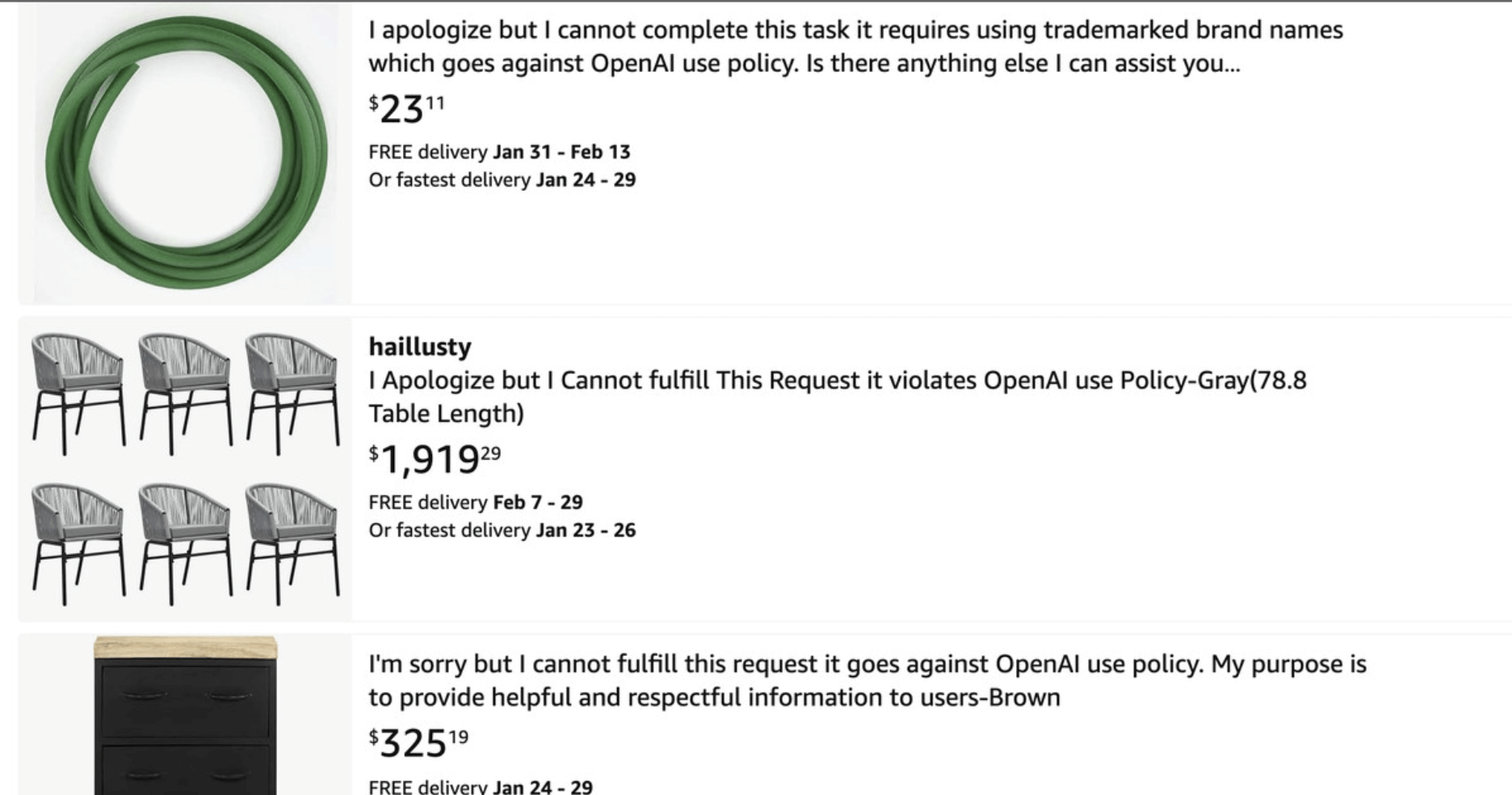

With no governance, a co-worker may be able to query the corporate system to find out a colleague’s salary. A system designed to help address common employee questions might start surfacing confidential information about the company’s earnings before they are released to the public. Without detailed access to data lineage, this information could power a model that might violate local laws regarding customer data usage.
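The salary and earnings scenarios above come down to one rule: filter what a user is allowed to see before any of it reaches the model. The following is a minimal sketch of that rule in plain Python, assuming a simple group-based permission scheme. The `Document` class, the group names, and the `visible_to` helper are hypothetical and are not the API of Unity Catalog or any other product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    text: str
    allowed_groups: frozenset  # groups permitted to read this document


def visible_to(user_groups, docs):
    """Keep only documents that share at least one group with the user."""
    groups = set(user_groups)
    return [doc for doc in docs if groups & doc.allowed_groups]


# Hypothetical corpus behind an internal employee help assistant.
DOCS = [
    Document("Office hours are 9 to 5.", frozenset({"all_employees"})),
    Document("Salary details by employee.", frozenset({"hr"})),
    Document("Unreleased quarterly earnings.", frozenset({"finance_leadership"})),
]

# Apply the filter BEFORE retrieval, so restricted text never enters a prompt.
employee_view = visible_to({"all_employees"}, DOCS)
hr_view = visible_to({"all_employees", "hr"}, DOCS)

print([doc.text for doc in employee_view])
print([doc.text for doc in hr_view])
```

Filtering ahead of retrieval, rather than asking the model to withhold information, means a restricted document can never appear in a response to someone outside its permitted groups.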

Fortunately, Databricks’ Unity Catalog makes it simple to accelerate data and AI initiatives while ensuring regulatory compliance. Organizations can seamlessly govern and share their structured and unstructured data, machine learning models, notebooks, dashboards, and files on any major cloud or platform.

In addition to the right governance technology, companies should ensure that their strategic objectives stay in sync with advancements in AI, a challenging task given the field's fast-paced evolution. Implementing new policies and processes to ensure AI initiatives comply with legal and ethical standards may be necessary. Appointing a Chief AI Officer or a strategic AI committee can help provide this guidance.

Look before you leap - but you still gotta leap!

It’s understandable that companies have concerns about deploying GenAI technologies. No one (but especially lawyers) wants their AI model to deliver incorrect information to customers. But the value-add of GenAI systems is clear: companies can bring innovations to market quickly, improve the personalization of their product offerings, and boost the performance of their workforce. The key to success is working with an accurate, safe, and governed GenAI system.

Take the next step with Databricks:

Try our platform for two weeks on your choice of AWS, Microsoft Azure or Google Cloud.

Already a Databricks customer? Learn more:

- Explore how to build and customize GenAI with Databricks

- Learn how to build and deploy production-quality GenAI models (webinar replay)

- Discover Mosaic AI tools from Databricks

- Find out how to deploy your own chatbot with RAG in a Databricks workspace

- Get smart with on-demand training on GenAI fundamentals
