Announcing Automatic Publishing to Power BI

Easily keep reports and dashboards in sync with changes to your data

Summary

- Databricks Workflows now supports a new task type for Power BI

- Support for refreshing and updating semantic models in Import, DirectQuery, and Dual storage modes

- The Power BI task natively integrates with Unity Catalog

We’re excited to announce the Public Preview of the Microsoft Power BI task type in Databricks Workflows, available on Azure, AWS, and GCP.

With this new task type, users can now update and refresh Power BI semantic models directly from Databricks. This lowers total cost of ownership, improves efficiency, and ensures that data is up to date for consumers of Power BI reports and dashboards.

Key benefits include:

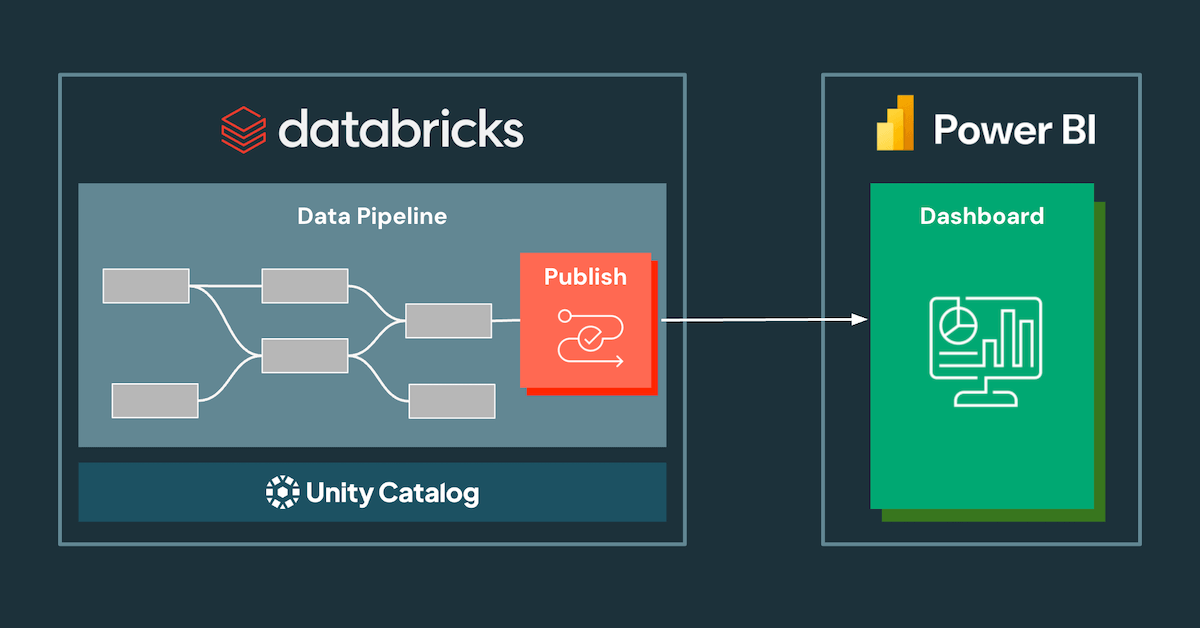

- Direct integration: Publish datasets from Unity Catalog to Power BI directly from data pipelines.

- Update Power BI when your data updates: Significantly reduce refresh costs by updating semantic models only when data changes.

- Data freshness for BI: Deliver fresh insights by automatically pushing changes to underlying tables and their relationships.

Automate data integration from Unity Catalog with Power BI

With the Power BI task, you can now automate Power BI semantic model updates and refreshes directly from Databricks Workflows. This eliminates the need to switch contexts between Databricks and Power BI, streamlining the process of making your data available for visualization and analysis in Power BI.

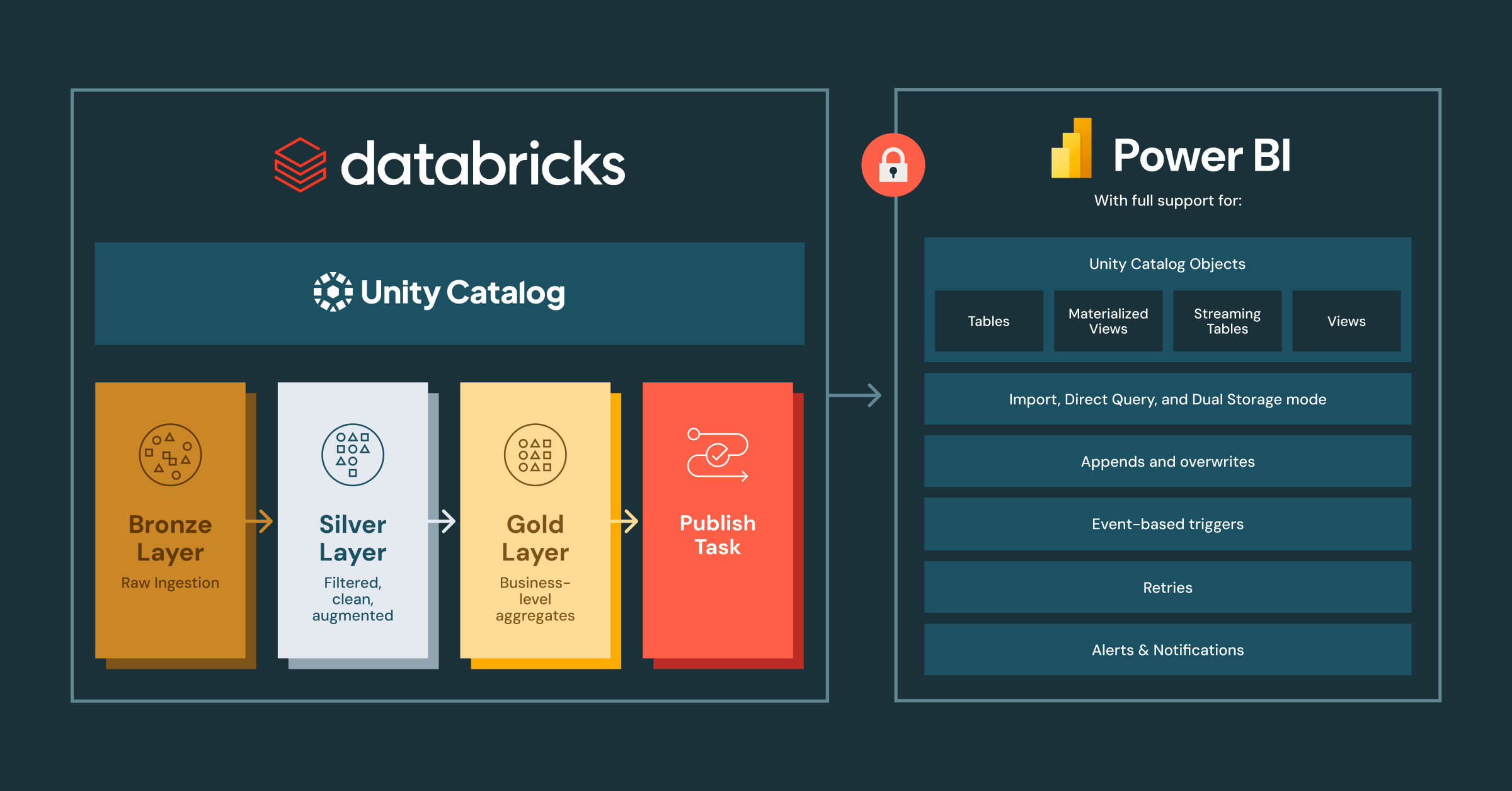

Power BI tasks fully support Unity Catalog data objects, including tables, views, materialized views, and streaming tables. Best of all, you can build Power BI semantic models from Unity Catalog data objects that span multiple schemas and catalogs.
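Because one semantic model can draw on objects from several catalogs and schemas, it helps to keep the object list as fully qualified three-level names. The Python sketch below splits such names into their parts and groups them by catalog. The `catalog`/`schema`/`name` keys are an assumption about how a task's table entries are shaped, the object names are hypothetical, and the parser deliberately ignores backtick-quoted identifiers that contain dots.

```python
from collections import defaultdict


def parse_uc_name(full_name: str) -> dict:
    """Split a three-level Unity Catalog name (catalog.schema.object) into parts.

    Simplification: backtick-quoted identifiers containing dots are not handled.
    """
    parts = full_name.split(".")
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected catalog.schema.object, got {full_name!r}")
    catalog, schema, name = parts
    # Assumed key names for a Power BI task table entry.
    return {"catalog": catalog, "schema": schema, "name": name}


def group_by_catalog(full_names: list) -> dict:
    """Group schema-qualified object names under their catalog."""
    grouped = defaultdict(list)
    for full_name in full_names:
        entry = parse_uc_name(full_name)
        grouped[entry["catalog"]].append(f"{entry['schema']}.{entry['name']}")
    return dict(grouped)


# Hypothetical objects spanning two catalogs and two schemas.
objects = [
    "retail.sales.orders",         # table
    "retail.sales.daily_revenue",  # materialized view
    "shared.reference.stores",     # table in a second catalog
]
print([parse_uc_name(o) for o in objects])
print(group_by_catalog(objects))
# {'retail': ['sales.orders', 'sales.daily_revenue'], 'shared': ['reference.stores']}
```

Validating names up front surfaces typos before a job run rather than during it.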

Native integration among Unity Catalog, Power BI, and Microsoft Entra ID means best-in-class security, governance, and observability. Power BI semantic models can be configured to use OAuth with Single Sign-On (SSO), so that Unity Catalog permissions are honored for each dashboard query and the full suite of Unity Catalog governance and observability capabilities still applies. This integration enhances security and compliance by providing seamless authentication, authorization, and data access control across your Databricks and Power BI environments.

All of the power of Databricks Workflows and Power BI

Power BI tasks are built into Databricks Workflows so you can leverage its advanced orchestration and monitoring capabilities. This means you can extend powerful features such as task dependencies, schedules/triggers, retries, and notifications to data pipelines that utilize Power BI tasks.
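Workflows resolves task ordering itself; the Python sketch below only illustrates the guarantee a dependency gives you, namely that a Power BI task does not start until its upstream tasks have finished. It uses the standard library's `graphlib.TopologicalSorter` as a stand-in for the scheduler. The task keys are hypothetical, while `depends_on`, `max_retries`, and `min_retry_interval_millis` follow the Jobs API task settings.

```python
from graphlib import TopologicalSorter

# Hypothetical retail pipeline: ingest, transform, then refresh Power BI.
tasks = [
    {"task_key": "ingest_sources"},
    {"task_key": "build_bi_tables", "depends_on": [{"task_key": "ingest_sources"}]},
    {
        "task_key": "refresh_power_bi",
        "depends_on": [{"task_key": "build_bi_tables"}],
        "max_retries": 2,                     # retry a failed refresh up to twice
        "min_retry_interval_millis": 60_000,  # wait at least a minute between attempts
    },
]


def run_order(task_specs: list) -> list:
    """Return an order in which each task comes after all of its dependencies."""
    graph = {
        spec["task_key"]: {dep["task_key"] for dep in spec.get("depends_on", [])}
        for spec in task_specs
    }
    # static_order() raises graphlib.CycleError (a ValueError) on circular dependencies.
    return list(TopologicalSorter(graph).static_order())


print(run_order(tasks))  # ['ingest_sources', 'build_bi_tables', 'refresh_power_bi']
```

Because the refresh sits at the end of the chain, it only ever sees tables that the transformation step has finished writing.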

Power BI tasks support publishing, updating, and refreshing semantic models in Import, DirectQuery, and Dual storage modes, giving you full flexibility to balance performance and security.

Extensibility is front and center with Power BI tasks. You can work with Power BI tasks visually in the Databricks Jobs UI as well as programmatically via the Jobs API and Databricks Asset Bundles.
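For the programmatic route, a job definition is JSON sent to the Jobs API. The Python sketch below prepares, but does not send, a `POST /api/2.2/jobs/create` request using the standard library's `urllib`. The workspace URL, token, e-mail address, and job name are placeholders, and the `power_bi_task` body is deliberately abbreviated; the Power BI task documentation lists its full set of fields. The `schedule` block uses the Jobs API's Quartz cron syntax.

```python
import json
import urllib.request


def build_create_job_request(workspace_url: str, token: str, job_spec: dict) -> urllib.request.Request:
    """Prepare (but do not send) a Jobs API request that creates a job."""
    return urllib.request.Request(
        workspace_url.rstrip("/") + "/api/2.2/jobs/create",
        data=json.dumps(job_spec).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",  # personal access token or OAuth token
            "Content-Type": "application/json",
        },
    )


job_spec = {
    "name": "retail-analytics",  # hypothetical job name
    "schedule": {
        "quartz_cron_expression": "0 0 6 * * ?",  # every day at 06:00
        "timezone_id": "UTC",
    },
    "email_notifications": {"on_failure": ["data-team@example.com"]},
    "tasks": [
        {
            "task_key": "refresh_power_bi",
            # Abbreviated: a real Power BI task also needs connection, model,
            # and table settings; see the Power BI task documentation.
            "power_bi_task": {"warehouse_id": "<warehouse-id>"},
        }
    ],
}

request = build_create_job_request("https://my-workspace.example.com", "<token>", job_spec)
print(request.get_method(), request.full_url)

# Uncomment to create the job; the response body contains the new job_id.
# with urllib.request.urlopen(request) as response:
#     print(json.load(response)["job_id"])
```

Keeping request construction separate from sending makes the job definition easy to review or version-control before anything is created.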

How it works

Scenario: You have an existing retail analytics data pipeline that ingests data from source databases using a pipeline task and applies transformations and aggregations using a notebook task, resulting in a collection of BI-ready tables. You’ve received a request to ensure a Power BI semantic model is in sync with this data as it changes over time.

Creating a Power BI task is simple. All you need to do is:

- Navigate to your existing job

- Add a Power BI task to the existing job and choose a SQL warehouse

- Select a Power BI connection, workspace, and semantic model

- Select Import or DirectQuery mode

- Select Unity Catalog data objects

- Set an authentication method

- Save the task
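The same steps can also be expressed as a task definition for the Jobs API or an Asset Bundle. The Python sketch below builds one task entry, with a comment tying each field to a step in the list. It assumes the `power_bi_task` field names and upper-case enum values shown (`connection_resource_name`, `power_bi_model`, `tables`, `IMPORT`/`DIRECT_QUERY`/`DUAL`, `OAUTH`/`PAT`); treat these as assumptions and check them against the Power BI task documentation. The helper `power_bi_task_entry` and every name passed to it are hypothetical.

```python
from __future__ import annotations

import json

# Assumed enum values; verify against the Power BI task documentation.
STORAGE_MODES = {"IMPORT", "DIRECT_QUERY", "DUAL"}
AUTH_METHODS = {"OAUTH", "PAT"}


def power_bi_task_entry(
    task_key: str,
    warehouse_id: str,
    connection: str,
    workspace: str,
    model: str,
    storage_mode: str,
    tables: list[tuple[str, str, str]],
    auth: str = "OAUTH",
    depends_on: list[str] | None = None,
) -> dict:
    """Build one entry for a job's "tasks" list (hypothetical helper)."""
    if storage_mode not in STORAGE_MODES:
        raise ValueError(f"unsupported storage mode: {storage_mode}")
    if auth not in AUTH_METHODS:
        raise ValueError(f"unsupported authentication method: {auth}")
    return {
        "task_key": task_key,
        # Run after the upstream pipeline and notebook tasks.
        "depends_on": [{"task_key": key} for key in depends_on or []],
        "power_bi_task": {
            "warehouse_id": warehouse_id,            # choose a SQL warehouse
            "connection_resource_name": connection,  # select a Power BI connection,
            "power_bi_model": {
                "workspace_name": workspace,         # ...a workspace,
                "model_name": model,                 # ...and a semantic model
                "storage_mode": storage_mode,        # select Import or DirectQuery
                "authentication_method": auth,       # set an authentication method
            },
            # Select Unity Catalog data objects. Simplification: every table
            # reuses the model's storage mode.
            "tables": [
                {"catalog": c, "schema": s, "name": n, "storage_mode": storage_mode}
                for c, s, n in tables
            ],
        },
    }


task = power_bi_task_entry(
    task_key="refresh_power_bi",
    warehouse_id="<warehouse-id>",
    connection="<power-bi-connection>",
    workspace="Retail Analytics",
    model="Retail Sales",
    storage_mode="DIRECT_QUERY",
    tables=[("retail", "sales", "orders"), ("retail", "sales", "daily_revenue")],
    depends_on=["build_bi_tables"],
)
# "Save the task": add this entry to the job's "tasks" list.
print(json.dumps(task, indent=2))
```

Validating the storage mode and authentication method locally catches a misspelled value before the job is saved.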

Now the next time your existing data pipeline runs, your Power BI semantic model will automatically update as your data changes.

Within seconds of your job successfully completing, your dataset will be updated in Power BI, ready for report creation and analysis.

Getting Started with Power BI tasks

With Power BI tasks now in Public Preview, you can empower data engineers to supercharge their data pipelines and seamlessly integrate their business-friendly datasets with Power BI.

We are excited to see how you will use Power BI tasks and encourage you to give them a try today. To get started, please visit the Power BI task documentation.

Looking to deepen your Power BI + Azure Databricks integration?

Check out Part 1 and Part 2 of our connectivity series:

- Part 1: Power BI Service Connections to Azure Databricks with Private Networking

- Part 2: Performance Configurations for Connecting Power BI to a Private Link Azure Databricks Workspace

Together, these blogs provide essential best practices to optimize security and performance when connecting Power BI to Azure Databricks.

The Databricks team is always looking to improve the Power BI integration experience, and would love to hear your feedback!


November 21, 2024 · 3 min read