Announcing egress control for serverless and model serving workloads

Reduce the risk of unauthorized or accidental data transfers

Published: January 7, 2025

by Samrat Ray, Kelly Albano, Andrew Weaver and Bruce Nelson

Summary

- Serverless egress control is available in Public Preview on AWS and Azure for Databricks serverless and Mosaic AI Model Serving workloads.

- This feature enables you to centrally control outbound access from serverless workloads across multiple products and workspaces to minimize the risk of unauthorized or accidental data transfers outside Databricks.

- With support for enforced and dry-run modes, serverless egress control lets you test and roll out policies safely, protecting existing production workloads from disruption.

We are excited to announce that egress control for Databricks serverless and Mosaic AI Model Serving workloads is available in Public Preview on AWS and Azure! You can now configure policies to centrally control outbound access from serverless workloads across multiple products and workspaces.

Serverless egress control lets you benefit from the agility and cost efficiency of Databricks serverless offerings while protecting against data exfiltration to unauthorized destinations. With this release, serverless egress control supports Model Serving, Notebooks, Workflows, Delta Live Tables (DLT) pipelines, Lakehouse Monitoring, Databricks SQL and Databricks Apps.

Benefits of Databricks serverless egress control

Enhance data security

Serverless egress control helps reduce the chances of unauthorized data transfers from your secure Databricks environment. By setting egress policies, you can lower the risk of data being stolen or improperly shared. This helps ensure that your data is sent only to approved external locations, whether on the internet or within your cloud environment.

Minimize unintended data transfer costs

Unmonitored data transfers to the internet can quickly lead to unexpectedly large egress charges. By ensuring that data is sent only to authorized destinations, you can better predict and manage your network costs.

Ensure regulatory compliance

For industries with stringent data governance and compliance requirements, such as finance, healthcare, or government, ensuring that data is only processed in compliant environments is non-negotiable. Serverless egress control can ensure that data is only processed in an environment that is isolated from the internet and unauthorized network endpoints, helping you meet your compliance objectives.

"At Abacus Insights, our mission to streamline data management and analytics for healthcare demands strict compliance with HIPAA and HITRUST. With serverless egress control and the use of Llama 3 models on Mosaic AI Model Serving, we can ensure that the data stays in our environment. This approach enables us to benefit from the performance and agility of serverless compute for our AI use cases while meeting our security and compliance obligations." – Navdeep Alam, Chief Technology Officer, Abacus Insights

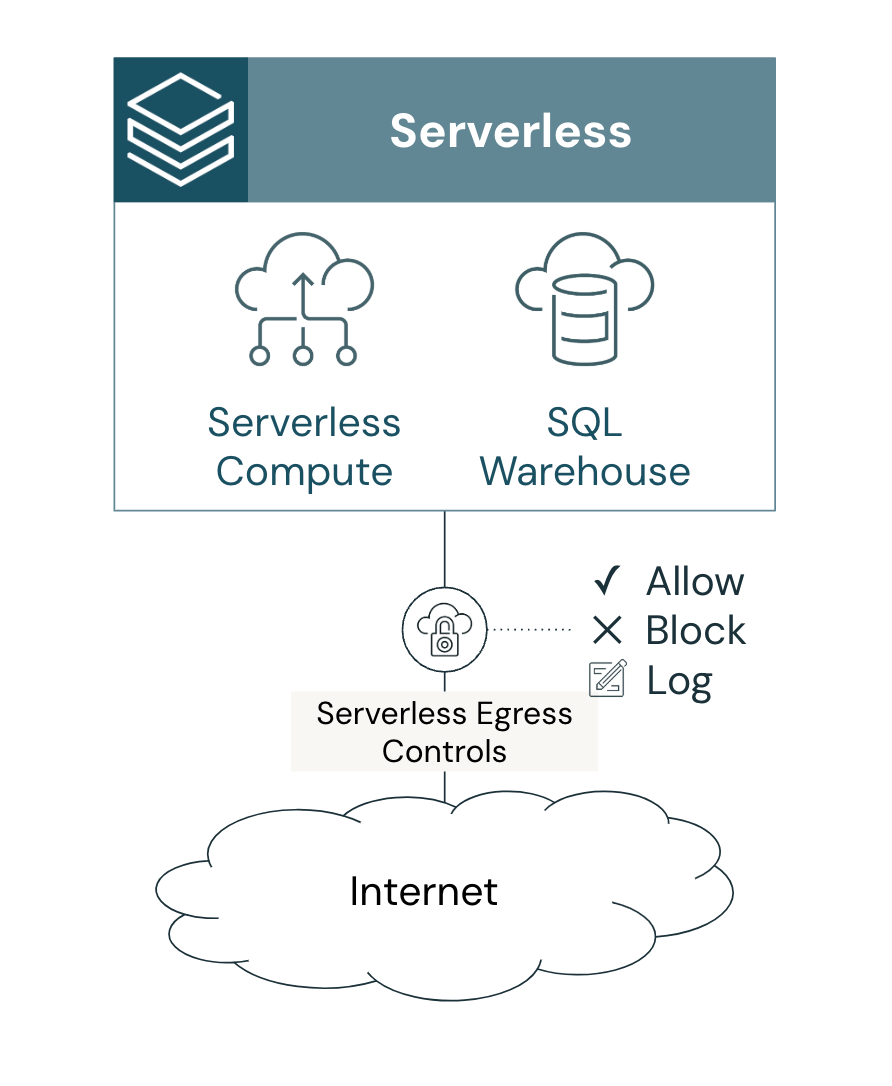

How does serverless egress control work?

Easily configure granular egress policies

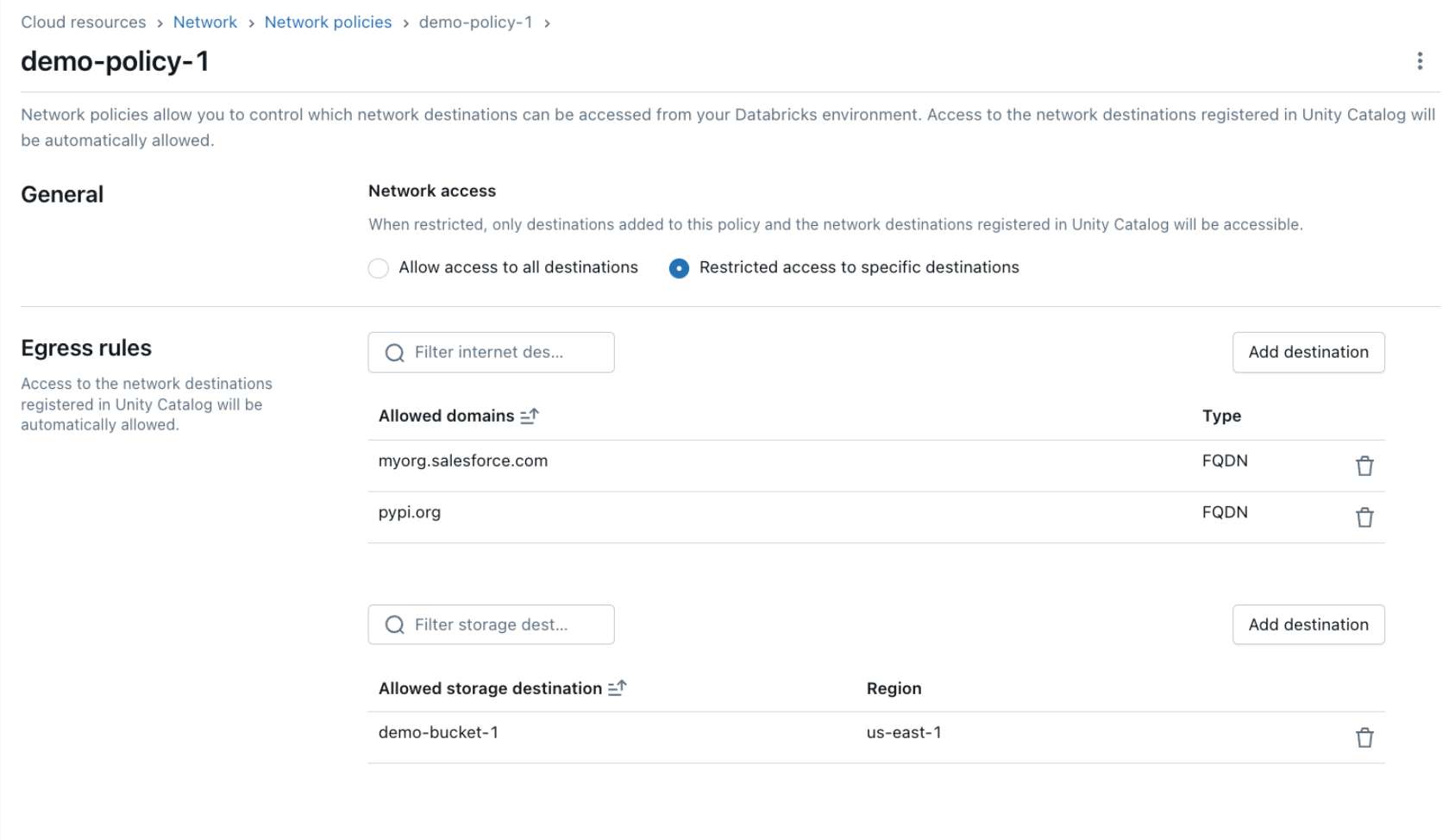

You can configure serverless egress control by creating or updating network policy objects in the account console. Within a network policy, you define the macro egress posture: whether the workloads have full or restricted internet access. For restricted access, you list the fully qualified domain names (FQDNs) and cloud storage resources that the workloads are allowed to reach.

A policy applies consistently to all supported serverless products. To further simplify the configuration of granular rules, serverless egress control automatically allows access to locations and connections defined in Unity Catalog.
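To make the evaluation logic concrete, here is a minimal Python sketch of how a restricted policy might decide whether a destination is reachable. It models a policy with a posture, an FQDN allowlist and a storage allowlist. It also models the automatic allowance for Unity Catalog locations and connections. It is an illustration only. `Posture`, `NetworkPolicy`, `is_allowed`, the case-insensitive matching, and every destination name are hypothetical. None of them is the Databricks API or the actual network policy schema.

```python
from dataclasses import dataclass, field
from enum import Enum


class Posture(Enum):
    """Macro egress posture of a policy (hypothetical names)."""
    FULL = "full"              # unrestricted internet access
    RESTRICTED = "restricted"  # only allowlisted destinations


@dataclass
class NetworkPolicy:
    """Hypothetical in-memory model of a network policy, not the Databricks schema."""
    name: str
    posture: Posture
    allowed_fqdns: set[str] = field(default_factory=set)
    allowed_storage: set[str] = field(default_factory=set)


def normalize(destination: str) -> str:
    # Compare case-insensitively and ignore a trailing DNS root dot (an assumption).
    return destination.strip().lower().rstrip(".")


def is_allowed(policy: NetworkPolicy, destination: str, uc_destinations: set[str]) -> bool:
    """Return True if an outbound connection to `destination` is permitted."""
    if policy.posture is Posture.FULL:
        return True
    dest = normalize(destination)
    # Unity Catalog locations and connections are allowed without explicit rules.
    if dest in {normalize(d) for d in uc_destinations}:
        return True
    allowlist = {normalize(d) for d in policy.allowed_fqdns | policy.allowed_storage}
    return dest in allowlist


policy = NetworkPolicy(
    name="prod-restricted",
    posture=Posture.RESTRICTED,
    allowed_fqdns={"pypi.org", "files.pythonhosted.org"},
)
uc_destinations = {"s3://sales-landing-bucket"}  # hypothetical UC external location

print(is_allowed(policy, "PyPI.org", uc_destinations))
print(is_allowed(policy, "unknown-host.example", uc_destinations))
print(is_allowed(policy, "s3://sales-landing-bucket", uc_destinations))
```

In this sketch, the Unity Catalog check sits ahead of the allowlist lookup. A destination already governed in Unity Catalog therefore never needs a duplicate entry in the policy.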

Centrally manage your egress posture at scale

Each Databricks account has a default-policy object that defines the default network policy associated with all workspaces in that account. You can define the default egress rules for existing and new workspaces by updating the default-policy object. Or, you can override the default policy entirely by creating an additional network policy object and associating it with one or more workspaces (AWS, Azure).

Thus, you can centrally manage the posture across all your workspaces by creating different policies for environments such as production, development, and evaluation. You can then associate each policy with all workspaces within that environment.
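The lookup rule can be sketched in a few lines of Python. A workspace uses the policy explicitly associated with it, and otherwise falls back to `default-policy`. The environment-based policy names and workspace IDs below are hypothetical. The sketch also assumes that each workspace is associated with at most one policy.

```python
DEFAULT_POLICY = "default-policy"


def effective_policy(workspace_id: str, assignments: dict[str, str]) -> str:
    """Return the name of the policy governing a workspace.

    `assignments` maps workspace IDs to explicitly associated policies; any
    workspace without an association falls back to default-policy.
    """
    return assignments.get(workspace_id, DEFAULT_POLICY)


# Hypothetical policies, one per environment, each listing its workspaces.
environments: dict[str, list[str]] = {
    "production-policy": ["ws-prod-eu", "ws-prod-us"],
    "development-policy": ["ws-dev"],
    "evaluation-policy": ["ws-eval"],
}

# Flatten to workspace -> policy (assumes no workspace is listed twice).
assignments = {ws: p for p, workspaces in environments.items() for ws in workspaces}

for ws in ["ws-prod-us", "ws-dev", "ws-new"]:
    print(f"{ws} -> {effective_policy(ws, assignments)}")
```

A newly created workspace such as `ws-new` picks up `default-policy` automatically. This is why the text recommends updating the default policy for account-wide rules.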

Audit and debug all policy violations

Serverless egress control policies are enforced at the time a connection is established. All denials are logged in the outbound_network system table within the system.access schema. Below is an example query for listing denial events in the last hour:

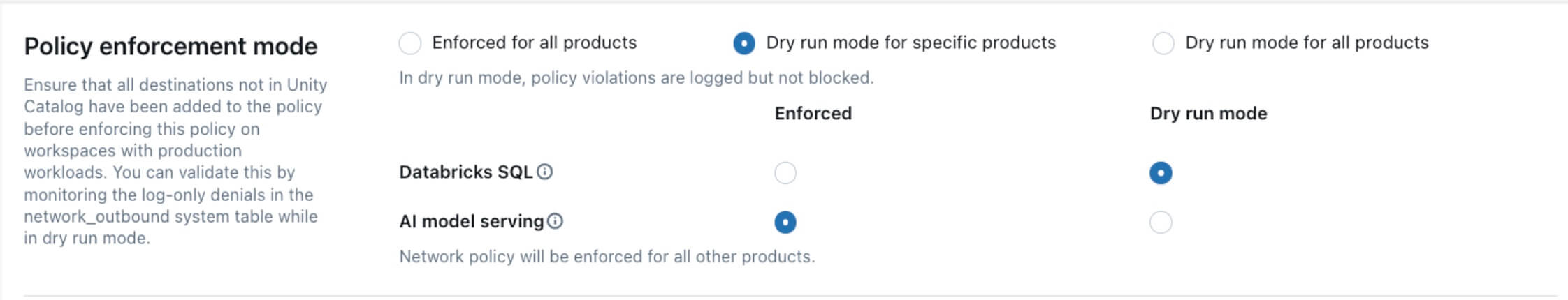
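The query above runs against the outbound_network system table. As an offline analogue, the Python sketch below applies the same kind of filter to an in-memory list of records. It keeps denials from the last hour, drops dry-run entries and sorts the newest first. The `OutboundEvent` record and its fields (`event_time`, `workspace_id`, `destination`, `dry_run`) are hypothetical stand-ins, not the table's actual column names. Check the system table reference for the real schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class OutboundEvent:
    """Hypothetical log record; not the actual outbound_network schema."""
    event_time: datetime
    workspace_id: str
    destination: str
    dry_run: bool  # True: violation logged but connection allowed


def denials_since(events: list[OutboundEvent], now: datetime,
                  window: timedelta = timedelta(hours=1)) -> list[OutboundEvent]:
    """Return enforced denials within `window` before `now`, newest first."""
    cutoff = now - window
    hits = [e for e in events if e.event_time >= cutoff and not e.dry_run]
    return sorted(hits, key=lambda e: e.event_time, reverse=True)


now = datetime(2025, 1, 7, 12, 0, tzinfo=timezone.utc)
events = [
    OutboundEvent(now - timedelta(minutes=50), "ws-prod-us", "unknown-host.example", False),
    OutboundEvent(now - timedelta(minutes=5), "ws-prod-us", "api.partner.example", False),
    OutboundEvent(now - timedelta(minutes=20), "ws-dev", "pastebin.example", True),
    OutboundEvent(now - timedelta(hours=3), "ws-dev", "old-host.example", False),
]

for e in denials_since(events, now):
    print(e.event_time.isoformat(), e.workspace_id, e.destination)
```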

Safely apply egress control policies to existing production workloads

Each serverless egress control policy has an enforcement mode, which can be set to either “enforced” or “dry-run”.

In enforced mode, outbound connections that violate the policy are denied, and each denial is logged in the outbound_network system table. In dry-run mode, outbound connections that violate the policy are allowed, but each violation is logged in the outbound_network system table as a dry-run entry.
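The difference between the two modes fits in one small function. The Python sketch below models the outcome of a single connection attempt: whether it proceeds and what kind of entry is recorded. `Mode`, `Outcome` and `handle_connection` are hypothetical names. The sketch records nothing for compliant connections, because the text only describes logging for violations.

```python
from enum import Enum
from typing import NamedTuple, Optional


class Mode(Enum):
    ENFORCED = "enforced"
    DRY_RUN = "dry-run"


class Outcome(NamedTuple):
    allowed: bool              # does the connection proceed?
    log_entry: Optional[str]   # kind of outbound_network entry, if any


def handle_connection(violates_policy: bool, mode: Mode) -> Outcome:
    """Model the outcome of one outbound connection attempt."""
    if not violates_policy:
        # The text only describes logging for violations, so none is modeled here.
        return Outcome(allowed=True, log_entry=None)
    if mode is Mode.ENFORCED:
        # Enforced: block the connection and record the denial.
        return Outcome(allowed=False, log_entry="denial")
    # Dry-run: the connection goes through, but the violation is recorded.
    return Outcome(allowed=True, log_entry="dry-run")


for mode in Mode:
    print(mode.value, handle_connection(violates_policy=True, mode=mode))
```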

You can set the policy to the dry-run mode (previously known as “log-only”) for all products or specifically for the Databricks SQL or Model Serving products. If you have any Databricks SQL or Model Serving workloads in production, we recommend setting the policy to the dry-run mode first to reduce the risk of breaking an existing production environment.
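Dry-run can be scoped either to all products or to Databricks SQL and Model Serving only. The Python sketch below resolves which mode applies to a given product under that rule. The product identifiers, the `SCOPABLE` set and `effective_mode` are hypothetical labels chosen for illustration. They are not configuration keys from the product.

```python
from enum import Enum


class Mode(Enum):
    ENFORCED = "enforced"
    DRY_RUN = "dry-run"


# Products the text says can be put in dry-run individually (hypothetical IDs).
SCOPABLE = frozenset({"databricks_sql", "model_serving"})


def effective_mode(product: str, dry_run_all: bool,
                   dry_run_products: frozenset[str] = frozenset()) -> Mode:
    """Resolve the enforcement mode that applies to one product."""
    unsupported = dry_run_products - SCOPABLE
    if unsupported:
        raise ValueError(f"product-scoped dry-run not supported for: {sorted(unsupported)}")
    if dry_run_all or product in dry_run_products:
        return Mode.DRY_RUN
    return Mode.ENFORCED


# Staged rollout: enforce everywhere except the production SQL and serving workloads.
staged = frozenset({"databricks_sql", "model_serving"})
for product in ["notebooks", "databricks_sql", "model_serving"]:
    print(product, effective_mode(product, dry_run_all=False, dry_run_products=staged).value)
```

This mirrors the recommended rollout. Everything else is enforced right away, while existing Databricks SQL and Model Serving production traffic stays in dry-run until the logged violations have been reviewed.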

Getting started

Serverless egress control is available on the Enterprise tier of Databricks on AWS and the Premium tier of Azure Databricks. You must be a Databricks account administrator to configure serverless egress control policies. For detailed instructions on policy configuration, see our documentation for AWS and Azure.

If you don’t have serverless compute enabled in your account, you can follow these instructions for AWS or Azure. Please review our security best practices on the Databricks Security and Trust Center for other platform security features to consider as part of your deployment.

Take advantage of our introductory discounts: get 50% off serverless compute for Jobs and Pipelines and 30% off for Notebooks, until April 30, 2025. This limited-time offer is the perfect opportunity to explore serverless compute at a reduced cost.

Want to see it in action?

Try the Serverless Egress Control product tours
