Lakeflow

Ingest, transform, and orchestrate with a unified data engineering solution

The end-to-end solution for delivering high-quality data.

Tooling that makes it easy for every team to build reliable data pipelines for analytics and AI.

85% faster development

50% cost reduction

99% reduction in pipeline latency

Unified tooling for data engineers

Lakeflow Connect

Efficient data ingestion connectors and native integration with the Data Intelligence Platform unlock easy access to analytics and AI, with unified governance.

Spark Declarative Pipelines

Simplify batch and streaming ETL with automated data quality, change data capture (CDC), data ingestion, transformation, and unified governance.

Lakeflow Jobs

Equip teams to better automate and orchestrate any ETL, analytics, and AI workflow with deep observability, high reliability, and broad task type support.

Unity Catalog

Seamlessly govern all your data assets with the industry’s only unified and open governance solution for data and AI, built into the Databricks Data Intelligence Platform.

Lakehouse Storage

Unify the data in your lakehouse, across all formats and types, for all your analytics and AI workloads.

Data Intelligence Platform

Explore the full range of tools available on the Databricks Data Intelligence Platform to seamlessly integrate data and AI across your organization.

Build reliable data pipelines

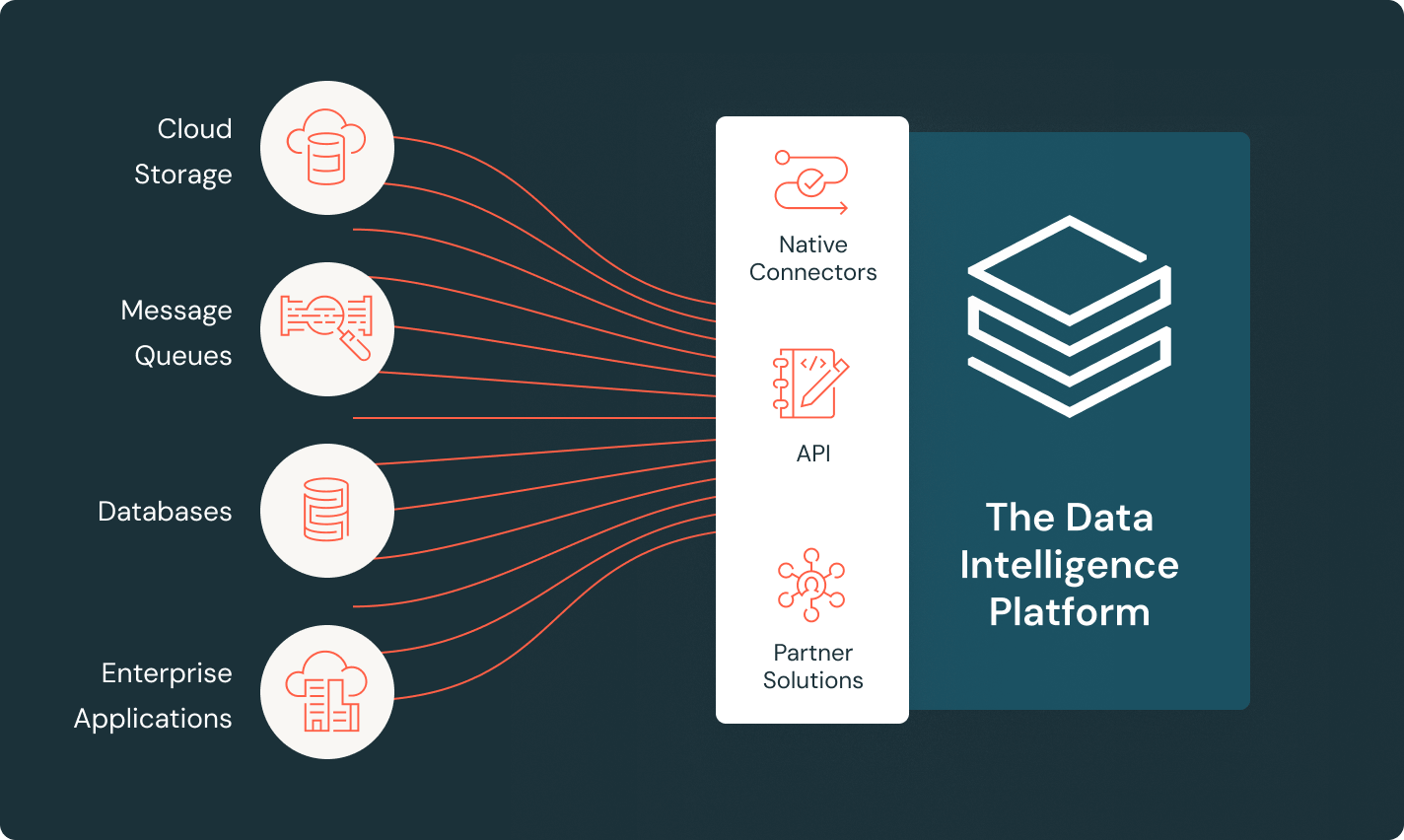

Unlock value from your data, no matter where it sits

Bring all your data into the Data Intelligence Platform using Lakeflow’s connectors to file sources, enterprise applications, and databases. Fully managed connectors use incremental data processing for efficient ingestion, and built-in governance keeps you in control of your data throughout the pipeline.
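As a rough illustration of what incremental data processing means, the sketch below copies only rows that changed since the previous run by tracking a cursor. It is plain Python and does not use any Lakeflow Connect API; the `IncrementalIngestor` class, the `updated_at` field, and the sample rows are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass
class IncrementalIngestor:
    """Hypothetical helper: copies only rows newer than a stored cursor."""
    cursor: int = 0                                # highest `updated_at` ingested so far
    target: list = field(default_factory=list)     # stands in for the destination table

    def run(self, source_rows):
        # Select only rows changed since the previous run.
        new_rows = [r for r in source_rows if r["updated_at"] > self.cursor]
        self.target.extend(new_rows)
        if new_rows:
            # Advance the cursor so the next run skips these rows.
            self.cursor = max(r["updated_at"] for r in new_rows)
        return len(new_rows)


# Assumed sample data: `updated_at` is a monotonically increasing version number.
source = [
    {"id": 1, "updated_at": 10},
    {"id": 2, "updated_at": 20},
]
ingestor = IncrementalIngestor()
first = ingestor.run(source)       # first run ingests both existing rows
source.append({"id": 3, "updated_at": 30})
second = ingestor.run(source)      # second run ingests only the new row
```

Because each run reads only what lies beyond the cursor, the cost of a run scales with the amount of new data rather than the size of the source.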

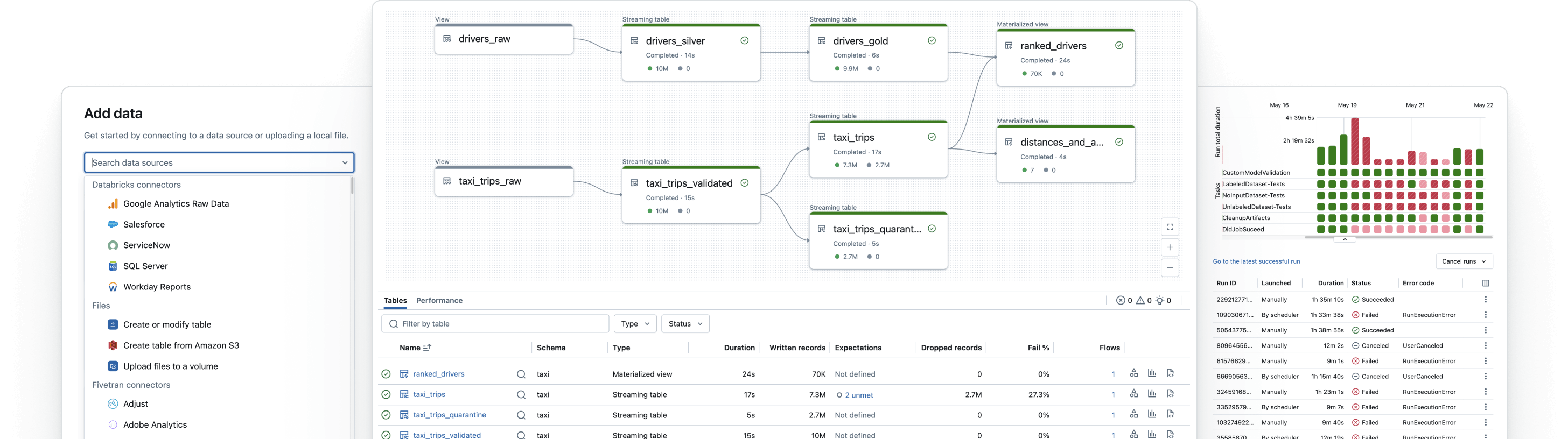

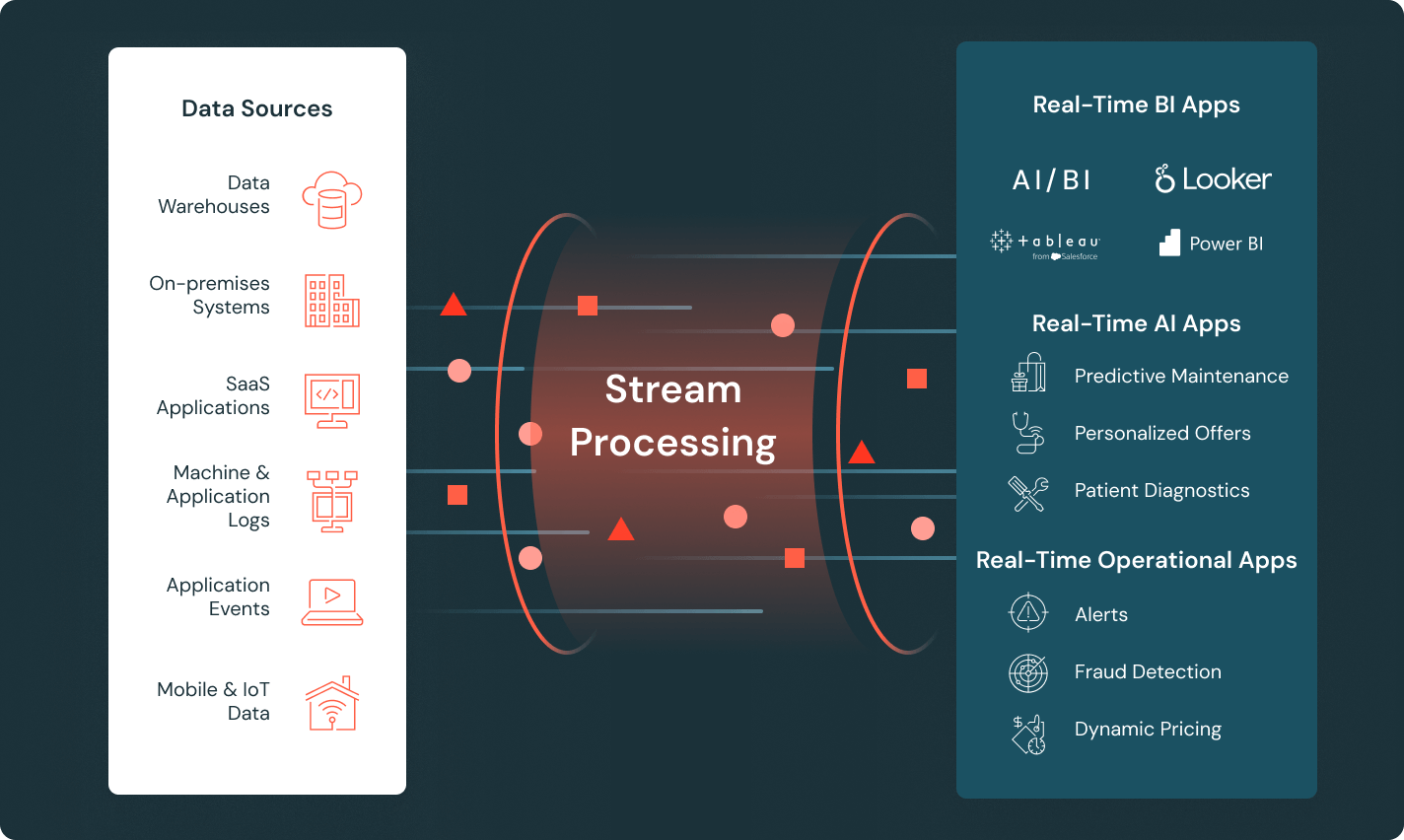

Deliver fresh data for real-time insights

Build pipelines that process data arriving in real time from sensors, clickstreams, and IoT devices, and that feed real-time applications. Reduce operational complexity with Spark Declarative Pipelines and use streaming tables to simplify pipeline development.

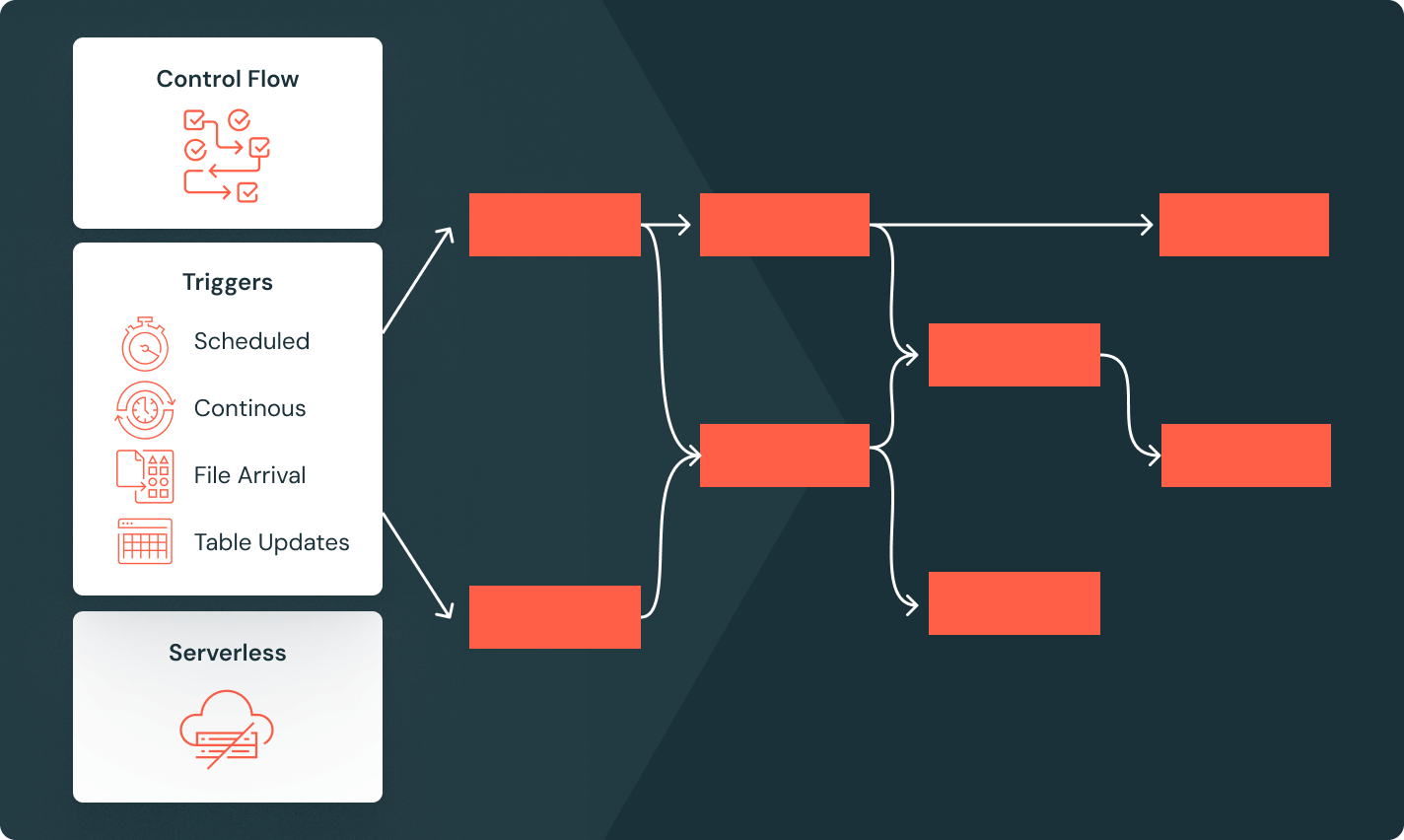

Orchestrate complex workflows with ease

Define reliable analytics and AI workflows with a managed orchestrator integrated with your data platform. Easily implement complex directed acyclic graphs (DAGs) using enhanced control-flow capabilities such as conditional execution and looping, along with a variety of job triggers.
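To make conditional execution in a DAG concrete, here is a minimal sketch in plain Python using the standard library's `graphlib`. It is not the Lakeflow Jobs API; `run_dag`, the task names, and the conditions are assumptions made up for the example.

```python
from graphlib import TopologicalSorter


def run_dag(tasks, deps, conditions=None):
    """Run tasks in dependency order.

    A task is skipped when its condition returns False or when any of its
    upstream tasks was skipped.
    """
    conditions = conditions or {}
    results, skipped = {}, set()
    # static_order() yields each task only after all of its predecessors.
    for name in TopologicalSorter(deps).static_order():
        if any(upstream in skipped for upstream in deps.get(name, ())):
            skipped.add(name)
            continue
        condition = conditions.get(name)
        if condition is not None and not condition(results):
            skipped.add(name)
            continue
        results[name] = tasks[name](results)
    return results, skipped


# Hypothetical workflow: publish only if validation passes, otherwise alert.
tasks = {
    "ingest": lambda r: 120,                        # pretend 120 rows arrived
    "validate": lambda r: r["ingest"] > 0,
    "publish": lambda r: f"published {r['ingest']} rows",
    "alert": lambda r: "alert sent",
}
deps = {
    "ingest": [],
    "validate": ["ingest"],
    "publish": ["validate"],
    "alert": ["validate"],
}
conditions = {
    "publish": lambda r: r["validate"],
    "alert": lambda r: not r["validate"],
}
results, skipped = run_dag(tasks, deps, conditions)
```

In this run validation succeeds, so `publish` executes and `alert` is skipped. A managed orchestrator adds scheduling, retries, and triggers on top of the same dependency-ordering idea.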

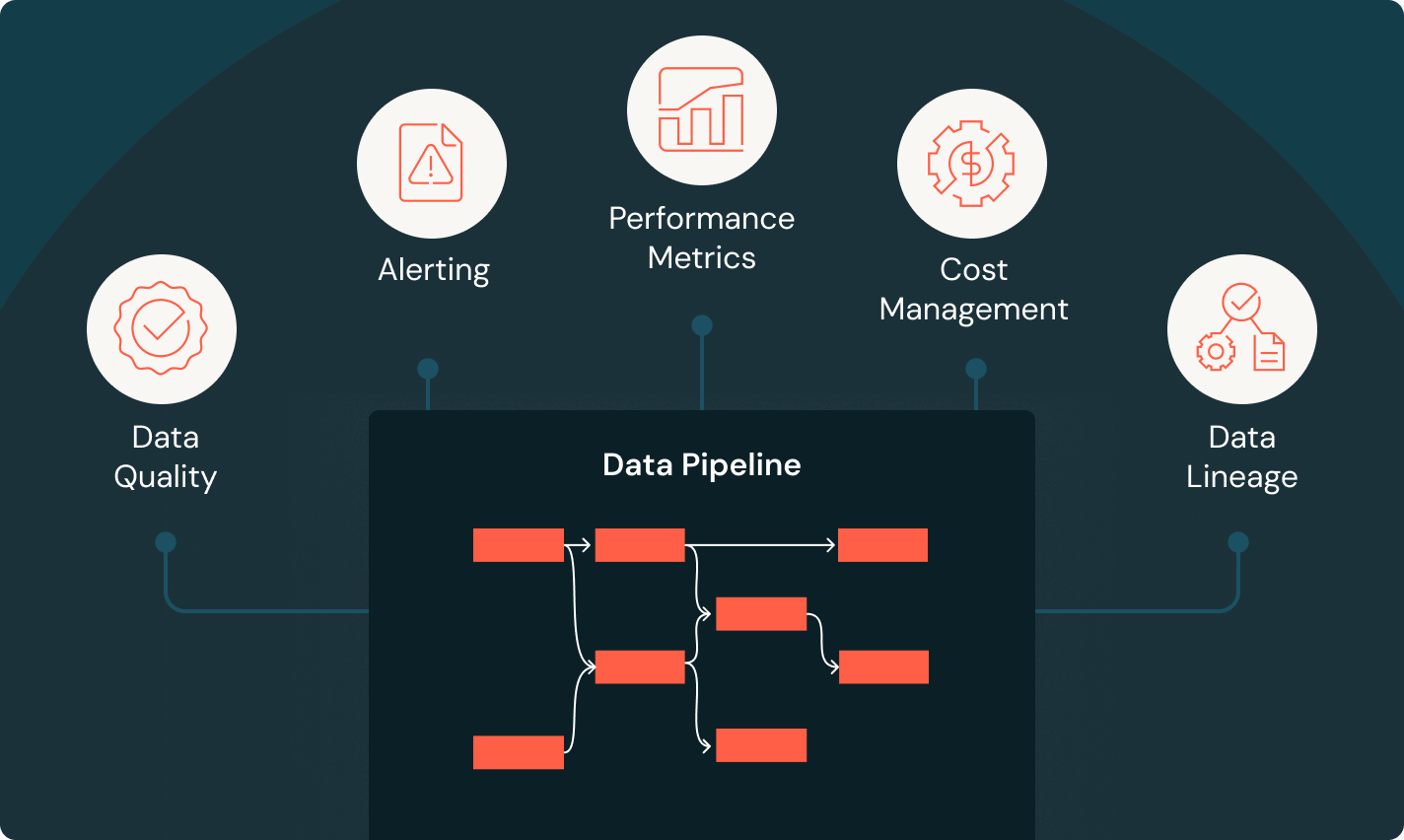

Monitor every step of every pipeline

Gain full observability into pipeline health with comprehensive real-time metrics. Custom alerts ensure you know exactly when issues occur, and detailed visuals pinpoint the root cause of a failure so you can troubleshoot quickly. With end-to-end monitoring, you stay in control of your data and your pipelines.
