Building, Improving, and Deploying Knowledge Graph RAG Systems on Databricks

Summary

- Overview of GraphRAG: The blog explores how retrieval-augmented generation (RAG) systems can be enhanced with graph databases like Neo4j, enabling more precise AI outputs by capturing semantic relationships between entities in structured data.

- Use Cases & Benefits: GraphRAG can be applied in cybersecurity for threat detection, as well as in industries like manufacturing for predictive maintenance and supply chain management, providing deeper insights from complex datasets.

- Implementation on Databricks: The blog outlines how to build and deploy a GraphRAG system on Databricks using Neo4j, showcasing the integration of LLMs, Delta Tables, and the Mosaic AI Agent Framework for end-to-end deployment.

Understanding GraphRAG

What is a Knowledge Graph?

To understand why one may use a Knowledge Graph (KG) instead of another structured data representation, it’s important to recognize its focus on explicit relationships between entities—such as businesses, people, machinery, or customers—and their associated attributes or features. Unlike embeddings or vector search, which prioritize similarity in high-dimensional spaces, a Knowledge Graph excels at representing the semantic connections and context between data points. A basic unit of a knowledge graph is a fact. Facts can be represented as a triplet in either of the following ways:

- HRT: <head, relation, tail>

- SPO: <subject, predicate, object>

Two simple KG examples are shown below. In the left example, one fact could be <Andrea, loves, Irene>. A KG is simply a collection of many such facts. As you may notice, however, graphs carry semantics: the left example does not describe a romantic relationship between two people, while the right example does.

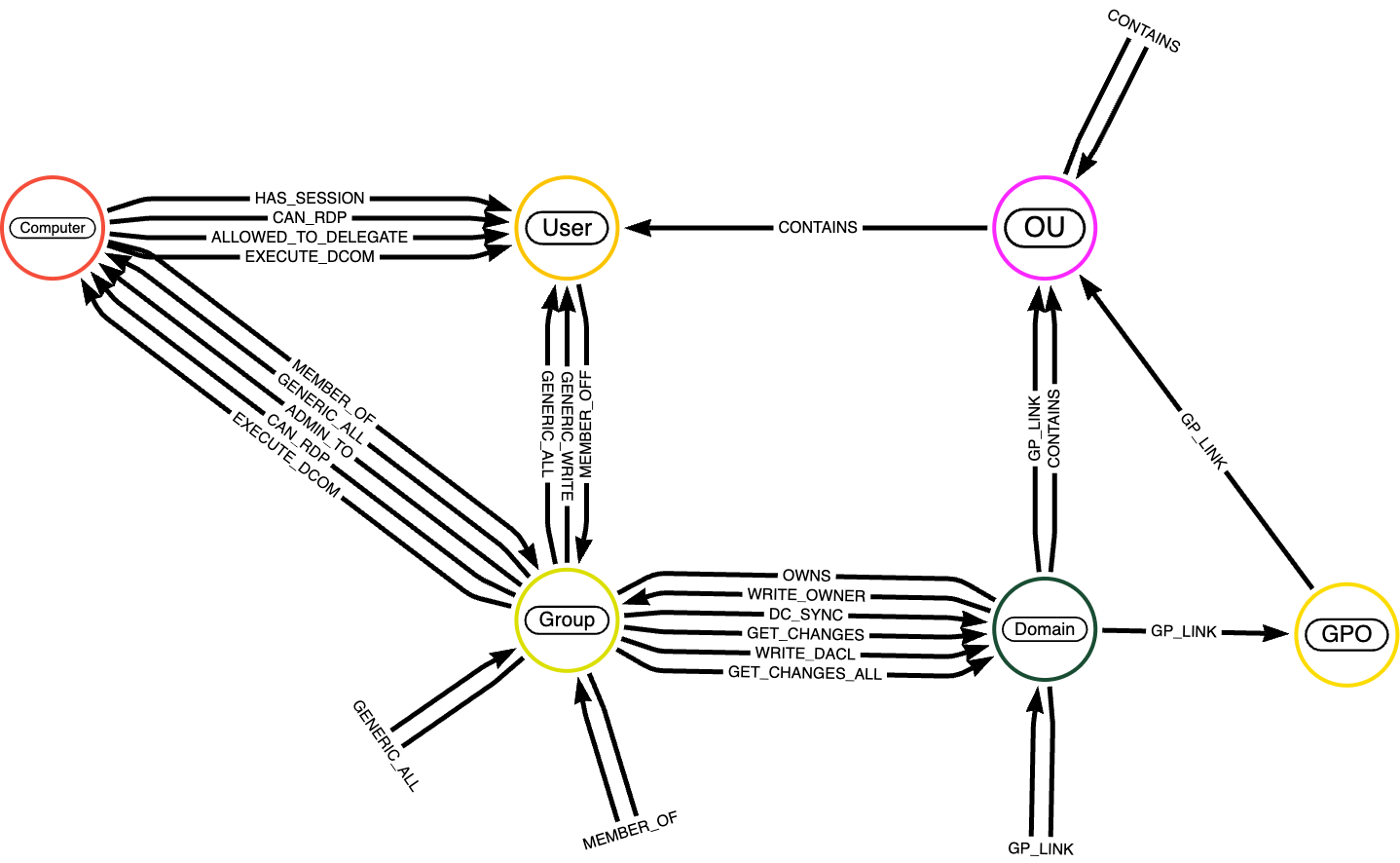
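The following minimal Python sketch makes the triplet representation concrete. It is not taken from the original notebook. It stores facts as <head, relation, tail> tuples. The `Fact`, `holds`, and `is_mutual` names are hypothetical. Treating a relationship as mutual only when both directed facts are stored is an illustrative assumption, not a rule of any particular graph database.

```python
from typing import NamedTuple, Set


class Fact(NamedTuple):
    """One fact in <head, relation, tail> (equivalently <subject, predicate, object>) form."""
    head: str
    relation: str
    tail: str


# A knowledge graph is just a collection of facts.
kg: Set[Fact] = {Fact("Andrea", "loves", "Irene")}


def holds(graph: Set[Fact], head: str, relation: str, tail: str) -> bool:
    """Exact lookup: is this directed fact stored in the graph?"""
    return Fact(head, relation, tail) in graph


def is_mutual(graph: Set[Fact], a: str, relation: str, b: str) -> bool:
    """Illustrative assumption: a relation is mutual only if both directions are stated."""
    return holds(graph, a, relation, b) and holds(graph, b, relation, a)


print(holds(kg, "Andrea", "loves", "Irene"))      # True
print(is_mutual(kg, "Andrea", "loves", "Irene"))  # False: only one direction is stored
kg.add(Fact("Irene", "loves", "Andrea"))
print(is_mutual(kg, "Andrea", "loves", "Irene"))  # True
```

The point is that the meaning lives in the explicit, directed edges. Nothing about the reverse relationship is inferred unless a fact states it.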

Now that you understand the significance of semantics in Knowledge Graphs, let's introduce you to the dataset we'll use in the upcoming code examples: the BloodHound dataset. BloodHound is a specialized dataset designed for analyzing relationships and interactions within Active Directory environments. It is widely used for security auditing, attack path analysis, and gaining insights into potential vulnerabilities in network structures.

Nodes in the BloodHound dataset represent entities within an Active Directory environment. These typically include:

- Users: individual user accounts in the domain.

- Groups: security or distribution groups that aggregate users or other groups for permission assignments.

- Computers: individual machines in the network (workstations or servers).

- Domains: Active Directory domains, which organize and manage users, computers, and groups.

- Organizational Units (OUs): containers used for structuring and managing objects like users or groups.

- GPOs (Group Policy Objects): policies applied to users and computers within the domain.

A detailed description of node entities is available here. Relationships in the graph define interactions, memberships, and permissions between nodes; a full description of the edges is available here.
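Attack path analysis comes down to finding paths through these relationships. The following minimal Python sketch runs a breadth-first search over a hypothetical toy graph. The node names are invented. Only the edge types `MemberOf`, `AdminTo`, and `HasSession` are borrowed from BloodHound's vocabulary. In a real deployment, this traversal would run as a Cypher query inside Neo4j rather than as in-memory Python.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

# Hypothetical toy graph: source node -> list of (relationship type, target node).
Edges = Dict[str, List[Tuple[str, str]]]

EDGES: Edges = {
    "USER:alice": [("MemberOf", "GROUP:helpdesk")],
    "GROUP:helpdesk": [("AdminTo", "COMPUTER:ws01")],
    "COMPUTER:ws01": [("HasSession", "USER:bob")],
    "USER:bob": [("MemberOf", "GROUP:domain admins")],
}


def shortest_attack_path(edges: Edges, start: str, goal: str) -> Optional[List[str]]:
    """BFS returns the path with the fewest hops, as alternating nodes and edge types."""
    queue = deque([start])
    # parent[node] = (previous node, relationship used to reach node); None for the start.
    parent: Dict[str, Optional[Tuple[str, str]]] = {start: None}
    while queue:
        node = queue.popleft()
        if node == goal:
            path = [node]
            step = parent[node]
            while step is not None:
                prev, rel = step
                path = [prev, rel] + path
                step = parent[prev]
            return path
        for rel, nxt in edges.get(node, []):
            if nxt not in parent:  # visit each node once
                parent[nxt] = (node, rel)
                queue.append(nxt)
    return None  # goal not reachable from start


path = shortest_attack_path(EDGES, "USER:alice", "GROUP:domain admins")
print(" -> ".join(path) if path else "no path")
```

The following Cypher query is a rough equivalent in Neo4j: `MATCH p = shortestPath((u:User {name: 'ALICE'})-[:MemberOf|AdminTo|HasSession*1..]->(g:Group {name: 'DOMAIN ADMINS'})) RETURN p`. The `name` values are placeholders.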

When to choose GraphRAG over Traditional RAG

The primary advantage of GraphRAG over standard RAG lies in its ability to perform exact matching during the retrieval step. This is made possible in part by explicitly preserving the semantics of the natural language query in the downstream graph query language (Cypher, in the case of Neo4j). While dense retrieval techniques based on cosine similarity excel at capturing fuzzy semantics and retrieving related information even when the query isn't an exact match, there are cases where precision is critical. This makes GraphRAG particularly valuable in domains where ambiguity is unacceptable, such as compliance, legal, or highly curated datasets.

That said, the two approaches are not mutually exclusive and are often combined to leverage their respective strengths. Dense retrieval can cast a wide net for semantic relevance, while the knowledge graph refines the results with exact matches or reasoning over relationships.
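The following small Python sketch shows the contrast between fuzzy and exact retrieval, and how the two combine. Everything here is a hypothetical toy. The 3-dimensional "embeddings" stand in for a real embedding model. Cosine scores are computed exhaustively rather than through an index. The users and group memberships are invented.

```python
import math
from typing import Dict, List, Set, Tuple

Vector = List[float]
Triple = Tuple[str, str, str]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def dense_top_k(query: Vector, index: Dict[str, Vector], k: int) -> List[str]:
    """Fuzzy step: rank every item by cosine similarity and keep the k best."""
    return sorted(index, key=lambda name: cosine(query, index[name]), reverse=True)[:k]


def graph_refine(candidates: List[str], facts: Set[Triple], relation: str, tail: str) -> List[str]:
    """Exact step: keep only candidates that have the required explicit relationship."""
    return [c for c in candidates if (c, relation, tail) in facts]


# Hypothetical toy data.
INDEX: Dict[str, Vector] = {
    "alice": [0.9, 0.1, 0.0],
    "bob": [0.8, 0.2, 0.1],
    "carol": [0.0, 0.1, 0.9],
}
FACTS: Set[Triple] = {
    ("bob", "MemberOf", "DOMAIN ADMINS"),
    ("alice", "MemberOf", "DOMAIN USERS"),
}
QUERY: Vector = [1.0, 0.1, 0.0]  # stands in for an embedded "Which users are domain admins?"

candidates = dense_top_k(QUERY, INDEX, k=2)
print(candidates)                                                     # ['alice', 'bob']
print(graph_refine(candidates, FACTS, "MemberOf", "DOMAIN ADMINS"))  # ['bob']
```

Dense retrieval ranks `alice` highest because her vector is closest to the query. However, only `bob` has the explicit `MemberOf` edge that the question requires. The exact graph check removes the near miss.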

When to choose Traditional RAG over GraphRAG

While GraphRAG has unique advantages, it also comes with challenges. A key hurdle is defining the problem correctly—not all data or use cases are well-suited for a Knowledge Graph. If the task involves highly unstructured text or doesn’t require explicit relationships, the added complexity may not be worth it, leading to inefficiencies and suboptimal results.

Another challenge is structuring and maintaining the Knowledge Graph. Designing an effective schema requires careful planning to balance detail and complexity. Poor schema design can impact performance and scalability, while ongoing maintenance demands resources and expertise.

Real-time performance is another limitation. Graph databases like Neo4j can struggle with real-time queries on large or frequently updated datasets due to complex traversals and multi-hop queries, making them slower than dense retrieval systems. In such cases, a hybrid approach—using dense retrieval for speed and graph refinement for post-query analysis—can provide a more practical solution.

GraphDB and embeddings

Graph databases like Neo4j often also provide vector search through HNSW (Hierarchical Navigable Small World) indexes. The difference lies in how they use this index to deliver better results than standalone vector databases. When you perform a query, Neo4j uses the HNSW index to identify the closest matching embeddings based on measures like cosine similarity or Euclidean distance. This step is crucial for finding a starting point in your data that aligns semantically with the query, leveraging the implicit semantics captured by the vector search.

What sets graph databases apart is their ability to combine this initial vector-based retrieval with their powerful traversal capabilities. After finding the entry point using the HNSW index, Neo4j leverages the explicit semantics defined by the relationships in the knowledge graph. These relationships allow the database to traverse the graph and gather additional context, uncovering meaningful connections between nodes. This combination of implicit semantics from embeddings and explicit semantics from graph relationships enables graph databases to provide more precise and contextually rich answers than either approach could achieve alone.
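The two-phase pattern (vector entry point, then relationship traversal) can be sketched in plain Python. As a simplifying assumption, an exhaustive nearest-neighbor search stands in for the approximate HNSW lookup. The graph, the embeddings, and the `max_hops` limit are hypothetical.

```python
import math
from collections import deque
from typing import Dict, List, Tuple

Vector = List[float]
Triple = Tuple[str, str, str]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def nearest_node(query: Vector, embeddings: Dict[str, Vector]) -> str:
    """Entry point: exhaustive search here; an HNSW index approximates this at scale."""
    return max(embeddings, key=lambda node: cosine(query, embeddings[node]))


def expand(entry: str, triples: List[Triple], max_hops: int) -> List[Triple]:
    """Collect every fact reachable from `entry` within `max_hops` outgoing edges."""
    outgoing: Dict[str, List[Triple]] = {}
    for t in triples:
        outgoing.setdefault(t[0], []).append(t)
    context: List[Triple] = []
    seen = {entry}
    frontier = deque([(entry, 0)])
    while frontier:
        node, depth = frontier.popleft()
        if depth == max_hops:
            continue  # hop budget exhausted on this branch
        for t in outgoing.get(node, []):
            context.append(t)
            if t[2] not in seen:
                seen.add(t[2])
                frontier.append((t[2], depth + 1))
    return context


# Hypothetical toy data.
EMBEDDINGS: Dict[str, Vector] = {
    "COMPUTER:ws01": [0.1, 0.9],
    "GROUP:helpdesk": [0.5, 0.5],
    "USER:bob": [0.9, 0.1],
}
TRIPLES: List[Triple] = [
    ("COMPUTER:ws01", "HasSession", "USER:bob"),
    ("USER:bob", "MemberOf", "GROUP:domain admins"),
    ("GROUP:domain admins", "AdminTo", "COMPUTER:dc01"),
]
QUERY: Vector = [0.0, 1.0]

entry = nearest_node(QUERY, EMBEDDINGS)   # implicit semantics: 'COMPUTER:ws01'
context = expand(entry, TRIPLES, max_hops=2)  # explicit semantics: facts around it
for fact in context:
    print(fact)
```

In Neo4j 5.x, the entry step is typically the `db.index.vector.queryNodes` procedure. The node it returns then anchors a `MATCH` over the surrounding relationships, and the retrieved facts become the context passed to the LLM.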

End-to-End GraphRAG in Databricks

GraphRAG is a great example of Compound AI systems in action, where multiple AI components work together to make retrieval smarter and more context-aware. In this section, we’ll take a high-level look at how everything fits together.

GraphRAG Architecture

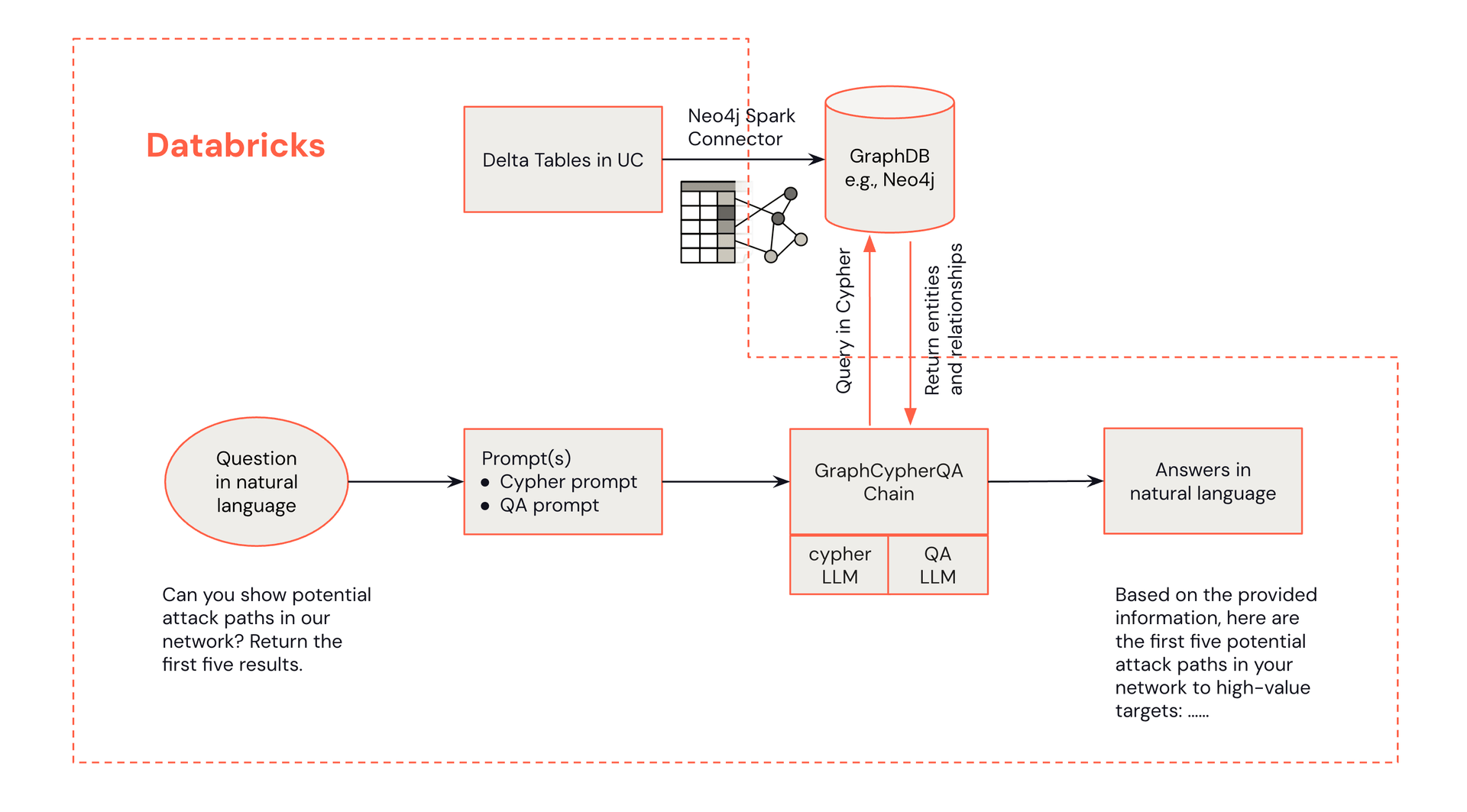

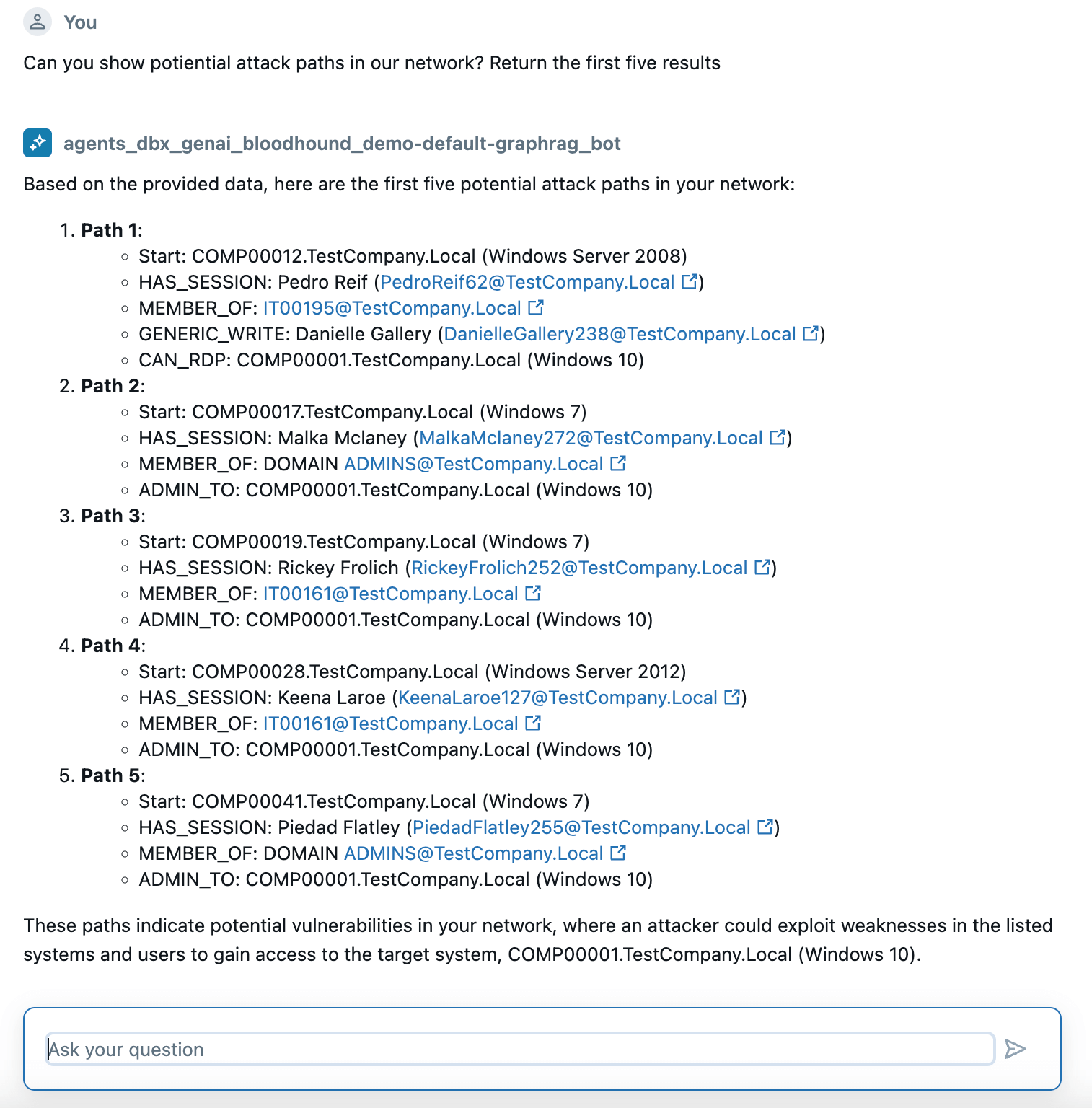

The architecture diagram above shows how an analyst’s natural language questions can retrieve information from a Neo4j knowledge graph.

The architecture for GraphRAG-powered threat detection combines the strengths of Databricks and Neo4j:

- Security Operations Center (SOC) Analyst Interface: Analysts interact with the system through Databricks, initiating queries and receiving alert recommendations.

- Databricks Processing: Databricks handles data processing, LLM integration, and serves as the central hub for the solution.

- Neo4j Knowledge Graph: Neo4j stores and manages the cybersecurity knowledge graph, enabling complex relationship queries.

Implementation Overview

For this blog, we’re skipping the code details—check out the GitHub repository for the full implementation. Let’s walk through the key steps to build and deploy a GraphRAG agent.

- Build a Knowledge Graph from Delta Tables: In the notebook, we cover scenarios with both structured and unstructured data. The Neo4j Spark Connector provides a simple way to transform data in Unity Catalog into graph entities (nodes and relationships).

- Deploy LLMs for Cypher Query and QA: GraphRAG requires LLMs for both Cypher query generation and answer summarization. We demonstrated how to deploy gpt-4o, llama-3.x, and a fine-tuned text2cypher model from Hugging Face, and how to serve them using a provisioned throughput endpoint.

- Create and Test GraphRAG Chain: We demonstrated how to use different LLMs and prompts for Cypher generation and for question answering via GraphCypherQAChain. This lets us tune the chain further using glass-box tracing results from MLflow Tracing.

- Deploy the Agent with Mosaic AI Agent Framework: Use Mosaic AI Agent Framework and MLflow to deploy the agent. In the notebook, the process includes logging the model, registering it in Unity Catalog, deploying it to a serving endpoint, and launching a review app for chatting.
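The first step, building the graph from Delta tables, relies on the Neo4j Connector for Apache Spark. The connector writes DataFrames as nodes or relationships through DataFrame writer options. The following sketch only assembles those options in plain Python, so it runs without a cluster. The option names reflect our understanding of the connector's documentation, so check them against the connector version you install. The table, column, and secret names (`objectid`, `user_id`, `group_id`, `main.bloodhound.users`) are hypothetical.

```python
from typing import Dict

NEO4J_SPARK_FORMAT = "org.neo4j.spark.DataSource"


def _auth_options(url: str, user: str, password: str) -> Dict[str, str]:
    """Connection settings shared by node and relationship writes."""
    return {
        "url": url,
        "authentication.type": "basic",
        "authentication.basic.username": user,
        "authentication.basic.password": password,
    }


def node_options(url: str, user: str, password: str, label: str, key_column: str) -> Dict[str, str]:
    """One DataFrame row per node; `node.keys` lets the write merge on that key."""
    opts = _auth_options(url, user, password)
    opts.update({"labels": f":{label}", "node.keys": key_column})
    return opts


def relationship_options(url: str, user: str, password: str, rel_type: str,
                         source_label: str, source_key: str,
                         target_label: str, target_key: str) -> Dict[str, str]:
    """One row per relationship; endpoints are matched by 'column:property' key mappings."""
    opts = _auth_options(url, user, password)
    opts.update({
        "relationship": rel_type,
        "relationship.save.strategy": "keys",
        "relationship.source.labels": f":{source_label}",
        "relationship.source.node.keys": source_key,
        "relationship.target.labels": f":{target_label}",
        "relationship.target.node.keys": target_key,
    })
    return opts


def write_to_neo4j(df, options: Dict[str, str]) -> None:
    """`df` is assumed to be a Spark DataFrame, e.g. spark.table(...) over a Delta table."""
    df.write.format(NEO4J_SPARK_FORMAT).mode("Overwrite").options(**options).save()


# Hypothetical usage in a Databricks notebook (names and secret scope are placeholders):
# pwd = dbutils.secrets.get(scope="neo4j", key="password")
# users = spark.table("main.bloodhound.users")
# write_to_neo4j(users, node_options(NEO4J_URL, "neo4j", pwd, "User", "objectid"))
# members = spark.table("main.bloodhound.memberships")
# write_to_neo4j(members, relationship_options(NEO4J_URL, "neo4j", pwd, "MemberOf",
#                                              "User", "user_id:objectid",
#                                              "Group", "group_id:objectid"))
```

Write nodes before relationships. By default, the `keys` strategy matches relationship endpoints against nodes that already exist. Reading the password from a secret scope keeps credentials out of the notebook.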
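The Cypher-then-QA flow behind `GraphCypherQAChain` can also be traced by hand. In LangChain, `GraphCypherQAChain.from_llm` accepts separate `cypher_llm` and `qa_llm` arguments, plus `cypher_prompt` and `qa_prompt`. The following dependency-free sketch mirrors that two-stage structure with plain callables. The prompt templates, the stub LLMs, and the stub graph are hypothetical; they are not the library's defaults or the notebook's prompts.

```python
from typing import Any, Callable, Dict, List

LLM = Callable[[str], str]                           # prompt in, completion out
QueryRunner = Callable[[str], List[Dict[str, Any]]]  # Cypher in, result rows out

CYPHER_TEMPLATE = (
    "Task: Generate a Cypher statement to query a graph database.\n"
    "Use only the node labels, relationship types, and properties in the schema.\n"
    "Schema:\n{schema}\n"
    "Question: {question}\n"
    "Cypher:"
)
QA_TEMPLATE = (
    "Answer the question using only the query results below.\n"
    "Results: {context}\n"
    "Question: {question}\n"
    "Answer:"
)


def graph_qa(question: str, schema: str, cypher_llm: LLM,
             run_query: QueryRunner, qa_llm: LLM) -> Dict[str, Any]:
    """Stage 1: text-to-Cypher with the schema in the prompt. Stage 2: answer from the rows."""
    cypher = cypher_llm(CYPHER_TEMPLATE.format(schema=schema, question=question)).strip()
    rows = run_query(cypher)
    answer = qa_llm(QA_TEMPLATE.format(context=rows, question=question))
    # Returning the intermediate steps makes each stage easy to inspect or trace.
    return {"cypher": cypher, "rows": rows, "answer": answer}


# Hypothetical stubs so the example runs without an LLM or a Neo4j instance.
SCHEMA = "(:User)-[:MemberOf]->(:Group)"
prompts: List[str] = []


def stub_cypher_llm(prompt: str) -> str:
    prompts.append(prompt)
    return "MATCH (u:User)-[:MemberOf]->(:Group {name: 'DOMAIN ADMINS'}) RETURN u.name AS user"


def stub_run_query(cypher: str) -> List[Dict[str, Any]]:
    return [{"user": "BOB"}]


def stub_qa_llm(prompt: str) -> str:
    return "BOB is a member of DOMAIN ADMINS."


result = graph_qa("Who is in DOMAIN ADMINS?", SCHEMA, stub_cypher_llm, stub_run_query, stub_qa_llm)
print(result["cypher"])
print(result["answer"])
```

To use real models, replace the stubs with your endpoints. For example, a fine-tuned text2cypher model can serve as `cypher_llm` and a general-purpose model as `qa_llm`, which mirrors the separate Cypher and QA models described above.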
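The deployment step can be outlined with MLflow and the Databricks agents SDK (the `databricks-agents` package). This minimal sketch rests on several assumptions:

- The chain is defined in a separate Python file using MLflow's models-from-code pattern.
- The catalog, schema, model, and file names are placeholders.
- Argument names may differ across MLflow versions; newer releases rename `artifact_path`.

Only the naming helper runs outside Databricks.

```python
def uc_model_name(catalog: str, schema: str, model: str) -> str:
    """Unity Catalog models use a three-level name: <catalog>.<schema>.<model>."""
    parts = [catalog, schema, model]
    if any(not part or "." in part for part in parts):
        raise ValueError("each name part must be non-empty and contain no dots")
    return ".".join(parts)


def log_register_deploy(chain_file: str, input_example: dict,
                        catalog: str, schema: str, model: str):
    """Log the chain, register it in Unity Catalog, and deploy it (assumed workflow)."""
    # Imported lazily so uc_model_name() works without Databricks dependencies installed.
    import mlflow
    from databricks import agents

    mlflow.set_registry_uri("databricks-uc")  # register models in Unity Catalog
    with mlflow.start_run():
        info = mlflow.langchain.log_model(
            lc_model=chain_file,          # models-from-code: path to the file defining the chain
            artifact_path="graphrag_agent",
            input_example=input_example,  # also lets MLflow infer the signature UC requires
        )
    version = mlflow.register_model(info.model_uri, uc_model_name(catalog, schema, model))
    # As we understand it, deploy() creates the serving endpoint and the review app.
    return agents.deploy(version.name, version.version)


# Hypothetical usage in a Databricks notebook:
# log_register_deploy("graphrag_chain.py",
#                     {"messages": [{"role": "user", "content": "Who is in DOMAIN ADMINS?"}]},
#                     "main", "security", "graphrag_agent")
```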

Conclusion

GraphRAG is a powerful yet highly customizable approach to building agents that deliver more deterministic, contextually relevant AI outputs. However, its design is case-specific, requiring thoughtful architecture and problem-specific tuning. By integrating knowledge graphs with Databricks' scalable infrastructure and tools, you can build end-to-end Compound AI systems that seamlessly combine structured and unstructured data to generate actionable insights with deeper contextual understanding.
