The Impact of Data and AI on a Modern Business

It is no secret that there has been an explosion of data in the past 10 years. According to Forbes, from 2010 to 2020, the amount of data created, captured, copied, and consumed in the world increased from 1.2 trillion gigabytes to 59 trillion gigabytes, a growth of almost 5,000%.

The World Economic Forum estimates that by 2025, more than 463 exabytes of data will be created each day globally! To put it into context, every day:

- 294 billion emails are sent

- 4 terabytes of data are created by each connected car

- 65 billion messages are sent on WhatsApp

- 5 billion searches are made

So the question is: how can a business capitalize on data that arrives in many varieties (structured, semi-structured, and unstructured), at high velocity (the speed at which data is generated and processed), and in massive volume (the amount of data)? The good news is that top digital companies such as Google, Meta, and Amazon have built businesses rooted in data and AI. Studies show that insights-driven companies are 23 times more likely to add customers, 19 times more likely to be profitable, and grow 7 times faster than GDP.

Challenges of Business Initiatives

Now, more than ever, CEOs are focused on maximizing profit, reducing operational costs, and paying dividends to shareholders. Line-of-business leaders pursue multiple initiatives, such as growing revenue, improving customer experience, operating efficiently, automating labor-intensive work, and improving products and services. To support these objectives, organizations depend heavily on data and AI to make business decisions and predict outcomes. But leveraging data effectively is not easy:

- IBM found that poor data quality costs the US economy up to $3.1 trillion annually.

- Forrester reports that up to 73% of all data within an enterprise goes unused for analytics.

- According to a Forbes survey, 95% of businesses cite the need to manage unstructured data as a problem for their business.

Without significant changes to legacy data platforms, it is very hard to achieve the desired business outcomes. Legacy data architectures stitch together data products from multiple vendors to support these business initiatives, and they eventually fail because of:

- Complex Architecture: with a multi-product approach, teams must learn each vendor's proprietary languages and tools, which hurts productivity and slows time to market.

- High Latency: given the variety, velocity, and volume of data, business decisions have to be made in real time, which is very difficult to achieve with legacy data platforms.

- High TCO: owning data platforms from multiple vendors, and staffing the people needed to manage them, incurs high operational costs.

- Data Silos: they slow the development process, lead to less accurate ML models, and decrease team productivity.

Why Databricks Lakehouse Architecture?

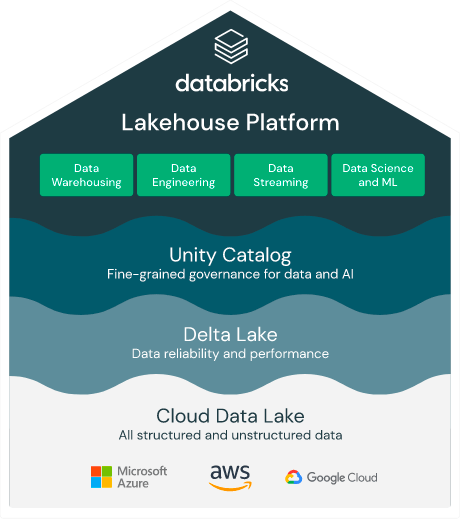

The Databricks Lakehouse Platform is a single platform for data warehousing, data engineering, data streaming, data analytics, and data science use cases. It combines the best elements of data lakes and data warehouses, delivering the reliability, strong governance, and performance of data warehouses with the openness, flexibility, and machine learning support of data lakes. The Databricks Lakehouse Platform is:

- Simple: the unified approach simplifies your data architecture by eliminating the data silos that traditionally separate analytics, BI, data science, and machine learning use cases.

- Open: our founders are the original creators of open-source platforms such as Apache Spark, MLflow, and Delta Lake. Delta Lake forms the open foundation of the lakehouse by providing reliability and world-record-setting performance directly on data in the data lake.

- Multicloud: the Databricks Lakehouse Platform offers you a consistent management, security, and governance experience across all clouds.

Impact of Databricks on Your Business Initiatives

According to Forrester, “In today’s hypercompetitive business environment, harnessing and applying data, business analytics, and machine learning at every opportunity to differentiate products and customer experiences is fast becoming a prerequisite for success.” So it’s no wonder enterprises are betting big on data analytics and AI. In fact, roughly 65% of CIOs at Fortune 1000 companies planned to invest over $50 million in data and AI projects in 2020.

Databricks commissioned a Forrester Consulting study: The Total Economic Impact™ (TEI) of the Databricks Unified Data Analytics Platform. In this study, Forrester examines how data teams, and the entire business, can move faster, collaborate better, and operate more efficiently when they have a unified, open platform for data engineering, machine learning, and big data analytics. Through customer interviews, Forrester found that organizations deploying Databricks realize nearly $29 million in total economic benefits and a return on investment of 417% over a three-year period. Forrester also concluded that the Databricks platform pays for itself in less than six months.

Conclusion

With continued technological advances, organizations are expected to generate a greater variety and volume of data than ever before. It is therefore vital for companies to capitalize on data and AI initiatives to cut operational costs and create new products and services. To achieve this, companies need to adopt big data and AI technology: a platform that can scale to clean, transform, and store huge volumes of data, and to train, retrain, and serve AI models.

If you have a pressing need for big data and AI, we are super excited to collaborate with you. Contact us.
