Introducing Predictive Optimization: Faster Queries, Cheaper Storage, No Sweat

We’re excited to announce the Public Preview of Databricks Predictive Optimization. This capability intelligently optimizes your table data layouts for improved performance and cost-efficiency.

Predictive Optimization leverages Unity Catalog and Lakehouse AI to determine the best optimizations to perform on your data, and then runs those operations on purpose-built serverless infrastructure. This significantly simplifies your lakehouse journey, freeing up your time to focus on getting business value from your data.

This capability is the latest in a long line of Databricks capabilities which harness AI to predictively perform actions based on your data and its access patterns. Previously, we released Predictive I/O for reads and updates, which apply these techniques when executing read and update queries.

Challenge

Lakehouse tables greatly benefit from background optimizations that improve their data layouts. These include compacting small files into appropriately sized ones and vacuuming to remove data files that are no longer needed. Proper optimization significantly improves performance while driving down costs.
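To make these two optimizations concrete, here is a minimal Python sketch of how a planner might decide what to compact and what to vacuum. It is not how Delta Lake or Databricks implements either operation. The `DataFile` record, the 128 MiB target size and the "under half the target" definition of a small file are all illustrative assumptions. The 168-hour (7-day) retention window mirrors the default retention period of Delta Lake's `VACUUM`.

```python
from dataclasses import dataclass

# Hypothetical target size for compacted files (not a Databricks default).
TARGET_FILE_BYTES = 128 * 1024 * 1024  # 128 MiB
# Mirrors Delta Lake's default VACUUM retention of 7 days.
DEFAULT_RETENTION_HOURS = 168.0


@dataclass(frozen=True)
class DataFile:
    path: str
    size_bytes: int
    referenced: bool   # True if the current table version still uses this file
    age_hours: float   # hypothetical: hours since the file stopped being referenced


def plan_compaction(files, target=TARGET_FILE_BYTES):
    """Group small, live files into batches of at most `target` bytes each.

    A file counts as "small" if it is under half the target (an assumed threshold).
    Batches with a single file are dropped, since rewriting one file gains nothing.
    """
    small = sorted(
        (f for f in files if f.referenced and f.size_bytes < target // 2),
        key=lambda f: f.size_bytes,
        reverse=True,
    )
    batches, current, current_size = [], [], 0
    for f in small:
        # Start a new batch when adding this file would overshoot the target.
        if current and current_size + f.size_bytes > target:
            batches.append(current)
            current, current_size = [], 0
        current.append(f.path)
        current_size += f.size_bytes
    if current:
        batches.append(current)
    return [batch for batch in batches if len(batch) > 1]


def plan_vacuum(files, retention_hours=DEFAULT_RETENTION_HOURS):
    """Return paths of unreferenced files older than the retention window."""
    return [f.path for f in files if not f.referenced and f.age_hours > retention_hours]
```

Even this toy version raises the questions listed next: what target size to use, which tables to plan for and how often to rerun the planner.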

However, this creates an ongoing challenge for data engineering teams, who need to figure out:

- Which optimizations to run?

- Which tables should be optimized?

- How often to run these optimizations?

As lakehouse platforms grow in scale and become increasingly self-service, platform teams find it virtually impossible to answer these questions effectively. A recurring sentiment we hear from our customers is that they cannot keep up with optimizing the growing number of tables created for new business use cases.

Furthermore, even once these thorny questions are answered, teams must still deal with the operational burden of scheduling and running these optimizations, such as scheduling jobs, diagnosing failures, and managing the underlying infrastructure.

How Predictive Optimization works

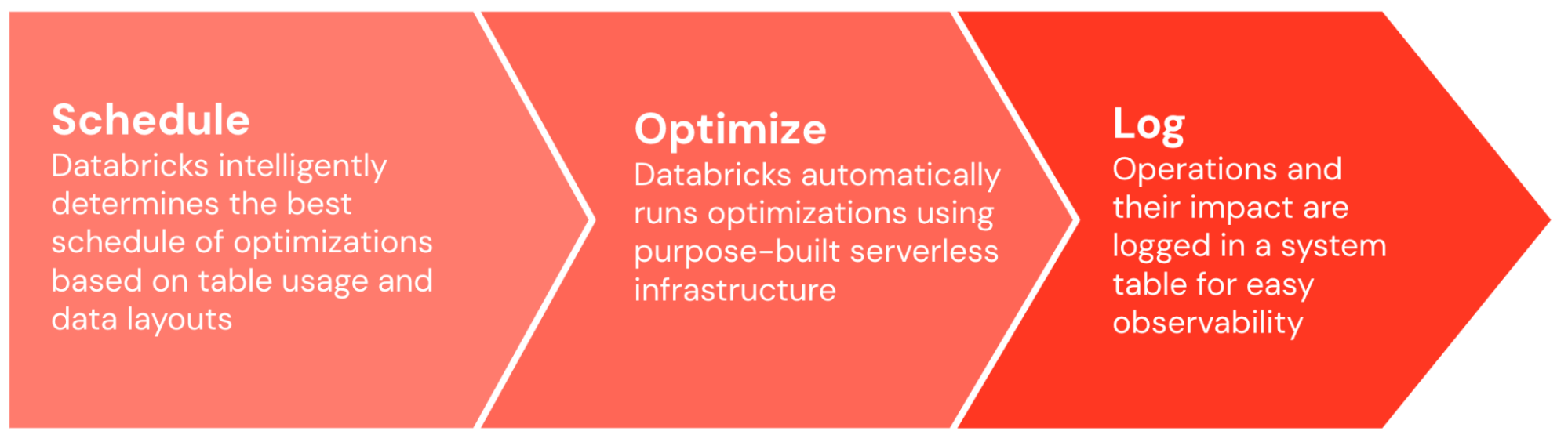

With Predictive Optimization, Databricks tackles these thorny problems for you, freeing up your valuable time to focus on driving business value with your data. Predictive Optimization can be enabled with a single click. From there, it does all the heavy lifting.

First, Predictive Optimization intelligently determines which optimizations to run, and how often to run them. Our AI model considers a wide range of inputs, including the usage patterns of your tables, and their existing data layout and performance characteristics. It then outputs the ideal optimization schedule, weighing the expected benefits of optimization against the expected compute costs.
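The trade-off between expected benefit and expected compute cost can be illustrated with a deliberately simple model. This is not the Predictive Optimization model, whose inputs and internals are not described here. It is a hypothetical sketch that assumes each table has an estimated query-time saving per read and an estimated compute cost per optimization run. It then keeps only the tables whose expected benefit over a horizon exceeds that cost. `TableStats`, `COST_PER_QUERY_SECOND` and the 7-day horizon are illustrative names and values.

```python
from dataclasses import dataclass

# Hypothetical price used to convert saved query time into cost units.
COST_PER_QUERY_SECOND = 0.001


@dataclass(frozen=True)
class TableStats:
    name: str
    reads_per_day: float               # observed usage pattern
    est_seconds_saved_per_read: float  # hypothetical estimate of the layout benefit
    est_optimize_cost: float           # hypothetical compute cost of one run (> 0)


def expected_benefit(t, horizon_days):
    """Estimated value of faster reads over the horizon, in cost units."""
    return t.reads_per_day * horizon_days * t.est_seconds_saved_per_read * COST_PER_QUERY_SECOND


def choose_tables(tables, horizon_days=7):
    """Return tables worth optimizing, highest benefit-to-cost ratio first.

    Optimization costs are assumed to be positive.
    """
    worthwhile = []
    for t in tables:
        benefit = expected_benefit(t, horizon_days)
        if benefit > t.est_optimize_cost:  # skip runs that would not pay for themselves
            worthwhile.append((benefit / t.est_optimize_cost, t.name))
    worthwhile.sort(reverse=True)
    return [name for _, name in worthwhile]
```

Suppose `est_seconds_saved_per_read` is re-estimated after each run. A freshly optimized table then scores low and is skipped, which is one simple way to avoid the diminishing returns of running optimizations on a fixed schedule.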

Once the schedule is generated, Predictive Optimization automatically runs these optimizations on purpose-built serverless infrastructure. It handles spinning up the right number and size of machines, and ensures that optimization tasks are properly bin-packed and scheduled for optimal efficiency.
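Bin-packing tasks onto machines is a classic packing problem. The sketch below uses first-fit decreasing, a standard heuristic, to place tasks with estimated costs onto as few fixed-capacity machines as possible. The post does not say which packing strategy the serverless infrastructure uses. The function name, the cost units and the choice of heuristic are assumptions for illustration.

```python
def bin_pack_tasks(task_costs, machine_capacity):
    """Assign tasks to machines with first-fit decreasing.

    task_costs maps a task name to its estimated cost (e.g. core-hours);
    each machine can absorb at most machine_capacity. Returns one list of
    task names per machine.
    """
    machines = []  # each entry is [remaining_capacity, [task names]]
    # Placing the largest tasks first tends to leave fewer unusable gaps.
    for name, cost in sorted(task_costs.items(), key=lambda kv: kv[1], reverse=True):
        if cost > machine_capacity:
            raise ValueError(f"task {name!r} does not fit on a single machine")
        for machine in machines:
            if machine[0] >= cost:  # first machine with enough room wins
                machine[0] -= cost
                machine[1].append(name)
                break
        else:  # no existing machine had room, so provision a new one
            machines.append([machine_capacity - cost, [name]])
    return [names for _, names in machines]
```

For example, four tasks costing 5, 4, 3 and 2 units fit onto two machines of capacity 7: one runs the 5- and 2-unit tasks, the other the 4- and 3-unit tasks.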

The whole system runs end-to-end without manual tweaking or tuning. It learns from your organization’s usage over time, optimizing the tables that matter to your organization while deprioritizing those that don’t. You are billed only for the serverless compute required to perform the optimizations. Out of the box, all operations are logged in a system table, so you can easily audit and understand their impact and cost.
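Because every operation is logged, auditing cost comes down to aggregating log records. The sketch below totals usage per table and operation type over a list of records. The field names `table_name`, `operation_type` and `usage_quantity` are hypothetical stand-ins, so check the system table's documented schema before adapting it.

```python
from collections import defaultdict


def summarize_operations(records):
    """Total usage per (table, operation type), largest consumers first.

    Each record is a dict with the hypothetical keys 'table_name',
    'operation_type', and 'usage_quantity'.
    """
    totals = defaultdict(float)
    for record in records:
        key = (record["table_name"], record["operation_type"])
        totals[key] += record["usage_quantity"]
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)
```

Sorting by total usage puts the most expensive optimizations at the top, which is usually where an audit starts.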

Impact

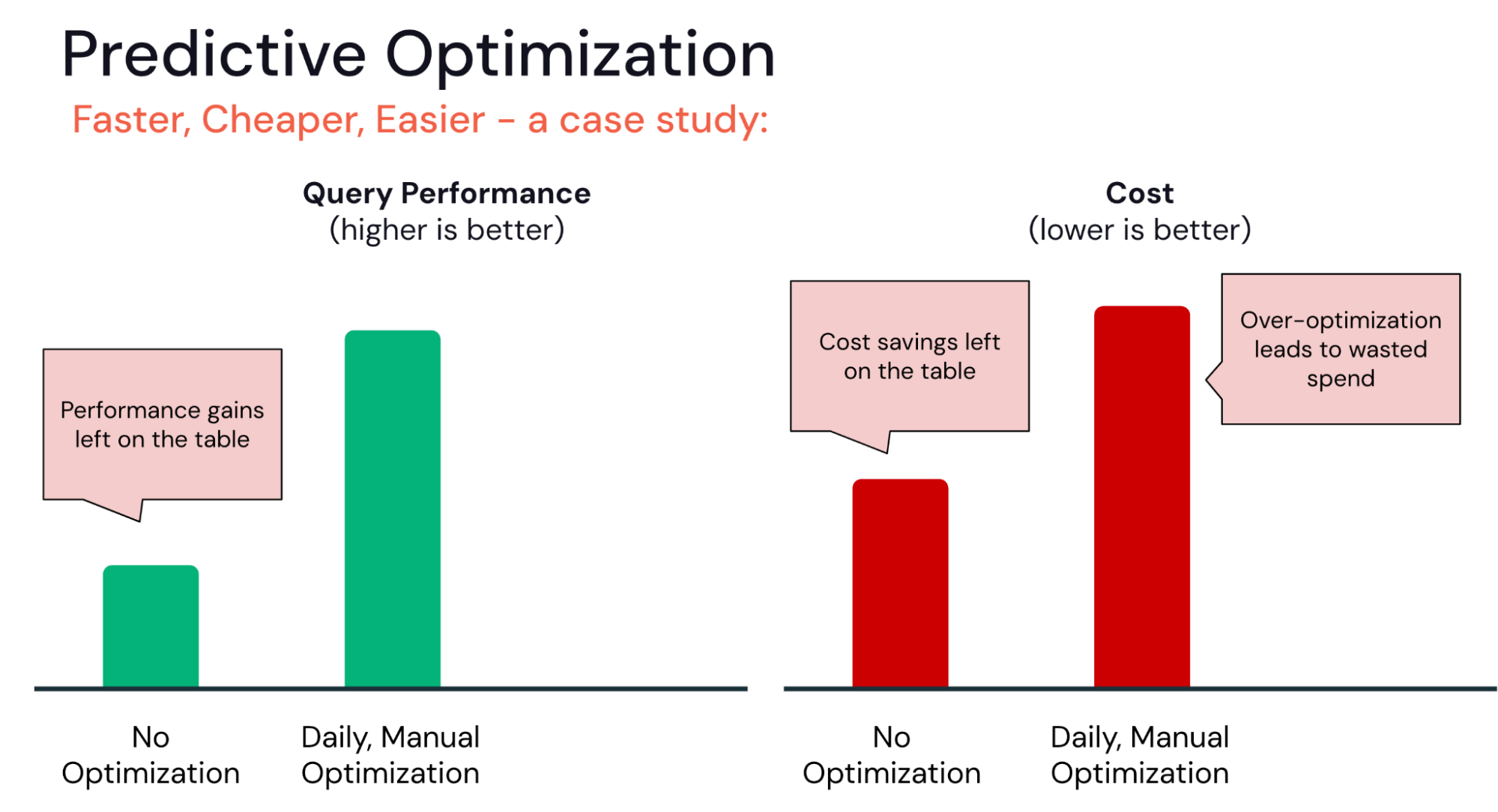

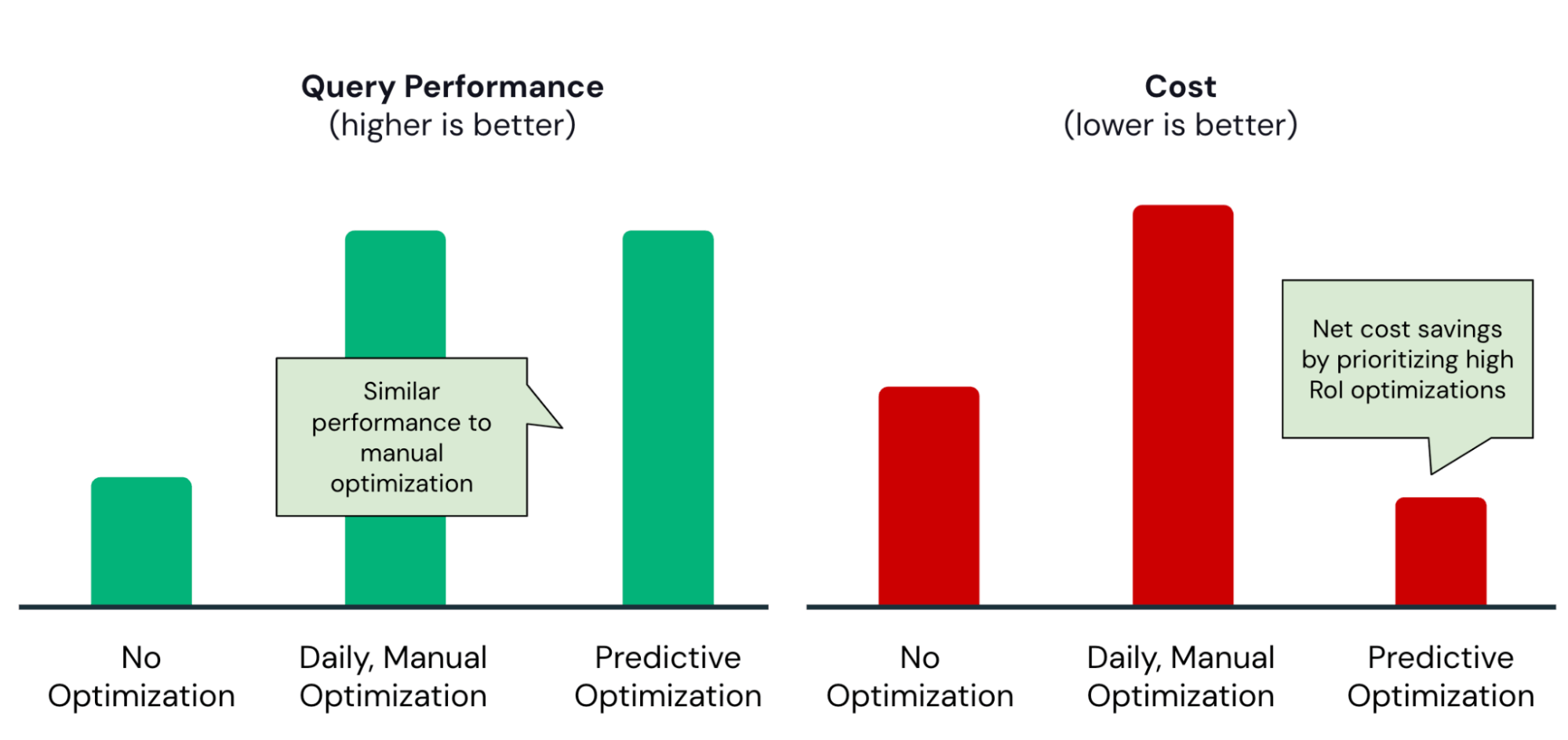

In the last few months, we have enrolled a number of customers in the private preview program for Predictive Optimization. Many have observed that it is able to find the sweet spot between two common extremes:

On one extreme, some organizations haven’t yet stood up sophisticated table optimization pipelines. With Predictive Optimization, they can instantly start optimizing their tables without figuring out the best optimization schedule or managing infrastructure.

On the other extreme, some organizations may be over-investing in optimization. For example, a team automating its optimization pipelines may be tempted to run hourly or daily OPTIMIZE or VACUUM jobs. However, such frequent jobs risk diminishing returns. Might the same performance gains be achieved with fewer optimization operations?

Predictive Optimization helps find the right balance, ensuring that optimizations run only when they deliver a high return on investment.

As a concrete example, the Data Engineering team at Anker enabled Predictive Optimization and quickly realized these benefits:

|

50% reduction in annual storage costs

|

|

Get started

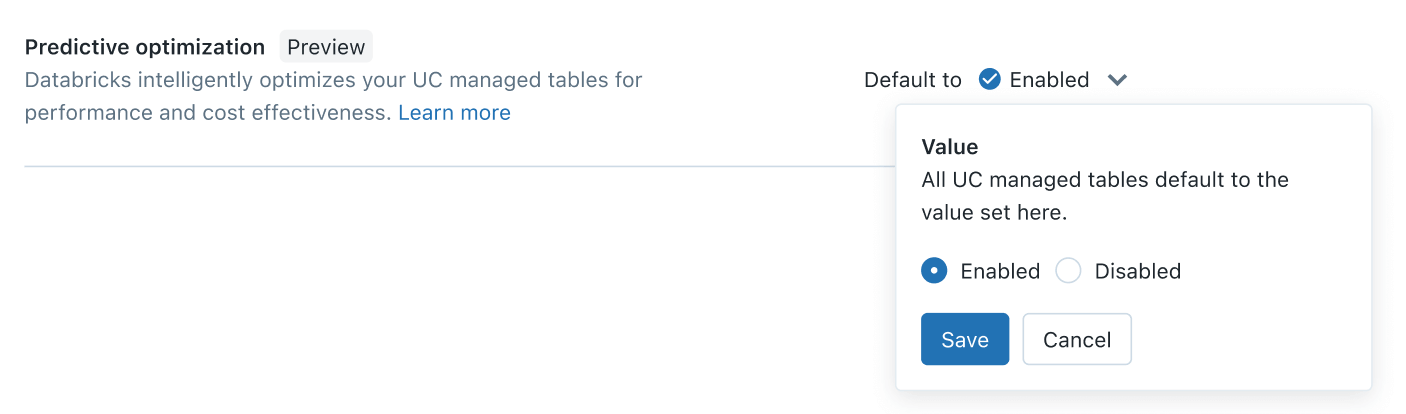

Starting today, Predictive Optimization is available in Public Preview. Enabling it should take less than five minutes. As an account admin, simply go to the account console > settings > feature enablement tab, and toggle on the Predictive Optimization setting:

In just a click, you’ll get the power of AI-optimized data layouts across your Unity Catalog managed tables, making your data faster and more cost-effective. See the documentation for more information.

And we are just getting started here. In the coming months, we will continue to add more optimizations to the capability. Stay tuned for much more to come.

2x query speed-up

2x query speed-up