Introducing the Well-Architected Data Lakehouse from Databricks

To provide customers with a framework for planning and implementing their data lakehouse, we are pleased to announce that we have recently published the well-architected framework for the Databricks Lakehouse Platform for each of the three clouds:

- Data Lakehouse architecture for Databricks on AWS

- Data Lakehouse architecture for Azure Databricks

- Data Lakehouse architecture for Databricks on GCP

In general, well-architected frameworks for cloud services are collections of best practices, design principles, and architectural guidelines that help organizations design, build, and operate reliable, secure, efficient, and cost-effective systems in the cloud. Public cloud providers such as AWS, Microsoft, and Google have such framework documentation. These frameworks are all built around five pillars that provide the underlying structure for the notion of "what is good" for cloud services:

- Operational excellence

- Security

- Reliability

- Performance efficiency

- Cost optimization

These frameworks are used by different teams in the enterprise. For example:

- Enterprise architects seek to align business and IT strategies to ensure that their organizations can most effectively achieve current and future business objectives. The goal is to create a flexible business environment that supports day-to-day operations as well as innovation and experimentation with new technologies and processes. Based on a map of IT assets and business processes, they focus on dimensions such as system consolidation and rationalization, total cost of ownership (TCO), and the evaluation and promotion of new technologies and capabilities as enablers of the future business. Each pillar of a well-architected framework provides enterprise architects with a set of principles that they can evaluate and apply specifically to their organization's needs.

- Cloud architects are responsible for designing and implementing an organization's cloud computing architecture. Their primary role is to plan, design, and implement all cloud environments in the organization. They regularly evaluate cloud applications and infrastructure and ensure that the architecture works across multiple areas of IT and business. They use the principles and best practices of the well-architected frameworks to design and implement new services effectively and efficiently, and to evaluate their existing cloud architecture to identify gaps and sub-optimal implementations. They then apply the best practices to improve the overall implementation.

- Developers implement the services and workloads in the cloud. They use well-architected frameworks to ensure that their code is built according to best practices, such as security, quality, or the best performance for the cost.

- Cloud operations teams maintain the architecture and monitor its performance against business objectives once the architecture is established. The principles and best practices of the Operational Excellence and Cost Optimization pillars, for example, help them optimize deployment and operational processes to keep the system running reliably and efficiently.

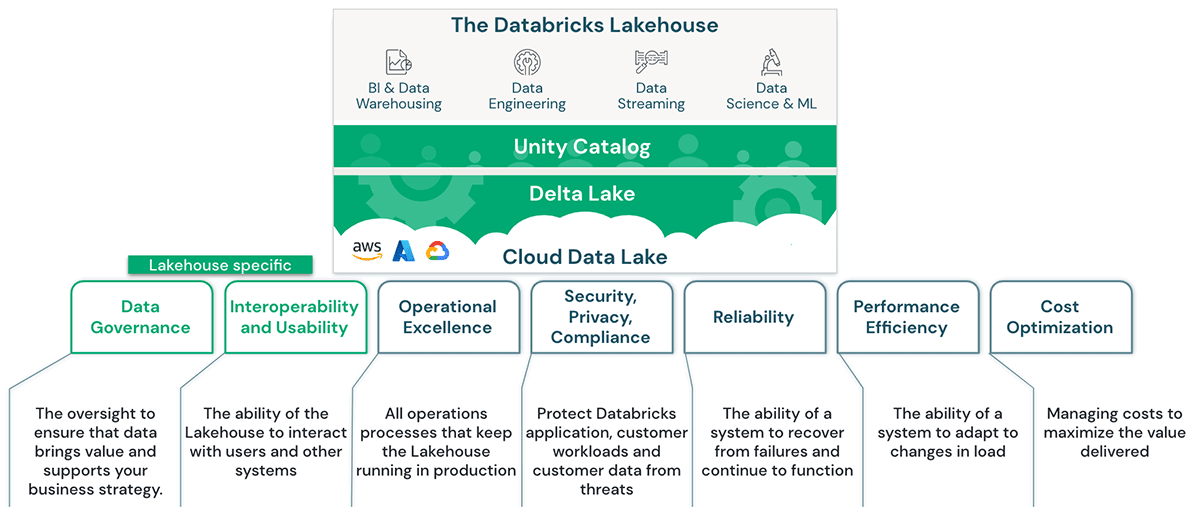

The Databricks Lakehouse is a unified data and AI cloud service, and the principles and best practices of the well-architected frameworks of the public cloud providers apply to it. However, as one of the core systems in the data domain covering ETL, ML & AI, data warehousing and BI, specific principles and best practices need to be applied to design, implement and operate the Lakehouse in a well-architected manner.

To help our customers' various teams implement an effective and efficient data lakehouse, we have created the Well-Architected Lakehouse, which is available in our public documentation.

The Well-Architected Lakehouse consists of seven pillars that describe different areas of concern for the implementation of a data lakehouse in the cloud. To the five pillars adopted from the existing frameworks, we have added two that are specific to the lakehouse:

- Data governance: The oversight to ensure that data brings value and supports your business strategy.

- Interoperability and usability: The ability of the lakehouse to interact with users and other systems.

Data governance: One of the fundamental aspects of a lakehouse is unified data governance. The lakehouse unifies data warehousing and AI use cases on a single platform, which simplifies the modern data stack by eliminating the data silos that traditionally separate and complicate data engineering, analytics, BI, data science, and machine learning.

Interoperability and usability: An important goal of the lakehouse is to provide great usability for all personas working with it and to interact with a wide ecosystem of external systems. As an integrated platform, the Databricks Lakehouse provides a consistent user experience across all workloads. This reduces training and onboarding costs and improves cross-functional collaboration. In an interconnected world with cross-enterprise business processes, diverse systems need to work together as seamlessly as possible. The level of interoperability is critical, and the flow of data between internal and external partner systems must be not only secure but also increasingly up to date.

Summary:

We have recently published the Databricks Well-Architected Lakehouse guidance for each of the three clouds:

- Data Lakehouse architecture for Databricks on AWS

- Data Lakehouse architecture for Azure Databricks

- Data Lakehouse architecture for Databricks on GCP

The Well-Architected Lakehouse consists of seven pillars that describe different areas of concern when implementing a data lakehouse in the cloud: Data Governance, Interoperability & Usability, Operational Excellence, Security, Reliability, Performance Efficiency, and Cost Optimization. The principles and best practices in each of these areas are specific to the scalable and open Databricks platform for ML, AI and BI. They help the various teams in our customers' organizations design, build, and operate a highly efficient and effective lakehouse while properly managing TCO.

For details, check out the Well-Architected Lakehouse documentation for your cloud (AWS, Azure, or GCP) and implement your lakehouse according to its principles and best practices.