Blog

Featured Story![2025 Gartner Magic Quadrant for Cloud DBMS]()

News

November 21, 2025/5 min read

Databricks Named a Leader in 2025 Gartner® Magic Quadrant™ for Cloud Database Management Systems

What's new![A Year of Interoperability: How Enterprises Are Scaling Governance with Unity Catalog]()

Product

November 26, 2025/5 min read

A Year of Interoperability: How Enterprises Are Scaling Governance with Unity Catalog

Recent posts

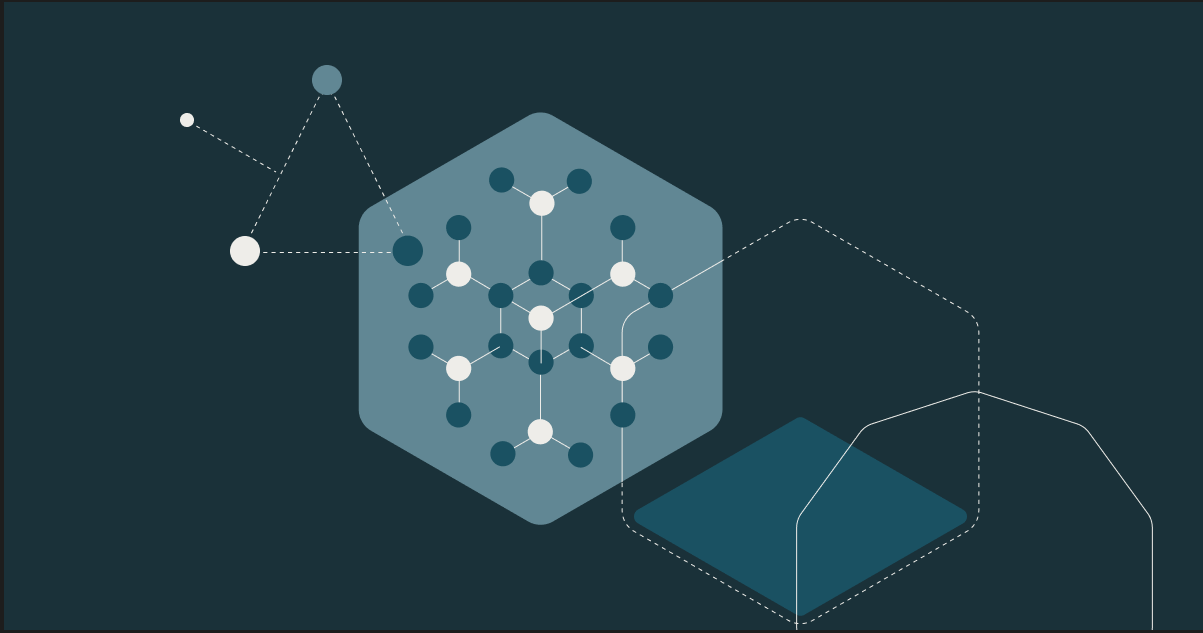

Product

December 15, 2025/12 min read

Databricks Lakehouse Data Modeling: Myths, Truths, and Best Practices

Product

December 11, 2025/5 min read

OpenAI GPT-5.2 and Responses API on Databricks: Build Trusted, Data-Aware Agentic Systems

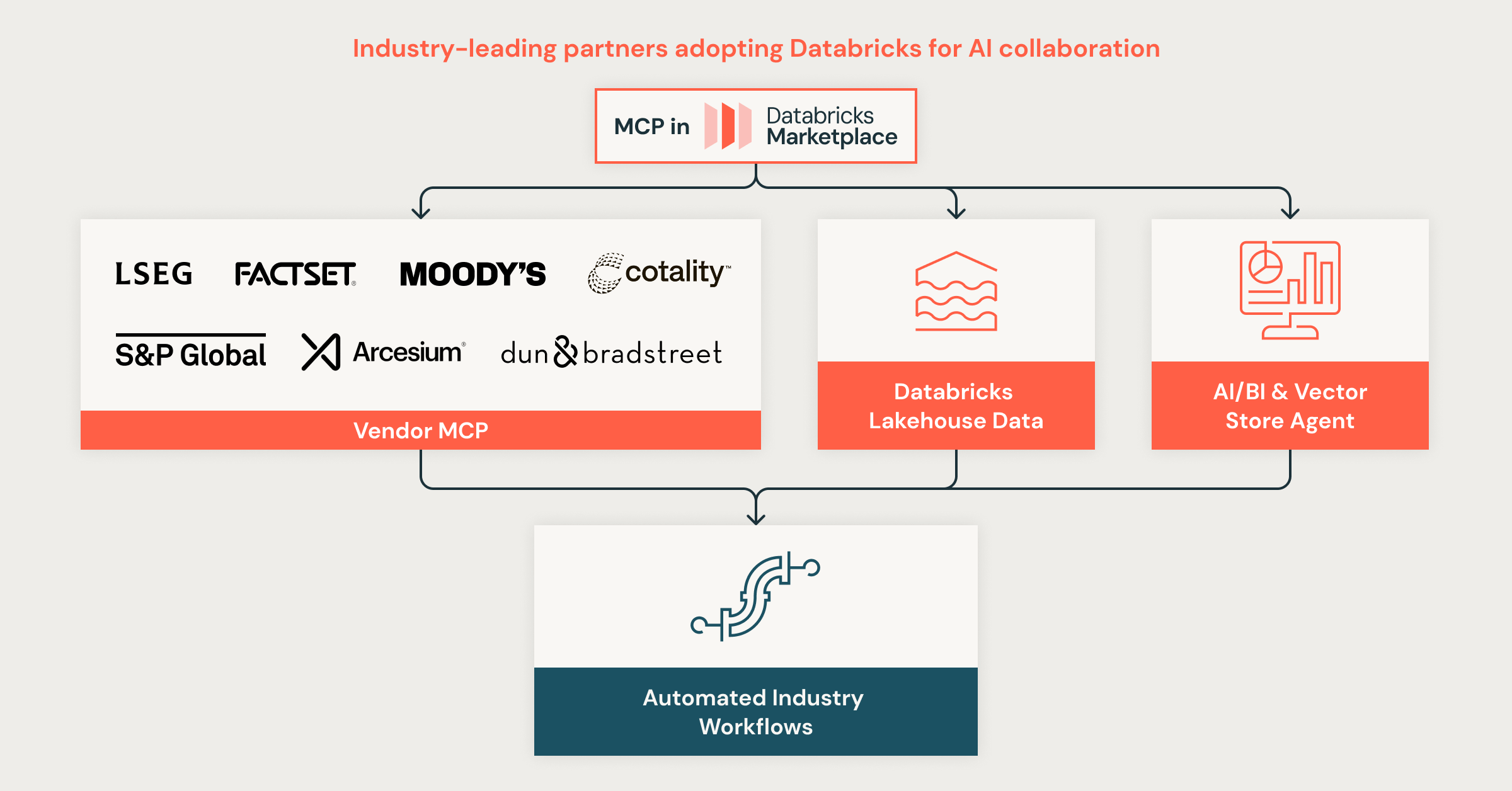

Partners

December 9, 2025/20 min read

Introducing Databricks GenAI Partner Accelerators for Data Engineering & Migration

Mosaic Research

December 9, 2025/12 min read

Introducing OfficeQA: A Benchmark for End-to-End Grounded Reasoning

Solutions

December 8, 2025/6 min read

Powering Growth: How Data and AI Are Rewiring Productivity in Banking and Payments

Technology

December 5, 2025/14 min read

Expensive Delta Lake S3 Storage Mistakes (And How to Fix Them)

Energy

December 4, 2025/4 min read

BP’s Geospatial AI Engine: Transforming Safety and Operations with Databricks

Data Leader

December 4, 2025/2 min read

Building the AI-Ready Enterprise: Leaders Share Real-World AI Solutions and Practices

Announcements

December 2, 2025/6 min read

Completing the Lakehouse Vision: Open Storage, Open Access, Unified Governance

Healthcare & Life Sciences

December 1, 2025/3 min read

Databricks and NVIDIA: Powering the Next Generation of Industry AI

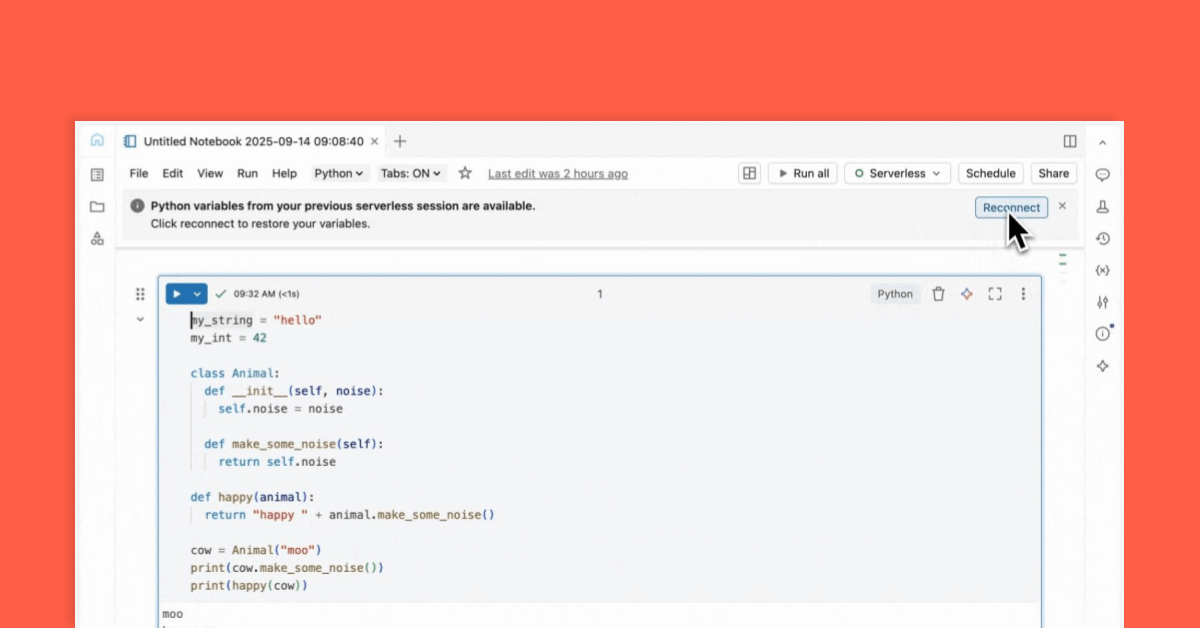

Product

December 1, 2025/6 min read

From Apache Airflow® to Lakeflow Jobs: How the Industry is Shifting from Workflow-First to Data-First Orchestration

Insights

December 1, 2025/7 min read

Using AI for Data Analysis: Tools and Techniques You Need to Know

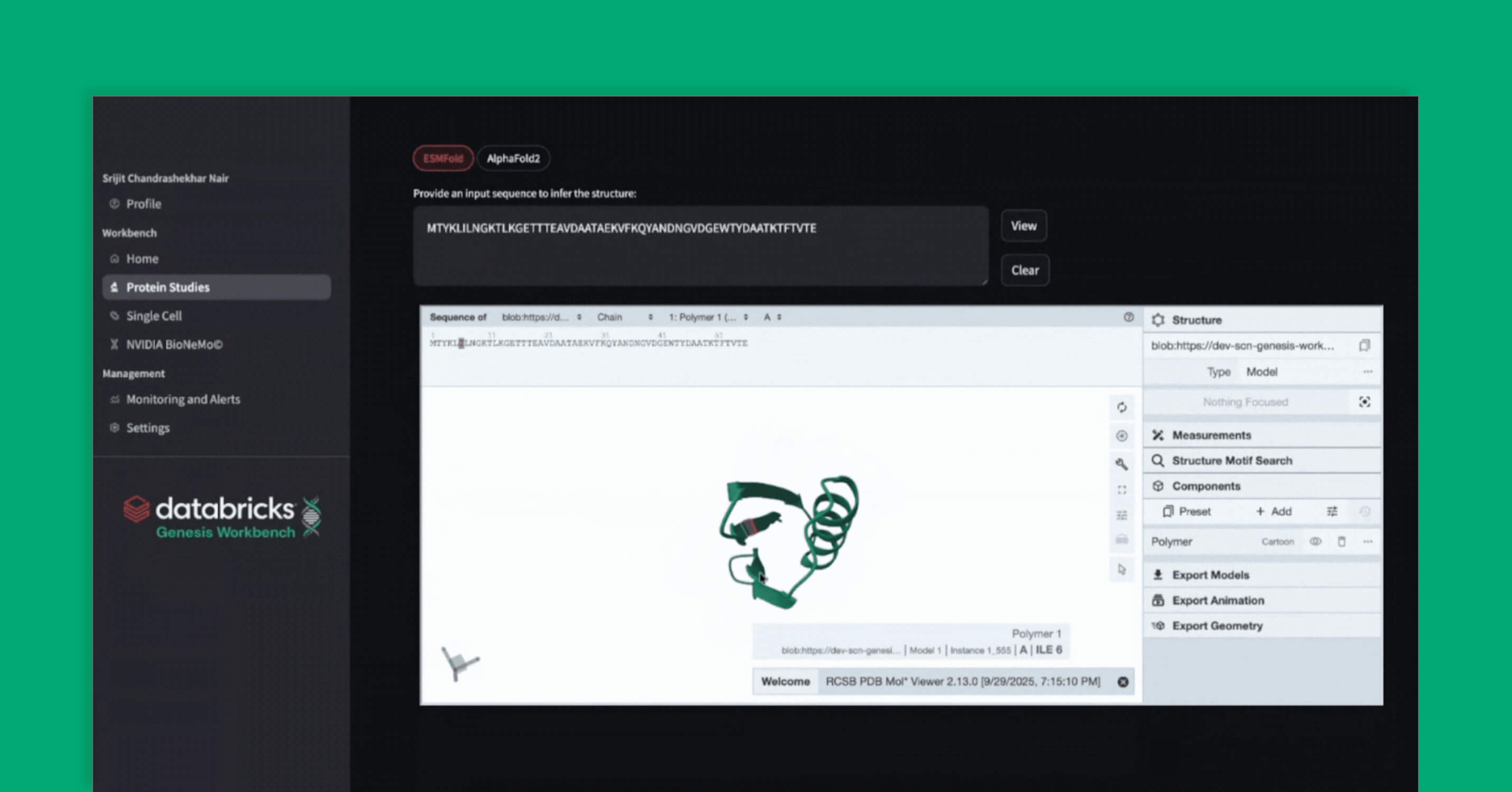

Mosaic Research

December 1, 2025/14 min read

Genesis Workbench: A Blueprint for Life Sciences Applications on Databricks

Data Engineering

December 1, 2025/11 min read

From Events to Insights: Complex State Processing with Schema Evolution in transformWithState

Data Intelligence for All

Never miss a Databricks post

Subscribe to our blog and get the latest posts delivered to your inbox