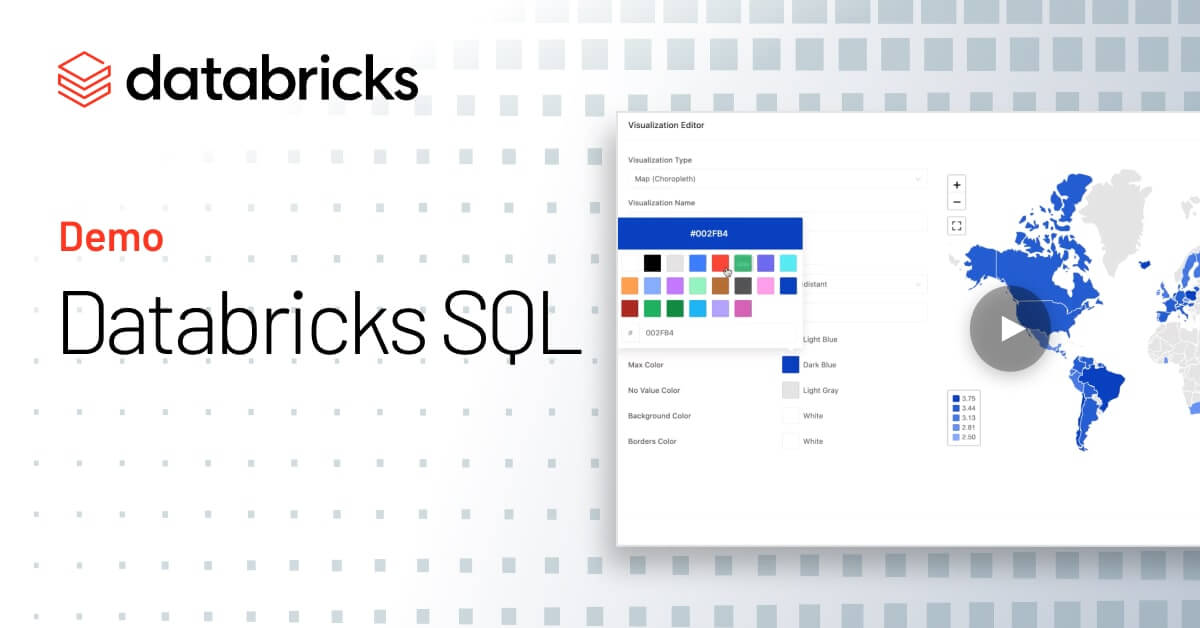

Databricks Data Warehousing Admin Demo

Databricks SQL is now in Public Preview and enabled for all users in new workspaces.

Explore Databricks SQL

