Announcing the Databricks AI Security Framework 2.0

Proven guidance to mitigate AI model risk at scale

Published: February 12, 2025

by Kelly Albano, Omar Khawaja and Arun Pamulapati

Summary

- The second edition of the Databricks AI Security Framework is designed to enable secure and impactful AI deployments.

- In the DASF 2.0, we’ve identified 62 technical security risks and mapped them to recommended controls.

- In response to user feedback, we’ve released the DASF compendium document, designed to help users operationalize the DASF.

We are excited to announce the second edition of the Databricks AI Security Framework (DASF 2.0: download now)! Organizations racing to harness AI’s potential need both the ‘gas’ of innovation and the ‘brakes’ of governance and risk management. The DASF bridges this gap, enabling secure and impactful AI deployments for your organization by serving as a comprehensive guide on AI risk management.

This blog will provide an overview of the DASF, explore key insights gained since the original version was released, introduce new resources to deepen your understanding of AI security and provide updates on our industry contributors.

What is the Databricks AI Security Framework, and what’s new in version 2.0?

The DASF is a framework and whitepaper for managing AI security and governance risks. It enumerates the 12 canonical AI system components, their respective risks, and actionable controls to mitigate each risk. Created by the Databricks Security and ML teams in partnership with industry experts, it bridges the gap between business, data, governance, and security teams with practical tools and actionable strategies to demystify AI, foster collaboration, and ensure effective implementation.

Unlike other frameworks, the DASF 2.0 builds on existing standards to provide an end-to-end risk profile for AI deployments. It delivers defense-in-depth controls that simplify AI risk management, are practical for your organization to operationalize, and can be applied to your chosen data and AI platform.

In the DASF 2.0, we’ve identified 62 technical security risks and mapped them to 64 recommended controls for managing the risk of AI models. We’ve also expanded mappings to leading industry AI risk frameworks and standards, including MITRE ATLAS, OWASP LLM & ML Top 10, NIST 800-53, NIST CSF, HITRUST, ENISA’s Securing ML Algorithms, ISO 42001, ISO 27001:2022, and the EU AI Act.

Operationalizing the DASF - check out the new compendium and the companion instructional video!

We’ve received valuable feedback as we’ve shared the DASF at industry events, workshops, and customer meetings. Many of you have asked for more resources to make it easier to navigate the DASF, operationalize it, and map your controls effectively.

In response, we’re excited to announce the release of the DASF compendium document (Google sheet, Excel). This resource is designed to help operationalize the DASF by organizing and applying its risks, threats, controls, and mappings to industry-recognized standards from organizations such as MITRE, OWASP, NIST, ISO, HITRUST, and more. We’ve also created a companion instructional video that provides a guided walkthrough of the DASF and its compendium.

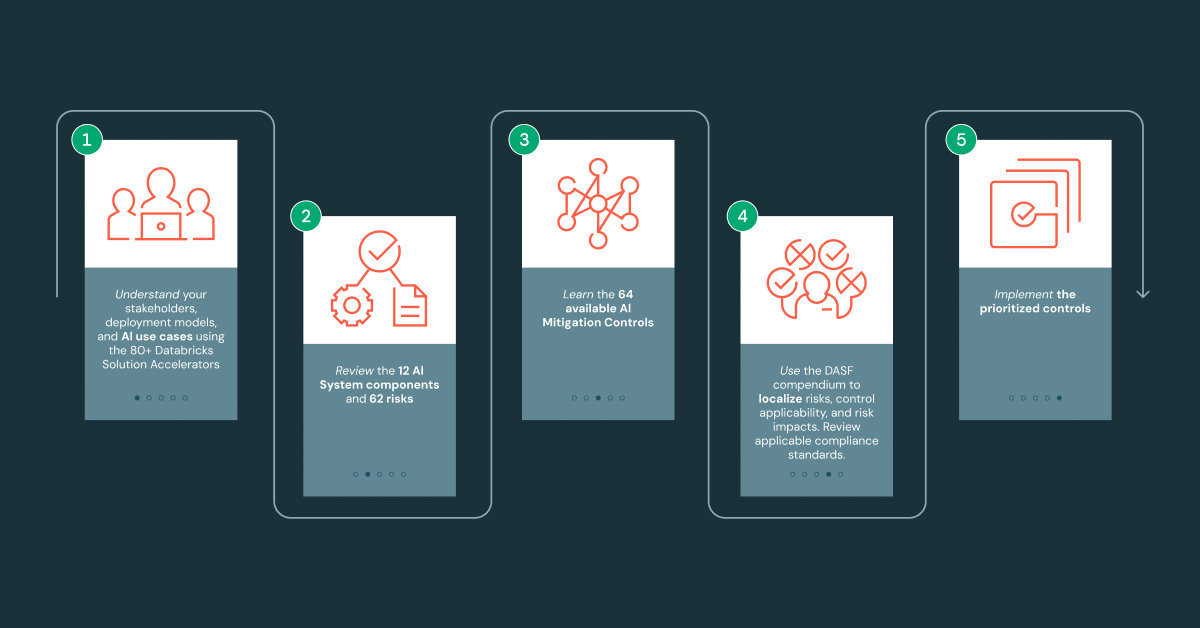

Our goal with these updates is to make the DASF easier to adopt, empowering organizations to implement AI systems securely and confidently. If you’re eager to dive in, our team recommends the following approach:

- Understand your stakeholders, deployment models, and AI use cases: Start with a business use case, leveraging the DASF whitepaper to identify the best-fit AI deployment model. Choose from 80+ Databricks Solution Accelerators to guide your journey. Deployment models include Predictive ML Models, Foundation Model APIs, Fine-tuned and Pre-trained LLMs, RAG, AI Agents with LLMs, and External Models. Ensure clarity on AI development within your organization, including use cases, datasets, compliance needs, processes, applications, and accountable stakeholders.

- Review the 12 AI system components and 62 risks: Understand the 12 AI system components, the traditional cybersecurity and novel AI security risks associated with each component, and the responsible stakeholders (e.g., data engineers, scientists, governance officers, and security teams). Use the DASF to foster collaboration across these groups throughout the AI lifecycle.

- Review the 64 available mitigation controls: Each risk is mapped to prioritized mitigation controls, beginning with perimeter and data security. These risks and controls are further aligned with 10 industry standards, providing additional detail and clarity.

- Use the DASF compendium to localize risks, control applicability, and risk impacts: Start by using the “DASF Risk Applicability” tab to identify risks relevant to your use case by selecting one or more AI deployment models. Next, review the associated risk impacts, compliance requirements, and mitigation controls. Finally, document key details for your use case, including the AI use case description, datasets, stakeholders, compliance considerations, and applications.

- Implement the prioritized controls: Use the “DASF Control Applicability” tab of the compendium to review the applicable DASF controls and implement the mitigation controls on your data platform across the 12 AI system components. If you are using Databricks, we have included links with detailed instructions on how to deploy each control on our platform.
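The compendium workflow in the last two steps (select deployment models, find the applicable risks, then collect their mapped controls) can be sketched in a few lines of Python. This is only an illustration of the lookup logic, not the compendium's actual schema: the risk IDs, control IDs, and component labels below are made-up placeholders, and only the deployment model names are taken from the list above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    """One row of a compendium-style risk table (hypothetical schema)."""
    risk_id: str
    component: str
    deployment_models: frozenset
    control_ids: tuple


# Placeholder rows for illustration only; these are NOT real DASF entries.
RISKS = [
    Risk("RISK-A", "data", frozenset({"Predictive ML Models", "RAG"}),
         ("CTRL-1", "CTRL-2")),
    Risk("RISK-B", "serving", frozenset({"RAG", "AI Agents with LLMs"}),
         ("CTRL-2", "CTRL-3")),
    Risk("RISK-C", "training", frozenset({"Predictive ML Models"}),
         ("CTRL-4",)),
]


def applicable_risks(risks, selected_models):
    """Return risks that apply to at least one selected deployment model."""
    selected = set(selected_models)
    return [r for r in risks if r.deployment_models & selected]


def controls_for(risks):
    """Map each control ID to the risk IDs it mitigates, in first-seen order."""
    controls = {}
    for risk in risks:
        for control_id in risk.control_ids:
            controls.setdefault(control_id, []).append(risk.risk_id)
    return controls


# Example: a team scoping a RAG use case.
rag_risks = applicable_risks(RISKS, ["RAG"])
rag_controls = controls_for(rag_risks)

for control_id, risk_ids in rag_controls.items():
    print(f"{control_id} mitigates {', '.join(risk_ids)}")
```

Grouping by control rather than by risk mirrors the hand-off from the “DASF Risk Applicability” tab to the “DASF Control Applicability” tab: a control that mitigates several applicable risks shows up once, with every risk it covers listed beside it.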

Implement the DASF in your organization with new AI upskilling resources from Databricks

According to a recent Economist Impact study, surveyed data and AI leaders have identified upskilling and fostering a growth mindset as key priorities for driving AI adoption in 2025. As part of the DASF 2.0 launch, we have resources to help you understand AI and ML concepts and apply AI security best practices to your organization.

- Databricks Academy Training: We recommend taking the new AI Security Fundamentals course, which is now available on the Databricks Academy. This 1-hour course is a great primer on the AI security topics highlighted in the DASF, and we suggest taking it before diving into the whitepaper. You’ll also receive an accreditation for your LinkedIn profile upon completion. If you are new to AI and ML concepts, start with our Generative AI Fundamentals course.

- How-to videos: We have recorded DASF overview and how-to videos for quick consumption. You can find these videos on our Security Best Practices YouTube channel.

- In-person or virtual workshop: Our team offers an AI Risk Workshop as a live walkthrough of the concepts outlined in the DASF, focusing on overcoming obstacles to operationalizing AI risk management. This half-day event targets Director+ leaders in governance, data, privacy, legal, IT and security functions.

- Deployment support: The Security Analysis Tool (SAT) monitors adherence to security best practices in Databricks workspaces on an ongoing basis. We recently upgraded the SAT to streamline setup and enhance checks, aligning them with the DASF for improved coverage of AI security risks.

- DASF AI assistant: Databricks customers can configure the DASF AI assistant right in their own workspace with no prior Databricks skills, ask questions about DASF content in plain language, and get answers.

Building a community with AI industry groups, customers, and partners

Ensuring that the DASF evolves in step with the current AI regulatory environment and emerging threat landscape is a top priority. Since the launch of 1.0, we have formed an AI working group of industry colleagues, customers, and partners to stay closely aligned with these developments. We want to thank our colleagues in the working group and our pre-reviewers like Complyleft, The FAIR Institute, Ethriva Inc, Arhasi AI, Carnegie Mellon University, and Rakesh Patil from JPMC. You can find the complete list of contributors in the acknowledgments section of the DASF. If you want to participate in the DASF AI Working Group, please contact our team at [email protected].

Here’s what some of our top advocates have to say:

"AI is revolutionizing healthcare delivery through innovations like the CLEVER GenAI pipeline, which processes over 1.5 million clinical notes daily to classify key social determinants and impacting veteran care. This pipeline is built with a strong security foundation, incorporating NIST 800-53 controls and leveraging the Databricks AI Security Framework to ensure compliance and mitigate risks. Looking ahead, we are exploring ways to expand these capabilities through Infrastructure as Code and secure containerization strategies, enabling agents to be dynamically deployed and scaled from repositories while maintaining rigorous security standards." - Joseph Raetano, Artificial Intelligence Lead, Summit Data Analytics & AI Platform, U.S. Department of Veterans Affairs

“DASF is the essential tool in transforming AI risk quantification into an operational reality. With the FAIR-AI Risk approach now in its second year, DASF 2.0 enables CISOs to bridge the gap between cybersecurity and business strategy—speaking a common language grounded in measurable financial impact.” - Jacqueline Lebo, Founder AI Workgroup, The FAIR Institute and Risk Advisory Manager, Safe Security

“As AI continues to transform industries, securing these systems from sophisticated and unique cybersecurity attacks is more critical than ever. The Databricks AI Security Framework is a great asset for companies to lead from the front on both innovation and security. With the DASF, companies are equipped to better understand AI risks, and find the tools and resources to mitigate those risks as they continue to innovate.” - Ian Swanson, CEO, Protect AI

“With the Databricks AI Security Framework, we’re able to mitigate AI risks thoughtfully and transparently, which is invaluable for building board and employee trust. It’s a game changer that allows us to bring AI into the business and be among the 15% of organizations getting AI workloads to production safely and with confidence.” - Coastal Community Bank

"Within the context of data and AI, conversations around security are few. The Databricks AI Security Framework addresses the often neglected side of AI and ML work, serving both as a best-in-class guide for not only understanding AI security risks, but also how to mitigate them." - Josue A. Bogran, Architect at Kythera Labs & Advisor to SunnyData.ai

“We have used the Databricks AI Security Framework to help enhance our organization's security posture for managing ML and AI security risks. With the Databricks AI Security Framework, we are now more confident in exploring possibilities with AI and data analytics while ensuring we have the proper data governance and security measures in place.” - Muhammad Shami, Vice President, Jackson National Life Insurance Company

Download the Databricks AI Security Framework 2.0 today!

The Databricks AI Security Framework 2.0 and its compendium (Google sheet, Excel) are now available for download. To learn about upcoming AI Risk workshops or to request a dedicated in-person or virtual workshop for your organization, contact us at [email protected] or your account team. We also have additional thought leadership content coming soon to provide further insights into managing AI governance. For more insights on how to manage AI security risks, visit the Databricks Security and Trust Center.
