Data Lakehouse

What is a Data Lakehouse?

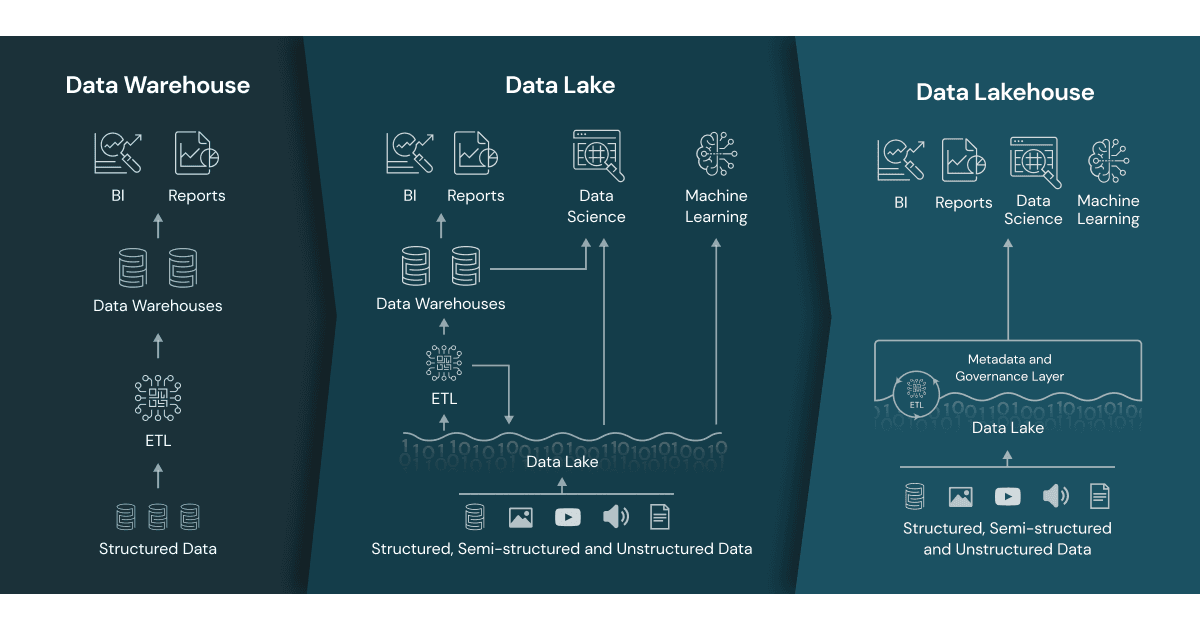

A data lakehouse is a new, open data management architecture that combines the flexibility, cost-efficiency, and scale of data lakes with the data management and ACID transactions of data warehouses, enabling business intelligence (BI) and machine learning (ML) on all data.

Data Lakehouse: Simplicity, Flexibility, and Low Cost

Data lakehouses are enabled by a new, open system design: implementing data structures and data management features similar to those in a data warehouse, directly on the kind of low-cost storage used for data lakes. Merging the two into a single system means that data teams can move faster, because they can use data without needing to access multiple systems. Data lakehouses also ensure that teams have the most complete and up-to-date data available for data science, machine learning, and business analytics projects.

Key Technology Enabling the Data Lakehouse

There are a few key technology advancements that have enabled the data lakehouse:

- metadata layers for data lakes

- new query engine designs providing high-performance SQL execution on data lakes

- optimized access for data science and machine learning tools

Metadata layers, like the open source Delta Lake, sit on top of open file formats (e.g. Parquet files) and track which files are part of different table versions to offer rich management features like ACID-compliant transactions. The metadata layers enable other features common in data lakehouses, like support for streaming I/O (eliminating the need for message buses like Kafka), time travel to old table versions, schema enforcement and evolution, as well as data validation.

Performance is key for data lakehouses to become the predominant data architecture used by businesses, since it is one of the main reasons that data warehouses exist in the two-tier architecture. While data lakes using low-cost object stores have been slow to access in the past, new query engine designs enable high-performance SQL analysis. These optimizations include caching hot data in RAM/SSDs (possibly transcoded into more efficient formats), data layout optimizations to cluster co-accessed data, auxiliary data structures like statistics and indexes, and vectorized execution on modern CPUs. Combining these technologies enables data lakehouses to achieve performance on large datasets that rivals popular data warehouses, based on TPC-DS benchmarks.

The open data formats used by data lakehouses (like Parquet) make it very easy for data scientists and machine learning engineers to access the data in the lakehouse. They can use tools popular in the DS/ML ecosystem, like pandas, TensorFlow, PyTorch and others, that can already access sources like Parquet and ORC. Spark DataFrames even provide declarative interfaces for these open formats that enable further I/O optimization. The other features of a data lakehouse, like audit history and time travel, also help with improving reproducibility in machine learning.
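To make the metadata-layer idea above more concrete, here is a minimal Python sketch of a table log that records which files belong to each table version, supports time travel by replaying the log, and uses per-file min/max statistics to skip files a query cannot need. It is a toy model under stated assumptions, not Delta Lake's actual log format or API: the names `ToyTableLog`, `DataFile`, and `Commit`, the file paths, and the single integer statistics column are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DataFile:
    """A data file (e.g. one Parquet file) with min/max stats for one column."""
    path: str
    min_value: int
    max_value: int


@dataclass
class Commit:
    """One atomic log entry: the files it adds and the paths it removes."""
    added: list
    removed: list = field(default_factory=list)


class ToyTableLog:
    """Hypothetical append-only log; version N is the replay of commits 0..N."""

    def __init__(self):
        self._commits = []

    def commit(self, added, removed=()):
        """Append one commit and return the new table version number."""
        self._commits.append(Commit(list(added), list(removed)))
        return len(self._commits) - 1

    def files_at(self, version=None):
        """Replay the log up to `version` (default: latest) to list live files."""
        latest = len(self._commits) - 1
        if version is None:
            version = latest
        if not 0 <= version <= latest:
            raise ValueError(f"unknown table version: {version}")
        live = {}
        for entry in self._commits[: version + 1]:
            for path in entry.removed:
                live.pop(path, None)
            for data_file in entry.added:
                live[data_file.path] = data_file
        return sorted(live.values(), key=lambda f: f.path)

    def files_overlapping(self, low, high, version=None):
        """Data skipping: keep only files whose [min, max] overlaps [low, high]."""
        return [
            f for f in self.files_at(version)
            if f.max_value >= low and f.min_value <= high
        ]


# Usage: two appends, then a compaction that rewrites the first two files.
log = ToyTableLog()
v0 = log.commit([DataFile("part-0", 1, 100), DataFile("part-1", 101, 200)])
v1 = log.commit([DataFile("part-2", 201, 300)])
v2 = log.commit([DataFile("part-3", 1, 200)], removed=["part-0", "part-1"])

print([f.path for f in log.files_at()])                    # latest version
print([f.path for f in log.files_at(v0)])                  # time travel
print([f.path for f in log.files_overlapping(250, 260)])   # data skipping
```

Because readers only ever see the files listed by a complete commit, a half-written compaction is invisible to them, which is the intuition behind transactional behaviour in this kind of design. A real metadata layer also has to handle concurrent writers, checkpoints, and schema information, all of which this sketch leaves out.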
To learn more about the technology advances underpinning the move to the data lakehouse, see the CIDR paper Lakehouse: A New Generation of Open Platforms that Unify Data Warehousing and Advanced Analytics and another academic paper Delta Lake: High-Performance ACID Table Storage over Cloud Object Stores.

History of Data Architectures

Background on Data Warehouses

Data warehouses have a long history in decision support and business intelligence applications, but they were poorly suited to, or expensive for, handling unstructured data, semi-structured data, and data with high variety, velocity, and volume.

Emergence of Data Lakes

Data lakes then emerged to handle raw data in a variety of formats on cheap storage for data science and machine learning, but they lacked critical features from the world of data warehouses: they do not support transactions, they do not enforce data quality, and their lack of consistency and isolation makes it almost impossible to mix appends and reads, or batch and streaming jobs.

Common Two-Tier Data Architecture

Data teams consequently stitch these systems together to enable BI and ML across the data in both of them, resulting in duplicate data, extra infrastructure cost, security challenges, and significant operational costs. In a two-tier data architecture, data is ETL'd from the operational databases into a data lake. This lake stores the data from the entire enterprise in low-cost object storage, in a format compatible with common machine learning tools, but the data is often not well organized or maintained. Next, a small segment of the critical business data is ETL'd once again to be loaded into the data warehouse for business intelligence and data analytics. Because of the multiple ETL steps, this two-tier architecture requires regular maintenance and often results in data staleness, a significant concern of data analysts and data scientists alike according to recent surveys from Kaggle and Fivetran.

Additional Resources

- What is a Lakehouse? - Blog

- Lakehouse Architecture: From Vision to Reality

- Introduction to Lakehouse and SQL Analytics

- Lakehouse: A New Generation of Open Platforms that Unify Data Warehousing and Advanced Analytics

- Delta Lake: The Foundation of Your Lakehouse

- The Databricks Lakehouse Platform

- Data Brew Vidcast: Season 1 on Data Lakehouses

- The Rise of the Lakehouse Paradigm

- The Data Lakehouse Platform for Dummies