Databricks Launches a Comprehensive Guide for Its Product and Apache Spark

We are proud to announce the launch of a new online guide for Databricks and Apache Spark at docs.databricks.com. Our goal is to create a definitive resource for Databricks users and the most comprehensive set of Apache Spark documentation on the web. To that end, we've dedicated a large portion of the guide to Spark tutorials and How-Tos, in addition to Databricks product documentation.

The content of the guide falls into three broad categories:

- How to use Databricks

- How to administer Databricks

- How to learn and use Apache Spark, including references, example code, and training material not available anywhere else

In this blog, I will provide an overview of this new resource and highlight a few key sections.

Documentation on How to Use Databricks

We’ve consolidated product documentation into simple tutorials that walk you through everything you need to write and run Spark code in Databricks. This includes how to spin up a cluster, analyze data in notebooks, run production jobs with notebooks or JARs, use the Databricks APIs, and much more. There are detailed walkthroughs of every aspect of the Databricks UI as well as introductory tutorial videos.

In particular, the FAQ and best practices section has many tips to help new users get the most out of Databricks, such as:

- Using an IDE with Databricks

- Integrating Databricks with Tableau

- Using XGBoost and Spark

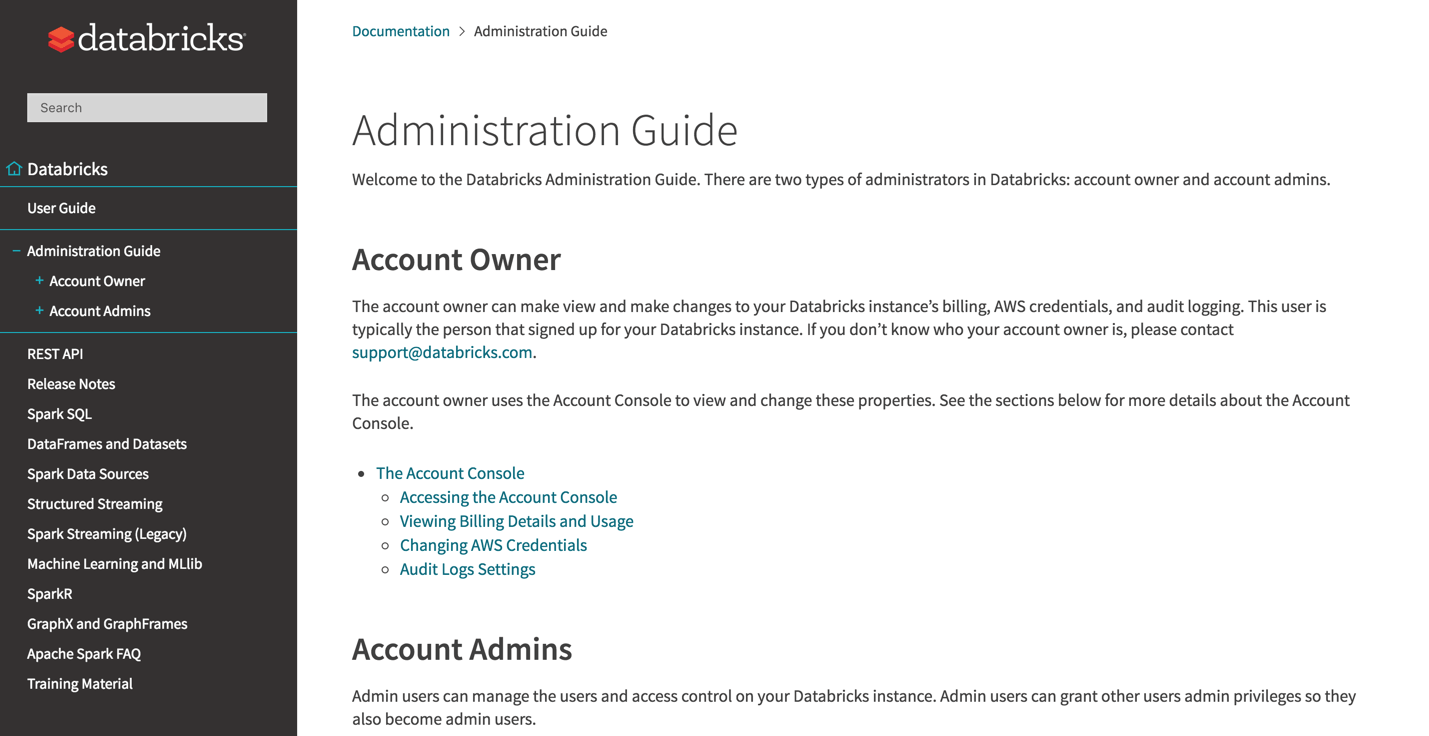

Documentation on How to Administer Databricks

These documents walk through how to manage the configuration, administration, and other housekeeping aspects of a Databricks account. For example, account owners can learn how to change AWS credentials and view billing details, while account administrators can learn how to add new Databricks users, set up access control, and configure SAML 2.0 compatible identity service providers.

Guide for Apache Spark Developers

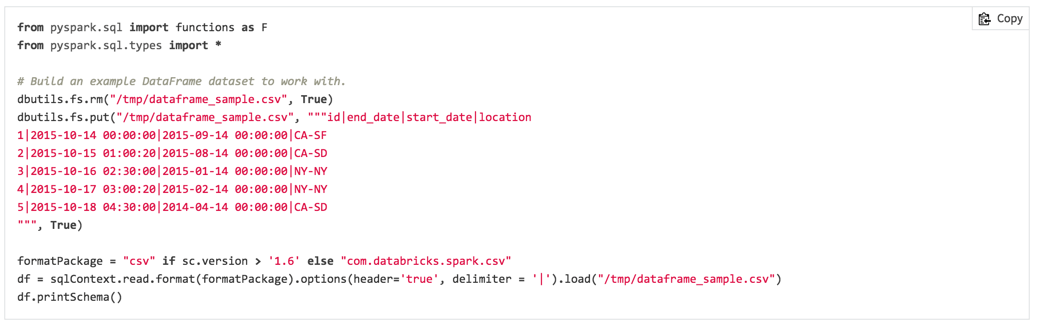

While improving the documentation for the Databricks product was essential, we also wanted to give back to the Apache Spark community. We have created Apache Spark’s only Spark SQL Language Manual, simple examples of Apache Spark UDFs in Scala and Python, a SparkR Function Reference, and a set of Structured Streaming examples.

The guide includes many practical code examples, each with a simple “copy” button so you can easily drop them into your own environment and test them out.
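To give a flavor of the kind of Python UDF example mentioned above, here is a minimal sketch. It is an illustration written under assumptions, not an excerpt from the guide: the names `squared` and `add_squared_column` are hypothetical, and the Spark part assumes PySpark is installed and that you pass in a DataFrame with a numeric column.

```python
from typing import Optional


def squared(x: Optional[int]) -> Optional[int]:
    """Plain Python logic; returns None for null input so Spark nulls pass through."""
    return None if x is None else x * x


def add_squared_column(df, column: str = "id"):
    """Return df with an extra '<column>_squared' column computed by a Spark UDF.

    Hypothetical helper for illustration. pyspark is imported inside the
    function so that `squared` can be tried out without a Spark installation.
    """
    from pyspark.sql.functions import col, udf
    from pyspark.sql.types import LongType

    # Wrap the plain Python function as a UDF that returns a 64-bit integer.
    squared_udf = udf(squared, LongType())
    return df.withColumn(f"{column}_squared", squared_udf(col(column)))
```

In a notebook where a `SparkSession` is available as `spark`, something like `add_squared_column(spark.range(1, 4)).show()` should display the `id` column alongside its squares. Keeping the logic in a plain function makes it easy to check before handing it to Spark.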

Accessing the Guide in Databricks

To help users find the code they need, we have also revamped the in-product search so that the guide's code examples are easy to locate and reference. For example, finding an example for a Python Spark UDF is just a couple of keystrokes away!

https://www.youtube.com/watch?v=nSRX_9SbEBM

What’s Next

This release has been a couple of months in the making and we are just getting started. We will be constantly adding new content, both answers to the most common questions and deep dives into more sophisticated use cases. Be sure to browse the docs, bookmark them, and share them with friends because this resource will only continue to grow!
