Databricks Runtime 3.0 Beta Delivers Cloud Optimized Apache Spark

A major value Databricks provides is the automatic provisioning, configuration, and tuning of the clusters of machines that process data. Running on these machines are the Databricks Runtime artifacts, which include Apache Spark and additional software such as Scala, Python, DBIO, and DBES. For customers, these artifacts remove the burden of manual scaling, tighten security, boost I/O performance, and deliver rapid releases.

In the past, the Runtime was co-versioned with upstream Apache Spark. Today, we are changing to a new version scheme that decouples Databricks Runtime versions from Spark versions and allows us to convey major feature updates in Databricks Runtime clearly to our customers. We are also making the beta of Databricks Runtime 3.0, which is the next major release and includes the latest release candidate build of Apache Spark 2.2, available to all customers today. (Note that Spark 2.2 has not yet officially been released by Apache.)

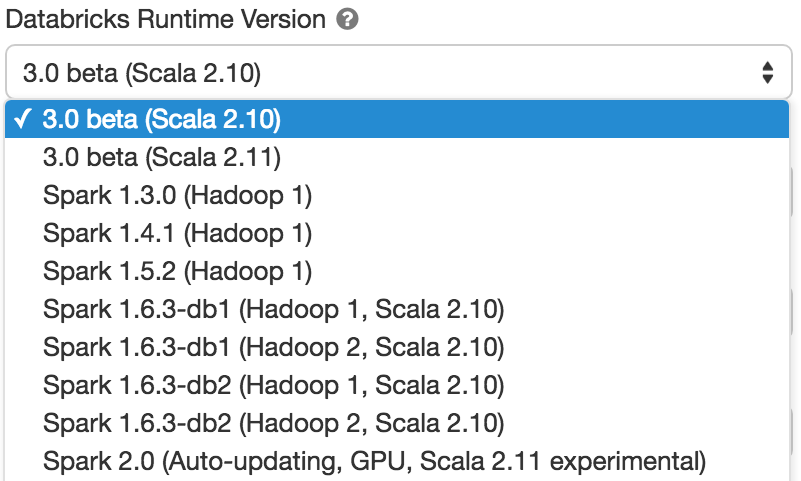

Customers can select this version when creating a new cluster.

In this blog post, we will explain what Databricks Runtime is, the added value it provides, and preview some of the major updates in the upcoming 3.0 release.

Databricks Runtime and Versioning

Databricks Runtime is the set of software artifacts that run on the clusters of machines managed by Databricks. It includes Spark but also adds a number of components and updates that substantially improve the usability, performance, and security of big data analytics. The primary differentiators are:

- Better Performance with DBIO: The Databricks I/O module, or DBIO, leverages the vertically integrated stack to significantly improve the performance of Spark in the cloud.

- Stronger Security with DBES: The Databricks Enterprise Security, or DBES, module adds features such as data encryption at rest and in motion, fine-grained data access control, and auditing to satisfy standard compliance requirements (e.g. HIPAA, SOC2) and the most stringent security requirements expected by large enterprises.

- Significantly lower operational complexity: With features such as auto-scaling of compute resources and local storage, we put Spark on “autopilot” and markedly reduce the operational complexity and management cost.

- Rapid releases and early access to new features: Compared to upstream open source releases, Databricks' SaaS offering enables quicker release cycles, giving our customers the latest features and bug fixes that are not yet available in open source releases.

Existing Databricks customers might recognize that Databricks Runtime was previously called “cluster image” and was co-versioned with Spark. For example, the Spark 2.1 line appeared in the Databricks platform as “2.1.0-db1”, “2.1.0-db2”, “2.1.0-db3”, and “2.1.1-db4”. While Spark is a major component of the runtime, the old co-versioning scheme limits what a version label can convey. The new version scheme decouples Databricks Runtime versions from Spark versions and allows us to convey major feature updates in Databricks Runtime clearly to our customers.

In effect, the Databricks Runtime 3.0 beta includes the release candidate version of Spark 2.2 and all its artifacts, which will be updated automatically as we incorporate bug fixes until the runtime is generally available in June. Next, we will discuss the major features and improvements in this Runtime release.

Performance and DBIO

Databricks Runtime 3.0 includes a number of updates in DBIO that improve performance, data integrity, and security:

- Higher S3 throughput: Improves read and write performance of your Spark jobs.

- More efficient decoding: Boosts CPU efficiency when decoding common formats.

- Data skipping: Allows users to leverage statistics on data files to prune files more effectively in query processing.

- Transactional writes to S3: Provides transactional (atomic) writes to S3, for both appends and new writes, so speculation can be turned on safely.
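To make the data skipping item above more concrete, here is a minimal sketch of the general idea in plain Python. It is not DBIO's implementation: the `FileStats` class, the file names, and the `day` column are hypothetical, and it assumes each file carries min/max statistics for the filtered column.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FileStats:
    """Hypothetical per-file statistics for one column (not a DBIO structure)."""
    path: str
    min_value: int
    max_value: int


def prune_files(files: List[FileStats], lower: int, upper: int) -> List[str]:
    """Keep only files whose [min, max] range overlaps the filter [lower, upper]."""
    return [
        f.path
        for f in files
        if f.max_value >= lower and f.min_value <= upper
    ]


# Hypothetical files with statistics on an integer "day" column.
files = [
    FileStats("part-0000", min_value=1, max_value=10),
    FileStats("part-0001", min_value=11, max_value=20),
    FileStats("part-0002", min_value=21, max_value=30),
]

# A filter such as day BETWEEN 12 AND 22 cannot match anything in part-0000,
# so that file never needs to be read.
selected = prune_files(files, lower=12, upper=22)
print(selected)
```

The benefit grows with how well the data is clustered on the filtered column: the narrower each file's value range, the more files a selective query can skip.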

As part of the DBIO artifact, enhancements to the Amazon Redshift connector include:

- Advanced push down into Redshift: Query fragments that contain limits, samples, and aggregations can now be pushed down into Redshift for execution to reduce data movement from Redshift clusters to Spark.

- Automatic end-to-end encryption with Redshift: Data at rest and in transit can be encrypted automatically.

We will shortly publish a blog post showing the performance improvements observed in the TPC-DS benchmarks. As a teaser, we compared Databricks Runtime 3.0 with Spark running on EMR: Databricks is faster on every single query, with an overall geometric-mean speedup of 5X across the 99 complex TPC-DS queries. More than 10 queries ran over 10X faster.
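For readers unfamiliar with the term, a geometric mean is a common way to summarize per-query speedup ratios, because a few very large ratios do not dominate it the way they would an arithmetic mean. The sketch below shows the calculation in Python. The speedup values are made up for illustration and are not benchmark results, and the post does not specify the exact methodology used.

```python
import math
from typing import Sequence


def geometric_mean(ratios: Sequence[float]) -> float:
    """Geometric mean of positive ratios, computed in log space for stability."""
    if not ratios or any(r <= 0 for r in ratios):
        raise ValueError("ratios must be a non-empty sequence of positive numbers")
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))


# Hypothetical per-query speedups (baseline runtime / new runtime).
speedups = [2.0, 4.0, 8.0]
print(geometric_mean(speedups))  # close to 4.0, since (2 * 4 * 8) ** (1/3) = 4
```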

A customer tested the latest release and found 4x to 60x improvements on her queries over earlier versions of Spark:

"The performance has been phenomenal! I could almost accuse you of being a random number generator, except the results are correct!"

Fine-grained Data Access Control

With a new DBES feature for fine-grained data access control across SQL and the DataFrame APIs, database administrators and data owners can now define access control policies on databases, tables, views, and functions in the catalog to restrict access.

Using standard SQL syntax, access control policies can be defined on views at arbitrary granularity, that is, row level, column level, and aggregate level. This is similar to features available in traditional databases such as Oracle or Microsoft SQL Server, but applies to both SQL and the DataFrame APIs across all supported languages. Even better, it is implemented in a way that does not have any performance penalty and does not require the installation of any additional software.

For example, a policy can grant user rxin permission to access the aggregate salary per department, but not an individual employee’s salary.
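The sketch below illustrates the view half of such a policy. It uses Python's built-in SQLite as a stand-in for the catalog, so it runs anywhere. The `employees` table, its rows, and the `dept_salary` view name are hypothetical. SQLite has no `GRANT` statement, so the grant itself appears only as a comment, and its exact syntax on Databricks is an assumption.

```python
import sqlite3

# SQLite stands in for the catalog purely to show what the view exposes.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE employees (name TEXT, dept TEXT, salary INTEGER);
    INSERT INTO employees VALUES
        ('alice', 'eng', 100),
        ('bob', 'eng', 120),
        ('carol', 'sales', 90);

    -- The view exposes only per-department totals, never individual rows.
    CREATE VIEW dept_salary AS
        SELECT dept, SUM(salary) AS total_salary
        FROM employees
        GROUP BY dept;
    """
)

# On a platform with access control, the owner would then grant rxin read
# access to the view only, and not to the employees table. Assumed syntax:
#   GRANT SELECT ON dept_salary TO rxin
rows = conn.execute(
    "SELECT dept, total_salary FROM dept_salary ORDER BY dept"
).fetchall()
print(rows)
```

Because rxin would hold a privilege on the view but none on the underlying table, queries against `dept_salary` would succeed while direct reads of `employees` would be rejected.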

In the coming weeks, we will publish a series of blogs and relevant documentation with more details on fine-grained data access control.


Structured Streaming

Structured Streaming was introduced to Spark one year ago as a new way to build continuous applications. Not only does it simplify building end-to-end streaming applications by exposing a single API to write streaming queries as you would write batch queries, but it also handles streaming complexities by ensuring exactly-once semantics, doing incremental stateful aggregations, and providing data consistency.

Databricks Runtime 3.0 includes the following new features from Spark 2.2:

- Support for arbitrarily complex stateful processing using [flat]MapGroupsWithState

- Support for reading and writing data in streaming or batch to/from Apache Kafka
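As a rough mental model of the stateful processing item above, the plain-Python sketch below folds successive micro-batches of events into per-key state. It does not use the Spark API. The function name, keys, and values are hypothetical, and in Spark the engine (not user code) persists the state and recovers it after failures.

```python
from typing import Dict, Iterable, List, Tuple


def update_running_count(
    state: Dict[str, int], batch: Iterable[Tuple[str, int]]
) -> List[Tuple[str, int]]:
    """Fold one micro-batch of (key, value) events into per-key state.

    Returns the updated total for every key seen in this batch.
    """
    touched: List[str] = []
    for key, value in batch:
        state[key] = state.get(key, 0) + value
        if key not in touched:
            touched.append(key)
    return [(key, state[key]) for key in touched]


# State survives across batches, which is what makes the computation stateful.
state: Dict[str, int] = {}
first = update_running_count(state, [("user1", 1), ("user2", 5), ("user1", 2)])
second = update_running_count(state, [("user2", 1)])
print(first)
print(second)
```

A running sum is the simplest case. The appeal of an arbitrary state-update function is that the per-key state can be any user-defined structure, such as an open session or a small state machine.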

In addition to the upstream improvements, Databricks Runtime 3.0 has been optimized specifically for cloud deployments, including the following enhancements:

- Ability to cut costs dramatically, by combining the Once Trigger mode with the Databricks Job Scheduler

- Production monitoring with integrated throughput and latency metrics

- Support for streaming data from Amazon's Kinesis

Finally, after processing hundreds of billions of records in production streams, Databricks now considers Structured Streaming generally available (GA) and ready for production use by our customers.

Other Notable Updates

- Higher order functions in SQL for nested data processing: Exposes a powerful and expressive way to work with nested data types (arrays, structs). See this blog post for more details.

- Improved multi-tenancy: When multiple users run workloads concurrently on the same cluster, Databricks Runtime 3.0 ensures that these users get fair shares of the resources, so users running short, interactive queries are not blocked by users running large ETL jobs.

- Auto scaling local storage: Databricks Runtime 3.0 can automatically configure local storage and scale it on demand. Users no longer need to estimate and provision EBS volumes.

- Cost-based optimizer from Apache Spark: The most important update in Spark 2.2 is the introduction of a cost-based optimizer. This feature is now available (off by default) in Databricks Runtime 3.0 beta.
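Since the cost-based optimizer is off by default, trying it means enabling it and collecting the statistics it relies on. The sketch below shows one way to do that in Python, based on the open source Spark 2.2 setting `spark.sql.cbo.enabled` and the `ANALYZE TABLE` command. That the beta runtime uses the same key is an assumption, and the helper name `enable_cbo` and the table and column names are hypothetical.

```python
def enable_cbo(spark, table: str, columns: list) -> None:
    """Turn on the cost-based optimizer and collect the statistics it relies on.

    `spark` is assumed to be an active SparkSession, such as the one a
    notebook provides; `table` and `columns` are caller-supplied names.
    """
    # The optimizer is off by default, so it must be enabled explicitly.
    spark.conf.set("spark.sql.cbo.enabled", "true")
    # Table-level statistics (row count, size).
    spark.sql(f"ANALYZE TABLE {table} COMPUTE STATISTICS")
    # Column-level statistics used for selectivity estimates.
    spark.sql(
        f"ANALYZE TABLE {table} COMPUTE STATISTICS FOR COLUMNS {', '.join(columns)}"
    )
```

In a notebook this might be called as `enable_cbo(spark, "sales", ["region", "amount"])`. Statistics are a snapshot, so they would need to be recomputed after the table changes significantly.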

Conclusion

Databricks Runtime 3.0 will include Spark 2.2 and more than 1,000 improvements in DBIO, DBES, and Structured Streaming to make data analytics easier, more secure, and more efficient.

While we don’t recommend putting any production workloads on this beta yet, we encourage you to give it a spin. The beta release will be updated automatically each day as we incorporate bug fixes from upstream open source Apache Spark and other components, until it is generally available in June.

Sign up for a Databricks trial today to test the full functionality.
