Blog

Featured Story

Announcements

June 12, 2025/6 min read

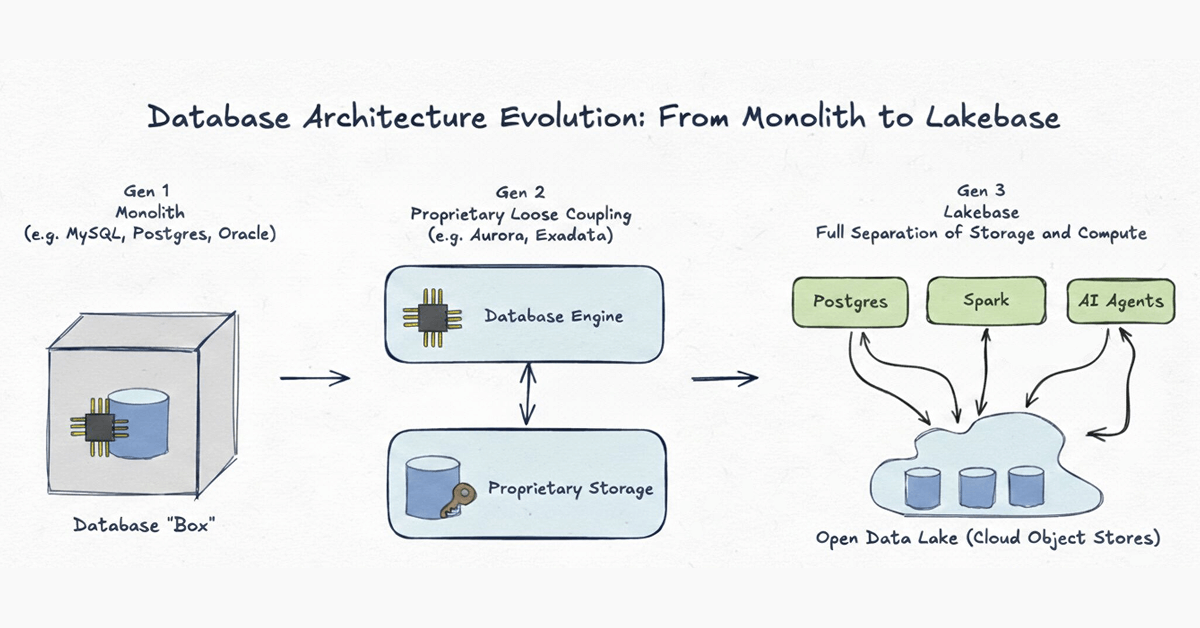

A New Era of Databases: Lakebase

What's new

Product

February 6, 2026/14 min read

How to Build Production-Ready Genie Spaces, and Build Trust Along the Way

Recent posts

Product

February 24, 2026/7 min read

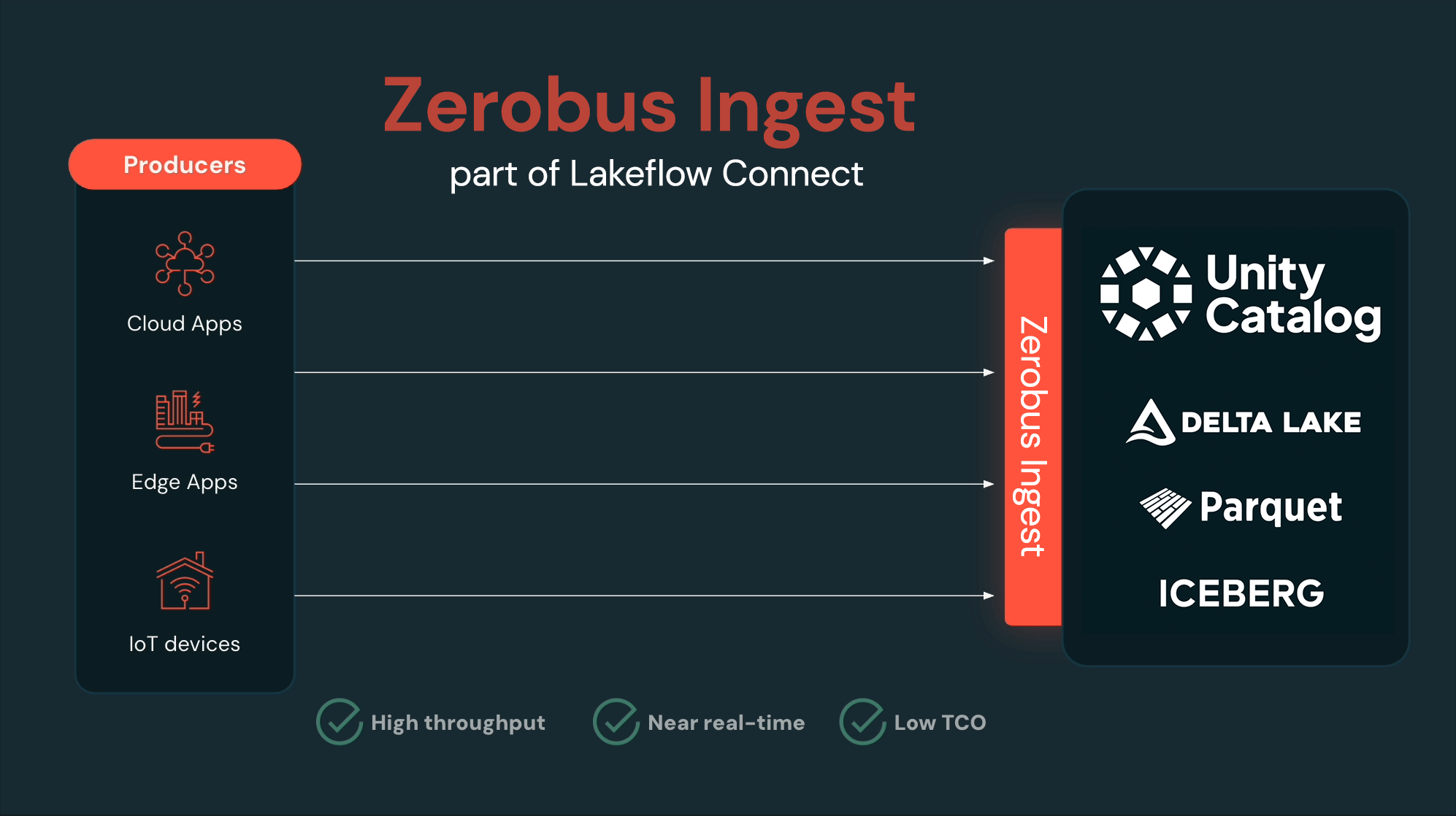

An AI-First Approach to Data Engineering with Lakeflow and Agent Bricks

Announcements

February 23, 2026/6 min read

Spark Declarative Pipelines: Why Data Engineering Needs to Become End-to-End Declarative

Product

February 18, 2026/6 min read

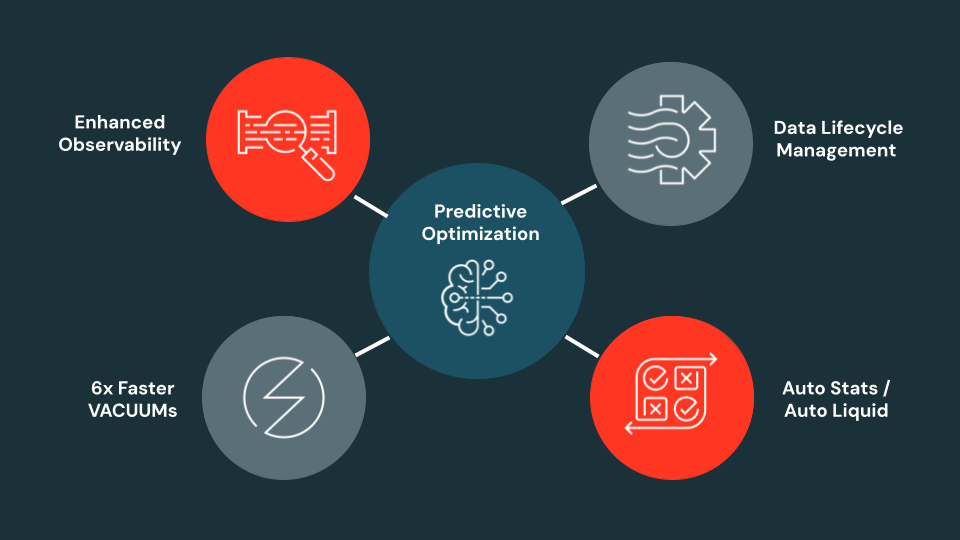

Predictive Optimization at Scale: A Year of Innovation and What’s Next

Data Strategy

February 17, 2026/9 min read

Business Analytics: Essential Tools, Techniques and Skills for Data-Driven Success

Media & Entertainment

February 17, 2026/3 min read

The Marketing Cloud and Adstra deliver identity resolution through Databricks Clean Rooms for secure, privacy-first marketing data collaboration

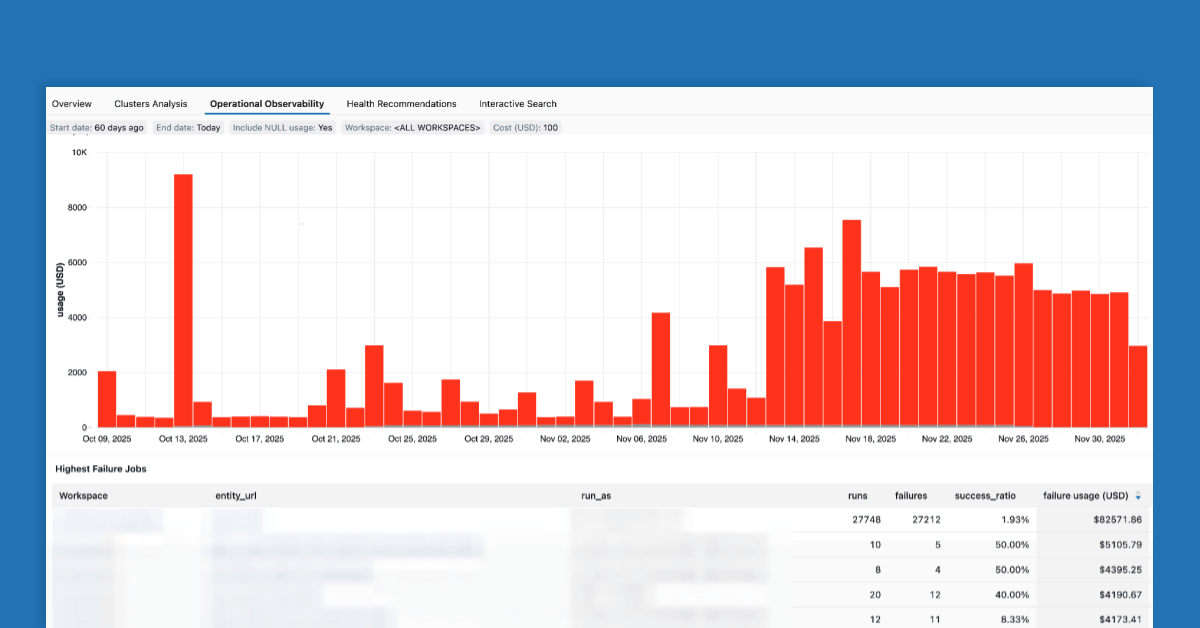

Product

February 17, 2026/11 min read

How Databricks System Tables Help Data Engineers Achieve Advanced Observability

Technology

February 12, 2026/10 min read

Getting the Full Picture: Unifying Databricks and Cloud Infrastructure Costs

Data Strategy

February 12, 2026/6 min read

Domain Intelligence Wins: What “High-Quality” Actually Means in Production AI

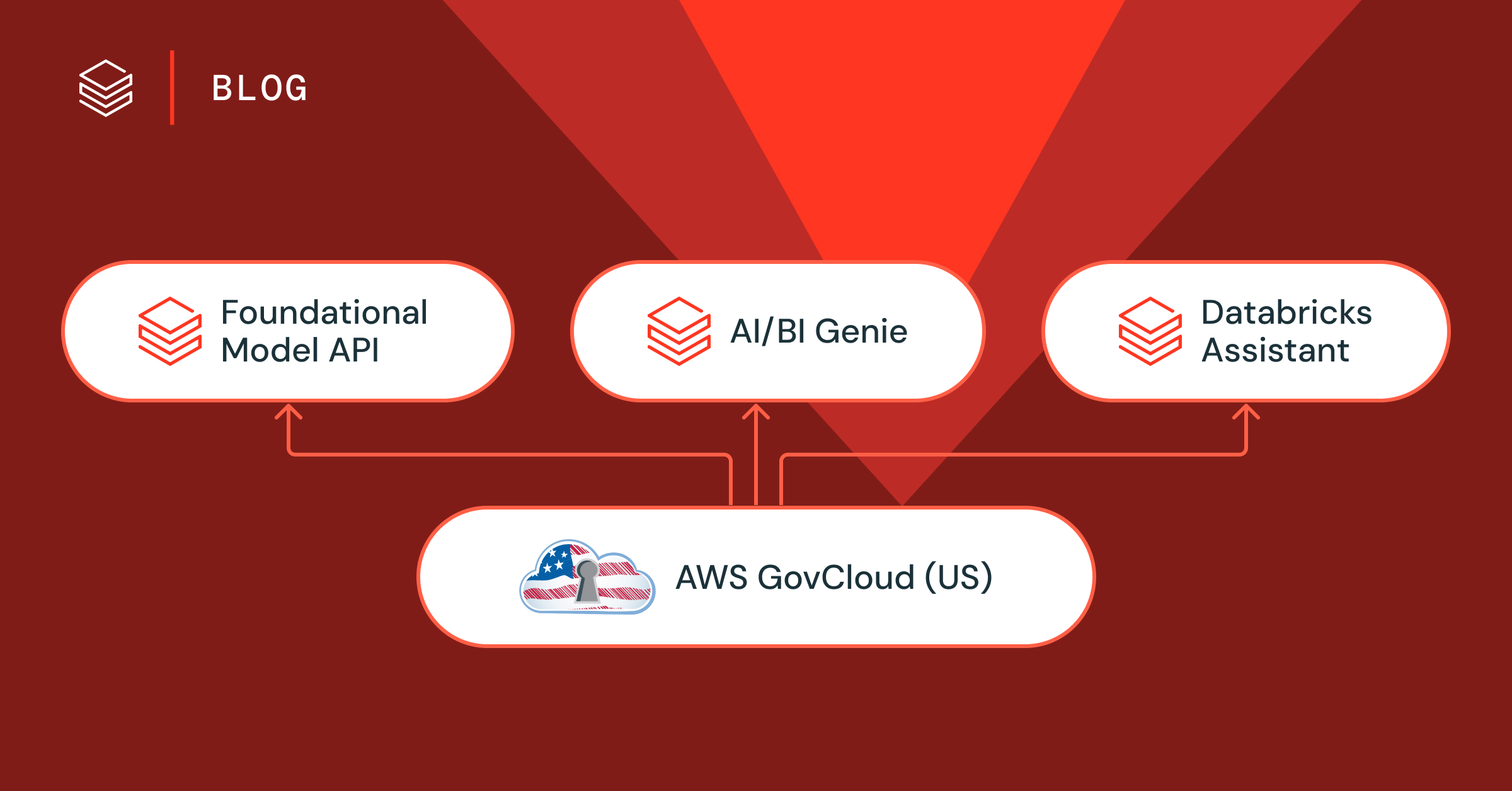

Public Sector

February 12, 2026/4 min read

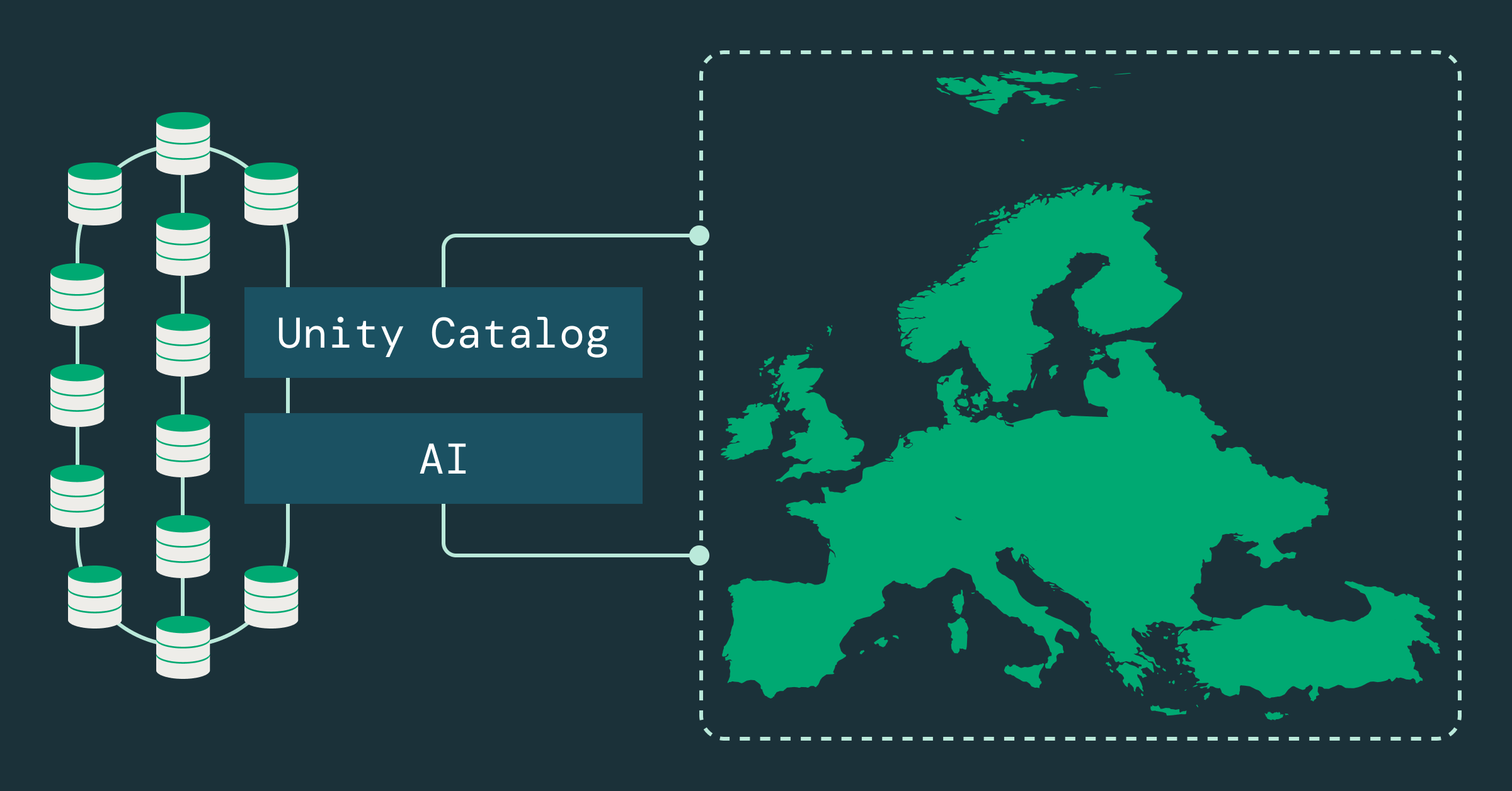

AI/BI Genie, Foundational Model API, and Databricks Assistant Now Generally Available in AWS GovCloud

