New Databricks Delta Features Simplify Data Pipelines

Continued Innovation and Expanded Availability for the Next-gen Unified Analytics Engine

Databricks Delta Lake, the next-generation unified analytics engine built on top of Apache Spark and aimed at helping data engineers build robust production data pipelines at scale, is continuing to make strides. Delta is already a powerful approach to building data pipelines, and new capabilities and performance enhancements make it an even more compelling solution:

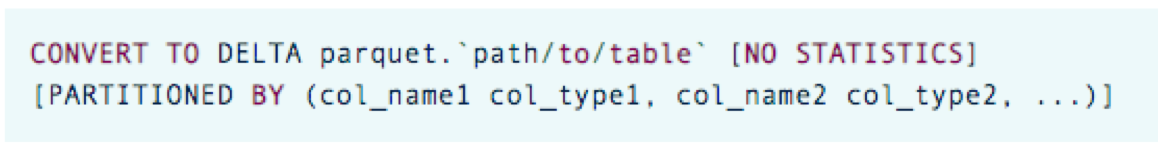

Fast Parquet import: makes it easy to convert existing Parquet files for use with Delta. The conversion can now be completed much more quickly and without requiring large amounts of extra compute and storage. Please see the documentation for more details: Azure | AWS.

Example:

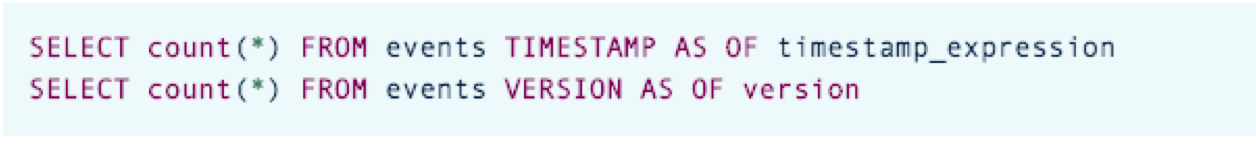

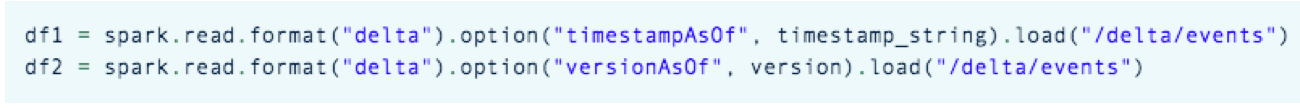
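The conversion can stay fast because it does not rewrite the data. Delta's `CONVERT TO DELTA` command lists the Parquet files already in the directory, infers the schema from their footers, and writes a transaction log that tracks those files.

The toy sketch below models only the listing-and-logging step, in plain Python so it runs without Spark. The `_delta_log` directory and the zero-padded JSON commit file follow Delta's log layout. However, `convert_to_delta_toy` is a hypothetical helper, its `protocol` and `add` entries are heavily simplified, and it is not how Databricks implements the command.

```python
import json
import os
import tempfile
from typing import Dict, List


def convert_to_delta_toy(table_dir: str) -> List[Dict]:
    """Toy model of an in-place Parquet-to-Delta conversion (hypothetical helper).

    Lists existing *.parquet files and records them in a version-0 commit under
    _delta_log/. No data file is read, copied, or rewritten. Real Delta also
    records the schema and per-file statistics, which this toy omits.
    """
    actions: List[Dict] = [{"protocol": {"minReaderVersion": 1, "minWriterVersion": 2}}]
    for root, dirs, files in os.walk(table_dir):
        # Never treat the log directory itself as table data; sort for determinism.
        dirs[:] = sorted(d for d in dirs if d != "_delta_log")
        for name in sorted(files):
            if name.endswith(".parquet"):
                full = os.path.join(root, name)
                actions.append({"add": {
                    "path": os.path.relpath(full, table_dir),
                    "size": os.path.getsize(full),
                    "dataChange": True,
                }})
    log_dir = os.path.join(table_dir, "_delta_log")
    os.makedirs(log_dir, exist_ok=True)
    # Commit files are named by their zero-padded version number, one action per line.
    with open(os.path.join(log_dir, f"{0:020d}.json"), "w") as f:
        for action in actions:
            f.write(json.dumps(action) + "\n")
    return actions


with tempfile.TemporaryDirectory() as d:
    for i in range(2):
        with open(os.path.join(d, f"part-{i}.parquet"), "wb") as f:
            f.write(b"PAR1")  # placeholder bytes, not a real Parquet file
    actions = convert_to_delta_toy(d)
    print([a["add"]["path"] for a in actions if "add" in a])  # ['part-0.parquet', 'part-1.parquet']
```

The design point the sketch illustrates is that the cost of conversion scales with the number of files listed, not with the bytes stored in them.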

Time Travel: allows you to use and revert to earlier versions of your data. This is useful for conducting analyses that depend upon earlier versions of the data or for correcting errors. Please see this recent blog or the documentation for more details: Azure | AWS.

Example:
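In Delta itself, an earlier version can be read in two ways:

- With SQL such as `SELECT * FROM events VERSION AS OF 0` (or `TIMESTAMP AS OF`). The table name `events` is only illustrative.
- With the `versionAsOf` option on a DataFrame read.

To make the semantics concrete without a Spark cluster, the sketch below models them in plain Python. The `VersionedTable` class and its methods are hypothetical names, not part of any Delta API. A real Delta table reconstructs versions from a transaction log over data files rather than keeping full in-memory copies.

```python
import copy
from typing import Dict, List, Optional

Row = Dict[str, object]


class VersionedTable:
    """Toy model of Delta-style time travel (hypothetical class, not a Delta API).

    Each write commits a new immutable snapshot; earlier snapshots stay readable.
    """

    def __init__(self) -> None:
        self._versions: List[List[Row]] = []

    def write(self, rows: List[Row], mode: str = "append") -> int:
        """Commit a new version and return its number (the first write is version 0)."""
        if mode not in ("append", "overwrite"):
            raise ValueError(f"unknown mode: {mode}")
        base = self._versions[-1] if (self._versions and mode == "append") else []
        self._versions.append(copy.deepcopy(base + list(rows)))
        return len(self._versions) - 1

    def read(self, version_as_of: Optional[int] = None) -> List[Row]:
        """Read the latest snapshot, or the one committed as `version_as_of`."""
        if not self._versions:
            raise ValueError("table has no versions yet")
        latest = len(self._versions) - 1
        v = latest if version_as_of is None else version_as_of
        if not 0 <= v <= latest:
            raise ValueError(f"version {v} does not exist (latest is {latest})")
        return copy.deepcopy(self._versions[v])

    def restore(self, version: int) -> int:
        """Revert by committing an old snapshot as a *new* version; history is kept."""
        return self.write(self.read(version), mode="overwrite")


table = VersionedTable()
table.write([{"id": 1, "amount": 10}])    # version 0
table.write([{"id": 2, "amount": -999}])  # version 1: an erroneous append
print(table.read(version_as_of=0))        # [{'id': 1, 'amount': 10}]
table.restore(0)                          # version 2 has the same rows as version 0
print(table.read())                       # [{'id': 1, 'amount': 10}]
```

Reverting by committing a new version, rather than deleting later ones, means the bad write in version 1 remains available for auditing.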

MERGE enhancements: To make it easier to write change data (inserts, updates, and deletes) from a database to a Delta table, MERGE now supports multiple MATCHED clauses, additional conditions in MATCHED and NOT MATCHED clauses, and a DELETE action. It also supports * in UPDATE and INSERT actions to fill in column names automatically (similar to * in SELECT), which makes it much easier to write MERGE queries for tables with a very large number of columns. Please see the documentation for more details: Azure | AWS.

Examples:
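The toy model below spells out how these clauses interact. It is written in plain Python rather than against the Delta API, and it assumes the clause semantics described in the Delta documentation:

- For each target row matched by a source row, the first `WHEN MATCHED` clause whose condition holds is applied (update or delete).
- Each unmatched source row is inserted by the first `WHEN NOT MATCHED` clause whose condition holds.

`merge`, `WhenMatched`, `WhenNotMatched`, and the `op` change-type column are hypothetical names chosen for illustration. Leaving the assignments empty stands in for `UPDATE SET *` and `INSERT *`, which expand to every column of the target table. Delta also rejects a MERGE in which several source rows try to modify the same target row; the toy enforces a stricter version of that rule.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Row = Dict[str, object]


@dataclass
class WhenMatched:
    """One WHEN MATCHED clause (hypothetical toy type)."""
    action: str  # "update" or "delete"
    condition: Optional[Callable[[Row, Row], bool]] = None  # (target, source) -> bool
    # Column -> expression over (target, source); None stands in for UPDATE SET *.
    assignments: Optional[Dict[str, Callable[[Row, Row], object]]] = None


@dataclass
class WhenNotMatched:
    """One WHEN NOT MATCHED clause; its only action is INSERT (hypothetical toy type)."""
    condition: Optional[Callable[[Row], bool]] = None  # source -> bool
    # Column -> expression over source; None stands in for INSERT *.
    values: Optional[Dict[str, Callable[[Row], object]]] = None


def merge(target: List[Row], source: List[Row], key: str, columns: List[str],
          matched: List[WhenMatched], not_matched: List[WhenNotMatched]) -> List[Row]:
    """Toy MERGE INTO target USING source ON target.key = source.key."""
    by_key: Dict[object, List[Row]] = {}
    for s in source:
        by_key.setdefault(s[key], []).append(s)

    result: List[Row] = []
    for t in target:
        hits = by_key.get(t[key], [])
        if len(hits) > 1 and matched:
            # Delta fails when several source rows try to modify one target row;
            # this toy rejects any duplicate match.
            raise ValueError(f"multiple source rows match target key {t[key]!r}")
        # The first MATCHED clause whose condition holds wins.
        clause = next((c for c in matched if hits and
                       (c.condition is None or c.condition(t, hits[0]))), None)
        if clause is None:
            result.append(dict(t))           # unmatched, or no clause applies: keep row
        elif clause.action == "update":
            s = hits[0]
            if clause.assignments is None:   # UPDATE SET *: every target column from source
                result.append({c: s[c] for c in columns})
            else:                            # expressions see the pre-update row
                new = dict(t)
                new.update({c: f(t, s) for c, f in clause.assignments.items()})
                result.append(new)
        elif clause.action != "delete":      # a matching "delete" simply drops the row
            raise ValueError(f"unknown action: {clause.action}")

    target_keys = {t[key] for t in target}
    for s in source:
        if s[key] in target_keys:
            continue
        clause = next((c for c in not_matched
                       if c.condition is None or c.condition(s)), None)
        if clause is None:
            continue
        if clause.values is None:            # INSERT *: every target column from source
            result.append({c: s[c] for c in columns})
        else:                                # unassigned columns become None (NULL)
            row: Row = {c: None for c in columns}
            row.update({c: f(s) for c, f in clause.values.items()})
            result.append(row)
    return result


# Applying a batch of change data: one update, one delete, one insert.
target = [{"id": 1, "name": "a"}, {"id": 2, "name": "b"}]
changes = [
    {"id": 1, "name": "a2", "op": "UPDATE"},
    {"id": 2, "name": None, "op": "DELETE"},
    {"id": 3, "name": "c", "op": "INSERT"},
]
out = merge(
    target, changes, key="id", columns=["id", "name"],
    matched=[
        WhenMatched("delete", condition=lambda t, s: s["op"] == "DELETE"),
        WhenMatched("update"),  # UPDATE SET *
    ],
    not_matched=[WhenNotMatched(condition=lambda s: s["op"] != "DELETE")],  # INSERT *
)
print(out)  # [{'id': 1, 'name': 'a2'}, {'id': 3, 'name': 'c'}]
```

Ordering matters in this model. Because the conditional DELETE clause is listed before the unconditional UPDATE, delete events are never turned into updates.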

Expanded Availability

In response to rising customer interest, we have decided to make Databricks Delta more broadly available. Azure Databricks customers now have access to Delta capabilities for Data Engineering and Data Analytics from both the Azure Databricks Standard and the Azure Databricks Premium SKUs. Similarly, customers using Databricks on AWS now have access to Delta from both the Data Engineering and Data Analytics offerings.

| Cloud | Offering | Delta Included? |
|---|---|---|
| Microsoft Azure | Azure Databricks Standard Data Engineering | ✓ |
| | Azure Databricks Standard Data Analytics | ✓ |
| | Azure Databricks Premium Data Engineering | ✓ |
| | Azure Databricks Premium Data Analytics | ✓ |
| AWS | Databricks Basic | ✘ |
| | Databricks Data Engineering | ✓ |
| | Databricks Data Analytics | ✓ |


Easy to Adopt: Check Out Delta Today

Porting existing Spark code to use Delta is as simple as changing

`CREATE TABLE … USING parquet` to

`CREATE TABLE … USING delta`

or changing

`dataframe.write.format("parquet").save("/data/events")` to

`dataframe.write.format("delta").save("/data/events")`

You can explore Delta today using:

- Databricks Delta Quickstart, for an introduction to Databricks Delta: Azure | AWS.

- Optimizing Performance and Cost, for a discussion of features such as compaction, Z-ordering, and data skipping: Azure | AWS.

Both of these contain notebooks in Python, Scala and SQL that you can use to try Delta.
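Data skipping, one of the features covered in the performance guide, works from per-file statistics. Delta records minimum and maximum column values for each data file and can skip any file whose range cannot satisfy a query's filter. Z-ordering co-locates related values so that those ranges become narrower.

The toy Python sketch below shows only the pruning step for a single integer column. The file names, the statistics, and the `files_to_scan` helper are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical per-file statistics: file path -> (min, max) of one column.
FileStats = Dict[str, Tuple[int, int]]


def files_to_scan(stats: FileStats, lo: int, hi: int) -> List[str]:
    """Return the files whose [min, max] range can overlap the filter lo <= x <= hi."""
    return sorted(path for path, (mn, mx) in stats.items() if mx >= lo and mn <= hi)


# Clustered layout (as Z-ordering aims for): narrow, mostly disjoint ranges.
stats = {
    "part-0.parquet": (1, 100),
    "part-1.parquet": (101, 200),
    "part-2.parquet": (201, 300),
}
print(files_to_scan(stats, 150, 160))  # ['part-1.parquet']

# Unclustered layout: every file spans the whole range, so nothing can be skipped.
unclustered = {f"part-{i}.parquet": (1, 300) for i in range(3)}
print(files_to_scan(unclustered, 150, 160))  # ['part-0.parquet', 'part-1.parquet', 'part-2.parquet']
```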

If you are not already using Databricks, you can try Databricks Delta for free by signing up for the Databricks trial Azure | AWS.

Making Data Engineering Easier

Data engineering is critical to successful analytics, and customers can use Delta in various ways to improve their data pipelines. We have summarized some of these use cases in the blogs below:

- Change data capture with Databricks Delta

- Building a real-time attribution pipeline with Databricks Delta

- Processing Petabytes of data in seconds with Databricks Delta

- Simplifying streaming stock data analysis with Databricks Delta

- Build a mobile gaming events data pipeline with Databricks Delta

You can learn more about Delta from the Databricks Delta documentation Azure | AWS.

Visit the Delta Lake online hub to learn more, download the latest code and join the Delta Lake community.
