Custom DNS With AWS PrivateLink for Databricks Workspaces

Published: April 30, 2021

by Greg Wood, Ranjit Kalidasan and Pratik Mankad

This post was written in collaboration with Amazon Web Services (AWS). We thank co-authors Ranjit Kalidasan, senior solutions architect, and Pratik Mankad, partner solutions architect, of AWS for their contributions.

Last week, we were excited to announce the release of AWS PrivateLink for Databricks Workspaces, now in public preview, which enables new patterns and functionalities to meet the governance and security requirements of modern cloud workloads. One pattern we’ve often been asked about is the ability to use custom DNS servers with a customer-managed VPC for a Databricks workspace. To provide this functionality in AWS PrivateLink-enabled Databricks workspaces, we partnered with AWS to create a scalable, repeatable architecture. In this blog, we’ll discuss how we implemented Amazon Route 53 Resolvers to enable this use case, and how you can recreate the same architecture for your own Databricks workspace.

Motivation

Many enterprises configure their cloud VPCs to use their own DNS servers. They may do this because they want to limit the use of externally controlled DNS servers, and/or because they have on-prem, private domains that need to be resolved by cloud applications. In general, this is not an issue when using Databricks because our standard deployments, even with Secure Cluster Connectivity (i.e. private subnets), use domains that are resolvable by AWS.

AWS PrivateLink for Databricks, however, requires private DNS resolution for connectivity to the back-end and front-end interface endpoints to work. If a customer configures their own DNS servers for their workspace VPC, those servers will not be able to resolve these VPC endpoint names on their own, so connectivity between the Databricks Data and Control Planes will be broken. To deploy Databricks with AWS PrivateLink and custom DNS, Route 53 can be used to resolve these private DNS names in the Data Plane.

What is Amazon Route 53?

Amazon Route 53 is a highly available and scalable cloud Domain Name System (DNS) web service. It is designed to give developers and businesses an extremely reliable and cost-effective way to route end users to Internet applications by translating names like www.example.com into numeric IP addresses like 192.0.2.1 that computers use to connect to each other. Route 53 consists of different components, such as hosted zones, policies and domains. In this blog, we focus on Route 53 Resolver Endpoints (specifically, Outbound Endpoints) and the Resolver Rules applied to them.

High-level architecture

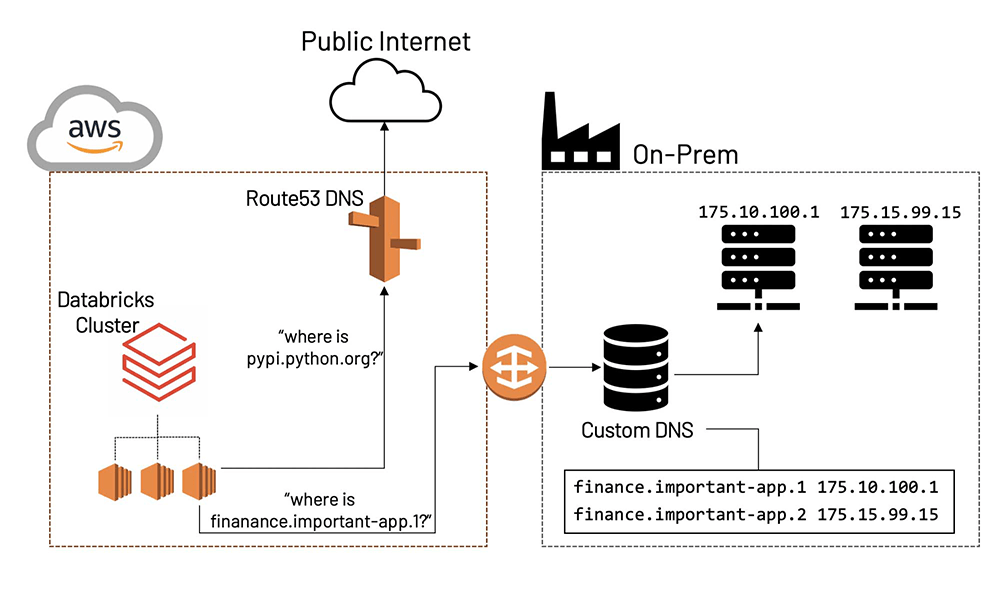

At a high level, the architecture to create private DNS names for an interface Amazon Virtual Private Cloud (VPC) endpoint on the service consumer side is shown below:

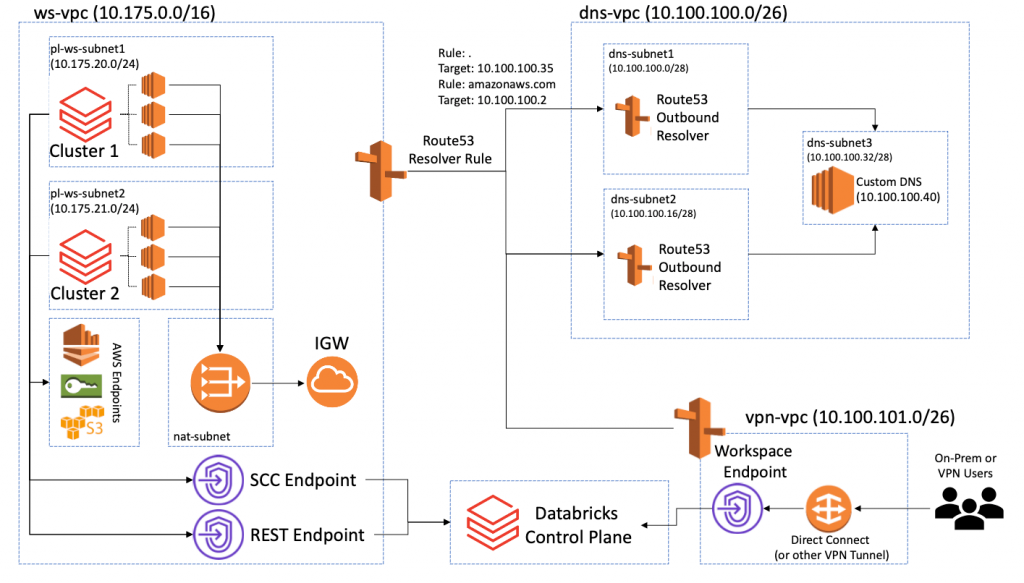

Route 53 in this case provides an outbound resolver endpoint. This essentially provides a way of resolving local, private domains with Route 53, and using the custom DNS server for any remaining, unresolved domains. Technically, this architecture consists of Route 53 outbound resolver endpoints deployed in the DNS server VPC, and Route 53 Resolver Rules that tell the service how and where to resolve domains. For more information on how Route 53 Private Hosted Zone entries are resolved by AWS, refer to Private DNS for Interface Endpoints and Working with Private Hosted Zones in the AWS documentation and user guide. Note that this works similarly when the DNS server is hosted on-prem. In that case, the VPC in which the outbound resolver endpoints are deployed should be the same VPC that hosts the Direct Connect endpoint to your on-prem data center.
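As a rough illustration of how Resolver Rules decide where a query goes, the Python sketch below models a simplified rule table and picks the most specific matching rule for a given name. The rule table, function names, and destination strings are assumptions made for illustration only; the sketch covers forwarding rules, and does not model Private Hosted Zones or call any AWS API.

```python
from typing import Optional

# Hypothetical rule table: rule domain -> where matching queries are sent.
# These mirror the two forwarding rules created later in this post.
RULES = {
    ".": "forward to the custom DNS server",
    "amazonaws.com.": "forward to the AWS VPC resolver",
}


def normalize(name: str) -> str:
    """Lower-case a DNS name and make sure it ends with a trailing dot."""
    name = name.strip().lower()
    return name if name.endswith(".") else name + "."


def label_count(domain: str) -> int:
    """Number of non-empty labels in a domain; the root domain has zero."""
    return len([label for label in normalize(domain).split(".") if label])


def matches(query: str, rule_domain: str) -> bool:
    """True if the query equals the rule domain or is a subdomain of it."""
    query, rule_domain = normalize(query), normalize(rule_domain)
    if rule_domain == ".":
        return True  # the root rule matches every name
    return query == rule_domain or query.endswith("." + rule_domain)


def pick_rule(query: str, rules: dict) -> Optional[str]:
    """Return the matching rule domain with the most labels, or None."""
    candidates = [domain for domain in rules if matches(query, domain)]
    if not candidates:
        return None
    return max(candidates, key=label_count)


if __name__ == "__main__":
    for name in ("kinesis.us-east-1.amazonaws.com", "db.corp.example"):
        rule = pick_rule(name, RULES)
        print(f"{name}: rule '{rule}', action: {RULES[rule]}")
```

The point of the sketch is that a catch-all rule for "." and a narrower rule such as "amazonaws.com" can coexist: the narrower rule wins for the names it covers, and everything else falls through to the catch-all.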

Step-by-step instructions

Below, we walk through the steps for setting up a Route 53 Outbound Resolver with the appropriate rules. We assume that an AWS PrivateLink-enabled Databricks workspace is already deployed and running.

1. Ensure that the workspace is deployed properly according to our PrivateLink documentation. If you cannot spin up clusters because the custom DNS is already in place, try enabling AWS DNS resolution to confirm that cluster creation is unblocked and there are no additional issues.

2. Gather the following information:

   a. The VPC ID used for the Databricks Data Plane (and, if applicable, the User-to-Workspace VPC endpoint)

   b. The VPC ID of the VPC containing the custom DNS server

   c. The subnets into which the Route 53 endpoints will be deployed. These must be in the same VPC as the custom DNS server (at least 2 subnets are required, and they should be in separate AZs)

   d. The IP of the custom DNS server

   e. The Security Group ID that will be applied to the Route 53 endpoints. This should allow inbound connections on UDP port 53 from the Data Plane VPC (10.175.0.0/16 in the above diagram), and should use the default outbound rule (i.e., allow 0.0.0.0/0)

3. Start by creating a new Route 53 Outbound Resolver (Services > Route53 > Outbound Endpoint > Create Outbound Endpoint). Create this endpoint in the DNS VPC, using the VPC ID from Step 2b and the subnets from Step 2c. Select the security group from Step 2e. Unless you have a compelling reason to do otherwise, select “Use an IP address that is selected automatically” when selecting the IP addresses.

4. Create a new resolver rule (Services > Route53 > Rules > Create Rule). This rule will forward DNS queries to the custom DNS server for all domains except the private DNS names for the Databricks VPC endpoints (these endpoints will use a Private Hosted Zone for resolution). In “Domain Name”, enter a dot (“.” without quotes), which matches all domains. For the VPC, select your Data Plane VPC from Step 2a. The outbound endpoint should be the endpoint created in Step 3. In “Target IP”, use the IP of the custom DNS server. NOTE: if you use a User-to-Workspace PrivateLink endpoint in a separate VPC from the SCC/REST endpoints, also attach the rule to that VPC.

5. If AWS endpoints are being used for the Data Plane (i.e., Kinesis, S3 and STS endpoints), add another rule to forward these domain resolution requests to the Route 53 default resolver. This rule should have a domain of “amazonaws.com” (no quotes). The VPC and endpoint settings should be the same as those in Step 4. For the target IP address, use the AWS VPC resolver, whose address is the base of the VPC CIDR range plus two; e.g., for CIDR 10.0.0.0/16, use 10.0.0.2. Use the CIDR of the DNS VPC from Step 2b; in this example, the IP would be 10.100.100.2.

6. Your Route 53 resolver is now set up. Make sure that the DNS and Data Plane VPCs have routing configured correctly; no additional routing is required for the Route 53 endpoints once they are associated with the appropriate VPCs. No explicit routing is required for the Databricks VPC endpoints (since they are resolved by Route 53), but other endpoints, such as Amazon S3 or other services, may have explicit routes.

7. Open your workspace and try launching a cluster. To validate that the resolution is working, you can run the following command in a notebook:

%sh dig region.privatelink.cloud.databricks.com

Replace region with the identifier for the region your workspace is in; for us-east-1, this is nvirginia. If resolution is working, the answer section of the output should list the private IP addresses of the Databricks VPC endpoint.
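The same check can be approximated from a Python notebook cell. The sketch below resolves a hostname with the system resolver and reports whether every returned address is private. The function names are hypothetical, and the rule that a healthy setup returns only private addresses is an assumption based on the endpoint being reached over PrivateLink.

```python
import ipaddress
import socket


def resolve_ipv4(hostname: str) -> list:
    """Resolve a hostname to a sorted list of IPv4 addresses via the system resolver."""
    infos = socket.getaddrinfo(
        hostname, 443, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


def all_private(addresses: list) -> bool:
    """True only if the list is non-empty and every address is in a private range."""
    return bool(addresses) and all(
        ipaddress.ip_address(address).is_private for address in addresses
    )


def check(hostname: str) -> bool:
    """Print the resolved addresses and return whether all of them are private."""
    addresses = resolve_ipv4(hostname)
    print(f"{hostname} -> {addresses}")
    return all_private(addresses)
```

For a workspace in us-east-1, calling `check("nvirginia.privatelink.cloud.databricks.com")` on a cluster would be the equivalent of the dig command; a result of `False`, or a resolution error, suggests the rules or VPC associations need another look.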

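The outbound endpoint and the two forwarding rules described in the steps above can also be created programmatically. The following is a minimal sketch, assuming boto3's `route53resolver` client and valid AWS credentials. The helper names, resource names such as `databricks-outbound`, and all argument values are hypothetical placeholders. Error handling, and waiting for the endpoint to become operational before rules are attached, are omitted.

```python
import ipaddress
import uuid


def vpc_resolver_ip(vpc_cidr: str) -> str:
    """Return the AWS VPC resolver address: the CIDR base address plus two."""
    network = ipaddress.ip_network(vpc_cidr)
    return str(network.network_address + 2)


def endpoint_request(name: str, security_group_id: str, subnet_ids: list) -> dict:
    """Arguments for create_resolver_endpoint; IPs are left for AWS to choose."""
    return {
        "CreatorRequestId": str(uuid.uuid4()),
        "Name": name,
        "SecurityGroupIds": [security_group_id],
        "Direction": "OUTBOUND",
        "IpAddresses": [{"SubnetId": subnet_id} for subnet_id in subnet_ids],
    }


def forward_rule_request(name: str, domain: str, target_ip: str, endpoint_id: str) -> dict:
    """Arguments for create_resolver_rule, forwarding a domain to one DNS target."""
    return {
        "CreatorRequestId": str(uuid.uuid4()),
        "Name": name,
        "RuleType": "FORWARD",
        "DomainName": domain,
        "TargetIps": [{"Ip": target_ip, "Port": 53}],
        "ResolverEndpointId": endpoint_id,
    }


def apply(dns_vpc_cidr, custom_dns_ip, security_group_id, subnet_ids, data_plane_vpc_id):
    """Create the endpoint and both rules, then associate the rules with the Data Plane VPC."""
    import boto3  # imported lazily so the helpers above work without boto3 installed

    client = boto3.client("route53resolver")
    endpoint = client.create_resolver_endpoint(
        **endpoint_request("databricks-outbound", security_group_id, subnet_ids)
    )["ResolverEndpoint"]

    # "." goes to the custom DNS server; "amazonaws.com" goes to the VPC resolver.
    targets = {".": custom_dns_ip, "amazonaws.com": vpc_resolver_ip(dns_vpc_cidr)}
    for index, (domain, target_ip) in enumerate(targets.items()):
        rule = client.create_resolver_rule(
            **forward_rule_request(
                f"databricks-rule-{index}", domain, target_ip, endpoint["Id"]
            )
        )["ResolverRule"]
        client.associate_resolver_rule(
            ResolverRuleId=rule["Id"], VPCId=data_plane_vpc_id
        )
```

Keeping the request builders separate from `apply` makes it possible to inspect or unit test the arguments without touching an AWS account. For example, `vpc_resolver_ip("10.0.0.0/16")` yields the 10.0.0.2 address mentioned in the steps.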