Solution Accelerator: Toxicity Detection in Gaming

Check out the solution accelerator to download the notebooks referred to throughout this blog.

Across massively multiplayer online video games (MMOs), multiplayer online battle arena games (MOBAs) and other forms of online gaming, players continuously interact in real time, either coordinating or competing as they move toward a common goal: winning. This interactivity is integral to gameplay dynamics, but it is also a prime opening for toxic behavior, an issue pervasive throughout online gaming.

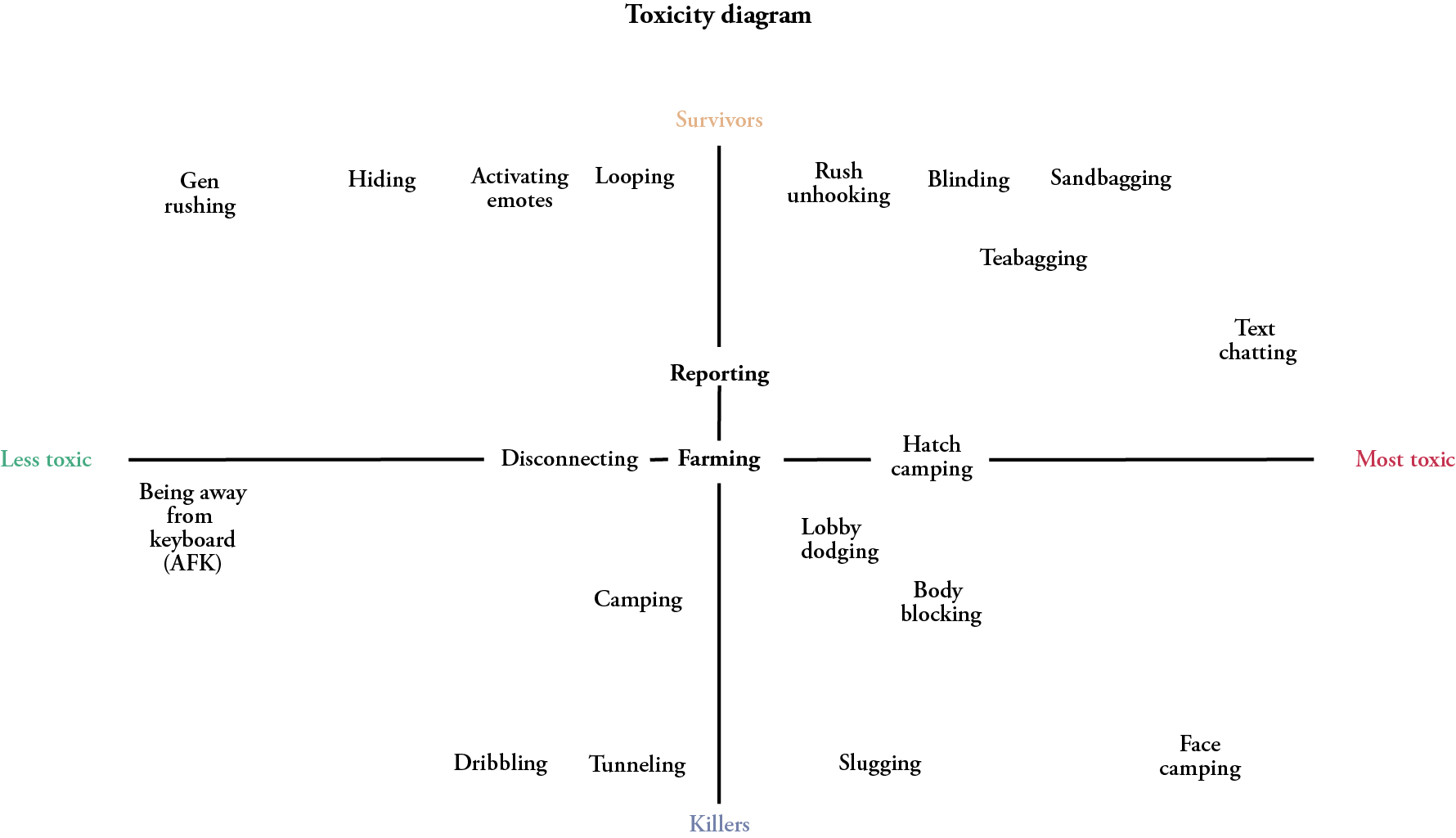

Toxic behavior takes many forms, including varying degrees of griefing, cyberbullying and sexual harassment, as illustrated in the matrix from Behaviour Interactive that lists the types of interactions seen in the multiplayer game Dead by Daylight.

Beyond the personal toll that toxic behavior takes on gamers and the community, which cannot be overstated, toxicity also damages the bottom line of many game studios. For example, a study from Michigan State University revealed that 80% of players had recently experienced toxicity, and of those, 20% reported leaving the game because of these interactions. Similarly, a study from Tilburg University showed that players who had a disruptive or toxic encounter in their first session were over three times more likely to leave the game without returning. Given that player retention is a top priority for many studios, particularly as game delivery shifts from physical media releases to long-lived services, it’s clear that toxicity must be curbed.

Compounding the churn problem, some studios face toxicity challenges early in development, even before launch. For example, Amazon’s Crucible was released into testing without text or voice chat, due in part to not having a system in place to monitor or manage toxic players and interactions. This illustrates that the scale of online gaming has far outgrown most teams’ ability to manage such behavior manually, whether through player reports or by intervening in disruptive interactions. Given this, studios should integrate analytics into games early in the development lifecycle and design for the ongoing management of toxic interactions.

Toxicity in gaming is a multifaceted issue that has become part of video game culture, and no single approach can address it everywhere. That said, addressing toxicity in in-game chat can have an outsized impact, given how frequently toxic behavior occurs there and how readily its detection can be automated with natural language processing (NLP).

Introducing the Toxicity Detection in Gaming Solution Accelerator from Databricks

Using toxic comment data from Jigsaw and match data from Dota 2, this solution accelerator walks through the steps required to detect toxic comments in real time using NLP and your existing lakehouse. For NLP, it uses Spark NLP from John Snow Labs, an open-source, enterprise-grade library built natively on Apache Spark™.

The steps you will take in this solution accelerator are:

- Load the Jigsaw and Dota 2 data into tables using Delta Lake

- Classify toxic comments using multi-label classification (Spark NLP)

- Track experiments and register models using MLflow

- Apply inference on batch and streaming data

- Examine the impact of toxicity on game match data
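The second step, multi-label classification, differs from ordinary classification in that one comment can carry several toxicity labels at once. The accelerator's notebooks do this with Spark NLP; the plain-Python sketch below only illustrates the decoding idea, assuming the model emits an independent probability per label. The six label names are those of the Jigsaw Toxic Comment Classification dataset, while the 0.5 threshold and the example scores are illustrative assumptions, not values taken from the accelerator.

```python
from typing import Dict, List

# Label names from the Jigsaw Toxic Comment Classification dataset.
JIGSAW_LABELS = ["toxic", "severe_toxic", "obscene", "threat", "insult", "identity_hate"]


def decode_multilabel(scores: Dict[str, float], threshold: float = 0.5) -> List[str]:
    """Return every label whose score meets the threshold.

    Unlike single-label classification (take the argmax), each label is
    decided independently, so a comment can receive zero, one or many labels.
    The 0.5 default is an illustrative assumption.
    """
    unknown = set(scores) - set(JIGSAW_LABELS)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    # Missing labels are treated as a score of 0.0.
    return [label for label in JIGSAW_LABELS if scores.get(label, 0.0) >= threshold]


if __name__ == "__main__":
    # Hypothetical per-label probabilities for one chat message.
    example = {"toxic": 0.92, "severe_toxic": 0.12, "obscene": 0.71,
               "threat": 0.03, "insult": 0.64, "identity_hate": 0.01}
    print(decode_multilabel(example))  # ['toxic', 'obscene', 'insult']
```

Because each label has its own decision, lowering the threshold for a rare but serious label such as `threat` is a per-label choice that does not affect the others.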

Detecting toxicity within in-game chat in production

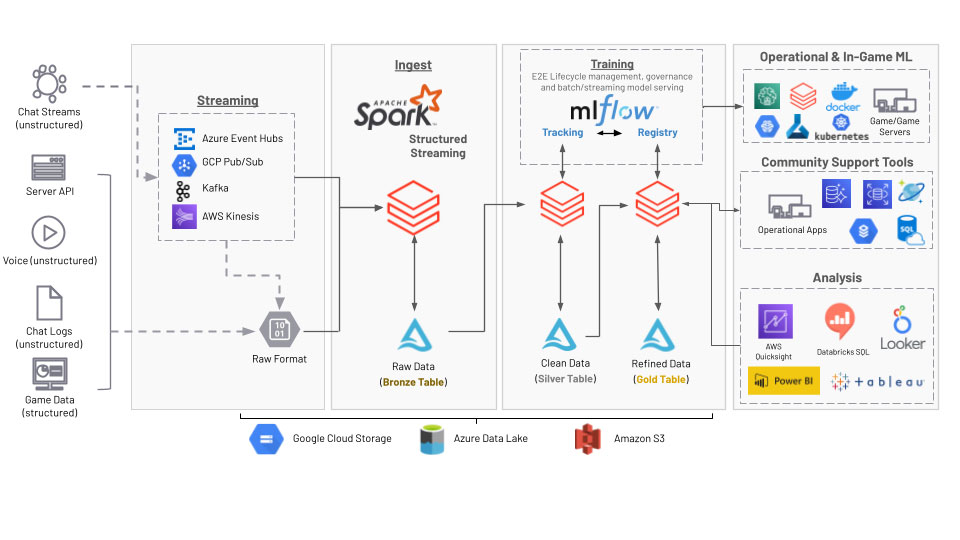

With this solution accelerator, you can more easily integrate toxicity detection into your own games. For example, the reference architecture shows how to take chat and game data from a variety of sources, such as streams, files, voice or operational databases, and use Databricks to ingest, store and curate that data into feature tables for machine learning (ML) pipelines and in-game ML, BI tables for analysis, and even direct integrations with community moderation tools.
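Before chat from different sources can be curated into feature tables, it typically needs a common shape. The sketch below is a hypothetical illustration rather than the accelerator's schema: the `ChatEvent` fields and the two input formats (a JSON stream message and a CSV file export) are assumptions chosen for the example.

```python
import csv
import io
import json
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ChatEvent:
    """Hypothetical common schema for a single chat message."""
    match_id: str
    player_id: str
    timestamp: int  # seconds since match start (assumed unit)
    text: str
    source: str     # where the record came from, kept for lineage


def from_stream_json(raw: str) -> ChatEvent:
    # Assumed stream payload: {"match": ..., "player": ..., "ts": ..., "msg": ...}
    payload = json.loads(raw)
    return ChatEvent(str(payload["match"]), str(payload["player"]),
                     int(payload["ts"]), payload["msg"], "stream")


def from_csv_export(data: str) -> List[ChatEvent]:
    # Assumed file export with header row: match_id,player_id,time,message
    reader = csv.DictReader(io.StringIO(data))
    return [ChatEvent(row["match_id"], row["player_id"], int(row["time"]),
                      row["message"], "file") for row in reader]


if __name__ == "__main__":
    events = [from_stream_json('{"match": 42, "player": 7, "ts": 95, "msg": "gg"}')]
    events += from_csv_export("match_id,player_id,time,message\n42,3,120,nice try\n")
    for event in events:
        print(event)
```

Keeping a `source` field on every record makes it possible to trace a flagged message back to the system it came from once the sources are merged.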

A real-time, scalable architecture for detecting toxicity can simplify workflows for community relationship managers (CRMs) and filter millions of interactions down to manageable workloads. Similarly, alerting on severely toxic events in real time, or even automating a response such as muting players or quickly notifying a CRM of the incident, can have a direct impact on player retention. Likewise, a platform capable of processing large datasets from disparate sources can be used to monitor brand perception through reports and dashboards.
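One way to picture how detections turn into manageable workloads and automated responses is a small routing policy. The sketch below is hypothetical: the rule that `severe_toxic` or `threat` triggers a mute plus an alert, while any other toxic label goes to a review queue, is an assumption for illustration, not a policy from the accelerator.

```python
from collections import Counter
from enum import Enum
from typing import Iterable, List, Tuple


class Action(Enum):
    NONE = "none"
    REVIEW = "queue_for_review"        # a community manager looks at it later
    MUTE_AND_ALERT = "mute_and_alert"  # automated response plus real-time alert


# Hypothetical policy: labels treated as severe enough to act on immediately.
SEVERE_LABELS = {"severe_toxic", "threat"}


def route(labels: Iterable[str]) -> Action:
    """Map the labels predicted for one message to a moderation action."""
    label_set = set(labels)
    if label_set & SEVERE_LABELS:
        return Action.MUTE_AND_ALERT
    if label_set:
        return Action.REVIEW
    return Action.NONE


def triage(predictions: List[Tuple[str, List[str]]]) -> Counter:
    """Count how many messages land in each action bucket.

    `predictions` pairs a message id with its predicted labels. Messages with
    no labels need no human attention, which is what shrinks the workload.
    """
    return Counter(route(labels) for _, labels in predictions)


if __name__ == "__main__":
    batch = [("m1", []), ("m2", ["insult"]), ("m3", ["toxic", "threat"]), ("m4", [])]
    for action, count in triage(batch).items():
        print(action.value, count)
```

Only the `REVIEW` bucket reaches a person, and only the `MUTE_AND_ALERT` bucket needs a real-time path, so the two can be served by different downstream systems.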

Getting started

The goal of this solution accelerator is to help support the ongoing management of toxic interactions in online gaming by enabling real-time detection of toxic comments within in-game chat. Get started today by importing this solution accelerator directly into your Databricks workspace.

Once the accelerator is imported, you will have notebooks with two pipelines ready to move to production:

- ML pipeline using multi-label classification, trained on real-world English datasets from Google Jigsaw. The model classifies text and labels the forms of toxicity it contains.

- Real-time streaming inference pipeline that applies the toxicity model. The pipeline source can easily be modified to ingest chat data from the most common data sources.
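The streaming pipeline applies the trained model to chat as it arrives. On Databricks this would typically run on Spark Structured Streaming; the stdlib-only sketch below mimics the micro-batch idea so the control flow is visible. `classify_batch` is a hypothetical stand-in for the registered model (a toy keyword check, purely so the example runs), and the batch size is arbitrary.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List, Tuple

Scored = Tuple[str, List[str]]  # (message, predicted labels)


def micro_batches(stream: Iterable[str], size: int) -> Iterator[List[str]]:
    """Group a (possibly unbounded) iterator of messages into fixed-size micro-batches."""
    if size < 1:
        raise ValueError("size must be >= 1")
    it = iter(stream)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch


def classify_batch(messages: List[str]) -> List[List[str]]:
    """Hypothetical stand-in for the toxicity model: flags a toy keyword list."""
    blocked = {"noob", "trash"}  # illustrative only; a real model is learned, not a word list
    return [["insult"] if blocked & set(m.lower().split()) else [] for m in messages]


def run_inference(stream: Iterable[str], size: int,
                  model: Callable[[List[str]], List[List[str]]] = classify_batch
                  ) -> Iterator[Scored]:
    """Score each micro-batch with the model and emit (message, labels) pairs."""
    for batch in micro_batches(stream, size):
        for message, labels in zip(batch, model(batch)):
            yield message, labels


if __name__ == "__main__":
    chat = ["gg wp", "you are trash", "push mid", "noob team", "nice save"]
    for message, labels in run_inference(chat, size=3):
        if labels:
            print(message, labels)
```

Scoring whole batches rather than single messages mirrors how a model is usually applied to each micro-batch of a stream, and passing `model` as a parameter lets the toy classifier be swapped for a real one.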

With both of these pipelines, you can begin understanding and analyzing toxicity with minimal effort. The solution accelerator also provides a foundation to build, customize and improve the model with data relevant to your game’s mechanics and community.

