How to Build Scalable Data and AI Industrial IoT Solutions in Manufacturing

This is a collaborative post between Bala Amavasai of Databricks and Tredence, a Databricks consulting partner. We thank Vamsi Krishna Bhupasamudram, Director – Industry Solution, and Ashwin Voorakkara, Sr. Architect – IOT analytics, of Tredence for their contributions.

The most significant developments in manufacturing and logistics today are enabled by data and connectivity. To that end, the Industrial Internet of Things (IIoT) forms the backbone of digital transformation, as it’s the first step in the data journey from edge to artificial intelligence (AI).

The importance and growth of the IIoT technology stack can’t be overstated. According to several leading research firms, IIoT is expected to grow at a CAGR of more than 16% through 2027 to reach $263 billion globally. Numerous industry processes are driving this growth, such as automation, process optimization and networking, with a strong focus on machine-to-machine communication, big data analytics and machine learning (ML) delivering quality, throughput and uptime benefits to the aerospace, automotive, energy, healthcare, manufacturing and retail markets. Real-time data from sensors helps industrial edge devices and enterprise infrastructure make real-time decisions, resulting in better products, more agile production infrastructure, reduced supply chain risk and quicker time to market.

IIoT applications, as part of the broader Industry X.0 paradigm, connect industrial assets to enterprise information systems, business processes and the people at the heart of running the business. AI solutions built on top of these “things” and other operational data help unlock the full value of both legacy and newer capital investments by providing new real-time insights, intelligence and optimization, speeding up decision making and enabling progressive leaders to deliver transformational business outcomes and social value. Just as data is the new fuel, AI is the new engine propelling IIoT-led transformation.

Leveraging sensor data from the manufacturing shop floor or from a fleet of vehicles offers multiple benefits. The use of cloud-based solutions is key to driving efficiencies and improving planning. Use cases include:

- Predictive maintenance: reduce overall factory maintenance costs by 40%.

- Quality control and inspection: improve discrete manufacturing quality by up to 35%.

- Remote monitoring: ensure workers' health and safety.

- Asset monitoring: reduce energy usage by 4-10% in the oil and gas industry.

- Fleet management: make freight recommendations nearly 100% faster.

Getting started with industrial IoT solutions

The journey to achieving full value from Industry 4.0 solutions can be fraught with difficulties if the right decisions are not made early on. Manufacturers require a data and analytics platform that can handle the velocity and volume of data generated by IIoT, while also integrating unstructured data. Achieving the north star of Industry 4.0 requires careful design using proven technology, with user adoption and operational and technical maturity as the key considerations.

As part of their strategy, manufacturers will need to address these key questions regarding their data architecture:

- How much data needs to be collected in order to provide accurate forecasting/scheduling?

- How much historical data needs to be captured and stored?

- How many IoT devices and systems are generating data, and at what frequency?

- Does data need to be shared either internally or with partners?
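These questions lend themselves to a quick back-of-envelope estimate before any platform decision is made. The Python sketch below multiplies device count, tags per device, sampling frequency and an assumed record size to approximate daily and retained raw volume. The `SensorFleet` class, its field names and the example figures are hypothetical and chosen only for illustration; real sizes depend on the serialization format and on compression.

```python
from dataclasses import dataclass

SECONDS_PER_DAY = 86_400


@dataclass(frozen=True)
class SensorFleet:
    """Hypothetical description of one group of identical devices."""
    devices: int            # number of devices in the group
    tags_per_device: int    # signals (tags) each device reports
    sample_hz: float        # readings per second, per tag
    bytes_per_reading: int  # assumed serialized size of one reading


def readings_per_day(fleet: SensorFleet) -> int:
    """Total number of readings the fleet produces in 24 hours."""
    return int(fleet.devices * fleet.tags_per_device
               * fleet.sample_hz * SECONDS_PER_DAY)


def raw_bytes_per_day(fleet: SensorFleet) -> int:
    """Uncompressed bytes landed per day."""
    return readings_per_day(fleet) * fleet.bytes_per_reading


def retained_gib(fleet: SensorFleet, retention_days: int) -> float:
    """Uncompressed GiB held if every reading is kept for retention_days."""
    return raw_bytes_per_day(fleet) * retention_days / 2**30


# Illustrative numbers only: 200 devices, 50 tags each, sampled once a second.
fleet = SensorFleet(devices=200, tags_per_device=50,
                    sample_hz=1.0, bytes_per_reading=64)

print(f"readings/day: {readings_per_day(fleet):,}")
print(f"GiB/day:      {retained_gib(fleet, 1):.1f}")
print(f"GiB/year:     {retained_gib(fleet, 365):.1f}")
```

Even with modest assumptions the reading count runs to hundreds of millions per day, which is why sampling frequency and retention period are worth settling early.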

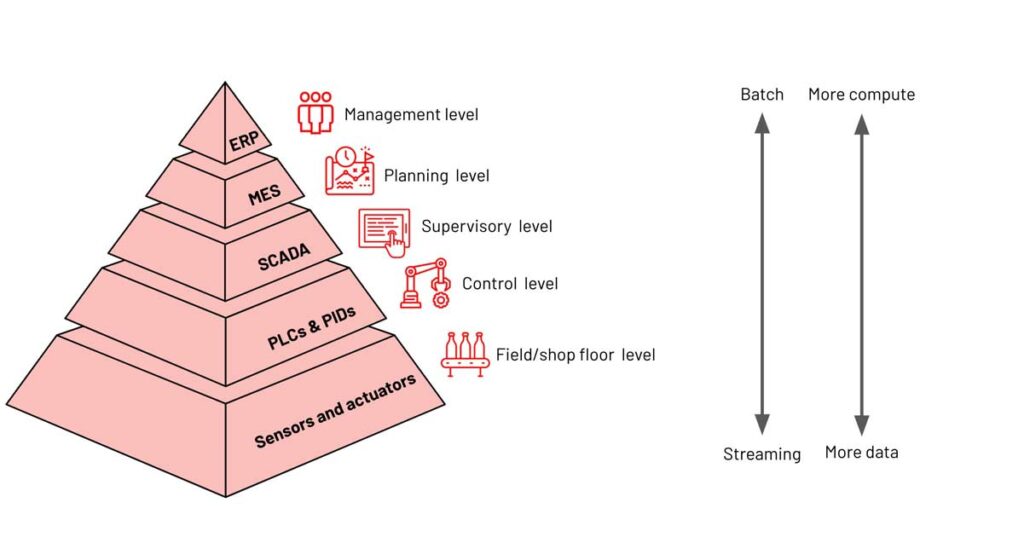

The automation pyramid in Figure 1 summarizes the different IT/OT layers in a typical manufacturing scenario. The granularity of data varies at different levels. Typically, the bottom end of the pyramid deals with the largest quantity of data, in streaming form. Analytics and machine learning at the top end of the pyramid largely rely on batch computing.

As manufacturers begin their journey to design and deliver the right platform architectures for their initiatives, there are some important challenges and considerations to keep in mind:

| Challenge | Required Capability |
| --- | --- |
| High data volume and velocity | The ability to capture and store high-velocity granular readings reliably and cost-effectively from streaming IoT devices |
| Multiple proprietary protocols in OT layers to extract data | Ability to transform data from multiple protocols to standard protocols like MQTT and OPC UA |
| Data processing needs are more complex | Low-latency time series data processing, aggregations, and mining |
| Curated data provisioning & analytics enablement for ML use cases | Heavy-duty, flexible compute for sophisticated AI/ML applications |
| Scalable IoT edge compatible ML development | Collaboratively train and deploy predictive models on granular, historic data. Streamline the data and model pipelines through an “ML-IoT ops” approach. |
| Edge ML, insights, and actions orchestration | Orchestration of real-time insights and autonomous actions |
| Streamlined edge implementation | Production deployment of data engineering pipelines and ML pipelines on relatively small form factor devices |
| Security and governance | Data governance implementation at different layers. Threat modeling across the value chain. |
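To make the time series aggregation capability in the table concrete, here is a minimal Python sketch of a tumbling-window mean over sensor readings. It is an in-memory illustration, not how a streaming engine implements windows (an engine would maintain this state incrementally and handle late data). The `Reading` type, the sensor name and the 60-second window are assumptions made for the example.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, NamedTuple, Tuple


class Reading(NamedTuple):
    sensor_id: str
    ts: float     # event time, epoch seconds
    value: float


def tumbling_window_mean(
    readings: Iterable[Reading], window_s: int = 60
) -> Dict[Tuple[str, int], float]:
    """Average each sensor's values over fixed, non-overlapping windows.

    Keys are (sensor_id, window_start_in_epoch_seconds).
    """
    buckets = defaultdict(list)
    for r in readings:
        # Floor the timestamp to the start of its window.
        window_start = int(r.ts // window_s) * window_s
        buckets[(r.sensor_id, window_start)].append(r.value)
    return {key: mean(values) for key, values in buckets.items()}


# Hypothetical sample: two readings in the first minute, one in the second.
sample = [
    Reading("press-07", 0.0, 1.0),
    Reading("press-07", 30.0, 3.0),
    Reading("press-07", 61.0, 5.0),
]
result = tumbling_window_mean(sample, window_s=60)
for (sensor, start), avg in sorted(result.items()):
    print(sensor, start, avg)
```

Reducing per-second readings to per-minute aggregates like this is one common way to cut the volume passed up the automation pyramid while keeping the raw data available for model training.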

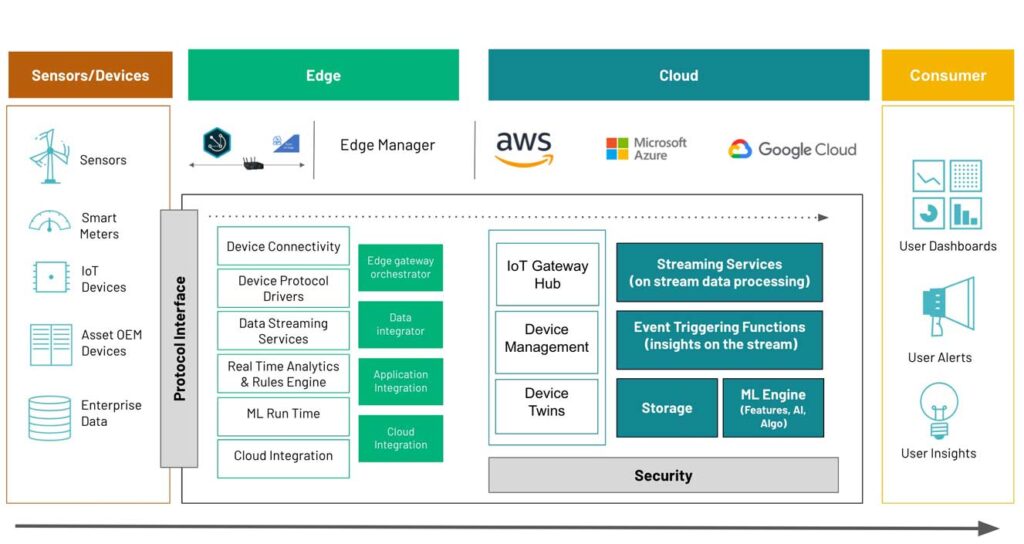

Irrespective of the platform and technology choices, there are fundamental building blocks that need to work together. Each of these building blocks needs to be accounted for in order for the architecture to work seamlessly.

A typical vendor-agnostic technical architecture, built around Databricks, is shown below. While Databricks’ capabilities address many of the needs, IIoT solutions are not an island and need many supporting services and solutions in order to work together. This architecture also provides some guidance on where and how to integrate these additional components.

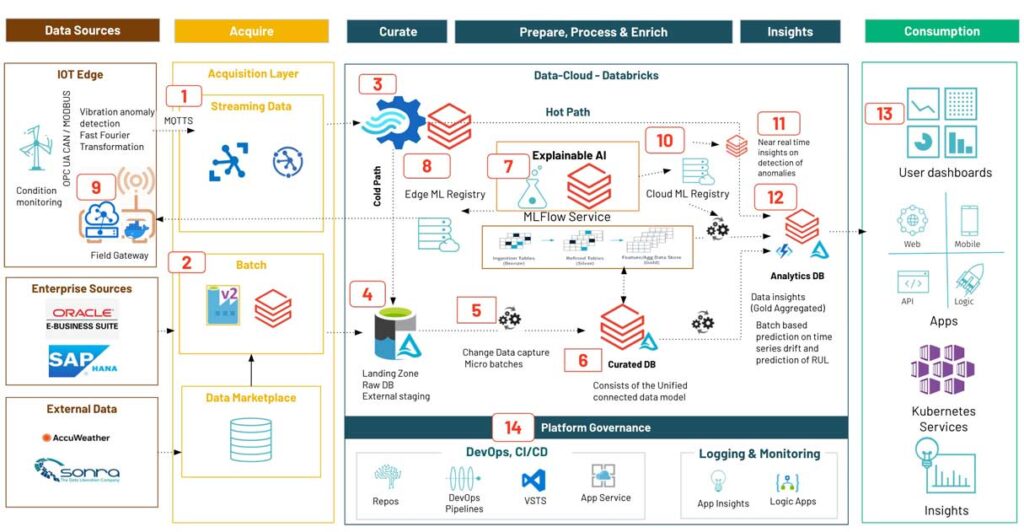

Unlike traditional data architectures, which are IT-based, in manufacturing there is an intersection between hardware and software that requires an OT (operational technology) architecture. OT has to contend with processes and physical machinery. Each component and aspect of this architecture is designed to address a specific need or challenge when dealing with industrial operations. The ordered numbers in the figure trace the data journey through the architecture:

1 - Connect multiple OT protocols, then ingest and stream IoT data from equipment in a scalable manner, with streamlined ingestion from data-rich OT devices (sensors, PLC/SCADA) into a cloud data platform

2 - Ingest enterprise and master data in batch mode

3,11 - Enable near real-time insights delivery

4 - Tuned raw data lake for data ingestion

5,6 - Develop data engineering pipelines to process and standardize data, remove anomalies and store in Delta Lake

7 - Enable data scientists to build ML models on the curated database

8,9,10 - Containerize and ship production-ready ML models to the edge, enabling edge analytics

12,13 - Aggregated database holds formatted insights, both real-time and batch, ready for consumption in any form

14 - CI/CD pipelines to automate the data engineering pipelines and deployment of ML models on edge and on hotpath/coldpath
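Steps 5 and 6 (process and standardize data, remove anomalies) can be illustrated with a small Python sketch. It assumes records arrive as dictionaries with `device_id`, `signal`, `ts` and `value` keys, converts Fahrenheit temperatures to Celsius, drops duplicate deliveries, and discards values outside a plausible range. The field names, the `VALID_RANGE` bounds and the simple range rule are all hypothetical stand-ins; a production pipeline would typically run equivalent logic in the data platform and use richer anomaly detection.

```python
from typing import Dict, Iterable, Iterator

# Hypothetical plausible ranges per signal, in standardized units.
VALID_RANGE = {
    "temperature_c": (-40.0, 150.0),
    "pressure_kpa": (0.0, 1000.0),
}


def standardize(record: Dict) -> Dict:
    """Return a copy of the record using standard signal names and units."""
    if record["signal"] == "temperature_f":
        celsius = (record["value"] - 32.0) * 5.0 / 9.0
        return {**record, "signal": "temperature_c", "value": celsius}
    return dict(record)


def clean(records: Iterable[Dict]) -> Iterator[Dict]:
    """Standardize records, then drop duplicates and out-of-range values."""
    seen = set()
    for raw in records:
        rec = standardize(raw)
        key = (rec["device_id"], rec["signal"], rec["ts"])
        if key in seen:
            continue  # duplicate delivery from the gateway
        seen.add(key)
        bounds = VALID_RANGE.get(rec["signal"])
        if bounds is None:
            continue  # unknown signal: no range to validate against
        low, high = bounds
        if low <= rec["value"] <= high:
            yield rec


# Hypothetical raw batch: a duplicate and an impossible negative pressure.
raw_batch = [
    {"device_id": "p1", "signal": "temperature_f", "ts": 1, "value": 212.0},
    {"device_id": "p1", "signal": "temperature_f", "ts": 1, "value": 212.0},
    {"device_id": "p1", "signal": "pressure_kpa", "ts": 1, "value": -5.0},
    {"device_id": "p1", "signal": "temperature_c", "ts": 2, "value": 25.0},
]
curated = list(clean(raw_batch))
for rec in curated:
    print(rec)
```

Keeping the raw batch untouched and writing the cleaned records to a separate curated table mirrors the split between the raw data lake (step 4) and the standardized data in Delta Lake (steps 5 and 6).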

6 reasons why you should embrace this architecture

There are six simple insights that will help you build a scalable IIoT architecture:

- A single edge platform should connect and ingest data from multiple OT protocols streaming innumerable tags

- The Lakehouse can transform data to insights in near real time with a Databricks jobs compute cluster (streaming) and process large volumes of data in batch with a data engineering cluster

- All purpose clusters allow ML workloads to be run on large volumes of data

- MLflow helps to containerize the model artifacts, which can be deployed on edge for real-time insights

- The Lakehouse architecture, built on Delta Lake, is open source and follows open standards, thus increasing software component compatibility without causing lock-in

- Ready to use AI notebooks and accelerators

Why the Lakehouse for IIoT solutions

In a manufacturing scenario, there are multiple data-rich sensors feeding multiple gateway devices, and data needs to land consistently in storage. The problems associated with this scenario are:

- Volume: due to the quantity of data producers within the system, the amount of data stored could sky-rocket, thus cost becomes a factor.

- Velocity: hundreds of sensors connected to tens of gateways on a typical manufacturing shop floor produce data at a rate that can easily overwhelm an ingestion pipeline not designed for it.

- Variety: data from the shopfloor does not always come in a structured tabular form and may be semi-structured or unstructured.

The Databricks Lakehouse Platform is well suited to managing large amounts of streaming data. Built on the foundation of Delta Lake, it lets you work with the large quantities of data streams delivered in small chunks from these multiple sensors and devices, providing ACID compliance and reducing job failures compared to traditional warehouse architectures. The Lakehouse platform is designed to scale with large data volumes.

Manufacturing produces multiple data types, both semi-structured (JSON, XML, MQTT payloads, etc.) and unstructured (video, audio, PDF, etc.), all of which the platform pattern supports. By bringing all these data types onto one platform, a single version of the truth exists, leading to more accurate outcomes.
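As an illustration of handling semi-structured data, the Python sketch below flattens a nested JSON gateway message into tabular rows, one per reading, so it can sit alongside structured data. The payload shape, including the `device` and `readings` keys and the `press-07` identifier, is a hypothetical example rather than a standard message format.

```python
import json
from typing import Dict, List


def flatten(obj: Dict, prefix: str = "") -> Dict:
    """Flatten nested dicts into one level, joining keys with dots."""
    flat = {}
    for key, value in obj.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{name}."))
        else:
            flat[name] = value
    return flat


def payload_to_rows(payload: str) -> List[Dict]:
    """Turn one gateway message into one row per reading.

    Device-level fields are repeated on every row.
    """
    doc = json.loads(payload)
    readings = doc.pop("readings", [])
    header = flatten(doc)
    return [{**header, **flatten(reading)} for reading in readings]


# Hypothetical message from a gateway.
message = """
{
  "device": {"id": "press-07", "line": "A"},
  "readings": [
    {"ts": 1, "temp_c": 71.5},
    {"ts": 2, "temp_c": 72.0}
  ]
}
"""
rows = payload_to_rows(message)
for row in rows:
    print(row)
```

Each resulting row has the same flat set of columns, which is the form most batch analytics and ML tooling expects.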

In addition to the lakehouse’s data management capabilities, it enables data teams to perform analytics and ML directly, without needing to make copies of the data, thus improving accuracy and efficiency. Storage is decoupled from compute, meaning the lakehouse can scale to many more concurrent users and larger data quantities.

Conclusion

Manufacturers that have invested in solutions built atop IIoT systems have seen not only large cost and productivity optimizations, but also an increase in revenue. The convergence of data from a multitude of sources is an ongoing challenge within manufacturing. Delivering value-driven outcomes depends on investing in the right architecture, one that can scale and cope with the volume and velocity of industrial data without incurring huge increases in costs. We at Databricks and Tredence believe that the data lakehouse architecture is a huge enabler. In future blog posts, we will build on this core architecture to demonstrate how value can be delivered by running meaningful data analysis and AI-driven analytics on this “repository” of big industrial data.
