A Tale About Vulnerability Research and Early Detection

This is a collaborative post between Databricks and Orca Security. We thank Yanir Tsarimi, Cloud Security Researcher, of Orca Security for their contribution.

Databricks’ number one priority is the safeguarding of our customer data. As part of our defense-in-depth approach, we work with security researchers in the community to proactively discover and remediate potential vulnerabilities in the Databricks platform so that they may be fixed before they become a risk to our customers. We do this through our private bug bounty and third-party penetration testing contracts.

We know that security is also top-of-mind for customers across the globe. So, to showcase our efforts we’d like to share a joint blog on a recent experience working with one of our security partners, Orca Security.

Orca Security, as part of an ongoing research effort, discovered a vulnerability in the Databricks platform. What follows below is Orca’s description of their process to discover the vulnerability, Databricks’ detection of Orca’s activities, and vulnerability response.

The vulnerability

In a Databricks workspace, a user can upload data and clone Git repositories in order to work with them. These files are stored within Databricks-managed cloud storage (e.g., AWS S3 object storage), in what Databricks refers to as a “file store.” Orca Security’s research focused on Databricks features that work with these uploaded files, specifically Git repository actions. One feature had a security issue: the ability to upload files to Git repositories.

The upload is performed in two different HTTP requests:

- A user’s file is uploaded to the server. The server returns a UUID file name.

- The upload is “finalized” by submitting the UUID file name(s).

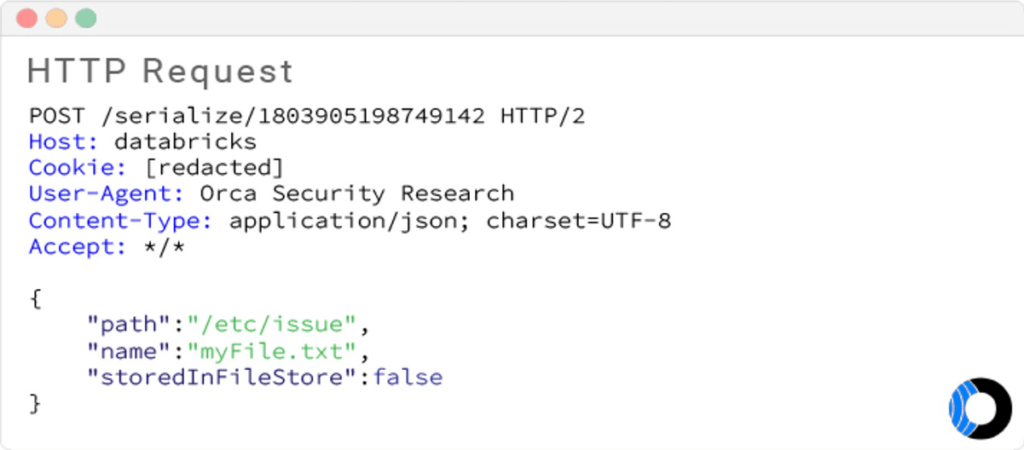
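The two-step flow above can be pictured with a small in-memory model. This is only an illustrative sketch: the class and method names (`UploadSession`, `stage`, `finalize`) are hypothetical and do not reflect Databricks' actual API or implementation.

```python
import uuid


class UploadSession:
    """In-memory stand-in for the two-step upload flow (hypothetical names)."""

    def __init__(self):
        # Maps each server-issued UUID file name to the uploaded bytes.
        self._staged = {}

    def stage(self, content: bytes) -> str:
        """Step 1: store the uploaded bytes and return a UUID file name."""
        staged_name = str(uuid.uuid4())
        self._staged[staged_name] = content
        return staged_name

    def finalize(self, staged_to_target: dict) -> dict:
        """Step 2: commit previously staged files under their final names."""
        repo_files = {}
        for staged_name, target_name in staged_to_target.items():
            # Only names issued in step 1 are accepted here.
            if staged_name not in self._staged:
                raise KeyError(f"unknown staged file: {staged_name}")
            repo_files[target_name] = self._staged.pop(staged_name)
        return repo_files


session = UploadSession()
first = session.stage(b"print('hello')\n")
second = session.stage(b"# notes\n")
committed = session.finalize({first: "hello.py", second: "NOTES.md"})
```

In this model, several files can be staged independently and then committed in a single finalize call.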

This procedure makes sense for uploading multiple files. Looking at the request sent to the server when confirming the file upload, the researcher noticed that the HTTP request is sent with three parameters:

    {
      "path": "the path from the first step",
      "name": "file name to create in the git repo",
      "storedInFileStore": false
    }

The last parameter caught the Orca researcher’s eye. They already knew the “file store” is actually the cloud provider’s object storage, so it was not clear what “false” meant.

The researcher experimented with the upload requests and determined that, when the file upload is “confirmed” after the first HTTP request, the uploaded file is stored locally on disk under "/tmp/import_xxx/...". The temporary import directory is prepended to the uploaded file name. The Orca researcher then needed to determine whether a directory traversal attack was possible. This involved sending a request for a local file name such as "../../../../etc/issue" and seeing whether it worked. It did not. The backend checked for traversals and did not allow the Orca researcher to complete the upload.

Databricks had prevented this attack, but further attempts revealed that the system was still vulnerable: although relative paths could not be used to traverse directories, the backend accepted an absolute path such as "/etc/issue". After uploading this file, the researcher verified the file contents via the Databricks web console, an indication that they might be able to read arbitrary files from the server’s filesystem.
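The post does not describe the backend code, so the following Python sketch is an assumption about one common way this behavior arises, not Databricks' implementation. Many path-joining functions discard the base directory when the second argument is absolute, so a check that only rejects ".." segments misses absolute paths. The directory name `IMPORT_DIR` and both function names are hypothetical.

```python
import posixpath

# Hypothetical staging directory, standing in for the temporary import directory.
IMPORT_DIR = "/tmp/import_example"


def naive_resolve(user_path: str) -> str:
    """Reject '..' segments, then join (illustrates the flawed pattern)."""
    if ".." in user_path.split("/"):
        raise ValueError("relative traversal rejected")
    # posixpath.join drops IMPORT_DIR entirely when user_path is absolute.
    return posixpath.join(IMPORT_DIR, user_path)


def safe_resolve(user_path: str) -> str:
    """Normalize the joined path and require it to stay under IMPORT_DIR."""
    candidate = posixpath.normpath(posixpath.join(IMPORT_DIR, user_path))
    if posixpath.commonpath([IMPORT_DIR, candidate]) != IMPORT_DIR:
        raise ValueError(f"path escapes import directory: {user_path!r}")
    return candidate


# The naive check blocks the relative traversal but lets the absolute path through.
leaked = naive_resolve("/etc/issue")
```

Checking the final normalized path, rather than looking for specific patterns in the input, covers both the relative and the absolute case. This sketch works on path strings only; a real service would also need to account for symbolic links, for example by resolving the path on the filesystem before comparing.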

To understand the severity of this issue without potentially compromising customer data, the Orca Security researcher carefully tried reading files under "/proc/self". The researcher determined that they could obtain certain information by reading environment variables from "/proc/self/environ". They then ran a script iterating over "/proc/self/fd/XX", which yielded read access to open log files. To ensure that no data was compromised, they paused the attack to alert Databricks of the findings.

Databricks’ detection and vulnerability response

Prior to notification, Databricks had already detected the anomalous behavior and begun to investigate, contain, and take countermeasures to repel further attacks. Databricks contacted Orca Security even before Orca was able to report the issue.

The Databricks team, as part of the Incident Response procedures, was able to identify the attack and vulnerability and deploy a fixed version within just a few hours. Databricks also determined that the exposed environmental information was not valid in the system at the time of the research. Databricks even identified the source of the requests and worked diligently with Orca Security to validate their detection and actions and further protect customers.

Orca Security would like to applaud the efforts of Databricks’ security team. To this day, this is the only time we’ve been detected while researching a system.
