Accelerating Innovation at JetBlue Using Databricks

This blog is authored by Sai Ravuru, Senior Manager of Data Science & Analytics at JetBlue.

The role of data in the aviation sector has a storied history. Airlines were among the first users of mainframe computers, and today their use of data has evolved to support every part of the business. Thanks in large part to the quality and quantity of data, airlines are among the safest modes of transportation in the world.

Airlines today must balance several variables occurring in tandem with each other in a chronological dance:

- Customers need to connect to their flights

- Bags need to be loaded onto flights and tracked to the same destination as customers

- Flight crews (e.g. pilots, flight attendants, commuting crews) need to be in position for their flights while meeting legal FAA duty and rest requirements

- Aircraft are constantly monitored for maintenance needs while ensuring parts inventory is available where needed

- Weather is dynamic across hundreds of critical locations and routes, and forecasts are vital for safe and efficient flight operations

- Government agencies are regularly updating airspace constraints

- Airport authorities are regularly updating airport infrastructure

- Government agencies are regularly updating airport slot restrictions and adjusting for geopolitical tensions

- Macroeconomic forces constantly affect the price of Jet-A aircraft fuel and Sustainable Aviation Fuels (SAF)

- Inflight situations arise for a variety of reasons and prompt active adjustments to the airline’s system

Data, and in particular analytics, AI and ML, is key for airlines to provide a seamless experience for customers while keeping operations efficient enough to meet business goals.

Airlines are among the most data-driven businesses in the world today because of the frequency, volume and variety of changes that occur while customers depend on this vital component of our transportation infrastructure.

For a single flight, for example from New York to London, hundreds of decisions have to be made based on factors encompassing customers, flight crews, aircraft sensors, live weather and live air traffic control (ATC) data. A large disruption such as a brutal winter storm can impact thousands of flights across the U.S. It is therefore vital for airlines to rely on real-time data, AI and ML to make proactive real-time decisions.

Aircraft generate terabytes of IoT sensor data over the span of a day. Customer interactions with booking and self-service channels, along with constant operational changes stemming from dynamic weather conditions and air traffic constraints, are just some of the other items that highlight the complexity, volume, variety and velocity of data at an airline such as JetBlue.

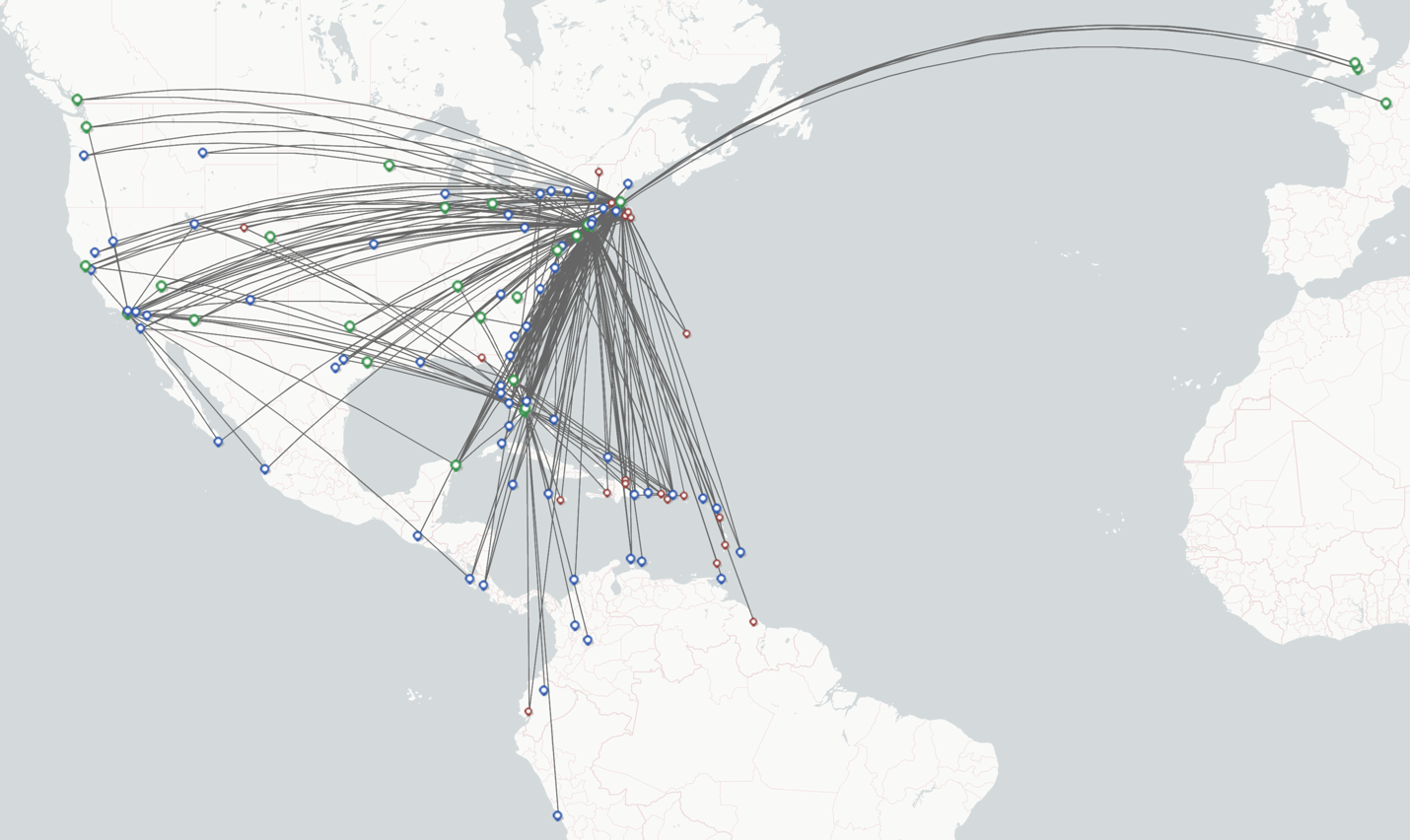

With six focus cities (Boston, Fort Lauderdale, Los Angeles, New York City, Orlando, San Juan) and a heavy concentration of flights in the world’s busiest airspace corridor, New York City, JetBlue in 2023 has:

State of Data and AI at JetBlue

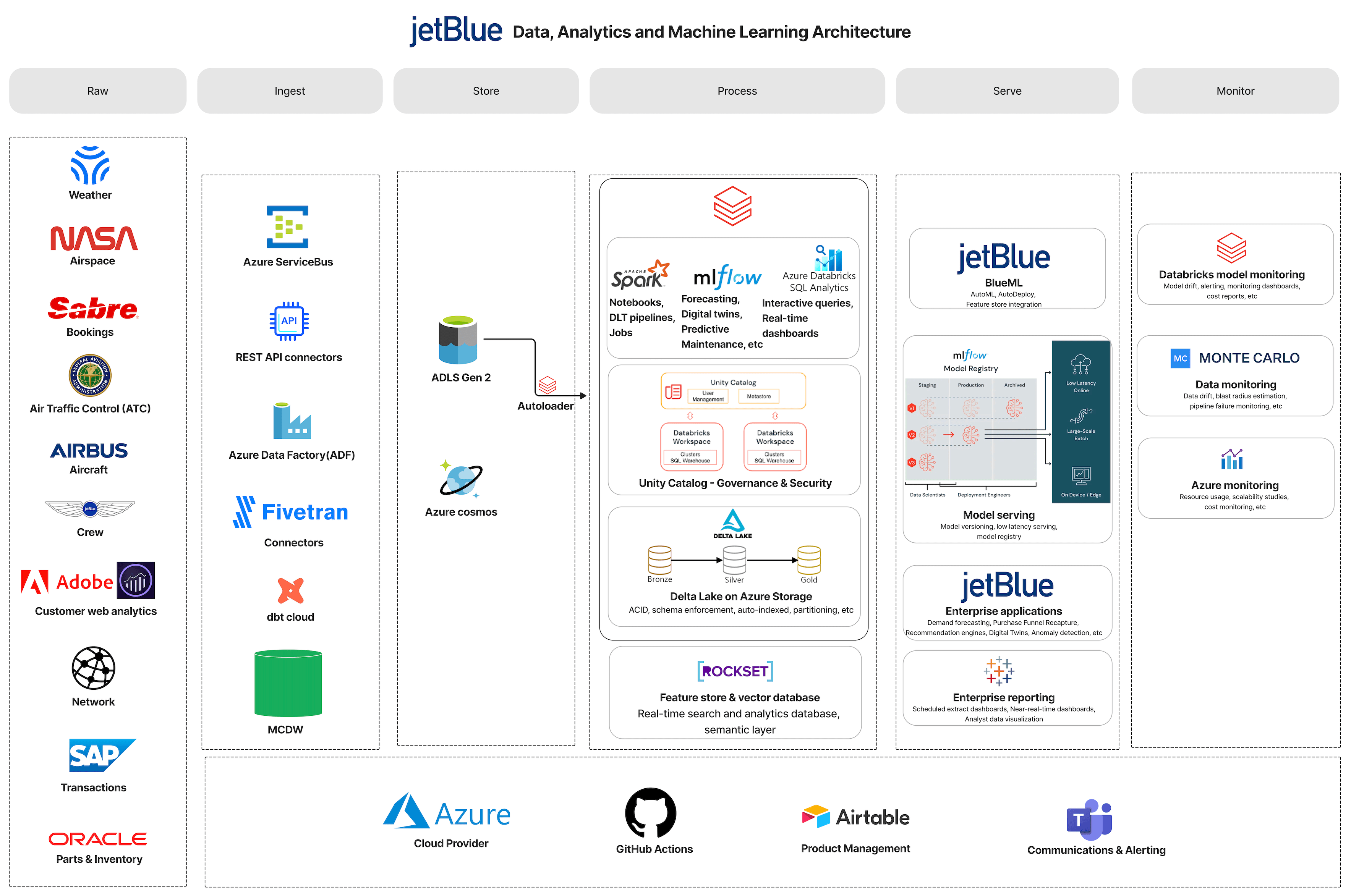

Due to the strategic importance of data at JetBlue, the data team comprises Data Integration, Data Engineering, Commercial Data Science, Operations Data Science, AI & ML engineering, and Business Intelligence teams reporting directly to the CTO.

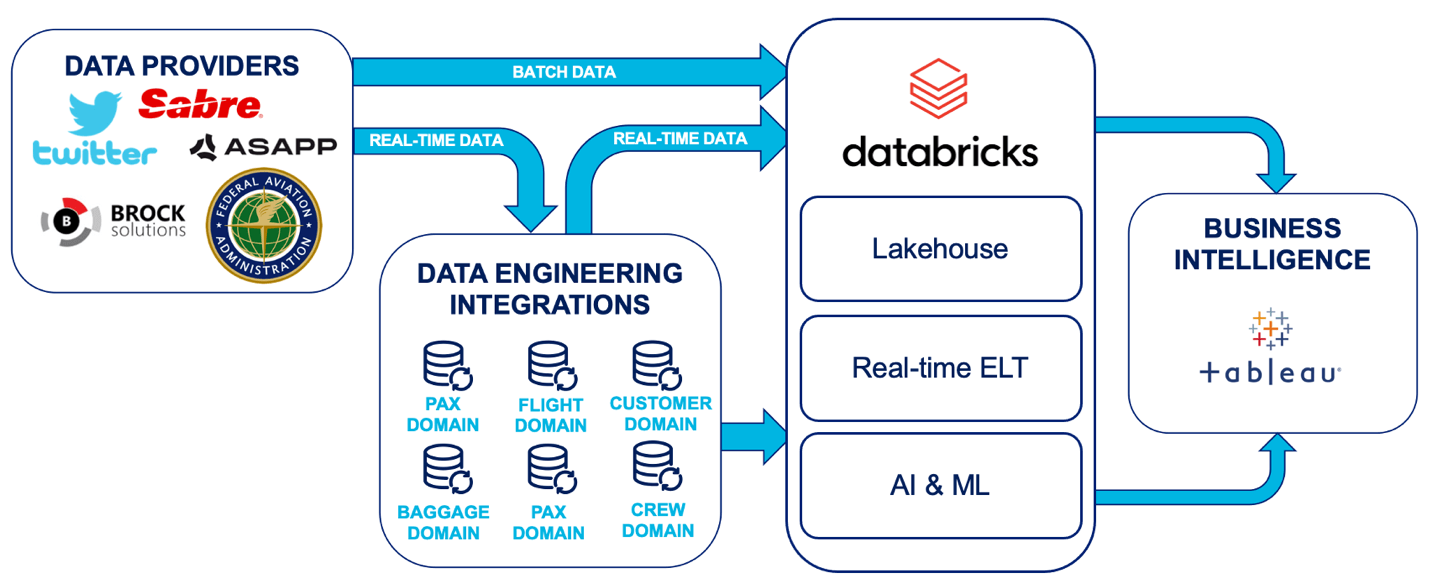

JetBlue's current technology stack is mostly centered on Azure, with a Multi-Cloud Data Warehouse and a lakehouse architecture running simultaneously for various purposes. Both internal and external data are continuously enriched in the Databricks Data Intelligence Platform in the form of batch, near-real-time, and real-time feeds.

Using Delta Live Tables to extract, load, and transform data allows Data Engineers and Data Scientists to fulfill a wide range of latency SLA requirements while feeding data to downstream applications, AI and ML pipelines, BI dashboards, and analyst needs.

JetBlue uses the internally built BlueML library with AutoML, AutoDeploy, and online feature store features, as well as MLflow, model registry APIs, and custom dependencies for AI and ML model training and inference.

Insights are consumed using REST APIs that connect Tableau dashboards to Databricks SQL serverless compute, a fast-serving semantic layer, and/or deployed ML serving APIs.
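As a rough illustration of the last consumption path, the sketch below builds (but does not send) a REST request to a deployed ML serving API. The workspace URL, endpoint name, token placeholder, and feature names are all hypothetical; the `/serving-endpoints/<name>/invocations` path and the `dataframe_records` payload key follow the request format documented for Databricks Model Serving, and this is not a description of JetBlue's actual client code.

```python
import json
import urllib.request


def build_scoring_request(workspace_url, endpoint_name, token, records):
    """Build (but do not send) a POST request for a model serving endpoint."""
    url = f"{workspace_url}/serving-endpoints/{endpoint_name}/invocations"
    body = json.dumps({"dataframe_records": records}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


request = build_scoring_request(
    workspace_url="https://example-workspace.azuredatabricks.net",  # hypothetical
    endpoint_name="flight-delay-model",  # hypothetical endpoint name
    token="<personal-access-token>",  # placeholder, never hard-code real tokens
    records=[{"origin": "JFK", "destination": "LHR", "scheduled_hour": 19}],
)
# Passing `request` to urllib.request.urlopen would send it; omitted here.
```

A dashboard or downstream service would send the request and read the predictions from the JSON response body.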

Deployment of new ML products is often accompanied by robust change management processes, particularly in lines of business closely governed by Federal Air Regulations and other laws due to the sensitivity of data and respective decision-making. Traditionally, such change management has entailed a series of workshops, training, product feedback, and more specialized ways for users to interact with the product, such as role-specific KPIs and dashboards.

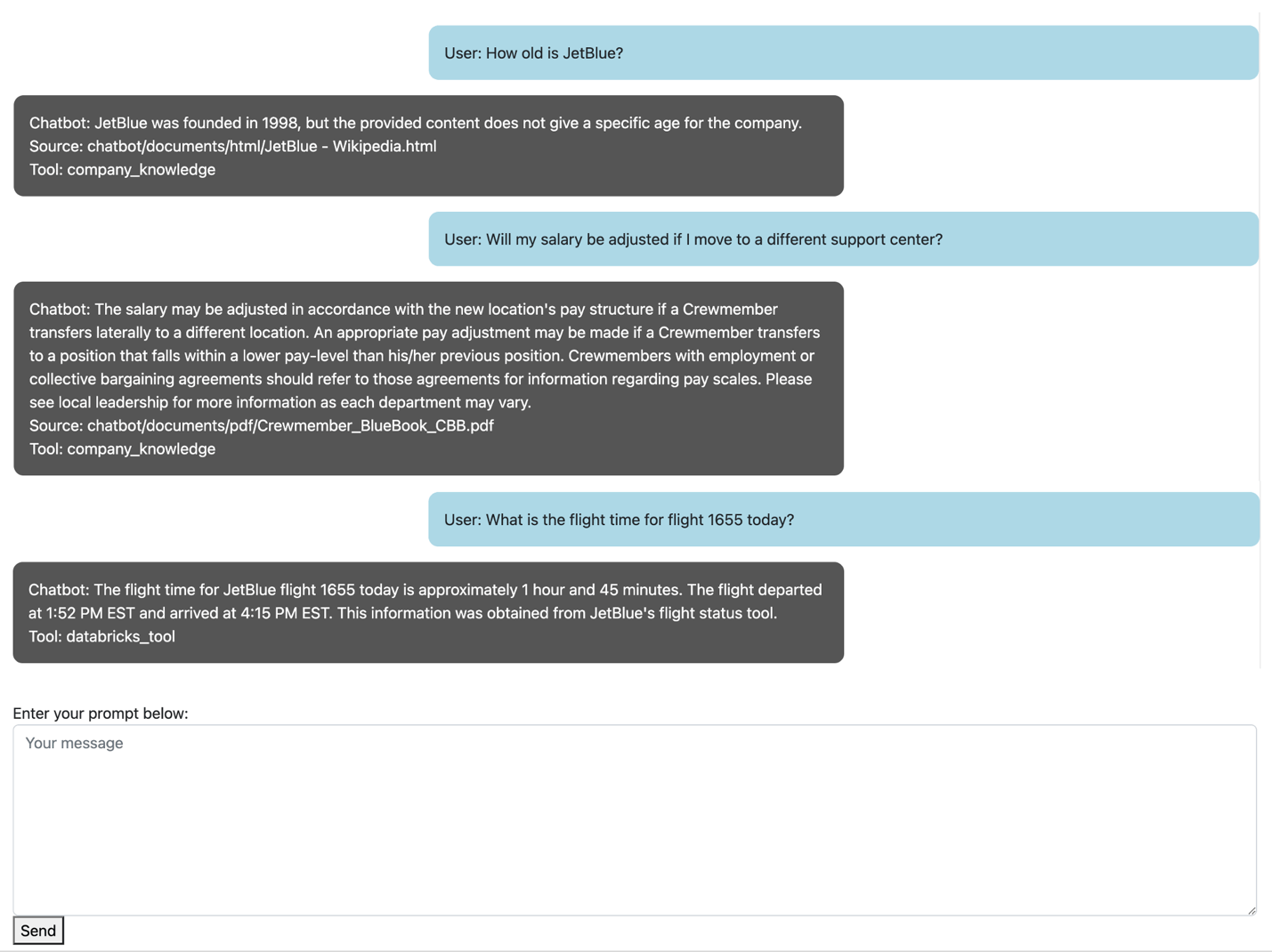

In light of recent advancements in Generative AI, traditional change management and ML product management have been disrupted. Users can now use sophisticated Large Language Model (LLM) technology to reach role-specific KPIs and information, including help, using natural language they’re familiar with. This drastically reduces the training required for successful product scaling among users and the turnaround time for product feedback and, most importantly, simplifies access to a relevant summary of insights. Access to information is no longer measured in clicks but in the number of words in the question.

To meet these Generative AI and ML needs, JetBlue's AI and ML engineering team focused on the enterprise-wide challenges.

| Line of business | Strategic Product(s) | Strategic Outcome(s) |
| --- | --- | --- |
| Commercial Data Science | | |
| Operations Data Science | | |
| AI & ML engineering | | |
| Business Intelligence | | |

Using this architecture, JetBlue has accelerated AI and ML deployments across a wide range of use cases spanning four lines of business, each with its own AI and ML team. The following are the fundamental functions of the business lines:

- Commercial Data Science (CDS) - Revenue growth

- Operations Data Science (ODS) - Cost reduction

- AI & ML engineering - Go-to-market product deployment optimization

- Business Intelligence - Reporting enterprise scaling and support

Each business line supports multiple strategic products that are prioritized regularly by JetBlue leadership to establish KPIs that lead to effective strategic outcomes.

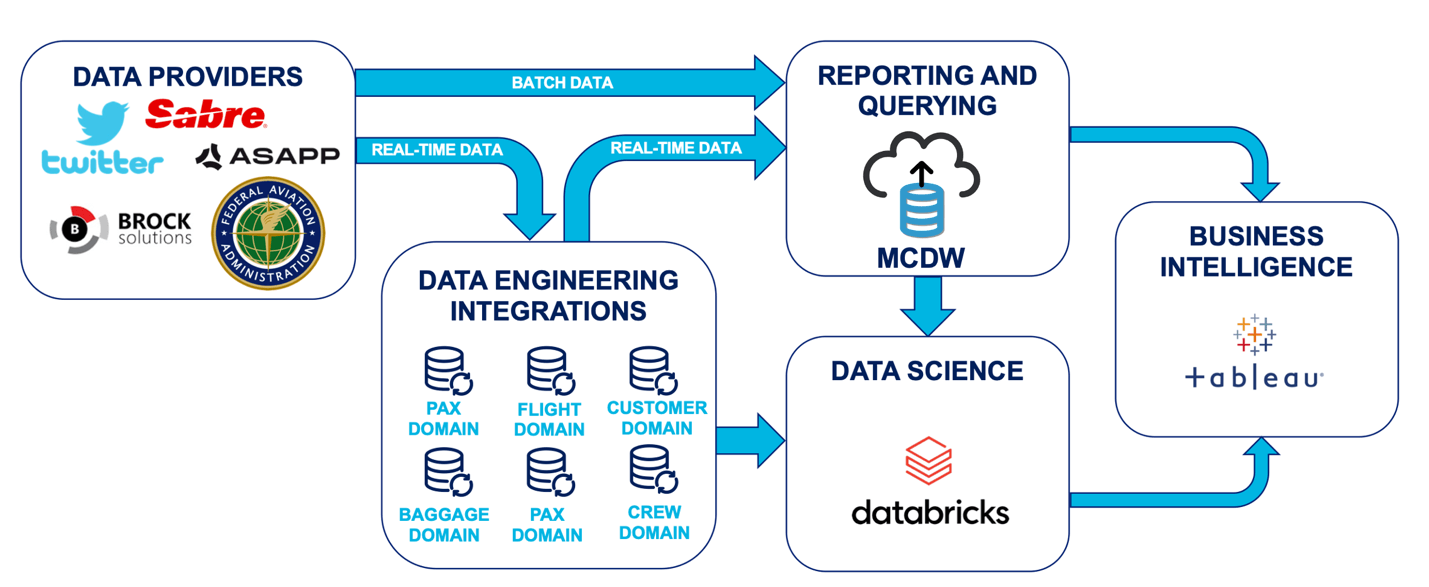

Why move from a Multi-Cloud Data Warehouse Architecture

Data and AI technology are critical in making proactive real-time decisions; however, relying on legacy data architecture platforms hurts business outcomes.

JetBlue data is served primarily through the Multi-Cloud Data Warehouse, which leaves little flexibility for complex designs, changing latency requirements, and cost-effective scaling.

- High Latency - a 10-minute data architecture latency costs the organization millions of dollars per year.

- Complex Architecture - multiple stages of data movement across multiple platforms and products is inefficient for real-time streaming use cases as it is complex and cost-prohibitive.

- High Platform TCO - having numerous vendor data platforms and resources to manage the data platform incurs high operating costs.

- Scaling up - the current data architecture has scaling issues when processing exabytes (large amounts of data) generated by many flights.

In the traditional architecture, high latency and the lack of online feature store hydration prevented our data scientists from constructing scalable ML training and inference pipelines. Once data scientists and AI & ML engineers were free to build ML models directly against the medallion architecture in the lakehouse, go-to-market efficiency was unlocked.
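To make "online feature store hydration" concrete, here is a toy sketch in plain Python. The class, key names, and feature names are hypothetical and stand in for a managed feature store: every update is appended to an offline history (for training), while an online view keeps only the freshest values per key (for low-latency inference).

```python
class FeatureStore:
    """Toy feature store with an offline history and an online latest-value view."""

    def __init__(self):
        self.offline = []  # append-only history, used to build training sets
        self.online = {}   # latest features per key, used at inference time

    def ingest(self, key, ts, features):
        """Record an update and hydrate the online view if it is the newest."""
        self.offline.append({"key": key, "ts": ts, **features})
        current = self.online.get(key)
        if current is None or ts >= current["ts"]:
            self.online[key] = {"ts": ts, **features}

    def lookup(self, key):
        """Constant-time read of the freshest features for one key."""
        return self.online.get(key)


store = FeatureStore()
store.ingest("flight-1", ts=100, features={"dep_delay_min": 5})
store.ingest("flight-1", ts=160, features={"dep_delay_min": 20})
store.ingest("flight-1", ts=130, features={"dep_delay_min": 12})  # arrives late

latest = store.lookup("flight-1")
```

The out-of-order update at `ts=130` is kept in the offline history but does not overwrite the newer online value, which is the property an inference pipeline relies on.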

Complex requirements, such as dynamic schema management and stateful/stateless transformations, were challenging to implement with a classic multi-cloud data warehouse architecture. Both data scientists and data engineers can now make such changes using scalable Delta Live Tables with no barriers to entry. The option to move between SQL, Python, and PySpark has significantly increased productivity for the JetBlue Data team.

Because multi-cloud data warehouses lack an open-source, scalable design, pipelines could not scale up quickly. The result was complex Root Cause Analyses (RCAs) when pipelines failed, inefficient testing and troubleshooting, and ultimately a higher TCO. The data team closely tracked compute expenses on the Multi-Cloud Data Warehouse (MCDW) versus Databricks during the transition; as more real-time and high-volume data feeds were activated for consumption, ETL/ELT costs on Databricks grew linearly and at a proportionally lower rate than ETL/ELT costs on the legacy MCDW.
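The distinction between stateless and stateful transformations, and what dynamic schema handling means, can be sketched in plain Python. This is only an illustration of the concepts, not Delta Live Tables code; the event fields (`airport`, `temp_f`, `wind_kt`) and function names are hypothetical.

```python
from collections import defaultdict


def to_celsius(event):
    """Stateless: the output depends only on the current event."""
    out = dict(event)
    out["temp_c"] = round((event["temp_f"] - 32) * 5 / 9, 1)
    return out


class RunningMean:
    """Stateful: keeps a per-key count and sum across events."""

    def __init__(self):
        self._count = defaultdict(int)
        self._total = defaultdict(float)

    def update(self, key, value):
        self._count[key] += 1
        self._total[key] += value
        return self._total[key] / self._count[key]


def merge_schema(known_columns, event):
    """Dynamic schema handling: append columns that have not been seen before."""
    return known_columns + [c for c in event if c not in known_columns]


events = [
    {"airport": "JFK", "temp_f": 32.0},
    {"airport": "JFK", "temp_f": 50.0},
    {"airport": "BOS", "temp_f": 41.0, "wind_kt": 12},  # a new column appears
]

schema = []
mean_temp = RunningMean()
rows = []
for event in events:
    schema = merge_schema(schema, event)
    row = to_celsius(event)
    row["mean_temp_c"] = mean_temp.update(row["airport"], row["temp_c"])
    rows.append(row)
```

In a streaming engine the state held by `RunningMean` would be checkpointed and the schema change handled by the platform; the sketch only shows why the two kinds of transformation place different demands on the architecture.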

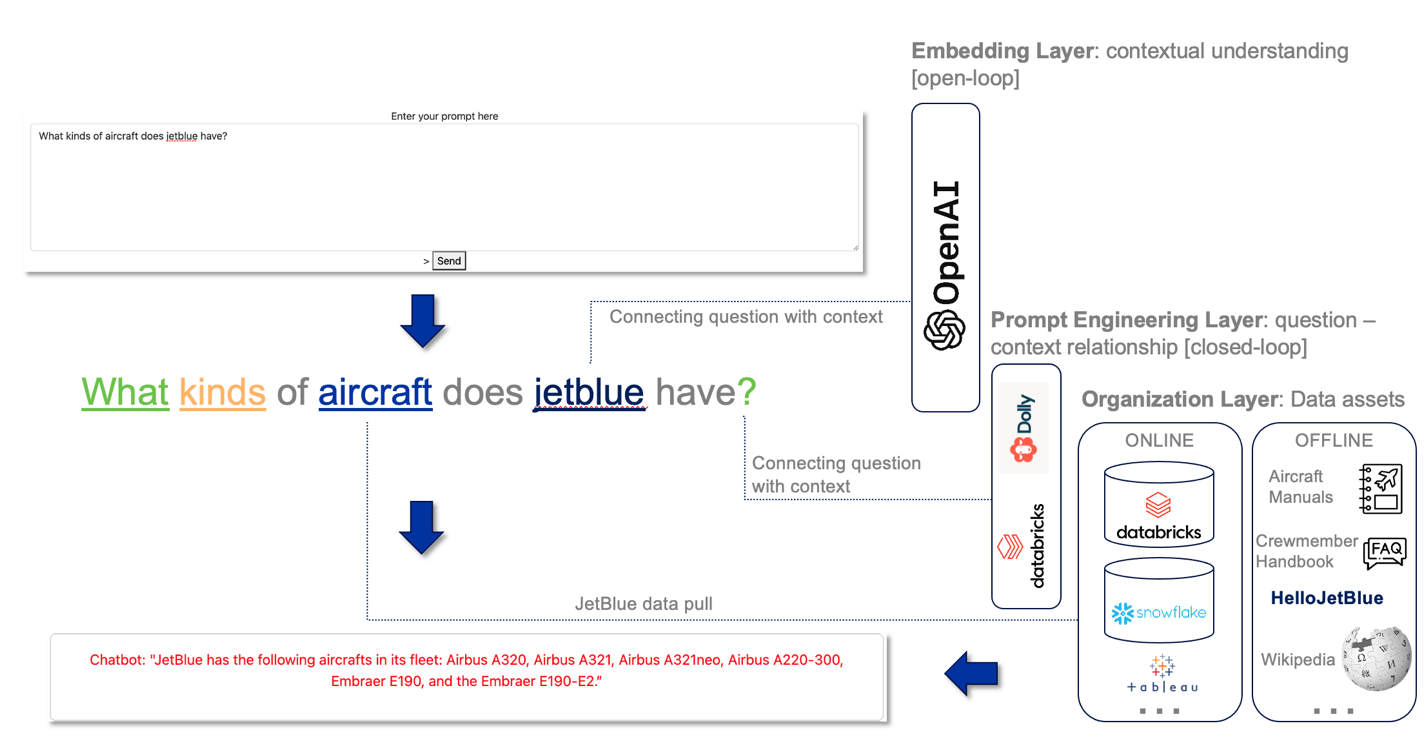

Data governance is the biggest obstacle to deploying generative AI and machine learning in any organization. Because role-based access to crucial data and insights is closely monitored in highly regulated industries like aviation, these industries take pride in effective data governance procedures. The need for curated embeddings, which are only possible with sophisticated models of 100+ billion parameters such as OpenAI's ChatGPT, complicates the organization's data governance. Effective Generative AI governance requires a combination of OpenAI for embeddings, Databricks' Dolly 2.0 for prompt engineering, and JetBlue's offline/online document repository.

Previous Multi-Cloud Data Warehouse Architecture

Impact of the Lakehouse Architecture

With the Databricks Data Intelligence Platform serving as the central hub for all streaming use cases, JetBlue efficiently delivers several ML and analytics products/insights by processing thousands of attributes in real-time. These attributes include flights, customers, flight crew, air traffic, and maintenance data.

The lakehouse provides real-time data through Delta Live Tables, enabling the development of historical training and real-time inference ML pipelines. These pipelines are deployed as ML serving APIs that continuously update a snapshot of the JetBlue system network. Any operational impact resulting from various controllable and uncontrollable variables, such as rapidly changing weather, aircraft maintenance events with anomalies, flight crews nearing legal duty limits, or ATC restrictions on arrivals/departures, is propagated through the network. This allows for pre-emptive adjustments based on forecasted alerts.
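How a single disruption propagates through the network can be illustrated with a deliberately simple model of one aircraft's rotation. The leg identifiers, schedule times, and the 40-minute minimum turn time are hypothetical, and the model assumes unchanged block times; it is a sketch of the idea, not the forecasting logic JetBlue runs.

```python
from dataclasses import dataclass


@dataclass
class Leg:
    flight: str
    sched_dep: int  # minutes after midnight
    sched_arr: int  # minutes after midnight


MIN_TURN = 40  # hypothetical minimum ground time between legs, in minutes


def propagate_delay(rotation, initial_delay):
    """Return the departure delay of each leg flown by one aircraft, in order.

    A late arrival pushes back the next departure only once the slack
    (scheduled ground time minus the minimum turn time) is used up.
    """
    delays = {}
    arrival_delay = initial_delay
    for i, leg in enumerate(rotation):
        if i == 0:
            dep_delay = initial_delay
        else:
            prev = rotation[i - 1]
            slack = leg.sched_dep - prev.sched_arr - MIN_TURN
            dep_delay = max(0, arrival_delay - slack)
        delays[leg.flight] = dep_delay
        arrival_delay = dep_delay  # assumes block time is unchanged
    return delays


rotation = [
    Leg("LEG-1", 480, 600),  # 08:00 to 10:00
    Leg("LEG-2", 650, 770),  # 10:50 to 12:50, 10 minutes of slack
    Leg("LEG-3", 840, 960),  # 14:00 to 16:00, 30 minutes of slack
]
alerts = propagate_delay(rotation, initial_delay=45)
```

With a 45-minute delay on the first leg, the later legs inherit 35 and 5 minutes respectively, which is the kind of forecasted alert that allows a pre-emptive adjustment such as an aircraft swap.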

Current Lakehouse Architecture

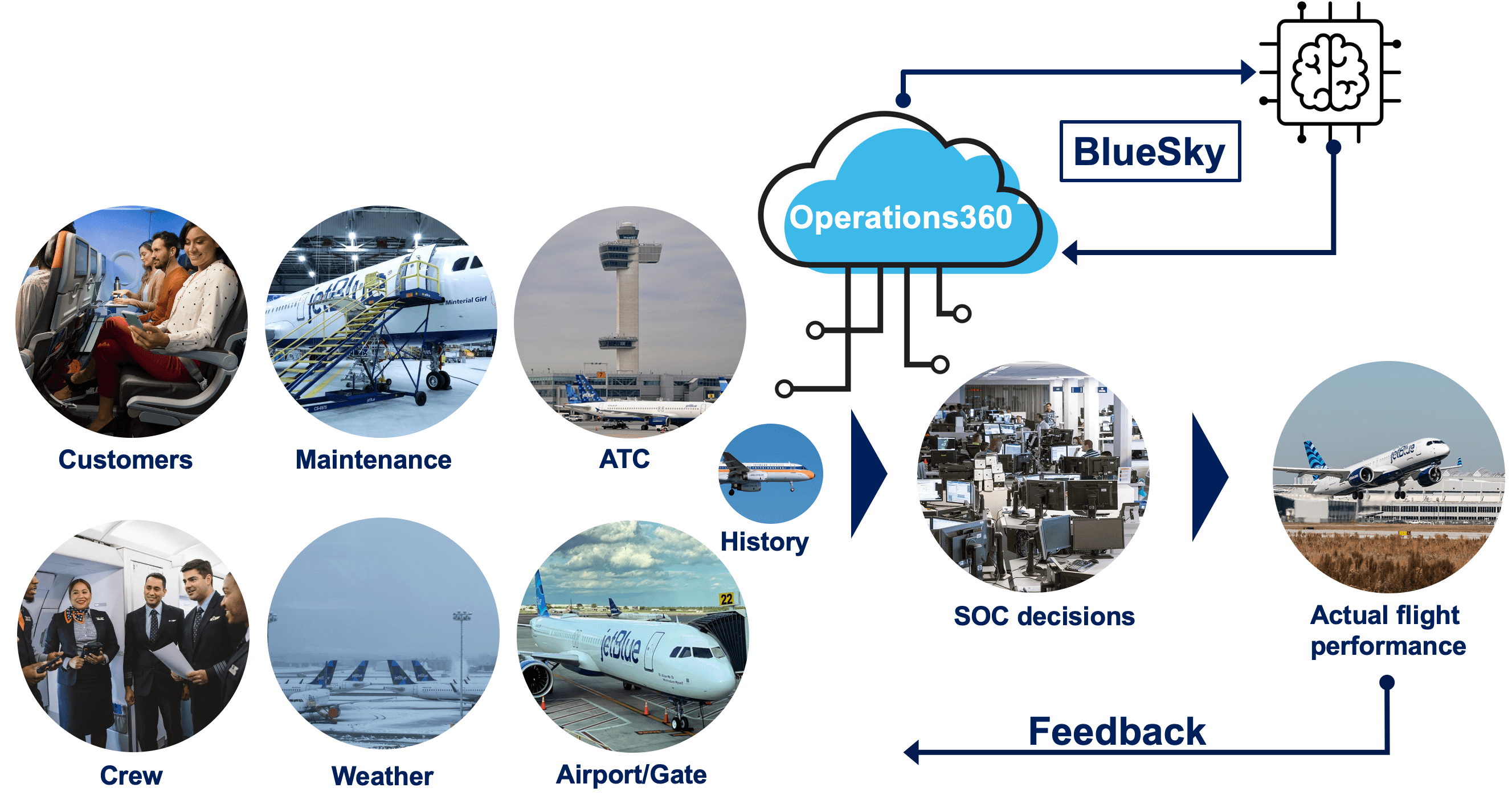

Real-time streams of weather, aircraft sensors, FAA data feeds, JetBlue operations and more feed BlueSky, the world's first AI and ML operating system orchestrating a digital twin, for efficient and safe operations. JetBlue has over 10 ML products (multiple models for each product) in production across various verticals including dynamic pricing, customer recommendation engines, supply chain optimization, customer sentiment NLP and several more.

The BlueSky operations digital twin is one of the most complex products currently being implemented at JetBlue by the data team and forms the backbone of JetBlue's airline operations forecasting and simulation capabilities.

BlueSky, which is now being phased in, is unlocking operational efficiencies at JetBlue through proactive and optimal decision-making, resulting in higher customer satisfaction, flight crew satisfaction, fuel efficiency, and cost savings for the airline.

Additionally, the team combined Microsoft Azure OpenAI APIs and Databricks Dolly to create a robust solution that meets Generative AI governance requirements, expediting the successful growth of BlueSky and similar products with minimum change management and efficient ML product management.

The Microsoft Azure OpenAI API service offers sandboxed embeddings that can be downloaded and stored in a vector database document store. Databricks' Dolly 2.0 provides a mechanism for prompt engineering by allowing Unity Catalog role-based access to documents in the vector database document store. Using this framework, any JetBlue user can access the same chatbot, which sits behind Azure AD SSO protocols and Databricks Unity Catalog Access Control Lists (ACLs). Every product, including the BlueSky real-time digital twin, ships with embedded LLMs.
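The governance pattern described here, where one chatbot serves every user but only retrieves documents the user's role may see, can be sketched as follows. The document IDs, roles, and three-dimensional vectors are hypothetical stand-ins: real embeddings would come from an embeddings API, and access rules would be enforced by the catalog's ACLs rather than an in-memory set.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Toy document store: each entry carries the roles allowed to read it.
DOCUMENTS = [
    {"id": "crew-duty-limits", "roles": {"crew_ops"}, "vec": [0.9, 0.1, 0.0]},
    {"id": "fuel-kpis", "roles": {"finance", "ops_control"}, "vec": [0.1, 0.9, 0.0]},
    {"id": "gate-delays", "roles": {"ops_control", "crew_ops"}, "vec": [0.7, 0.3, 0.1]},
]


def retrieve(query_vec, user_roles, top_k=2):
    """Filter by role first, then rank the permitted documents by similarity."""
    allowed = [d for d in DOCUMENTS if d["roles"] & user_roles]
    ranked = sorted(allowed, key=lambda d: cosine(query_vec, d["vec"]), reverse=True)
    return [d["id"] for d in ranked[:top_k]]


def build_prompt(question, doc_ids):
    """Assemble a prompt that restricts the model to the retrieved context."""
    context = ", ".join(doc_ids) if doc_ids else "no accessible documents"
    return f"Answer using only: {context}\nQuestion: {question}"


query = [1.0, 0.0, 0.0]
crew_docs = retrieve(query, {"crew_ops"})
finance_docs = retrieve(query, {"finance"})
prompt = build_prompt("Which crews are near their duty limits?", crew_docs)
```

Filtering before ranking means a document outside the user's role can never reach the prompt, regardless of how similar it is to the question.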

By deploying AI and ML enterprise products on Databricks using data in the lakehouse, JetBlue has thus far unlocked a relatively high Return-on-Investment (ROI) multiple within two years. In addition, Databricks allows the Data Science and Analytics teams to rapidly prototype, iterate and launch data pipelines, jobs and ML models using the lakehouse, MLflow and Databricks SQL.

Our dedicated team at JetBlue is excited about the future as we strive to implement the latest cutting-edge features offered by Databricks. By leveraging these advancements, we aim to elevate our customers' experience to new heights and continuously improve the overall value we provide. One of our key objectives is to lower our total cost of ownership (TCO), ensuring our customers receive optimal returns on their investments.

Join us at the 2023 Data + AI Summit, where we will discuss the power of the lakehouse during the Keynote, dive deep into our fascinating Real-Time AI & ML Digital Twin Journey and provide insights into how we navigated complexities of Large Language Models.

Watch the video of our story here.
