Announcing General Availability of Databricks Model Serving

Simplified Production ML on the Databricks Lakehouse Platform

We are thrilled to announce the general availability of Databricks Model Serving. Model Serving deploys machine learning models as a REST API, allowing you to build real-time ML applications such as personalized recommendations, customer service chatbots, and fraud detection, all without the hassle of managing serving infrastructure.

With the launch of Databricks Model Serving, you can now deploy your models alongside your existing data and training infrastructure, simplifying the ML lifecycle and reducing operational costs.

"By doing model serving on the same platform where our data lives and where we train models, we have been able to accelerate deployments and reduce maintenance, ultimately helping us deliver for our customers and drive more enjoyable and sustainable living around the world." - Daniel Edsgärd, Head of Data Science at Electrolux

Challenges with building real-time ML Systems

Real-time machine learning systems are revolutionizing how businesses operate by providing the ability to make immediate predictions or actions based on incoming data. Applications such as chatbots, fraud detection, and personalization systems rely on real-time systems to provide instant and accurate responses, improving customer experiences, increasing revenue, and reducing risk.

However, implementing such systems remains a challenge for businesses. Real-time ML systems need fast and scalable serving infrastructure, which requires expert knowledge to build and maintain. The infrastructure must not only support serving but also include feature lookups, monitoring, automated deployment, and model retraining. Teams often end up stitching together disparate tools, which increases operational complexity and creates maintenance overhead. As a result, businesses spend more time and resources on maintaining infrastructure than on integrating ML into their processes.

Production Model Serving on the Lakehouse

Databricks Model Serving is the first serverless real-time serving solution developed on a unified data and AI platform. This unique serving solution accelerates data science teams' path to production by simplifying deployments and reducing mistakes through integrated tools.

Eliminate management overhead with real-time Model Serving

Databricks Model Serving provides a highly available, low-latency, serverless service for deploying models behind an API. You no longer have to deal with the burden of managing scalable infrastructure. Our fully managed service takes care of the heavy lifting for you, eliminating the need to manage instances, maintain version compatibility, and apply patches. Endpoints automatically scale up or down as demand changes, saving infrastructure costs while optimizing latency.
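To make "models behind an API" concrete, here is a minimal sketch of how a client might build a scoring request for a serving endpoint. The workspace URL, endpoint name, token, and feature columns are all hypothetical placeholders, and the `/serving-endpoints/<name>/invocations` path and `dataframe_records` payload reflect our understanding of the REST interface, so check them against the Model Serving documentation before relying on them. The sketch only builds the request and does not send it.

```python
import json
import urllib.request


def build_scoring_request(workspace_url, endpoint_name, token, records):
    """Build (but do not send) a POST request that scores `records`."""
    url = f"{workspace_url.rstrip('/')}/serving-endpoints/{endpoint_name}/invocations"
    # One JSON object per input row; the column names are whatever the model expects.
    body = json.dumps({"dataframe_records": records}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


request = build_scoring_request(
    "https://example.cloud.databricks.com",    # hypothetical workspace URL
    "fraud-detector",                          # hypothetical endpoint name
    "<personal-access-token>",                 # placeholder, not a real token
    [{"amount": 129.99, "merchant_id": 42}],   # hypothetical feature columns
)
# Sending the request would be: urllib.request.urlopen(request)
```

Because the endpoint is a plain HTTPS API, any application that can issue an authenticated POST can consume predictions in the same way.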

"The fast autoscaling keeps costs low while still allowing us to scale as traffic demand increases. Our team is now spending more time building models solving customer problems rather than debugging infrastructure-related issues." - Gyuhyeon Sim, CEO at Letsur.ai

Accelerate deployments through Lakehouse-Unified Model Serving

Databricks Model Serving accelerates deployments of ML models through native integrations with other Lakehouse services. You can now manage the entire ML process, from data ingestion and training to deployment and monitoring, on a single platform, creating a consistent view across the ML lifecycle that minimizes errors and speeds up debugging. These integrations include:

- Feature Store Integration: Seamlessly integrates with Databricks Feature Store, providing automated online lookups to prevent online/offline skew. You define features once during training, and we automatically retrieve and join the relevant features to complete the inference payload.

- MLflow Integration: Natively connects to MLflow Model Registry, enabling fast and easy deployment of models. Just provide us the model, and we will automatically prepare a production-ready container and deploy it to serverless compute.

- Quality & Diagnostics (coming soon): Automatically capture requests and responses in a Delta table to monitor and debug models or generate training datasets.

- Unified governance: Manage and govern all data and ML assets, including those consumed and produced by model serving, with Unity Catalog.
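The automated feature lookup described in the list above can be illustrated with a small conceptual sketch. This is not the Databricks Feature Store implementation: the in-memory dictionary, the `complete_payload` helper, and the feature names are all hypothetical, and the choice to let request values override looked-up values is an assumption made for the example. It shows only the idea of joining stored features onto an incoming request by key so the model receives a complete inference payload.

```python
# Hypothetical in-memory stand-in for an online feature store, keyed by user_id.
ONLINE_FEATURES = {
    "u1": {"avg_order_value": 54.2, "orders_last_30d": 3},
    "u2": {"avg_order_value": 12.0, "orders_last_30d": 1},
}


def complete_payload(request_rows, online_features, key="user_id"):
    """Join looked-up features onto each request row by its lookup key."""
    completed = []
    for row in request_rows:
        # Rows whose key has no stored features pass through unchanged.
        features = online_features.get(row[key], {})
        # Assumption for this sketch: request values override looked-up ones.
        completed.append({**features, **row})
    return completed


rows = complete_payload([{"user_id": "u1", "basket_size": 2}], ONLINE_FEATURES)
```

The caller sends only the lookup key and any request-time values. Because the same feature definitions are used for training and serving, the model sees identically computed inputs in both settings, which is what guards against online/offline skew.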

"By doing model serving on a unified data and AI platform, we have been able to simplify the ML lifecycle and reduce maintenance overhead. This is enabling us to redirect our efforts towards expanding the use of AI across more of our business." - Vincent Koc, Head of Data at hipages group

Empower teams with Simplified Deployment

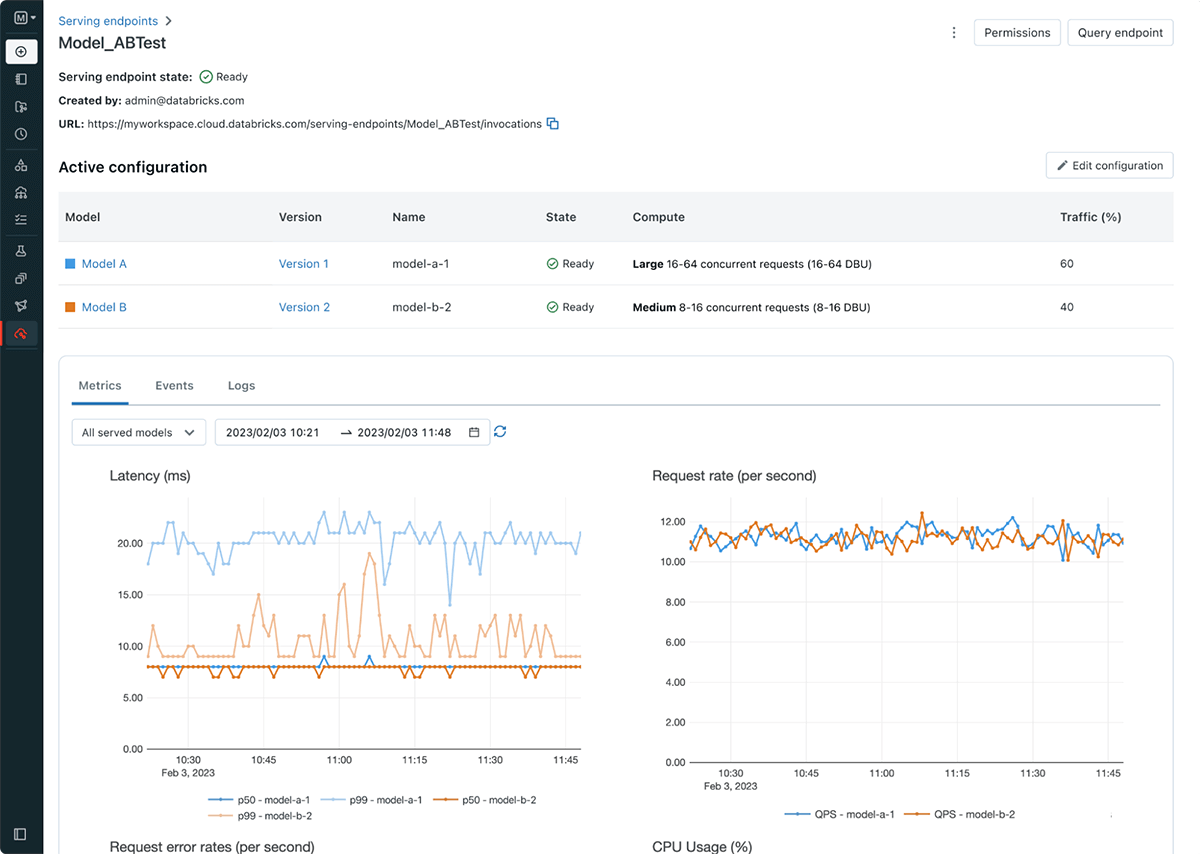

Databricks Model Serving simplifies the model deployment workflow, empowering data scientists to deploy models without complex infrastructure knowledge or experience. As part of the launch, we are also introducing serving endpoints, which decouple the model registry from the scoring URI, resulting in more efficient, stable, and flexible deployments. For example, you can now deploy multiple models behind a single endpoint and distribute traffic among them as desired. The new serving UI and APIs make it easy to create and manage endpoints. Endpoints also provide built-in metrics and logs that you can use for monitoring and alerting.
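As an illustration of serving several model versions behind one endpoint, the sketch below assembles an endpoint definition with a traffic split. The `build_endpoint_config` helper, the endpoint and model names, and the workload settings are hypothetical. The field names (`served_models`, `traffic_config`, `routes`, `traffic_percentage`) follow our understanding of the serving endpoints REST API and may differ in current versions, so treat this as a sketch to check against the documentation rather than a definitive payload.

```python
def build_endpoint_config(endpoint_name, model_name, version_weights):
    """Return a JSON-serializable endpoint definition with a traffic split.

    `version_weights` maps a registered model version (str) to the
    percentage of traffic it should receive.
    """
    if sum(version_weights.values()) != 100:
        raise ValueError("traffic percentages must sum to 100")

    served_models, routes = [], []
    for version, percent in version_weights.items():
        served_name = f"{model_name}-{version}"
        served_models.append({
            "name": served_name,
            "model_name": model_name,
            "model_version": version,
            "workload_size": "Small",        # assumed sizing for the example
            "scale_to_zero_enabled": True,   # assumed setting for the example
        })
        routes.append({
            "served_model_name": served_name,
            "traffic_percentage": percent,
        })

    return {
        "name": endpoint_name,
        "config": {
            "served_models": served_models,
            "traffic_config": {"routes": routes},
        },
    }


# Hypothetical rollout: 90% of traffic to version 1, 10% to version 2.
config = build_endpoint_config("recs", "recommender", {"1": 90, "2": 10})
```

Because the scoring URI belongs to the endpoint rather than to a specific model version, shifting the split (say, from 90/10 to 50/50) changes only this configuration. Clients keep calling the same URL.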

Getting Started with Databricks Model Serving

- Register for the upcoming conference to learn how Databricks Model Serving can help you build real-time systems, and gain insights from customers.

- Take it for a spin! Start deploying ML models as a REST API.

- Dive deeper into the Databricks Model Serving documentation.

- Check out the guide to migrate from Legacy MLflow Model Serving to Databricks Model Serving.
