Ahmed Bilal

Ahmed Bilal's posts

Product

December 11, 2025/5 min read

OpenAI GPT-5.2 and Responses API on Databricks: Build Trusted, Data-Aware Agentic Systems

Product

April 5, 2025/4 min read

Introducing Meta’s Llama 4 on the Databricks Data Intelligence Platform

Partners

March 26, 2025/3 min read

Announcing Anthropic Claude 3.7 Sonnet is natively available in Databricks

Product

December 12, 2024/4 min read

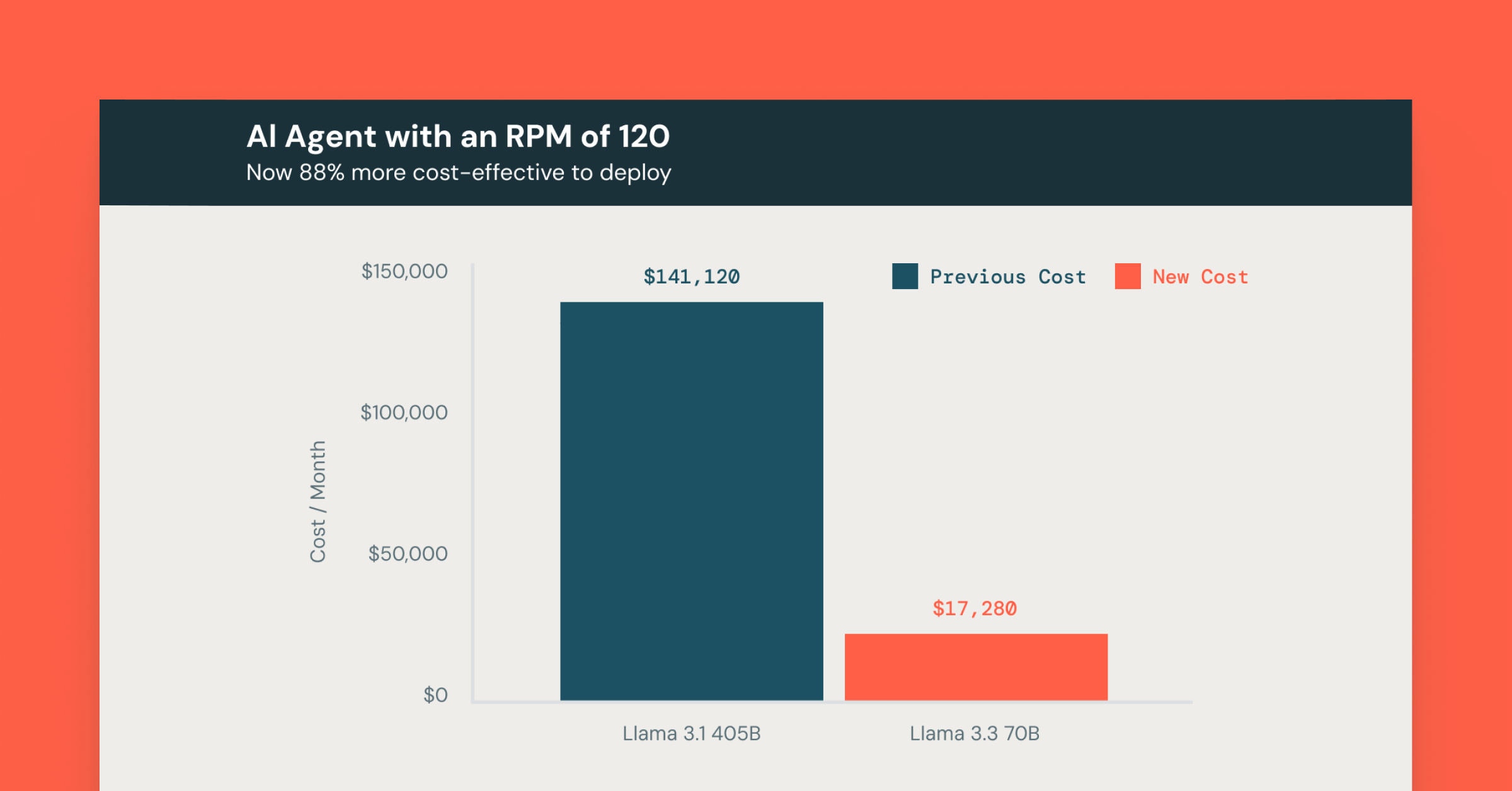

Making AI More Accessible: Up to 80% Cost Savings with Meta Llama 3.3 on Databricks

Generative AI

November 14, 2024/9 min read

Introducing Structured Outputs for Batch and Agent Workflows
